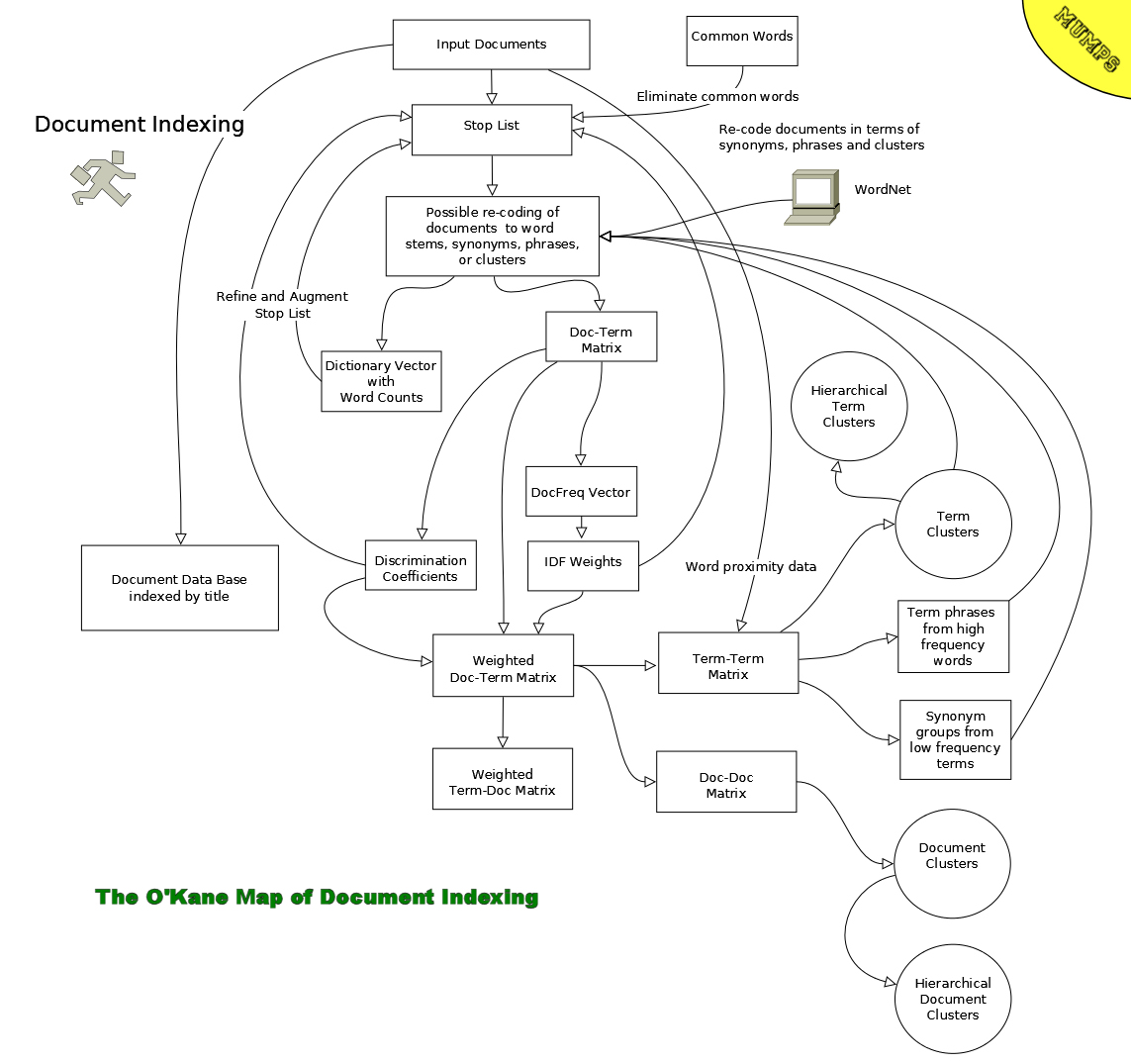

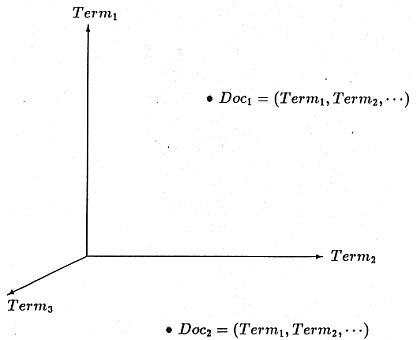

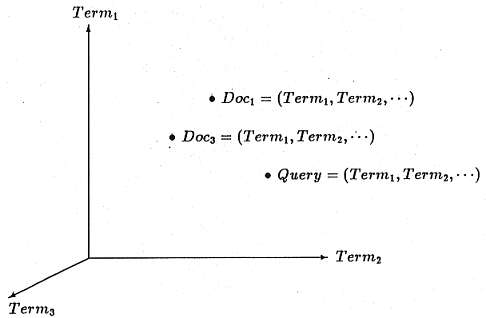

One popular approach to automatic document indexing,

the vector space model, views computer

generated document vectors as describing a hyperspace in which the number of dimensions

(axes) is equal to the number of indexing terms. This approach was originally proposed by

G. Salton:

Each document vector is a point in that space defined by the distance along the axis associated with each

document term proportional to the term's importance or significance in the document being represented.

Queries are also portrayed as vectors that define points in the document hyperspace. Documents whose points in the

hyperspace lie within an adjustable envelope of distance from the query vector point are retrieved.

The information storage and retrieval process involves converting user typed queries to query vectors and correlating these with

document vectors in order to select and rank documents for presentation to the user.

Most (IR) systems have been implemented in C, Pascal and C++, although these languages

provide little native support for the hyperspace model. Similarly, popular off-the-shelf legacy relational

data base systems are inadequate to efficiently represent or manipulate sparse document vectors in the

manner needed to effectively implement IR systems.

Document are viewed as points in a hyperspace whose

axes are the terms used in the document vectors.

The location of a document in the space is determined by

the degree to which the terms are present in a document.

Some terms occur several times while other occur not at all.

Terms also have weights associated with their content

indicating strength and this is factored into the equation

as well.

Query vectors are also treated as points in the hyperspace

and the documents that lie within a set distance of the query

are determined to satisfy the query.

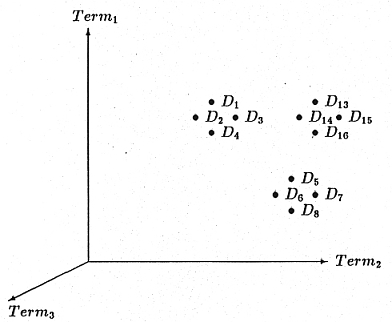

Clustering involves identifying groupings of documents

and constructing a cluster centroid vector to

speed information storage and retrieval. Hierarchies of clusters

can also be constructed.

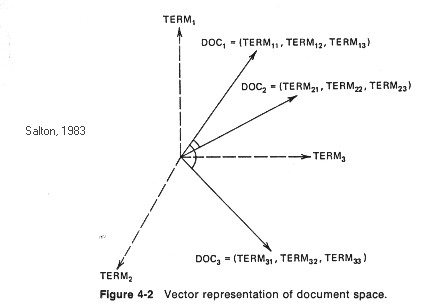

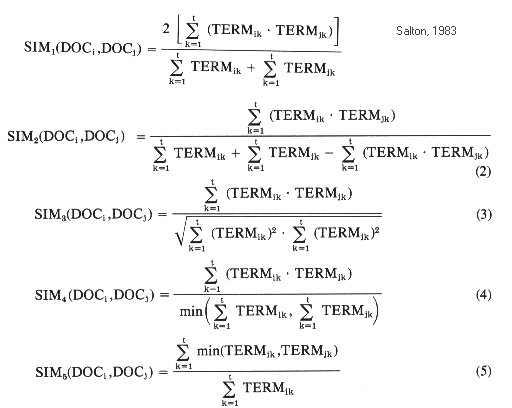

In the above, taken from Salton 1983, the Cosine is formula 3.

The formulae calculate the similarity between Doci and Docj

by examining the relationships between termi,k and termj,k

where termi,k is the weight of term k in document i and

termj,k is the weight of term k in document j.

Sim1 is known as the Dice coefficient and

Sim2 is known as the Jaccard coefficient

(see: Jaccard 1912, "The distribution of the flora of the alpine zone", New Phytologist 11:37-50).

Related Similarity Functions

See

Sam's String Metrics for a discussion of:

- Hamming distance

- Levenshtein distance

- Needleman-Wunch distance or Sellers Algorithm

- Smith-Waterman distance

- Gotoh Distance or Smith-Waterman-Gotoh distance

- Block distance or L1 distance or City block distance

- Monge Elkan distance

- Jaro distance metric

- Jaro Winkler

- SoundEx distance metric

- Matching Coefficient

- Dice.s Coefficient

- Jaccard Similarity or Jaccard Coefficient or Tanimoto coefficient

- Overlap Coefficient

- Euclidean distance or L2 distance

- Cosine similarity

- Variational distance

- Hellinger distance or Bhattacharyya distance

- Information Radius (Jensen-Shannon divergence)

- Harmonic Mean

- Skew divergence

- Confusion Probability

- Tau

- Fellegi and Sunters (SFS) metric

- TFIDF or TF/IDF

- FastA

- BlastP

- Maximal matches

- q-gram

- Ukkonen Algorithms

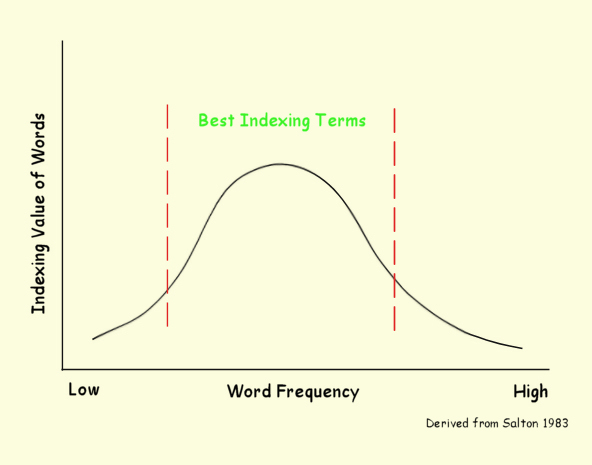

Assigning Word Weights

Words used for indexing vary in their ability to indicate content

and, thus, their importance as indexing terms.

Some words, such as "the", "and", "was" and so forth

are worthless as content indications and we eliminate them from consideration

immediately. Other words occur so infrequently that

they are also unlikely to be useful as indexing terms.

Other words, however, with middle frequency of occurrence

are candidates as indexing terms.

However, not all words a equally good index terms.

For example, the word "computer" in a collection of computer

science articles conveys very little information useful to

indexing the document since so many, if not all, the documents

contain the word. The goal here is to determine a metric

of the ability of a word to convey information.

In the following example, several weighting schemes are compared.

In the example, ^doc(i,w) is the number of times

term w occurs in document i; ^dict(w) is the number of

times term w occurs in the collection as a whole; ^df(w)

is the number of documents term w occurs in; NbrDocs

is the total number of documents in the collection; and the function $zlog()

is the natural logarithm. The operation "\" is integer division.

|

Normalize [normal.mps] Sun Dec 15 13:08:59 2002

1000 documents; 29942 word instances, 563 distinct words

^doc(i,w) Number times word w used in document i

^dict(w) Number times word w used in total collection

^df(w) Number of documents word w appears in

Wgt1 ^doc(i,w)/(^dict(w)/^df(w))

Wgt2 ^doc(i,w)*$zlog(NbrDocs/^df(w))+1

Wgt3 Wgt1*Wgt2+0.5\1

Word ^doc(i,w) ^dict(w) ^df(w) Wgt1 Wgt2 Wgt3 MCA

[1] Death of a cult. (Apple Computer needs to alter its strategy) (column)

apple 4 261 112 1.716 9.757 17 -1.1625

computer 4 706 358 2.028 5.109 10 -19.4405

mac 2 146 71 0.973 6.290 6 -0.0256

macintosh 4 210 107 2.038 9.940 20 -0.5855

strategy 2 79 67 1.696 6.406 11 -0.0592

[2] Next year in Xanadu. (Ted Nelson's hypertext implementations) Swaine, -

Michael.

document 3 114 68 1.789 9.065 16 0.0054

operate 3 269 184 2.052 6.078 12 -2.1852

[3] WordPerfect. (WordPerfect for the Macintosh 2.0) (evaluation) Taub, Er-

ic.

edit 2 111 77 1.387 6.128 8 -0.0961

frame 2 9 7 1.556 10.924 17 0.0131

import 2 29 19 1.310 8.927 12 0.0998

macintosh 3 210 107 1.529 7.705 12 -0.5855

macro 3 38 24 1.895 12.189 23 0.1075

outstand 1 10 9 0.900 5.711 5 0.0168

user 4 861 435 2.021 4.330 9 -26.8094

wordperfect 8 24 8 2.667 39.627 106 0.1747

[4] Radius Pivot for Built-In Video an Radius Color Pivot. (Hardware Revie-

w) (new Mac monitors)(includes related article on design of

built-in 3 35 29 2.486 11.621 29 0.0678

color 3 81 47 1.741 10.173 18 0.0809

mac 2 146 71 0.973 6.290 6 -0.0256

monitor 6 88 52 3.545 18.739 66 0.0946

resolution 2 50 32 1.280 7.884 10 0.0288

screen 2 92 62 1.348 6.561 9 0.0199

video 4 106 61 2.302 12.188 28 0.0187

[5] CrystalPrint Express. (Software Review) (high-speed desktop laser prin-

ter) (evaluation)

desk 2 127 76 1.197 6.154 7 -0.1062

engine 1 15 13 0.867 5.343 5 0.0282

font 4 111 37 1.333 14.187 19 0.6350

laser 3 61 27 1.328 11.836 16 0.2562

print 3 140 66 1.414 9.154 13 0.0509

[6] 4D Write, 4D Calc, 4D XREF. (Software Review) (add-ins for Acius' Four-

th Dimension database software) (evaluation)

add-in 2 97 38 0.784 7.540 6 0.5551

analysis 2 179 139 1.553 4.947 8 -0.8492

database 5 138 67 2.428 14.515 35 0.1832

midrange 1 7 6 0.857 6.116 5 0.0218

spreadsheet 2 75 44 1.173 7.247 9 0.1707

vary 1 7 6 0.857 6.116 5 0.0107

[7] ConvertIt! (Software Review) (utility for converting HyperCard stacks -

to IBM PC format) (evaluation)

converter 2 24 13 1.083 9.686 10 0.0698

doe 5 97 84 4.330 13.385 58 -0.1139

graphical 2 307 171 1.114 4.532 5 -2.4079

hypercard 4 25 13 2.080 18.371 38 0.1517

mac 2 146 71 0.973 6.290 6 -0.0256

map 2 17 10 1.176 10.210 12 0.1180

program 4 670 334 1.994 5.386 11 -15.4832

script 3 54 32 1.778 11.326 20 0.1239

software 3 913 449 1.475 3.402 5 -30.7596

stack 5 15 8 2.667 25.142 67 0.0700

[8] Reports 2.0. (Software Review) (Nine To Five Software Reports 2.0 repo-

rt generator for HyperCard 2.0) (evaluation)

hypercard 5 25 13 2.600 22.714 59 0.1517

print 3 140 66 1.414 9.154 13 0.0509

software 3 913 449 1.475 3.402 5 -30.7596

stack 2 15 8 1.067 10.657 11 0.0700

[9] Project-scheduling tools. (FastTrack Schedule, MacSchedule) (Software -

Review) (evaluation)

manage 2 318 174 1.094 4.497 5 -2.4884

[10] Digital Darkroom. (Software Review) (new version of image-processing s-

oftware) (evaluation)

apply 1 17 15 0.882 5.200 5 0.0317

digital 4 90 52 2.311 12.826 30 -0.0042

image 4 107 58 2.168 12.389 27 0.1422

palette 2 18 12 1.333 9.846 13 0.0660

portion 2 17 15 1.765 9.399 17 0.0295

software 4 913 449 1.967 4.203 8 -30.7596

text 2 55 46 1.673 7.158 12 0.0304

user 5 861 435 2.526 5.162 13 -26.8094

[11] CalenDAr. (Software Review) (Psyborn Systems Inc. CalenDAr desk access-

ory) (evaluation)

accessory 2 14 10 1.429 10.210 15 0.0540

desk 2 127 76 1.197 6.154 7 -0.1062

display 2 106 78 1.472 6.102 9 -0.1278

program 3 670 334 1.496 4.290 6 -15.4832

sound 2 14 8 1.143 10.657 12 0.1172

user 3 861 435 1.516 3.497 5 -26.8094

[12] DisplayServer II-DPD. (Hardware Review) (DisplayServer II video card f-

or using VGA monitor with Macintosh) (evaluation)

apple 4 261 112 1.716 9.757 17 -1.1625

card 2 99 56 1.131 6.765 8 0.0790

display 2 106 78 1.472 6.102 9 -0.1278

macintosh 3 210 107 1.529 7.705 12 -0.5855

monitor 6 88 52 3.545 18.739 66 0.0946

vga 2 91 62 1.363 6.561 9 0.0104

video 2 106 61 1.151 6.594 8 0.0187

[13] SnapJot. (Software Review) (evaluation) Gruberman, Ken.

capture 2 14 11 1.571 10.020 16 0.0271

image 3 107 58 1.626 9.542 16 0.1422

software 3 913 449 1.475 3.402 5 -30.7596

window 4 417 159 1.525 8.355 13 -3.4780

[14] Studio Vision. (Software Review) (Lehrman, Paul D.) (evaluation) Lehrm-

an, Paul D.

audio 1 8 6 0.750 6.116 5 0.0161

disk 3 234 121 1.551 7.336 11 -1.1468

edit 3 111 77 2.081 8.692 18 -0.0961

operate 2 269 184 1.368 4.386 6 -2.1852

portion 1 17 15 0.882 5.200 5 0.0295

requirement 2 87 76 1.747 6.154 11 -0.1203

sound 6 14 8 3.429 29.970 103 0.1172

user 3 861 435 1.516 3.497 5 -26.8094

[15] 70 things you need to know about System 7.0. (includes related article-

s on past reports about System 7.0, Adobe Type 1 fonts,

apple 3 261 112 1.287 7.568 10 -1.1625

communication 2 199 110 1.106 5.415 6 -0.6984

desk 2 127 76 1.197 6.154 7 -0.1062

disk 2 234 121 1.034 5.224 5 -1.1468

duplicate 1 10 9 0.900 5.711 5 0.0143

file 3 271 151 1.672 6.671 11 -1.3982

font 2 111 37 0.667 7.594 5 0.6350

memory 4 142 98 2.761 10.291 28 -0.2999

tip 1 8 6 0.750 6.116 5 0.0335

user 4 861 435 2.021 4.330 9 -26.8094

virtual 2 17 15 1.765 9.399 17 0.0424

[16] Data on the run. (Hardware Review) (palmtop organizers)(includes relat-

ed article describing the WristMac from Microseeds

character 2 25 17 1.360 9.149 12 0.0871

computer 4 706 358 2.028 5.109 10 -19.4405

data 3 415 226 1.634 5.462 9 -5.6011

database 2 138 67 0.971 6.406 6 0.1832

display 4 106 78 2.943 11.204 33 -0.1278

mac 3 146 71 1.459 8.935 13 -0.0256

ms_dos 2 98 65 1.327 6.467 9 0.0481

organize 1 19 17 0.895 5.075 5 0.0589

palmtop 1 6 5 0.833 6.298 5 0.0216

ram 2 145 93 1.283 5.750 7 -0.3992

review 2 265 238 1.796 3.871 7 -2.4234

rom 1 19 17 0.895 5.075 5 0.0374

software 4 913 449 1.967 4.203 8 -30.7596

transfer 2 66 44 1.333 7.247 10 0.0918

[17] High-speed, low-cost IIci cache cards. (includes related article on ca-

ching for other Mac models) (buyers guide)

cach 1 10 9 0.900 5.711 5 0.0127

cache 8 49 30 4.898 29.052 142 0.1613

card 6 99 56 3.394 18.294 62 0.0790

chip 2 117 67 1.145 6.406 7 -0.1153

high-speed 2 18 14 1.556 9.537 15 0.0352

memory 3 142 98 2.070 7.968 16 -0.2999

ram 2 145 93 1.283 5.750 7 -0.3992

[18] Mac, DOS and VAX file servers. (multiplatform file servers)(includes r-

elated articles on optimizing server

add-on 1 17 15 0.882 5.200 5 0.0374

apple 2 261 112 0.858 5.379 5 -1.1625

file 10 271 151 5.572 19.905 111 -1.3982

lan 2 98 51 1.041 6.952 7 0.0366

mac 4 146 71 1.945 11.580 23 -0.0256

macintosh 6 210 107 3.057 14.410 44 -0.5855

ms_dos 2 98 65 1.327 6.467 9 0.0481

netware 2 60 28 0.933 8.151 8 0.2314

network 6 571 222 2.333 10.030 23 -9.4287

ratio 1 18 16 0.889 5.135 5 0.0154

server 12 162 75 5.556 32.083 178 -0.1592

software 3 913 449 1.475 3.402 5 -30.7596

unix-based 1 15 13 0.867 5.343 5 0.0376

user 3 861 435 1.516 3.497 5 -26.8094

vax 2 28 14 1.000 9.537 10 0.1692

[19] Is it time for CD-ROM? (guide to 16 CD-ROM drives)(includes related ar-

ticles on using IBM-compatible CD-ROMs with the Mac,

audio 1 8 6 0.750 6.116 5 0.0161

cd-rom 9 31 13 3.774 40.085 151 0.1760

drive 9 249 129 4.663 19.431 91 -1.4872

macintosh 2 210 107 1.019 5.470 6 -0.5855

technology 2 335 220 1.313 4.028 5 -3.9304

[20] Silver platters that matter. (CD-ROM titles) (buyers guide)

availe 3 135 121 2.689 7.336 20 -0.4302

cd-rom 6 31 13 2.516 27.057 68 0.1760

hypercard 2 25 13 1.040 9.686 10 0.1517

library 2 44 30 1.364 8.013 11 0.1473

macintosh 2 210 107 1.019 5.470 6 -0.5855

|

In the example above, are document vectors for 20 documents (out of 1000) from

computer science trade publications of the mid-80's are shown. Several

weighting schemes are tried (see key at top). The MCA weight is the

Modified Centroid Algorithm calculation method to calculate the Term Discrimination weight (see below).

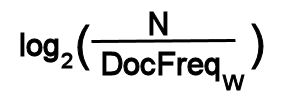

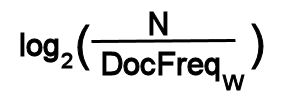

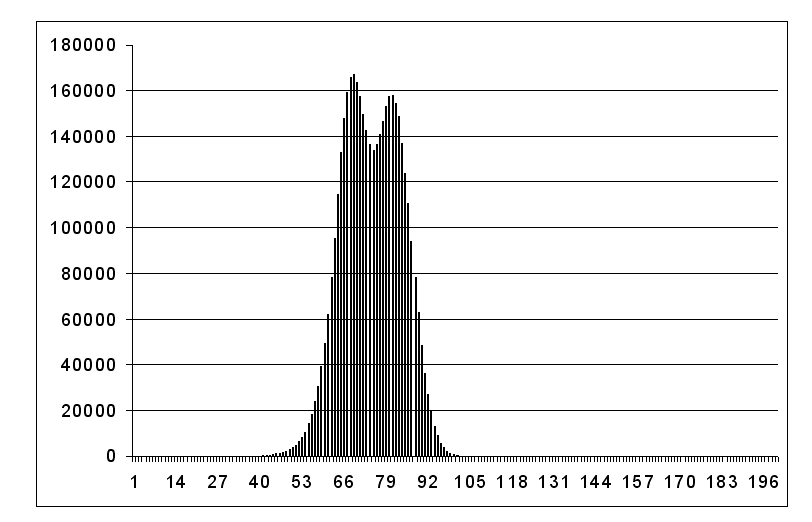

Inverse Document Frequency and Basic Vector Space

One of the simplest word weight schemes to implement is the

Inverse Document Frequency weight.

The IDF weight is the measure of how widely distributed

a term is in a collection. Low IDF weights mean that the term is

widely used while high weights indicate that the usage is more

concentrated. The IDF weight measures the weight of

a term in the collection as a whole, rather than the weight of

a term in a document. In individual document vectors,

the normalized frequency of occurrence of each term is multiplied by the

IDF to give a weight for the term in the particular document.

Thus, a term with a high frequency but a low IDF weight could

still be a highly weighted term in a particular document, and,

on the other hand, a term with a low frequency but a high

IDF weight could also be an important term in a given document.

The IDF weight for a term W in a collection of N documents is:

where DocFreqw is the number of documents in which term W

occurs.

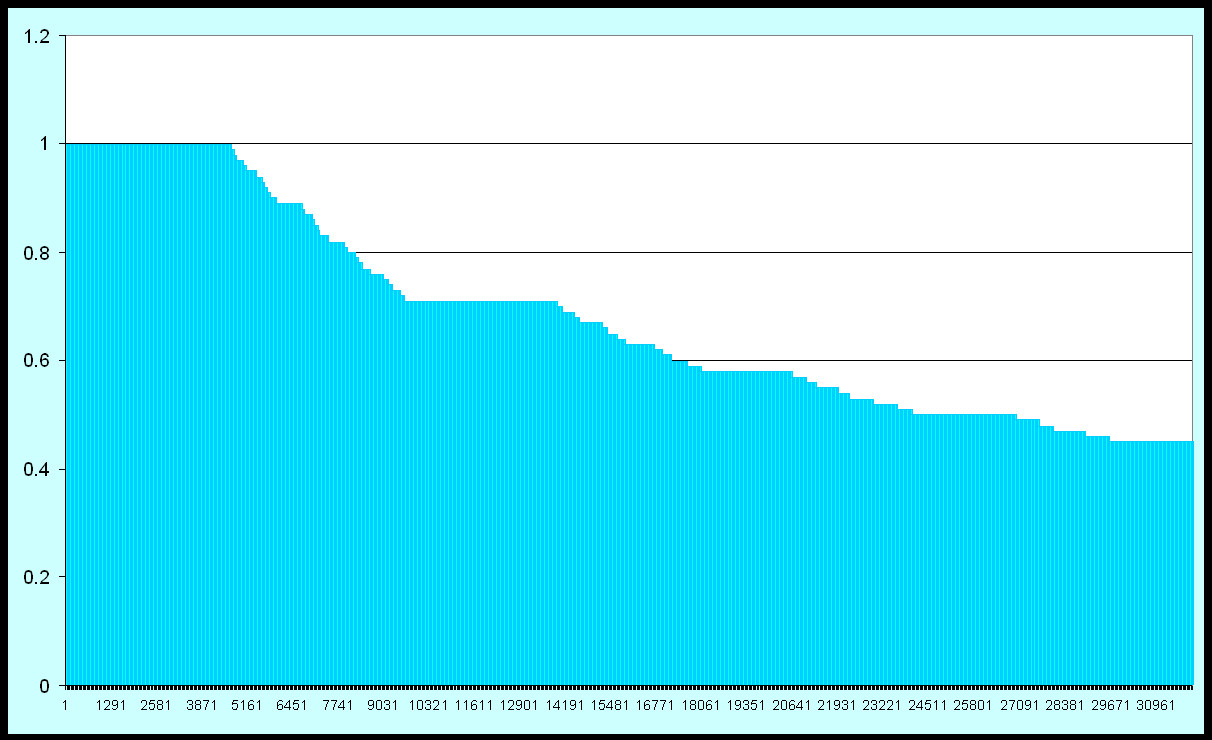

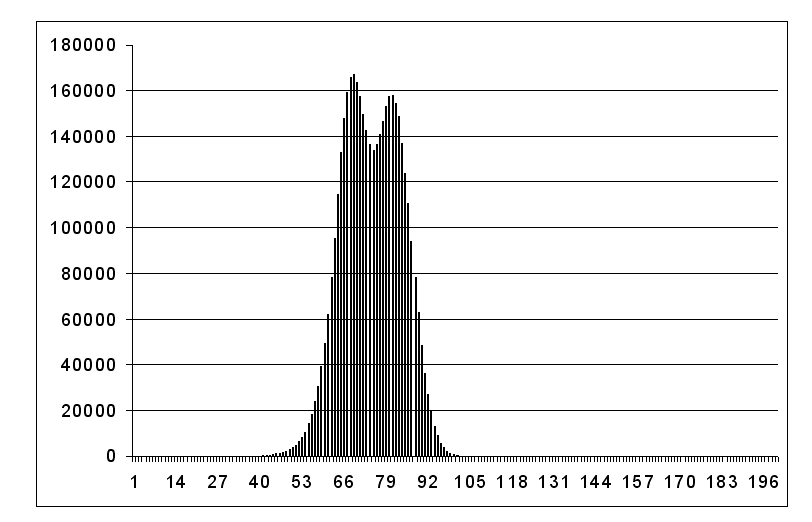

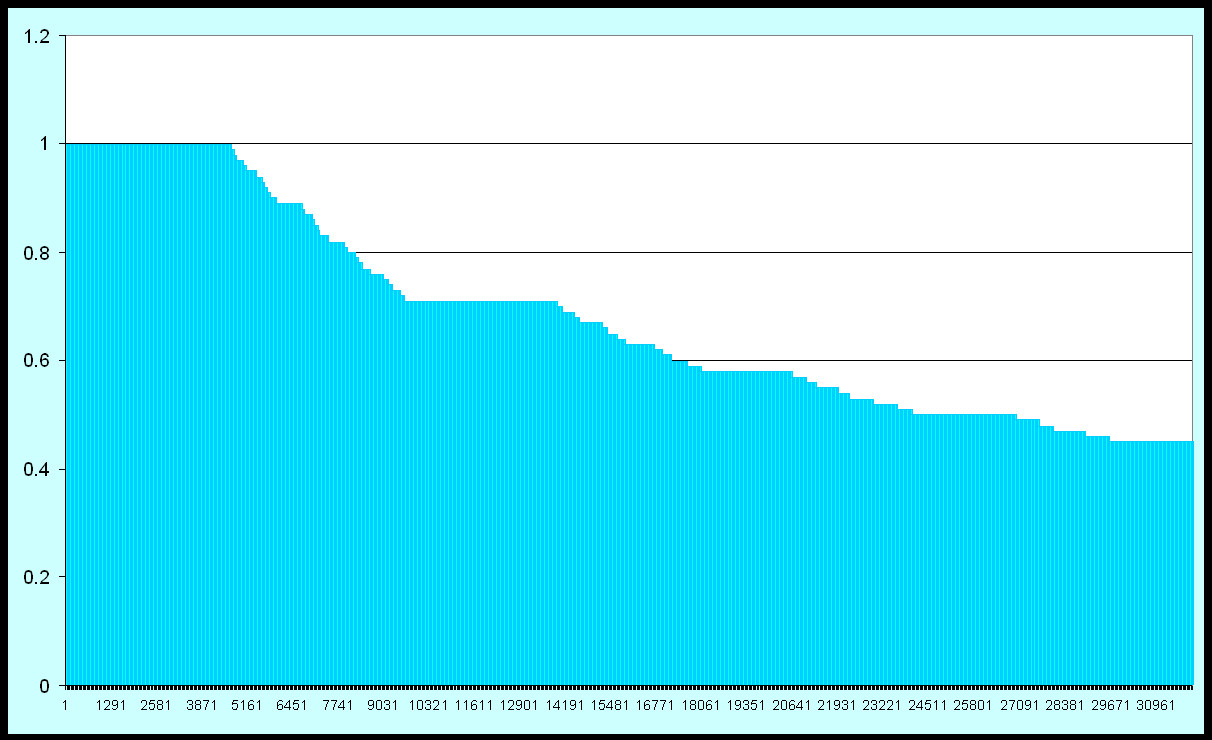

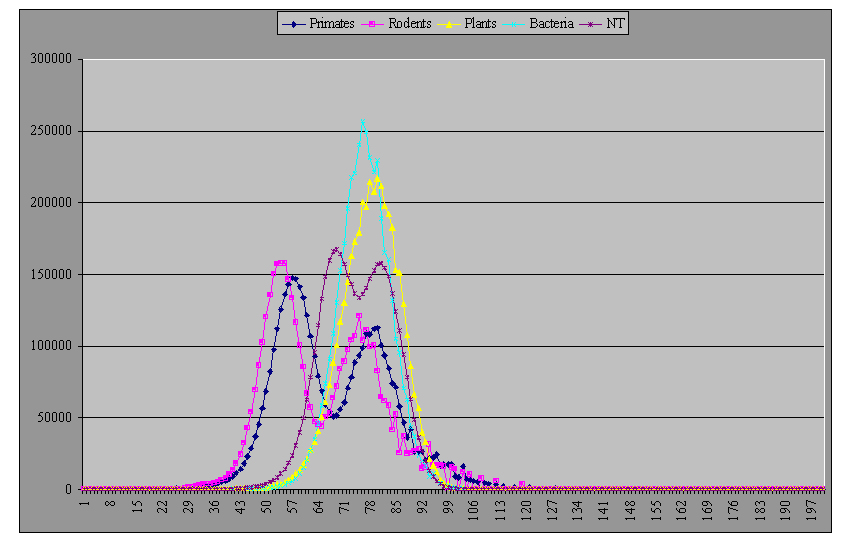

- OSU Medline Data Base IDF Weights

The IDF weights for the OSU Medline collection

were calculated after the words were processed by the stemming

function $zstem()

and the values are stored in the global array ^df(word)

for subsequent use and also printed to standard output.

The IDF weights for a recent run on the OSU

data base is here:

http://www.cs.uni.edu/~okane/source/ISR/medline.idf.sorted.gz.

Note: due to tuning parameters that set thresholds for the

construction of the stop list and other factors, different runs

on the data base will produce some variation in the

values displayed.

The weights range from lows such as:

0.189135 human

0.288966 and

0.300320 the

0.542811 with

0.737224 for

0.793466 was

0.867298 were

to highs such as:

12.590849 actinomycetoma

12.590849 actinomycetomata

12.590849 actinomycoma

12.590849 actinomyosine

12.590849 actinoplane

12.590849 actinopterygii

12.590849 actinoxanthin

12.590849 actisomide

12.590849 activ

12.590849 activationin

Note: for a given IDF value, the words are presented alphabetically.

The OSU Medline collection has many code words that appear only once.

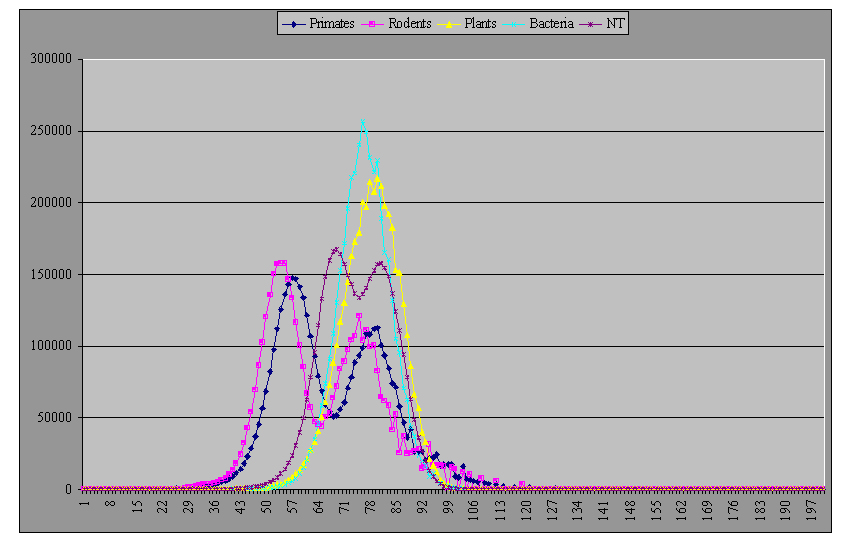

- Wikipedia Data Base IDF Weights

Similarly, the Wikipedia

IDF weights were calculated

and the

results are in http://www.cs.uni.edu/~okane/source/ISR/wiki.idf.sorted.gz.

The weights range from lows such as:

1.61 further

1.62 either

1.63 especial

1.65 certain

1.65 having

1.67 almost

1.67 along

1.68 involve

1.68 receive

to highs such as:

9.87 altopia

9.87 alyque

9.87 amangkur

9.87 amarant

9.87 amaranthus

9.87 amarantine

9.87 amazonite

9.87 ambacht

9.87 ambiorix

Calculating IDF Weights

Calculating an IDF involves

first building a document-term

matrix (^doc(i,w)) where i is the document

number and w is a term. Each cell in the

document-term matrix will contain the count of

the number of times that the term occurs in the document).

Next, from the document-term matrix,

construct a document frequency vector (^df(w)) where

each element gives the number documents the term

w occurs in.

When the document frequency vector has been built,

the individual IDF values for each word can

be calculated.

The following assumes you are using the file

http://www.cs.uni.edu/~okane/source/ISR/medline.translated.txt.gz

which is a pre-processed version of the OSU Medline text.

The format of the file is:

- the code xxxxx115xxxxx followed by one blank

- the offset in the original text file of STAT- MEDLINE followed by a blank

- the document number followed by a blank

- one or more words, converted to lower case and stemmed by $zstem()

Example:

xxxxx115xxxxx 0 1 the bind acetaldehyde the active site ribonuclease alteration catalytic active ...

xxxxx115xxxxx 2401 2 reduction breath ethanol reading norm male volunteer follow mouth rins with ...

xxxxx115xxxxx 3479 3 does the blockade opioid receptor influence the development ethanol dependence ...

xxxxx115xxxxx 4510 4 drinkwatcher description subject and evaluation laboratory marker heavy drink ...

xxxxx115xxxxx 5745 5 platelet affinity for serotonin increased alcoholic and former alcoholic biological ...

xxxxx115xxxxx 7128 6 clonidine alcohol withdraw pilot study differential symptom respons follow ...

xxxxx115xxxxx 7915 7 bias survey drink habit this paper present data from genere populate survey ...

xxxxx115xxxxx 8653 8 factor associate with young adult alcohol abuse the present study examne ...

xxxxx115xxxxx 9862 9 alcohol and the elder relationship illness and smok group semi independent ...

xxxxx115xxxxx 11174 10 concern the prob our confidence statistic editorial

|

Calculating the IDF:

Step 1

- delete any previous instances of the ^doc() and

^df() global arrays.

Step 2

Loop:

- Read the next word into w from the file using the $zzScan

function.

- If $test is false, you have reached the end of

file and proceed to Step 3.

- If the word w is the beginning of

document token (xxxxx115xxxxx):

- read (use $zzScan) the offset and then the document number.

- Retain the document number as variable D.

- Store the offset at ^doc(D).

- Repeat the loop

- Check the word w against your stop list. If it

is in the stop list, Repeat the loop

- If ^doc(D,w) exists, increment it; if not,

create it with a value of 1.

- Repeat the loop

Step 3

- for each document i in ^doc(i)

- for each word w in ^doc(i,w)

- check if ^df(w) exists. If it does, increment

^df(w); if not create ^df(w) and store a value of one.

Step 4

- for each word in ^df(w)

- calculate its IDF using the value in ^df(w) (the number of documents the

word occurs in) and D, the total number of documents.

- store the results in a global array ^idf(w)

- write (re-direct stdout is easiest) to a file

the IDF value (2 decimal places are usually enough - see $justify),

a blank, followed by the word.

Step 5

- sort the file numerically according to IDF value (first field)

The results of the IDF procedure may be used to

enhance the stop list with words that have very low

values.

A basic program to create a document-term matrix and calculate IDF weights is:

|

#!/usr/bin/mumps

#+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

#+

#+ Mumps ISR Software Library

#+ Copyright (C) 2006, 2008 by Kevin C. O'Kane

#+

#+ Kevin C. O'Kane

#+ okane@cs.uni.edu

#+

#+

#+ This program is free software; you can redistribute it and/or modify

#+ it under the terms of the GNU General Public License as published by

#+ the Free Software Foundation; either version 2 of the License, or

#+ (at your option) any later version.

#+

#+ This program is distributed in the hope that it will be useful,

#+ but WITHOUT ANY WARRANTY; without even the implied warranty of

#+ MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the

#+ GNU General Public License for more details.

#+

#+ You should have received a copy of the GNU General Public License

#+ along with this program; if not, write to the Free Software

#+ Foundation, Inc., 59 Temple Place, Suite 330, Boston, MA 02111-1307 USA

#+

#++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

# idf.mps February 22, 2008

kill ^df

kill ^dict

set %=$zStopInit("good") // loads stop list into a C++ container

open 1:"translated.txt,old"

if '$test write "translated not found",! halt

use 1

for do

. use 1

. set word=$zzScan

. if '$test break

. if word="xxxxx115xxxxx" set off=$zzScan,doc=$zzScan quit // new abstract

. if '$zStopLookup(word) quit // is "word" in the good list

. if $data(^doc(doc,word)) set ^doc(doc,word)=^doc(doc,word)+1

. else set ^doc(doc,word)=1

. if $data(^dict(word)) set ^dict(word)=^dict(word)+1

. else set ^dict(word)=1

use 5

close 1

set ^DocCount(1)=doc

for d="":$order(^doc(d)):"" do

. for w="":$order(^doc(d,w)):"" do

.. if $data(^df(w)) set ^df(w)=^df(w)+1

.. else set ^df(w)=1

for w="":$order(^df(w)):"" do

. set ^dfi(w)=$justify($zlog(doc/^df(w)),1,2)

. write $justify(^dfi(w),1,2)," ",w,!

write !

halt

|

The program also records the offset in the original file of the beginning of

the abstract and stores the IDF values in the vector ^dfi.

It also calculates a document frequency vector (number of

documents a term occurs in) ^df and a dictionary vector

(total frequency of occurrence for each word) ^dict.

Example results:

http://www.cs.uni.edu/~okane/source/ISR/medline.idf.sorted.gz

http://www.cs.uni.edu/~okane/source/ISR/medline.weighted-doc-vectors.gz

http://www.cs.uni.edu/~okane/source/ISR/medline.weighted-term-vectors.gz

http://www.cs.uni.edu/~okane/source/ISR/wiki.idf.sorted.gz

http://www.cs.uni.edu/~okane/source/ISR/wiki.weighted-doc-vectors.gz

http://www.cs.uni.edu/~okane/source/ISR/wiki.weighted-term-vectors.gz

Signal-noise ratio (see Salton83 links, pages 63-66)

Discrimination Coefficients (pages 66-71) and

Simple Automatic Indexing (pages Salton83, 71-75);

Willett 1985; Crouch 1988

The Term Discrimination factor measures the degree to which

a term differentiates one document from another. It is

calculated based on the effect a term has on overall

hyperspace density with and without a given term.

If the space density is greater when a term is removed

from consideration, that means the term was making documents

look less like one another (a good discriminator) while

terms whose remove decreases the density are poor

discriminators. The discrimination values for

a set of terms are similar to the values for the IDF weights but

not exactly.

The basic procedure calls for first calculating the average of pair-wise similarities

between all documents in the space.

Then for each word, the average of the pair-wise similarities of

all the documents is calculated without that word. The difference in the averages

is the term discrimination value for the word. When the average similarity

increases when a word is removed, the word was a good discriminator - it made

documents look less like one another. On the other hand,

if the average similarity decreased, the term was not a good

discriminator since it made the documents look more like one another.

In practice, this is an expensive weight to calculate unless

speed-up techniques are used.

The modified centroid algorithm (see Crouch 1987), is an attempt

to improve the speed of calculation. The exact

calculation, where all pairwise similarity values are calculated

each time, is of complexity of the order of

(N)(N-1)(w)(W) where N is the number of documents, W is the

number of words in the collection and w is

the average number of terms per document vector.

Crouch (1988) discusses several methods to speed this calculation.

The first of these, the approximate approach, consists of

calculating the similarities of the documents with a centroid

vector representing the collection as a whole rather

that pair-wise. This results in considerable simplification as the

number of similarities to be calculated drops from (N)(N-1)

to N.

Another modification, called the Modified Centroid Algorithm

is based on:

- Subtracting the original contributions to the sum of the

similarities of those documents containing some term W and replacing these

values with the similarities calculated between the

the centroid and the document vectors with W removed;

- Storing the original contributions to the total

similarity by each document in a vector for later use (rather than

recalculating this value); and

- Using an inverted list to identify those documents which

contain the indexing terms.

In the centroid approximation of discrimination coefficients,

a centroid vector is calculated. That is, a vector is created

whose individual components are the average usage of each

word in the vocabulary. A centroid vector is the average of

all the document vectors and, by analogy, is at the center of

the hyperspace.

When using a centroid vector,

rather than calculating all the pair-wise similarities

of each document with each other document,

the average similarity is calculated by comparing

each document with the centroid vector.

This improves the performance to a complexity on the order of (N)(w)(W).

As modified centroid algorithm (MCA) calculates the average

similarity, it stores the

contribution of each document to the total document density

(order n space required). When calculating the

effect of a term on the document space density, the MCA subtracts the

original contribution of those documents that contain the term

under consideration and re-adds the document's contribution

re-calculated without the contribution of the term under consideration.

Complexity is on the order of (DF)(w)(W) where

DF is the average number of documents in which a term occurs.

Finally, an inverted term-document matrix is used to

quickly identify those documents that contain terms of interest

rather than scanning through the entire document-term matrix looking

for documents containing a given term.

While the MCA method yields values that are only an approximation

of the exact method, the values are very similar in most cases and

the savings in time to calculate the coefficients is very significant.

Crouch (Crouch 1987) reports that the MCA method was on the

order of 527 times faster than the exact method on relatively small

data sets. Larger data sets yield even greater savings as the

time required for the exact method grows with the square of the

number of documents while the MCA method grows linearly with the

number of documents. The following is the basic MCA algorithm:

|

#!/usr/bin/mumps

#+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

#+

#+ Mumps ISR Software Library

#+ Copyright (C) 2007, 2008 by Kevin C. O'Kane

#+

#+ Kevin C. O'Kane

#+ okane@cs.uni.edu

#+

#+

#+ This program is free software; you can redistribute it and/or modify

#+ it under the terms of the GNU General Public License as published by

#+ the Free Software Foundation; either version 2 of the License, or

#+ (at your option) any later version.

#+

#+ This program is distributed in the hope that it will be useful,

#+ but WITHOUT ANY WARRANTY; without even the implied warranty of

#+ MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the

#+ GNU General Public License for more details.

#+

#+ You should have received a copy of the GNU General Public License

#+ along with this program; if not, write to the Free Software

#+ Foundation, Inc., 59 Temple Place, Suite 330, Boston, MA 02111-1307 USA

#+

#++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

# discrim4.mps March 5, 2008

open 1:"discrim,new"

use 1

set D=^DocCount(1) // number of documents

kill ^mca

set t1=$zd1

#++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

# calculate centroid vector ^c() for entire collection and

# the sum of the squares (needed in cos calc but should only be done once)

#++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

for w="":$order(^dict(w)):"" do

. set ^c(w)=^dict(w)/D // centroid is composed of avg word usage

#++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

# Calculate total similarity of docs for all words (T) by

# calculating the sum of the similarities of each document with the centroid.

# Remember and store contribution of each document in ^dc(dn).

#++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

set T=0

for i="":$order(^doc(i)):"" do

. set cos=$zzCosine(^doc(i),^c)

. set ^dc(i)=cos // save contributions to total of each

. set T=cos+T // sum the cosines

#++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

# calculate similarity of doc space with words removed

#++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

for W="":$order(^dict(W)):"" do // for each word W

#++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

# For each document containing W, calculate sum of the contribution

# of the cosines of these documents to the total (T). ^dc(i) is

# the original contribution of doc i. Sum of contributions is stored in T1.

#++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

. set T1=0,T2=0

. for d="":$order(^index(W,d)):"" do //for each doc d containing W

.. set T1=^dc(d)+T1 // sum of orig contribution

.. kill ^tmp

.. for w1="":$order(^doc(d,w1)):"" do // make a copy of ^doc

... if w1=W quit // don't copy W

... set ^tmp(w1)=^doc(d,w1)

.. set T2=T2+$zzCosine(^tmp,^c) // sum of cosines without W

#++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

# subtract original contribution with W (T1) and add contribution

# without W (T2) and calculate r - the change, and store in ^mca(W)

#++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

# if old (T1) big and new (T2) small, density declines

. set r=T2-T1*10000\1

. write r," ",^dfi(W)," ",W,!

. set ^mca(W)=r

use 5

write $zd1-t1,!

close 1

halt

|

The following is a further refinement of the above. It

stores the sum of the squares of the components of the

centroid vector which are needed in the denominator of each

cosine calculation this eliminating this step

This version also eliminates the step where a copy

is made of the individual document vectors.

Overall, the changes noted above and implemented in the

program below can result in substantial time improvement.

On a test run on 10,000 abstracts from the Medline database, the

procedure above took 2,053 seconds while the one below took 378 seconds.

|

#!/usr/bin/mumps

#+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

#+

#+ Mumps ISR Software Library

#+ Copyright (C) 2007, 2008 by Kevin C. O'Kane

#+

#+ Kevin C. O'Kane

#+ okane@cs.uni.edu

#+

#+

#+ This program is free software; you can redistribute it and/or modify

#+ it under the terms of the GNU General Public License as published by

#+ the Free Software Foundation; either version 2 of the License, or

#+ (at your option) any later version.

#+

#+ This program is distributed in the hope that it will be useful,

#+ but WITHOUT ANY WARRANTY; without even the implied warranty of

#+ MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the

#+ GNU General Public License for more details.

#+

#+ You should have received a copy of the GNU General Public License

#+ along with this program; if not, write to the Free Software

#+ Foundation, Inc., 59 Temple Place, Suite 330, Boston, MA 02111-1307 USA

#+

#++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

# discrim3.mps March 5, 2008

open 1:"discrim,new"

use 1

set D=^DocCount(1) // number of documents

set sq=0

kill ^mca

set t1=$zd1

#++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

# calculate centroid vector ^c() for entire collection and

# the sum of the squares (needed in cos calc but should only be done once)

#++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

for w="":$order(^dict(w)):"" do

. set ^c(w)=^dict(w)/D // centroid is composed of avg word usage

. set sq=^c(w)**2+sq // The sum of the squares is needed below.

#++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

# Calculate total similarity of doc for all words (T) space by

# calculating the sum of the similarities of each document with the centroid.

# Remember and store contribution of each document in ^dc(dn).

#++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

set T=0

for i="":$order(^doc(i)):"" do

. set x=0

. set y=0

. for w="":$order(^doc(i,w)):"" do

.. set d=^doc(i,w)

.. set x=d*^c(w)+x // numerator of cos(c,doc) calc

.. set y=d*d+y // part of denominator

#++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

# Calculate and store the cos(c,doc(i)).

# Remember in ^dc(i) the contribution that this document made to the total.

#++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

. if y=0 quit

. set ^dc(i)=x/$zroot(sq*y) // cos(c,doc(i))

. set T=^dc(i)+T // sum the cosines

#++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

# calculate similarity of doc space with words removed

#++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

for W="":$order(^dict(W)):"" do

#++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

# For each document containing W, calculate sum of the contribution

# of the cosines of these documents to the total (T). ^dc(i) is

# the original contribution of doc i. Sum of contributions is stored in T1.

#++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

. set T1=0,T2=0

. for i="":$order(^index(W,i)):"" do // row of doc nbrs for word

.. set T1=^dc(i)+T1 // use prevsly calc'd cos

#++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

# For each word in document i, recalculate cos(c,doc) but without word W

#++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

.. set x=0

.. set y=0

.. for w="":$order(^doc(i,w)):"" do

... if w'=W do // if W not w

.... set d=^doc(i,w)

.... set x=d*^c(w)+x // d*^c(w)+x

.... set y=d**2+y

.. if y=0 quit

.. set T2=x/$zr(sq*y)+T2 // T2 sums cosines without W

#++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

# subtract original contribution with W (T1) and add contribution

# without W (T2) and calculate r - the change, and store in ^mca(W)

#++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

# if old (T1) big and new (T2) small, density declines

. set r=T2-T1*10000\1

. write r," ",^dfi(W)," ",W,!

. set ^mca(W)=r

use 5

write "Time used: ",$zd1-t1,!

close 1

halt

|

Example results:

wiki.discrim.gz .

medline.discrim.sorted.gz .

Note: the discrimination coefficients output is in three columns:

the first is the coefficient times 10,000, the second is the IDF for the word

and the third is the word.

Basic Retrieval

Scanning the doc-term matrix

A simple program to scan the document-term matrix looking for documents

that have terms from a query vector. (Results based on 20,000 documents).

|

#!/usr/bin/mumps

# tq.mps Feb 27, 2008

kill ^query

write "Enter search terms: "

read a

if a="" halt

for i=1:1 do

. set b=$piece(a," ",i)

. if b="" break

. set b=$zn(b) // lower case, no punct

. set b=$zstem(b) // stem it

. set ^query(b)=""

if $order(^query(""))="" halt

for j="":$order(^query(j)):"" write j,!

set t1=$zd1

for i="":$order(^doc(i)):"" do

. set f=1

. for j="":$order(^query(j)):"" do

.. if '$d(^doc(i,j)) set f=0 break

. if f write i,?8,$extract(^t(i),1,70),!

write !,"Elapsed time: ",$zd1-t1,!

Enter search terms: epithelial fibrosis

epithelial

fibrosis

10001 Phosphorylation fails to activate chloride channels from cystic fibro

18197 Relationship between mammographic and histologic features of breast t

6944 Cyclic adenosine monophosphate-dependent kinase in cystic fibrosis tr

Elapsed time: 1

|

Scanning the term-doc matrix

A simple program to scan the term-document matrix looking for documents

that contain a search term.

|

#!/usr/bin/mumps

#+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

#+

#+ Mumps ISR Software Library

#+ Copyright (C) 2008 by Kevin C. O'Kane

#+

#+ Kevin C. O'Kane

#+ okane@cs.uni.edu

#+

#+

#+ This program is free software; you can redistribute it and/or modify

#+ it under the terms of the GNU General Public License as published by

#+ the Free Software Foundation; either version 2 of the License, or

#+ (at your option) any later version.

#+

#+ This program is distributed in the hope that it will be useful,

#+ but WITHOUT ANY WARRANTY; without even the implied warranty of

#+ MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the

#+ GNU General Public License for more details.

#+

#+ You should have received a copy of the GNU General Public License

#+ along with this program; if not, write to the Free Software

#+ Foundation, Inc., 59 Temple Place, Suite 330, Boston, MA 02111-1307 USA

#+

#++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

# tqw.mps Feb 28, 2008

kill ^query

kill ^tmp

write "Enter search terms: "

read a

if a="" halt

for i=1:1 do

. set b=$piece(a," ",i)

. if b="" break

. set b=$zn(b) // lower case, no punct

. set b=$zstem(b) // stem it

. set ^query(b)=1

if $order(^query(""))="" halt

set q=0

for w="":$order(^query(w)):"" write w," " set q=q+1

write !

set t1=$zd1

for w="":$order(^query(w)):"" do

. for i="":$order(^index(w,i)):"" do

.. if $data(^tmp(i)) set ^tmp(i)=^tmp(i)+1

.. else set ^tmp(i)=1

for i="":$order(^tmp(i)):"" do

. if ^tmp(i)=q write i,?8,$j($zzCosine(^doc(i),^query),5,3)," ",$extract(^t(i),1,70),!

write !,"Elapsed time: ",$zd1-t1,!

Enter search terms: epithelial fibrosis

10001 0.180 Phosphorylation fails to activate chloride channels from cystic fibro

18197 0.291 Relationship between mammographic and histologic features of breast t

6944 0.323 Cyclic adenosine monophosphate-dependent kinase in cystic fibrosis tr

Elapsed time: 0

|

Weighted scanning the term-doc matrix

Similar to the above but all terms not required. Results sorted

by sum of weights of terms in the documents.

Note: the $job function returns the process id of the

running program. This is unique and it is used to

name a temporary file that contains the

unsorted results.

|

#!/usr/bin/mumps

# tqw1.mps Feb 27, 2008

kill ^query

kill ^tmp

write "Enter search terms: "

read a

if a="" halt

for i=1:1 do

. set b=$piece(a," ",i)

. if b="" break

. set b=$zn(b) // lower case, no punct

. set b=$zstem(b) // stem it

. set ^query(b)=""

if $order(^query(""))="" halt

set q=0

for w="":$order(^query(w)):"" write w," " set q=q+1

write !

set t1=$zd1

for w="":$order(^query(w)):"" do

. for i="":$order(^index(w,i)):"" do

.. if $data(^tmp(i)) set ^tmp(i)=^tmp(i)+^index(w,i)

.. else set ^tmp(i)=^index(w,i)

set fn=$job_",new"

open 1:fn // $job number is unique to this process

use 1

for i="":$order(^tmp(i)):"" do

. write ^tmp(i)," ",$extract(^t(i),1,70),!

close 1

use 5

set %=$zsystem("sort -n "_$job_"; rm "_$job)

write !,"Elapsed time: ",$zd1-t1,!

Enter search terms: epithelial fibrosis

epithelial fibrosis

9.02 Adaptation of the jejunal mucosa in the experimental blind loop syndr

9.02 Adherence of Staphylococcus aureus to squamous epithelium: role of fi

9.02 Anti-Fx1A induces association of Heymann nephritis antigens with micr

9.02 Anti-human tumor antibodies induced in mice and rabbits by "internal

9.02 Bacterial adherence: the attachment of group A streptococci to mucosa

9.02 Benign persistent asymptomatic proteinuria with incomplete foot proce

9.02 Binding of navy bean (Phaseolus vulgaris) lectin to the intestinal ce

9.02 Cellular and non-cellular compositions of crescents in human glomerul

9.02 Central nervous system metastases in epithelial ovarian carcinoma.

...

27.06 A new model system for studying androgen-induced growth and morphogen

27.06 Immunohistochemical observations on binding of monoclonal antibody to

27.7 Cyclic adenosine monophosphate-dependent kinase in cystic fibrosis tr

28.34 Relationship between mammographic and histologic features of breast t

28.98 Asbestos induced diffuse pleural fibrosis: pathology and mineralogy.

28.98 High dose continuous infusion of bleomycin in mice: a new model for d

28.98 Taurine improves the absorption of a fat meal in patients with cystic

33.81 Measurement of nasal potential difference in adult cystic fibrosis, Y

43.47 Are lymphocyte beta-adrenoceptors altered in patients with cystic fib

43.47 Lipid composition of milk from mothers with cystic fibrosis.

57.96 Pulmonary abnormalities in obligate heterozygotes for cystic fibrosis

Elapsed time: 0

|

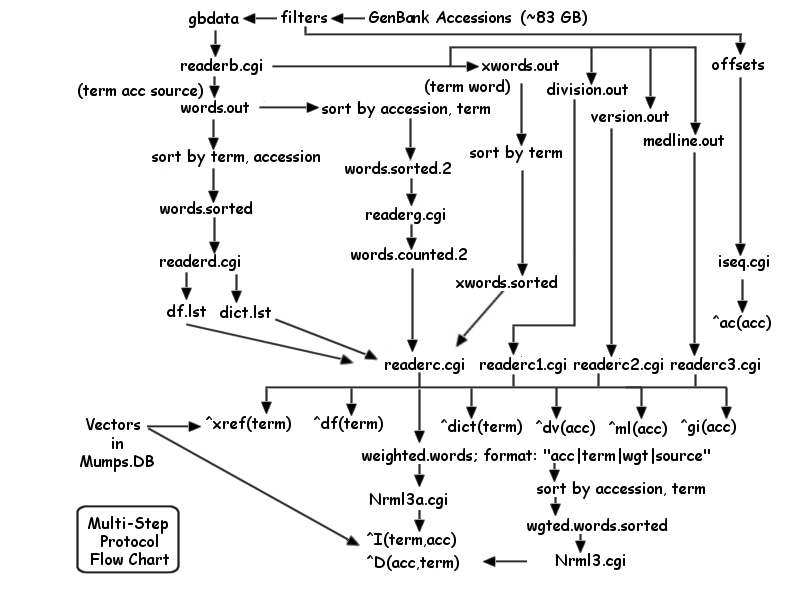

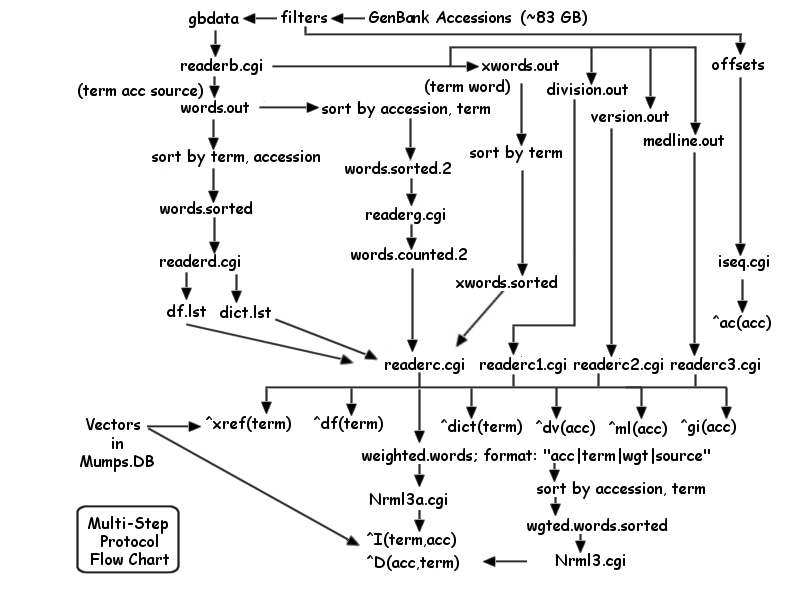

Scripted test runs

Often it is better to break the indexing process into multiple steps.

The Mumps interpreter generally runs faster when the run-time symbol

table is not cluttered with many variable names.

Also, using a script can provide an easy way to set

parameters to the several steps from one central

code point.

Below is the bash script used to do the test

runs in this book. It invokes many individual Mumps

programs as well as other system resources such

as sort.

The script generally takes a considerable amount of time to

execute so it is often run under the control of nohup.

This permits the user to logoff and the script to continue

running. All output generated during execution that would

otherwise appear on your screed (stdout and stderr)

will instead be captured and written to the file nohup.out.

To invoke a script with nohup type:

nohup nice scriptName &

nohup will initiate the script and capture the output.

The nice command causes your script to run at a

slightly reduced priority thus giving interactive users

preference. The & causes the processes to run in the background

thus giving you a command prompt immediately (rather than

when the script is complete). Note: is you want to kill the

script,

type ps and then kill -9 pid where pid is

the process id of the script. You may also want to kill

the program currently running as killing the script

only stops the starting of additional tasks; tasks in execution

continue in execution.

Note that the Mumps interpreter always looks for QUERY_STRING

in the environment. Thus, if you create QUERY_STRING

and place in it parameters, Mumps will read these and create variables

with values as is the case when your program is invoked by

the web server:

QUERY_STRING="idf=$TT_MIN_IDF&cos=$TT_MIN_COS"

export QUERY_STRING

In the example above, query string is build and exported to the

environment. It contains two assignment clauses that

will result in the variables idf and cos

being created and initialized in Mumps before your program

begins execution. the bash variables TT_MIN_IDF

and TT_MIN_COS are established at the beginning of the script and

their values are substituted when QUERY_STRING is created.

Note the $'s - these cause the substitution and are required

by bash syntax.

|

#!/bin/bash

#+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

#+

#+ Mumps Information Storage and Retrieval Software Library

#+ Copyright (C) 2006, 2008 by Kevin C. O'Kane

#+

#+ Kevin C. O'Kane

#+ okane@cs.uni.edu

#+

#+

#+ This program is free software; you can redistribute it and/or modify

#+ it under the terms of the GNU General Public License as published by

#+ the Free Software Foundation; either version 2 of the License, or

#+ (at your option) any later version.

#+

#+ This program is distributed in the hope that it will be useful,

#+ but WITHOUT ANY WARRANTY; without even the implied warranty of

#+ MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the

#+ GNU General Public License for more details.

#+

#+ You should have received a copy of the GNU General Public License

#+ along with this program; if not, write to the Free Software

#+ Foundation, Inc., 59 Temple Place, Suite 330, Boston, MA 02111-1307 USA

#+

#++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

# clear old nohup.out

cat /dev/null > nohup.out

# medline MedlineInterp.script March 9, 2008

TRUE=1

FALSE=0

# maximum number of documents to scan

MAX_DOCS=20000

# maximum word occurrence for stopselect.mps

MAX_WORDS=1000

# minumum word occurrence for stopselect.mps

MIN_WORDS=5

# minimum IDF value for doc-doc matrix

DD_MIN_IDF=7

# minimum DD cosine weight

DD_MIN_WGT=0.8

# minimum weight in weight.mps

MIN_WEIGHT=5

# minimum IDF in tt.mps

TT_MIN_IDF=5

# minimum IDF cosine

TT_MIN_COS=0.8

# minimum co-occurence tt count

TT_MIN_COUNT=10

# perform steps:

DO_ZIPF=$TRUE

DO_TT=$TRUE

DO_CONVERT=$TRUE

DO_DICTIONARY=$TRUE

DO_STOPSELECT=$TRUE

DO_IDF=$TRUE

DO_WEIGHT=$TRUE

DO_COHESION=$TRUE

DO_JACCARD=$TRUE

DO_TTCLUSTER=$TRUE

DO_DISCRIM=$TRUE

DO_DOCDOC=$TRUE

DO_CLUSTERS=$TRUE

DO_HIERARCHY=$TRUE

DO_TEST=$TRUE

if [ $DO_COHESION -eq $TRUE ]

then

DO_TT=$TRUE

fi

if [ $DO_JACCARD -eq $TRUE ]

then

DO_TT=$TRUE

fi

# delete any prior data bases

rm key.dat

rm data.dat

if [ $DO_CONVERT -eq $TRUE ]

then

echo "Convert data base format"

date

starttime.mps

QUERY_STRING="MAX=$MAX_DOCS"

export QUERY_STRING

echo "MAX documents to read $QUERY_STRING"

reformat.mps < osu.medline > rtrans.txt

stems.mps < rtrans.txt > translated.txt

echo "Conversion done - total time: `endtime.mps`"

echo

fi

if [ $DO_DICTIONARY -eq $TRUE ]

then

echo "Generate and sort word frequency list"

date

starttime.mps

dictionary.mps < translated.txt > dictionary.unsorted

sort -nr < dictionary.unsorted > dictionary.sorted

echo "Word frequency list done - total time: `endtime.mps`"

echo

fi

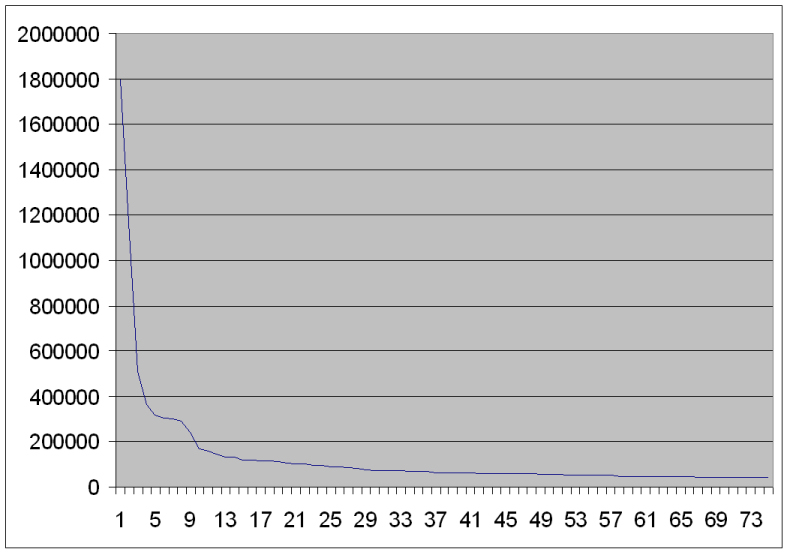

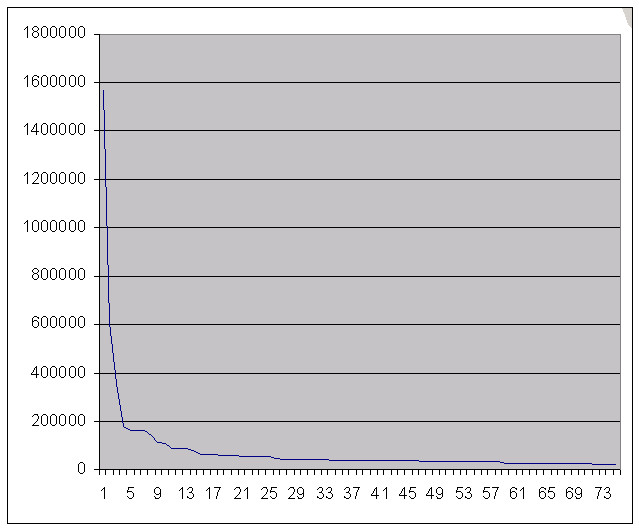

if [ $DO_ZIPF -eq $TRUE ]

then

echo "Zipf calculation"

date

starttime.mps

Zdictionary.mps < rtrans.txt | sort -nr | zipf.mps > medline.zipf

endtime.mps

echo

fi

echo "Count documents"

grep "xxxxx115" translated.txt | wc > DocStats

echo "Document grep result:"

cat DocStats

if [ $DO_STOPSELECT -eq $TRUE ]

then

echo "Select good words"

date

starttime.mps

QUERY_STRING="max=$MAX_WORDS&min=$MIN_WORDS"

export QUERY_STRING

echo "QUERY_STRING = $QUERY_STRING"

ls -l dictionary.sorted

stopselect.mps < dictionary.sorted > good

echo "Good word selection time: `endtime.mps`"

rm dictionary.unsorted

echo

fi

if [ $DO_IDF -eq $TRUE ]

then

echo "Generate and sort IDF weights"

date

starttime.mps

idf.mps > idf.unsorted

echo "idf done"

sort -n < idf.unsorted > idf.sorted

echo "IDF time: `endtime.mps`"

rm idf.unsorted

fi

if [ $DO_WEIGHT -eq $TRUE ]

then

echo "Create weighted doc vectors"

date

starttime.mps

# set minimum IDF*Freq value

QUERY_STRING="idfmin=$MIN_WEIGHT"

export QUERY_STRING

echo "QUERY_STRING = $QUERY_STRING"

weight.mps

echo "Weighting time: `endtime.mps`"

echo

fi

echo "Dump/restore"

echo "Old data base sizes:"

ls -lh key.dat data.dat

QUERY_STRING="file=weight.dmp"

export QUERY_STRING

starttime.mps

dump.mps

rm key.dat

rm data.dat

restore.mps

echo "New data base sizes:"

ls -lh key.dat data.dat

echo "Dump/restore time: `endtime.mps`"

echo

if [ $DO_TT -eq $TRUE ]

then

echo "Calculate and sort term-term matrix"

date

QUERY_STRING="min=$TT_MIN_COUNT&idf=$TT_MIN_IDF&cos=$TT_MIN_COS"

export QUERY_STRING

starttime.mps

tt.mps > tt

sort -n < tt > tt.sorted

echo "Term-term time: `endtime.mps`"

echo

if [ $DO_COHESION -eq $TRUE ]

then

echo "Calculate and sort cohesion matrix"

date

starttime.mps

cohesion.mps > cohesion

sort -nr < cohesion > cohesion.sorted

echo "Cohesion time: `endtime.mps`"

echo

fi

if [ $DO_JACCARD -eq $TRUE ]

then

echo "Calculate and sort jaccard term-term matrix"

date

starttime.mps

jaccard-tt.mps > jaccard-tt

sort -n < jaccard-tt > jaccard-tt.sorted

echo "Jaccard term time: `endtime.mps`"

echo

fi

if [ $DO_TTCLUSTER -eq $TRUE ]

then

echo "Calculate term clusters"

date

starttime.mps

clustertt.mps > cluster-tt

echo "Cluster term time: `endtime.mps`"

echo

fi

fi

if [ $DO_DISCRIM -eq $TRUE ]

then

echo "Calculate discrimination co-efficients"

date

starttime.mps

discrim3.mps

echo "discrim.mps time: `endtime.mps`"

sort -n < discrim > discrim.sorted

echo

fi

echo "Dump/restore"

date

echo "Old data base size:"

ls -lh key.dat data.dat

QUERY_STRING="file=discrim.dmp"

export QUERY_STRING

starttime.mps

dump.mps

rm key.dat

rm data.dat

restore.mps

echo "New data base size:"

ls -lh key.dat data.dat

echo "dump/restore end: `endtime.mps`"

echo

if [ $DO_DOCDOC -eq $TRUE ]

then

echo "Calculate document-document matrix"

date

starttime.mps

QUERY_STRING="wgt=$DD_MIN_WGT&min=$DD_MIN_IDF"

export QUERY_STRING

echo "QUERY_STRING = $QUERY_STRING"

docdoc5.mps > dd2

echo "Doc-doc time: `endtime.mps`"

echo

fi

if [ $DO_CLUSTERS -eq $TRUE ]

then

echo "Calculate clusters"

date

starttime.mps

cluster1.mps > clusters

echo "Cluster time: `endtime.mps`"

echo

fi

if [ $DO_HIERARCHY -eq $TRUE ]

then

echo "Calculate hierarchy"

date

starttime.mps

ttfolder.mps > ttfolder

echo "Hierarchy time: `endtime.mps`"

echo

echo "Calculate tables"

date

starttime.mps

tab.mps < ttfolder > tab

index.mps

echo "Hierarchy time: `endtime.mps`"

echo

fi

if [ $DO_TEST -eq $TRUE ]

then

echo "alcohol" > tstquery

echo "test query is alcohol"

medlineRetrieve.mps < tstquery

fi

|

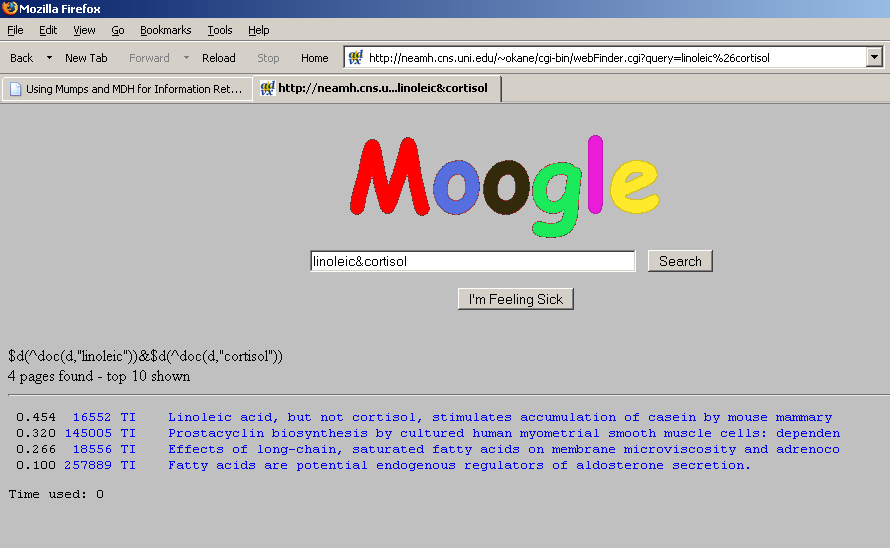

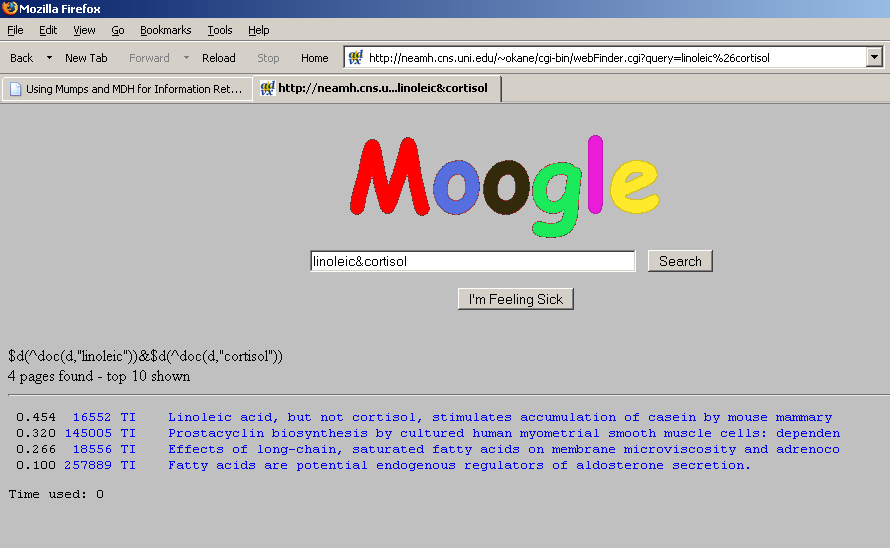

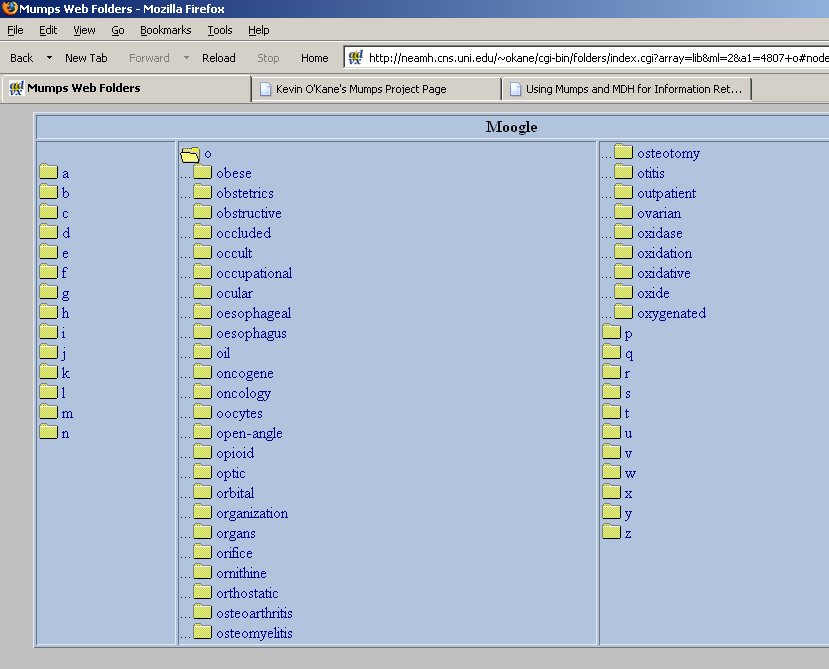

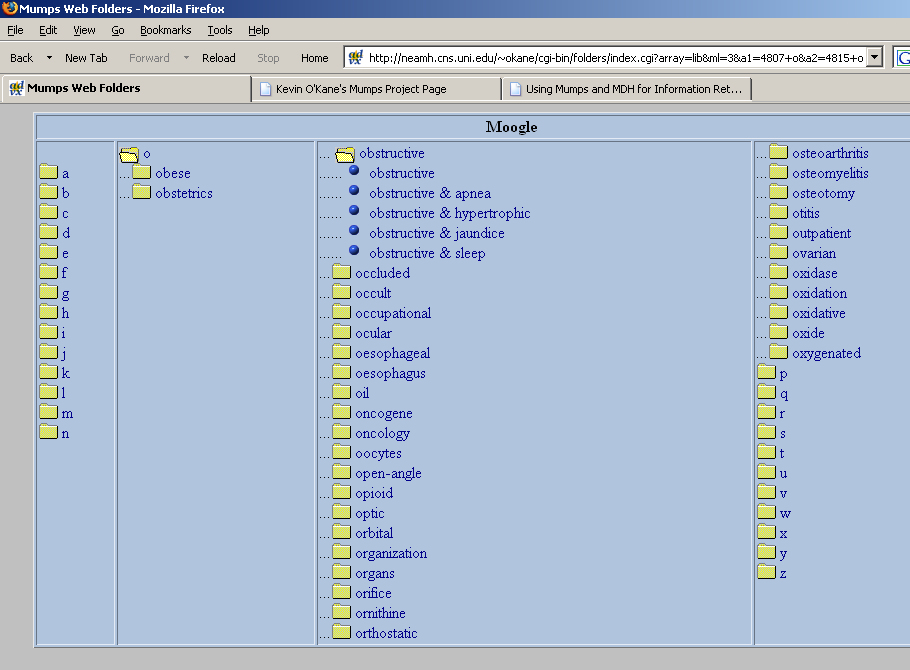

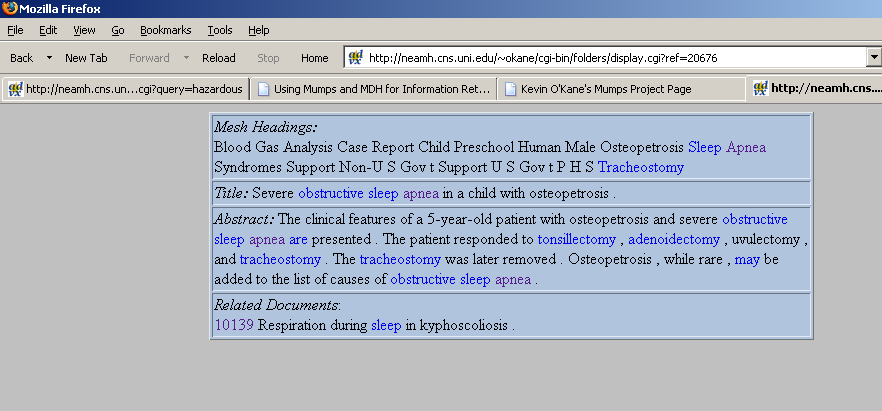

Simple Retrieval

The following program reads in a set of query words into

a query vector and then calculates the cosines between

the query and the document vectors. It then prints out

the titles of the 10 documents with the highest cosine

correlations with the query.

Note: this program makes use of a synonym dictionary

which will be discussed below.

|

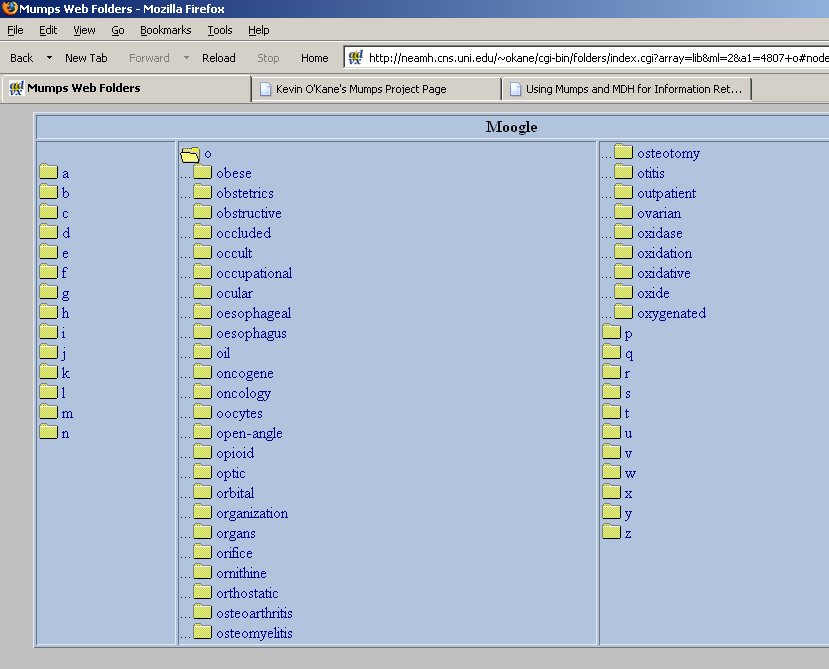

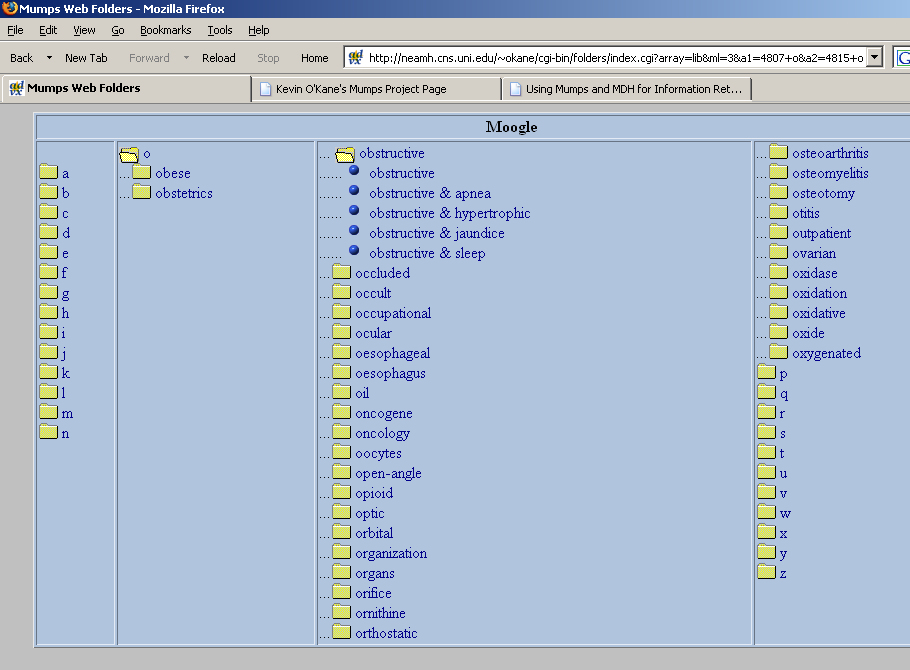

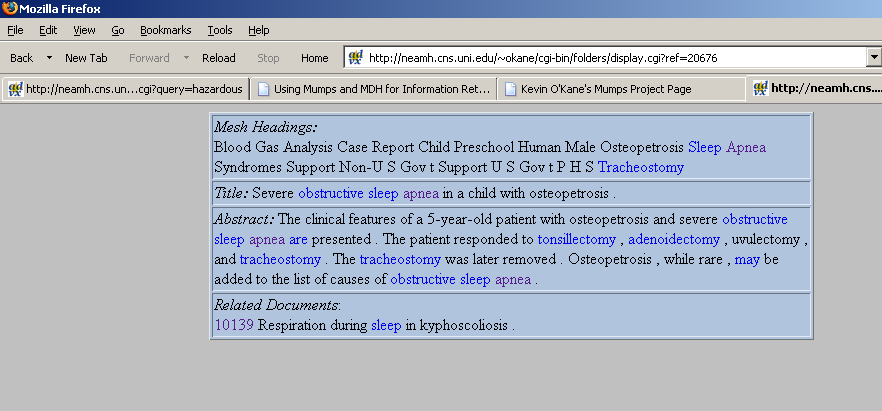

Basic Retrieval

|

|

#!/usr/bin/mumps

#+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

#+

#+ Mumps ISR Software Library

#+ Copyright (C) 2005, 2007, 2008 by Kevin C. O'Kane

#+

#+ Kevin C. O'Kane

#+ okane@cs.uni.edu

#+

#+

#+ This program is free software; you can redistribute it and/or modify

#+ it under the terms of the GNU General Public License as published by

#+ the Free Software Foundation; either version 2 of the License, or

#+ (at your option) any later version.

#+

#+ This program is distributed in the hope that it will be useful,

#+ but WITHOUT ANY WARRANTY; without even the implied warranty of

#+ MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the

#+ GNU General Public License for more details.

#+

#+ You should have received a copy of the GNU General Public License

#+ along with this program; if not, write to the Free Software

#+ Foundation, Inc., 59 Temple Place, Suite 330, Boston, MA 02111-1307 USA

#+

#++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

# simpleRetrieval.mps Feb 28, 2008

open 1:"osu.medline,old"

if '$test write "osu.medline not found",! halt

write "Enter query: "

kill ^query

kill ^ans

for do // extract query words to query vector

. set w=$zzScanAlnum

. if '$test break

. set w=$zstem(w)

. set ^query(w)=1

write "Query is: "

for w="":$order(^query(w)):"" write w," "

write !

set time0=$zd1

for i="":$order(^doc(i)):"" do // calculate cosine between query and each doc

. if i="" break

. set c=$zzCosine(^doc(i),^query)

# If cosine is > zero, put it and the doc offset (^doc(i)) into an answer vector.

# Make the cosine a right justified string of length 5 with 3 digits to the

# right of the decimal point. This will force numeric ordering on the first key.

. if c>0 set ^ans($justify(c,5,3),^doc(i))=""

write "results:",!

set x=""

for %%=1:1:10 do

. set x=$order(^ans(x),-1) // cycle thru cosines in reverse (descending) order.

. if x="" break

. for i="":$order(^ans(x,i)):"" do

.. use 1 set %=$zseek(i) // move to correct spot in file primates.text

.. read a // skip STAT- MEDLINE

.. for k=1:1:30 do // the limit of 30 is to prevent run aways.

... use 1

... read a // find the title

... if $extract(a,1,3)="TI " use 5 write x," ",$extract(a,7,80),!

... if $extract(a,1,3)="AB " for do

.... use 5

.... write ?5,$extract(a,7,120),!

.... use 1

.... read a

.... if '$test break

.... if $extract(a,1,3)'=" " break

... if $extract(a,1,3)="STA" use 5 write ! break

write !,"Time used: ",$zd1-time0," seconds",!

which produces the following results on the first 20,000 abstracts:

Enter query: epithelial fibrosis

Query is: epithelial fibrosis

results:

0.393 Epithelial tumors of the ovary in women less than 40 years old.

From Jan 1, 1978 through Dec 31, 1983, 64 patients

with epithelial ovarian tumors, frankly malignant or

borderline, were managed at one institution. Nineteen

patients (29.7%) were under age 40. The youngest patient

was 19 years old. Nulliparity was present in 32% of

this group of patients. Of these young patients, 58%

had borderline epithelial tumors, compared to 13% of

patients over 40 years of age. Twenty-one percent of

the young patients were initially managed by unilateral

adnexal surgery. The overall cumulative actuarial survival

rate of all young patients was 93%. Young patients

with epithelial ovarian tumors tend to have earlier

grades of epithelial neoplasms, and survival is better

than that reported for older patients with similar

tumors.

0.367 Misdiagnosis of cystic fibrosis.

On reassessment of 179 children who had previously

been diagnosed as having cystic fibrosis seven (4%)

were found not to have the disease. The importance

of an accurate sweat test is emphasised as is the necessity

to prove malabsorption or pancreatic abnormality to

support the diagnosis of cystic fibrosis.

0.367 Are lymphocyte beta-adrenoceptors altered in patients with cystic fibrosis

1. Beta-adrenergic responsiveness may be decreased

in cystic fibrosis. In order to determine whether this

reflects an alteration in the human lymphocyte beta-receptor

complex, we studied 12 subjects with cystic fibrosis

(six were stable and ambulatory and six were decompensated,

hospitalized) as compared with 12 normal controls.

2. Lymphocyte beta-receptor mediated adenylate cyclase

activity (EC 4.6.1.1) was not decreased in the ambulatory

cystic fibrosis patients as compared with controls.

In contrast, decompensated hospitalized cystic fibrosis

patients demonstrated a significant reduction in beta-receptor

mediated lymphocyte adenylate cyclase activity expressed

as the relative increase over basal levels stimulated

by the beta-agonist isoprenaline compared with both

normal controls and stable ambulatory cystic fibrosis

patients (control 58 +/- 4%; ambulatory cystic fibrosis

patients 51 +/- 7%; decompensated hospitalized cystic

fibrosis patients 28 +/- 5%; P less than 0.05). 3.

Our data suggest that defects in lymphocyte beta-receptor

properties in cystic fibrosis patients may be better

correlated with clinical status than with presence

or absence of the disease state.

0.352 Measurement of nasal potential difference in adult cystic fibrosis, Young'

Previous work confirmed the abnormal potential difference

between the undersurface of the inferior nasal turbinate

and a reference electrode in cystic fibrosis, but the

technique is difficult and the results show overlap

between the cystic fibrosis and the control populations.

In the present study the potential difference from

the floor of the nose has therefore been assessed in

normal subjects, as well as in adult patients with

cystic fibrosis, bronchiectasis and Young's syndrome.

Voltages existing along the floor of the nasal cavity

were recorded. The mean potential difference was similar

in controls (-18 (SD 5) mv) and in patients with bronchiectasis

(-17 (6) mv) and Young's syndrome (-20 (6) mv). The

potential difference in cystic fibrosis (-45 (8) mv)

was significantly different from controls (p less than

0.002) and there was no overlap between the cystic

fibrosis values and values obtained in normal and diseased

controls. This simple technique therefore discriminates

well between patients with cystic fibrosis and other

populations, raising the possibility of its use to

assist in diagnosis.

0.342 Pulmonary abnormalities in obligate heterozygotes for cystic fibrosis.

Parents of children with cystic fibrosis have been

reported to have a high prevalence of increased airway

reactivity, but these studies were done in a select

young, healthy, symptomless population. In the present

study respiratory symptoms were examined in 315 unselected

parents of children with cystic fibrosis and 162 parents

of children with congenital heart disease (controls).

The cardinal symptom of airway reactivity, wheezing,

was somewhat more prevalent in cystic fibrosis parents

than in controls, but for most subgroups this increased

prevalence did not reach statistical significance.

Among those who had never smoked, 38% of obligate heterozygotes

for cystic fibrosis but only 25% of the controls reported

wheezing (p less than 0.05). The cystic fibrosis parents

who had never smoked but reported wheezing had lower

FEV1 and FEF25-75, expressed as a percentage of the

predicted value, than control parents; and an appreciable

portion of the variance in pulmonary function was contributed

by the interaction of heterozygosity for cystic fibrosis

with wheezing. For cystic fibrosis parents, but not

controls, the complaint of wheezing significantly contributed

to the prediction of pulmonary function (FEV1 and FEF25-75).

In addition, parents of children with cystic fibrosis

reported having lung disease before the age of 16 more

than twice as frequently as control parents. Other

respiratory complaints, including dyspnoea, cough,

bronchitis, and hay fever, were as common in controls

as in cystic fibrosis heterozygotes. These data are

consistent with the hypothesis that heterozygosity

for cystic fibrosis is associated with increased airway

reactivity and its symptoms, and that the cystic fibrosis

heterozygotes who manifest airway reactivity and its

symptoms may be at risk for poor pulmonary function.

0.323 Retroperitoneal fibrosis and nonmalignant ileal carcinoid.

The carcinoid syndrome and fibrosis are unusual but

identifiable disease processes. We report a rare case

of retroperitoneal fibrosis associated with an ileal

carcinoid in the absence of metastatic disease. The

literature is reviewed.

0.323 Cyclic adenosine monophosphate-dependent kinase in cystic fibrosis trachea

Cl-impermeability in cystic fibrosis (CF) tracheal

epithelium derives from a deficiency in the beta-adrenergic

regulation of apical membrane Cl- channels. To test

the possibility that cAMP-dependent kinase is the cause

of this deficiency, we assayed this kinase in soluble

fractions from cultured airway epithelial cells, including

CF human tracheal epithelial cells. Varying levels

of cAMP were used in these assays to derive both a

Vmax and apparent dissociation constant (Kd) for the

enzymes in soluble extracts. The cAMP-dependent protein

kinase from CF human tracheal epithelial cells has

essentially the same Vmax and apparent Kd as non-CF

human, bovine, and dog tracheal epithelial cells. Thus,

the total activity of the cAMP-dependent kinases and

their overall responsiveness to cAMP are unchanged

in CF.

0.313 Poor prognosis in patients with rheumatoid arthritis hospitalized for inte

Fifty-seven patients with rheumatoid arthritis (RA)

were treated in hospital for diffuse interstitial lung

fibrosis. Although interstitial fibrosis (either on

the basis of lung function tests or chest roentgenograms

or both) is fairly common among patients with RA, according

to this study interstitial fibrosis of sufficient extent

or severity to warrant hospitalization was rare: incidence

of hospitalization due to the lung disease in RA patients

was one case per 3,500 patient-years. Eight patients

had a largely reversible lung disease associated with

drug treatment (gold, D-penicillamine or nitrofurantoin.)

The remaining 49 had interstitial fibrosis of unknown

cause. Causes for hospitalization were respiratory

and general symptoms in 38, but infiltrations on routine

chest roentgenographic examinations alone in eleven

patients. Forty-five out of the 49 patients had crackles

on auscultation. The most typical findings in lung

function tests were restriction and a decreased diffusion

capacity. These 49 patients showed a poor prognosis,

with a median survival of 3.5 years and a five-year

survival rate of 39 percent.

0.291 Relationship between mammographic and histologic features of breast tissue

Mammograms and histologic slides of a group of 320

women who had breast symptoms and a biopsy without

cancer being found were reviewed. The mammographic

features assessed were the parenchymal pattern and

extent of nodular and homogeneous densities. In addition

to the pathologic diagnosis, the histologic features

assessed included epithelial hyperplasia and atypia,

intralobular fibrosis, and extralobular fibrosis. Among

premenopausal women, those with marked intralobular

fibrosis were more likely to have large (3+ mm) nodular

densities on the mammogram. Among postmenopausal women,

epithelial hyperplasia or atypia was related to having

nodular densities in at least 40% of the breast volume.

In both groups, marked extralobular fibrosis was related

to the presence of homogeneous density on the mammogram.

We conclude that mammographic nodular densities may

be an expression of lobular characteristics, whereas

homogeneous density may reflect extralobular connective

tissue changes.

0.290 Recent trends in the surgical treatment of endomyocardial fibrosis.

Several modifications of the traditional treatment

of endomyocardial fibrosis have been made based on

a personal experience of 51 surgical cases and on the

reports of others in the surgical literature during

the last decade. Description of these techniques and

the author's current concept of the pathological processes

are reported herein.

0.279 Vitamin A deficiency in treated cystic fibrosis: case report.

We describe a patient with cystic fibrosis and hepatic

involvement who, although on pancreatic extract, developed

vitamin A deficiency, night blindness, and a characteristic

fundus picture. All of these abnormalities were reversed

by oral vitamin A supplementation.

0.269 Epithelial ovarian tumor in a phenotypic male.

Laparotomy in a 41-year-old married man with non-treated

left cryptorchidism revealed female internal genitals

on the left side, and an epithelial ovarian tumor of

intermediate malignancy. Germinal malignancies are

frequent in intersexes, but non-germinal gonadal neoplasms

are rare. This is the second reported case of epithelial

ovarian tumor in intersexes, and the first case of

epithelial ovarian tumor in an intersex registered

as male.

Time used: 14 seconds

|

The above information storage and retrieval program is limited, however, because it sequentially

calculates the cosines between all documents and the query

vector. In fact, most documents contain no words in common with

the query vector and, consequently, their cosines are zero.

Thus, a possible speedup technique would be to only calculate the

cosines between the query and those documents that contain

at least one term in common with the query vector.

This

can be done by first constructing a vector of

document numbers of documents containing at least one

term in common with the query.

This is done in the following program as the query words are read

and processed. After processing a query word against the

stop list, synonym table, stemming and so on, if the resulting

term is in the vocabulary, add to a temporary vector ^tmp

those document numbers on the row from the term-document

matrix associated with the query term. When all query words

have been processed, the temporary vector

^tmp will contain, as indices, those document numbers

of documents that contain at least one query term.

While these documents represent, to some extent, a

response to the query, ranking the documents is important.

This could have been done somewhat simply by keeping

in ^tmp a count of the number of query terms

each document contained or it can now be calculated by

calculating a cosine or other suitable similarity

function between each of the document vectors whose

document numbers are in ^tmp and the query

vector ^query.

In the following, the cosine function is used to calculate the

similarity between the document vectors and the query vector.

As each cosine is calculated, it is stored along with the

value of ^doc(i) (where i^ans.

The purpose for doing this is to create a global array

ordered by cosine values as its first index and document identifiers

as its second index. That will allow the

results to be presented in descending cosine value order.

In order to avoid and ASCII sort of the numeric

cosine values, each cosine value is stored as an index

in a field of width 5 with three digits to the right of the decimal

point. This format insures that the first index will be in

numeric collating sequence order.

The second index of ^ans is the value of the

file offset pointer for the first line of the document

in the flat document file. Finally, the results are

presented in reverse cosine order (from high to low)

and the original documents at each cosine value are

printed (note: for a given cosine value, there may

be more than one document).

|

Faster Basic Retrieval

|

#!/usr/bin/mumps

#+++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

#+

#+ Mumps ISR Software Library

#+ Copyright (C) 2005, 2006, 2007, 2008 by Kevin C. O'Kane

#+

#+ Kevin C. O'Kane

#+ okane@cs.uni.edu

#+

#+

#+ This program is free software; you can redistribute it and/or modify

#+ it under the terms of the GNU General Public License as published by

#+ the Free Software Foundation; either version 2 of the License, or

#+ (at your option) any later version.

#+

#+ This program is distributed in the hope that it will be useful,

#+ but WITHOUT ANY WARRANTY; without even the implied warranty of

#+ MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the

#+ GNU General Public License for more details.

#+

#+ You should have received a copy of the GNU General Public License

#+ along with this program; if not, write to the Free Software

#+ Foundation, Inc., 59 Temple Place, Suite 330, Boston, MA 02111-1307 USA

#+

#++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

# fasterRetrieval.mps Feb 28, 2008

open 1:"osu.medline,old"

if '$test write "osu.medline not found",! halt

write "Enter query: "

kill ^query

kill ^ans

kill ^tmp

for do // extract query words to query vector

. set w=$zzScanAlnum

. if '$test break

. set w=$zstem(w)

. if '$data(^dict(w)) quit // skip unknown words

. set ^query(w)=1

write "Query is: "

for w="":$order(^query(w)):"" write w," "

write !

set time0=$zd1

# Find documents containing one or more query terms.

for w="":$order(^query(w)):"" do

. for d="":$order(^index(w,d)):"" set ^tmp(d)="" // retain doc id

for i="":$order(^tmp(i)):"" do // calculate cosine between query and each doc

. set c=$zzCosine(^doc(i),^query) // MDH cosine calculation

# If cosine is > zero, put it and the doc offset (^doc(i)) into an answer vector.

# Make the cosine a right justified string of length 5 with 3 digits to the

# right of the decimal point. This will force numeric ordering on the first key.

. if c>0 set ^ans($justify(c,5,3),^doc(i))=""

set x=""

for %%=1:1:10 do

. set x=$order(^ans(x),-1) // cycle thru cosines in reverse (descending) order.

. if x="" break

. for i="":$order(^ans(x,i)):"" do // get the doc offsets for each cosine value.

.. use 1 set %=$zseek(i) // move to correct spot in file primates.text

.. read a // skip STAT- MEDLINE

.. for k=1:1:30 do // the limit of 30 is to prevent run aways.

... use 1

... read a // find the title

... if $extract(a,1,3)="TI " use 5 write x," ",$extract(a,7,80),!

... if $extract(a,1,3)="AB " for do

.... use 5

.... write ?5,$extract(a,7,120),!

.... use 1

.... read a

.... if '$test break

.... if $extract(a,1,3)'=" " break

... if $extract(a,1,3)="STA" use 5 write ! break

write !,"Time used: ",$zd1-time0," seconds",!

yields the same results as above but takes less than 1 second.

|

Thesaurus construction (Salton83, pages 76-84)

It is possible to find connections between terms based on their

frequency of co-occurrence. Terms that co-occur frequently

together are likely to be related.

These relationships can indicate that the words

are synonyms or terms used to express a concept.

For example, a strong relationship between the words "artificial"

and "intelligence" in a computer science data base

is due to the phrase "artificial intelligence" which

names a branch of computing. In this case, the

relationship is not that of a synonym.

Similarly, in the medical data base

terms such as

"circadian" "rhythm" and

"vena" "cava" and

"herpes" "simplex" are concepts expressed as

more than one term.

On the other hand, as seen below, words like

"synergism" and "synergistic", "cyst" and

"cystic", "schizophrenia" and "schizophrenic",

"nasal" and "nose", and

"laryngeal" and "larynx"

are examples of synonym relationships.

In other cases, the relationship is not so tight

so as to be a full synonym but express a categorical

relationship such as "anesthetic" and "halothane",

"analgesia" and "morphine",

"nitrogen" and "urea", and

"nurse" and "personnel".

Regardless of the relationship, a thesaurus

table can be constructed giving a list of words that frequently co-occur.

With this information it is then possible to:

- augment queries with related words to improve recall;

- combine multiple related infrequently occurring terms into

broader, more frequently occurring categories terms; and,

- create middle frequency composite terms from otherwise unusable high

frequency component terms.

In its simplest form, we construct a square term-term

correlation matrix which gives the frequency of co-occurrence

of terms with one another. Thus, if some term A

occurs in 20 documents and if term B also occurs in these same

documents, the term-term correlation matrix cell for row A and column B

will have the entry 20. A term-term correlation matrix

lower diagonal matrix is the same as the upper diagonal

matrix since the relationship between term A and B is always the

same as the relationship between term B and A. The diagonal

itself is meaningless in that it is the correlation of a term with itself.

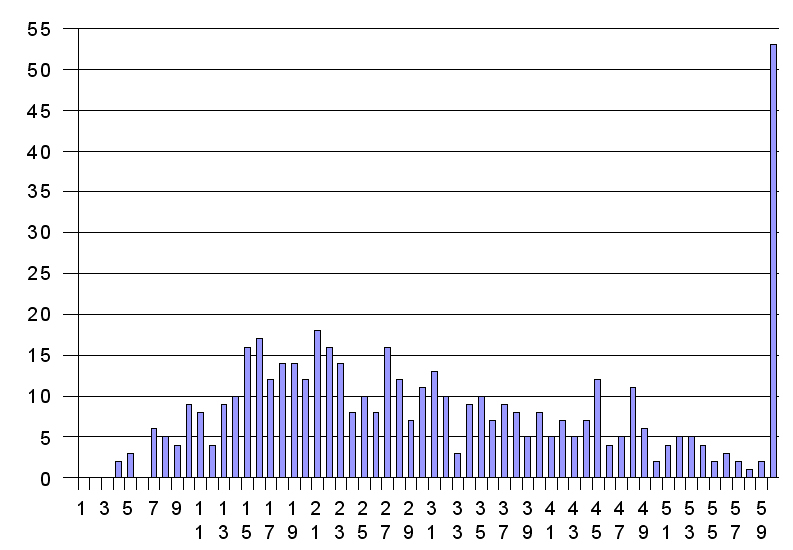

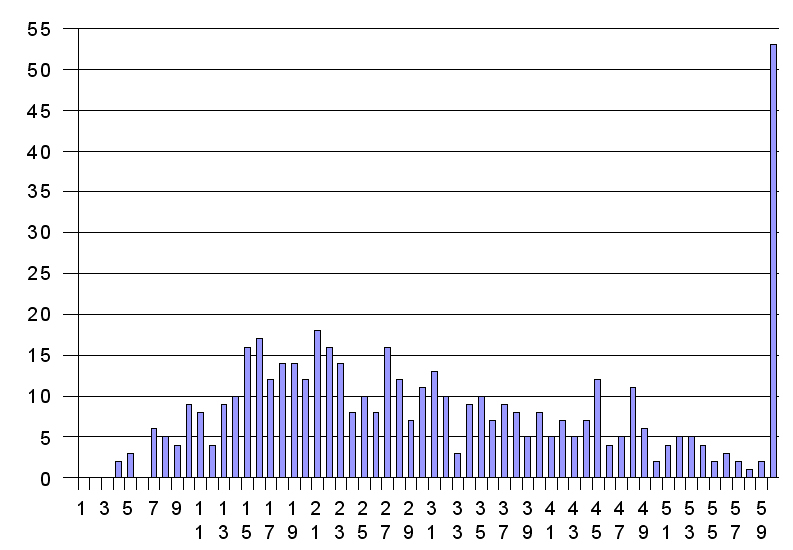

Calculating a complete term-term correlation matrix based on