January 30, 2008 8:39 AM

What is a Tree?

I can talk about something other than science. As I write this, I am at a talk called "What is a Tree?", by computational artist Ira Greenberg. In it, Greenberg is telling his story of going from art to math -- and computation -- and back.

Greenberg started as a traditional artist, based in drawing and focused in painting. He earned his degrees in Visual Art, from Cornell and Penn. His training was traditional, too -- no computation, no math. He was going to paint.

In his earliest work, Greenberg was caught up in perception. He found that he could experiment only with the motif in front of him. Over time he evolved from more realistic natural images to images that were more "synthetic", more plastic. His work came to be about shape and color. And pattern.

Alas, he wasn't selling anything. Like all of us, he needed to make some money. His uncle told him to "look into computers -- they are the future". (This is 1993 or so...) Greenberg could not have been less interested. Working with computers seemed like a waste of time. But he got a computer, some software, and some books, and he played. In spite of himself, he loved it. He was fascinated.

Soon he got paying gigs at places like Conde Nast. He was making good money doing computer graphics for marketing and publishing folks. At the time, he said, people doing computer graphics were like mad scientists, conjuring works with mystical incantations. He and his buddies found work as hired guns for older graphic artists who had no computer skills. They would stand over his shoulder, point at the screen, and say in rapid-fire style, "Do this, do this, do this." "We did, and then they paid us."

All the while, Greenberg was still doing his "serious work" -- painting -- on the side.

But he got good at this computer stuff. He liked it. And yet he felt guilty. His artist friends were "pure", and he felt like a sell-out. Even still, he felt an urge to "put it all together", to understand what this computer stuff really meant to his art. He decided to sell out all the way: to go to NYC and sell these marketable skills for big money. The time was right, and the money was good.

It didn't work. Going to an office to produce commercial art for hire changed him, and his wife noticed. Greenberg sees nothing wrong with this kind of work; it just wasn't for him. Still, he liked at least one thing about doing art in the corporate style: collaboration. He was able to work with designers, writers, marketing folks. Serious painters don't collaborate, because they are doing their own art.

The more he worked with computers in the creative process, the more he began to feel as if using tools like Photoshop and LightWave was cheating. They provide an experience that is too "mediated". With any activity, as you get better you "let the chaos guide you", but these tools -- their smoothness, their engineered perfection, their Undo buttons -- were too neat. Artists need fuzziness. He wanted to get his hands dirty. Like painting.

So Greenberg decided to get under the hood of Photoshop. He started going deeper. His artist friends thought he was doing the devil's work. But he was doing cool stuff. Oftentimes, he felt that the odd things generated by his computer programs were more interesting than his painting!

He went deeper with the mathematics, playing with formulas, simulating physics. He began to substitute formulas inside formulas inside formulas. He -- his programs -- produced "sketches".

At some point, he came across Processing, "an open source programming language and environment for people who want to program images, animation, and interactions". This is a domain-specific language for artists, implemented as an IDE for Java. It grew out of work done by John Maeda's group at the MIT Media Lab. These days he programs in ActionScript, Java, Flash, and Processing, and promotes Processing as perhaps the best way for computer-wary artists to get started computationally.

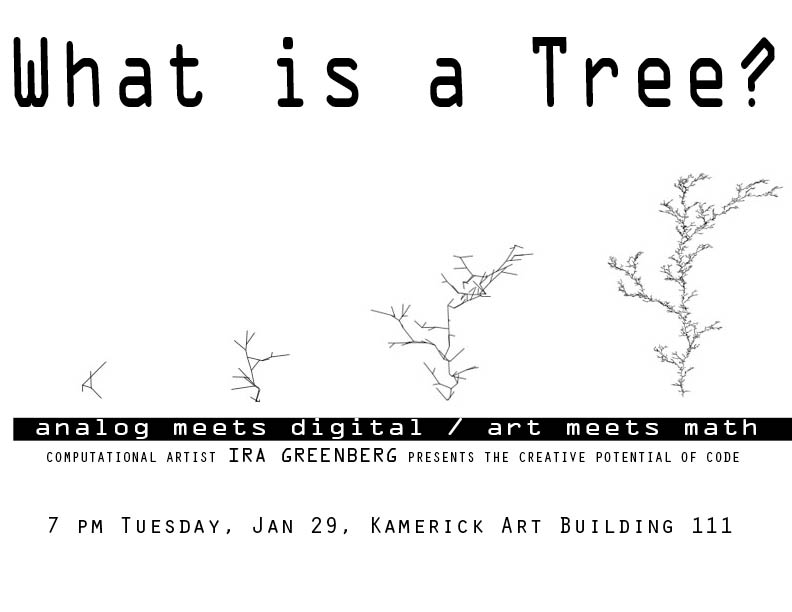

With his biographical sketch done, he moved on to the art that inspired his talk's title. He showed a series of programs that demonstrated his algorithmic approach to creativity. His example was a tree, which was a double entendre for his past as a painter of natural scenes and also for his embrace of computer science.

He started with the concept of a tree in a simple line drawing. Then he added variation: different angles, different branching factors. These created asymmetry in the image. Then he added more variation: different scales, different densities. Then he added more variation: different line thickness, "foliage" at the end of the smallest branches. With random elements in the program, he gets different outputs each time he runs the code. He added still more variation: color, space, dimension, .... He can keep going along as many different conceptual dimensions as he likes to create art. He can strive for verisimilitude, representation, abstraction, ... any artistic goal he might seek with a brush and oils.

Greenberg's artistic medium is code. He writes some code. He runs it. He changes some things, and runs it again. This process is interactive with the medium. He evolves not a specific work of art, but an algorithm that can generate an infinite number of works.
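To make the idea concrete, here is a minimal sketch of a tree-generating algorithm of the kind described above. It is not Greenberg's code, and it is written in Python rather than Processing; the names `grow_tree` and `make_tree`, the ranges for angles, scales, and branching factors, and the use of a seed are all assumptions chosen for illustration. The program produces line segments rather than pixels, so any drawing library could render them.

```python
import math
import random


def grow_tree(x, y, angle, length, depth, rng, segments):
    """Recursively append branch segments ((x0, y0), (x1, y1), depth)."""
    if depth == 0:
        return
    # Draw this branch as a straight line from (x, y) in direction `angle`.
    x1 = x + length * math.cos(angle)
    y1 = y + length * math.sin(angle)
    segments.append(((x, y), (x1, y1), depth))
    # Variation: each branch gets a random number of children, and each
    # child gets its own random change in angle and scale.
    for _ in range(rng.randint(2, 3)):
        child_angle = angle + rng.uniform(-0.6, 0.6)
        child_length = length * rng.uniform(0.6, 0.8)
        grow_tree(x1, y1, child_angle, child_length, depth - 1, rng, segments)


def make_tree(seed, depth=5):
    """Return the list of segments for one tree grown from `seed`."""
    rng = random.Random(seed)
    segments = []
    # The trunk starts at the origin and points straight up.
    grow_tree(0.0, 0.0, math.pi / 2, 100.0, depth, rng, segments)
    return segments
```

Calling `make_tree(1)` and `make_tree(2)` gives two different trees from the same algorithm, while calling `make_tree(1)` twice gives the same tree. The `depth` stored with each segment could drive line thickness, and segments with `depth == 1` are where "foliage" would go.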

I would claim that in a very important sense his work is the algorithm. For most artists, the art is in the physical work they produce. For Greenberg, there is a level of indirection -- which is, interestingly, one of the most fundamental concepts of computer science. For me, perhaps the algorithm is the artistic work! Greenberg's program is functional, not representational, and what people want to see is the art his programs produce. But code can be beautiful, too.

January 29, 2008 4:20 PM

A Broken Record?

Last night I attended a meeting with several CS colleagues, colleagues from Physics and Industrial Technology, representatives from the local company DISTek Integration, and representatives from National Instruments, which produces the programming environment LabVIEW. Part of the meeting was about LabVIEW and how students and faculty at my school use it. Our CS department does not use it at all, which is typical; the physicists and technologists use it to create apps for data acquisition and machine control.

Most of the meeting was much more interesting than tool talk and sales pitch, because it was about how to excite more kids and university students about IT careers and programming, and about how LabVIEW users work. Most of the folks who program in LabVIEW at DISTek are test engineers, not trained software developers. But the programming environment makes it possible for them to build surprisingly complex applications. As they grow their apps, and attack bigger problems because they can, the programs become unwieldy and become increasingly difficult to maintain and extend.

It turns out that program design matters. It turns out that software architecture matters. But these programmers aren't trained in writing software, and so they do much of their work with little or no understanding of how to structure their code, or refactor it into something more manageable.

These folks are engineers, not scientists, but I felt a deep sense of deja vu. Our conversations sounded a lot like what we discussed with physicists at the SECANT workshop to which I keep referring.

I think we have a great opportunity to work with the folks at DISTek to help their domain-specific programmers learn the software development skills they need to become more effective programmers. Working with them will require us to think about how to teach a focused software development curriculum for non-programmers. I think that this will be work worth doing as we encounter more and more folks, in more and diverse domains, who need that sort of software development education -- not a major or maybe even minor in CS -- in order to do what they do.

January 26, 2008 10:01 PM

Spirit of the Marathon

The day of my cold run ended with a viewing of the new film Spirit of the Marathon. Jon Dunham's film follows six people as they prepare for the 2005 Chicago Marathon. Two, Kenya's Daniel Njenga and the US's Deena Kastor, are among the best marathoners in the world. One is a 30-something guy with a PR of 3:11 hoping to qualify for Boston. Two are 20-something women training for their first marathons. The last is a 60-something guy with several 12:00/mile-pace marathons under his belt. Interspersed throughout coverage of the six runners are interviews with some of the sport's greats, including Frank Shorter and Bill Rodgers, who comment on the history of the marathon as event and on the human desire to challenge oneself and persevere.

I enjoyed the film very much. Every few minutes, someone in the film said or did something that put a smile on the face, or a tear in the eye, of every marathoner in the room. We knew just what the person was doing, thinking, feeling; we had done the same. The chill of a dark morning just before a 20-miler. The deep disappointment of an injury that means the end of a goal. The vacillation between doubt and confidence as goals are met and new challenges arise.

Some of the lines were memorable. A lot of folks chuckled out loud when the 60-something guy said, "The only runner's high I've ever felt is when I stop running." People talk about a runner's high, but it's not all that common. Each mile is work, and most days we merely manage to reach the end. I've certainly had great runs, and written about some of them here, but those days are rare and less euphoric than steady. Unfortunately, new runners expect that they should feel a runner's high at some point, and when they don't they think something is wrong with them. There's nothing wrong with you. Just keep running.

My personal favorite line was spoken, I think, by Deena Kastor, 2004 Olympic marathon bronze medalist. She said that one of the great allures of running a marathon is the unknown. You work hard, you prepare, you are ready for the race of your life. Yet despite all of the preparation, all the hard work, you never know how your body or mind will react on The Day. That is the moment of challenge.

Indeed, this is the ultimate challenge of a marathon. The distance leaves your body with no margin of error, so the marathoner is always teetering at the end of his or her physical capabilities -- and mental energy. We middle- and back-of-the-packers may think that the champion runners are different from us, but they aren't. When Daniel Njenga made the final turn of the Chicago Marathon and faced the last grueling meters of the race, desperately hoping to find a kick that would carry him across the finish line -- he came up empty. Spent, sore, tired. His dream was beyond his reach. But he kept running, pushing his mind and legs to take the last steps.

I've made that last turn in Chicago and felt that same disappointment. I've struggled to push my legs through those last grueling meters of the course. My dream wasn't Njenga's, but it meant the same to me as his did to him.

In other sports, we can share the dreams of the world's best, but we compete in different arenas. In the marathon, we run the same courses, on the same crisp mornings, only minutes (and hours...) apart.

I don't know if non-runners will enjoy Spirit of the Marathon as much as the runners in the audience Thursday night did. If you think running is boring or hard, this film may not change your mind. If you think marathoners are crazy to attempt the distance even as they know it will push them beyond their limits, then you may see this film as supporting evidence, not inspiration. As a runner, I know the exhilaration of the challenge, and watching six runners challenge themselves and show us what they felt along the way was pretty inspiring.

Oh, and the marathon doesn't push me beyond my limits. It helps me to find my limits, and push them outward.

January 25, 2008 2:07 PM

Running, Programming, and Tools

I enjoyed a brisk 5-mile run outdoors yesterday morning. That isn't much to write about, except that yesterday morning the temperature dipped to an all-time record low for January 24 here, bottoming out at -29° Fahrenheit. (All together now: Here's your sign.) At least the wind didn't make it feel much colder than that.

The thing is, I did enjoy the run. I stayed plenty warm, thanks to my clothing and the physical act of running. First, I threw on a layer or two more than usual. Second, my wife gave me a couple of pieces of new gear for Christmas: a pair of fleece running mittens with a second, wind-resistant outer layer, and an ultra-warm headband that I wore under my usual thermal hat. My fingers and toes have always been my weak spot in the cold, and for the first time ever in very cold weather, my fingers didn't get cold at all. My attire did the job, and the new gear worked just as I had hoped.

In running, as in programming, good tools make all the difference. I really liked Jason Marshall's take on this in a recent Something to Say:

There's an old saying, "A good craftsman never blames his tools." Many people take this to mean "Don't make excuses," or even, "Real men don't whine when their tools break." But I take it to mean, "A good craftsperson does not abide inferior tools."

I'm teaching a course on Unix shell scripting the first five weeks of this semester, and tool-building is central to the Unix philosophy. I hope that students see that they never have to abide inferior tools, or even okay tools that do not meet their needs. With primitive Unix commands, pipelines, I/O redirection, a little sed and awk, and the more general programming language of bash, they can do so much to customize their environment so that it meets their needs. If the shell isn't enough, they can use a general-purpose programming language. Progress depends on the creation of better tools.

I like to build software tools for myself. I'm not equipped to make my own running gear, though, and being, um, careful with my money means that I am sometimes slow to buy the more expensive item. But after running 7500 miles over the last four years, I've slowly realized that I'm enough of a runner to use better gear. A few experiences like yesterday morning's make it easier to purchase the right equipment for the job. Learning shell scripting, or a better programming language, can have the same effect on a programmer.

January 24, 2008 4:18 PM

The Stars Trust Me

My horoscope says so:

Thursday, January 24

Scorpio (October 24-November 22) -- You are smart enough to realize meeting force with force will only result in non-productive developments. To your credit, you will turn volatile matters around with wisdom, consideration, and gentleness.

Now, I may not really be smart enough, or wise enough, or even gentle enough. But on days like today it is good to hear such advice. Managing a team, a faculty, or a class involves a lot of relationships and a lot of personalities. Using wisdom, consideration, and gentleness is usually a more effective way to deal with unexpected conflicts than responding in kind or with brute force.

Some days, my horoscope fits my situation perfectly. Today is one. But I promise not to turn to the zodiac for future blogging inspiration, unless it delivers a similarly general piece of advice.

January 24, 2008 6:39 AM

More on Computational Simulation, Programming, and the Scientific Method

As I was running through some very cold, snow-covered streets, it occurred to me that my recent post on James Quirk's AMRITA system neglected to highlight one of the more interesting elements of Quirk's discussion: Computational scientists have little or no incentive to become better programmers, because research papers are the currency of their disciplines. Publications earn tenure and promotion, not to mention street cred in the profession. Code is viewed by most as merely a means to an end, an ephemeral product on the way to a citation.

What I take from Quirk's paper is that code isn't -- or shouldn't be -- ephemeral, or only a means to an end. It is the experiment and the source of data on which scientific claims rest. As I thought more about the paper I began to wonder, can computational scientists do better science if they become better programmers? Even more to the point, will it become essential for a computational scientist to be a good programmer just to do the science of the future? That's certainly what I heard some of the scientists at the SECANT workshop saying.

While googling to find a link to Quirk's article for my entry (Google is the new grep. TM), I found the paper Computational Simulations and the Scientific Method (pdf), by Bil Kleb and Bill Wood. They take the programming-and-science angle in a neat software direction, suggesting that

- the creators of a new simulation technique should publish unit tests that specify the technique's intended behavior, and

- the developers of scientific simulation code for a given technique use its unit tests to demonstrate that their component correctly implements the technique.

Publishing a test fixture offers several potential benefits, including:

- a way to communicate a technique or algorithm better

- a way to share the required functionality and performance features of an implementation

- a way to improve repeatability of computational experiments, by ensuring that scientists using the same technique are actually getting the same output from their component modules

- a way to improve comparison of different experiments

- a way to improve verification and validation of experiments

These are not about programming or software development; they are about a way to do science.
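As a rough illustration of what a published test fixture might look like, here is a minimal sketch. It is not taken from the Kleb and Wood paper. The technique (the trapezoid rule for numerical integration), the function names `check_trapezoid_spec` and `trapezoid`, and the tolerances are all assumptions made for the example. The fixture plays the role of the published tests that specify intended behavior; `trapezoid` plays the role of one developer's component.

```python
import math


def check_trapezoid_spec(integrate):
    """Hypothetical published fixture for a trapezoid-rule integrator.

    `integrate(f, a, b, n)` should approximate the integral of f on
    [a, b] using n equal intervals.
    """
    # Property 1: the rule is exact for linear integrands.
    # The integral of 2x + 1 on [0, 1] is 2.
    assert math.isclose(integrate(lambda x: 2 * x + 1, 0.0, 1.0, 4), 2.0)

    # Property 2: the rule is second-order accurate, so doubling the
    # number of intervals should cut the error by a factor of about 4.
    exact = 2.0  # integral of sin(x) on [0, pi]
    err_coarse = abs(integrate(math.sin, 0.0, math.pi, 16) - exact)
    err_fine = abs(integrate(math.sin, 0.0, math.pi, 32) - exact)
    assert 3.5 < err_coarse / err_fine < 4.5
    return True


def trapezoid(f, a, b, n):
    """One implementation of the technique, checked against the fixture."""
    h = (b - a) / n
    total = 0.5 * (f(a) + f(b))
    for i in range(1, n):
        total += f(a + i * h)
    return total * h


check_trapezoid_spec(trapezoid)
```

Two groups that both pass `check_trapezoid_spec` have some evidence that their components behave alike, which is the repeatability benefit in the list above. A component that implements a different rule, such as a left Riemann sum, fails the first property.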

This is a really neat connection between (agile) software development and doing science. The idea is not necessarily new to folks in the agile software community. Some of these folks speak of test-driven development in terms of being a "more scientific" way to write code, and agile developers of all flavors believe deeply in the observation/feedback cycle. But I didn't know that computational scientists were talking this way, too.

After reading the Kleb and Wood paper, I was not surprised to learn that Bil has been involved in the Agile 200? conferences over the years. I somehow missed the 2003 IEEE Software article that he and Wood co-wrote on XP and scientific research and so now have something new to read.

I really like the way that Quirk and Kleb & Wood talk about communication and its role in the practice of science. It's refreshing and heartening.

January 23, 2008 11:15 AM

MetaBlog: Good News, No News

One piece of good news from the past week: My permalinks should work now! Our college web server is once again behaving as it should, which means that http://www.cs.uni.edu/~wallingf/blog/ will not redirect to a http://cns2.uni.edu/ URL. This means that my permalinks, which are in the www.cs.uni.edu domain, will once again work. This makes me happy, and I hope that it makes it easier for folks to link directly to articles that they discuss in their own blogs. There may still be a problem with the category pages, but the sysadmins should have that fixed soon.

Now for that Bloglines issue... I haven't had much luck getting help from the Bloglines team, but I'll keep trying.

January 22, 2008 4:37 PM

Busy Days, Computational Science

Some days, I want to write but don't have anything to say. The start of the semester often finds me too busy doing things to do anything else. Plowing through the arcane details of Unix basic regular expressions that I tend not to use very often is perhaps the most interesting thing I've been doing.

Over the weekend, I did have a chance to read this paper by James Quirk, a computational scientist who has built a sophisticated simulation-and-documentation system called AMRITA. (You can download the paper as a PDF from his web site, by following About AMRITA and clicking on the link "Computational Science: Same Old Silence, Same Old Mistakes".) Quirk's system supports a writing style that interleaves code and commentary in the spirit of literate programming using the concept of a program fold, which at one level is a way to specify a different processor for each item in a tree of structured text items. The AMRITA project is an ambitious mixture of (1) demonstrating a new way to communicate computational science results and (2) arguing for software standards that make it possible for computational scientists to examine one another's work effectively. The latter point is essential if computational science is to behave like science, yet the complexity of most simulation programs almost precludes examination, replication, and experimentation with the ideas they implement.

Much of what Quirk says about scientists as programmers meshes with what I wrote in my reports on November's SECANT workshop. The paragraph that made me sit up, though, was this lead-in to his argument:

The AMR simulation shown in Figure 1 was computed July 1990.... It took just over 12 hours to run on a Sun SPARCstation 1. In 2003 it can be run on an IBM T30 laptop in a shade over two minutes.

It is sobering occasionally to see the advances in processors and other hardware presented in such concrete terms. I remember programming on a Sun SPARCstation 1 back in the early '90s, and how fast it seemed! By 2003 a laptop could perform more than 300 times faster on a data-intensive numeric simulation. How much faster still by 2008?
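The arithmetic behind that figure is quick to check. The sketch below rounds Quirk's "just over 12 hours" and "a shade over two minutes" to exactly 12 hours and 2 minutes, and treats the gap between the two runs as 13 whole years; both are simplifying assumptions, and the implied doubling time is only a back-of-the-envelope figure for this one simulation.

```python
import math

# Run times from the quoted passage, rounded (assumption).
old_minutes = 12 * 60   # Sun SPARCstation 1, July 1990
new_minutes = 2         # IBM T30 laptop, 2003
speedup = old_minutes / new_minutes

# If the speedup came from steady doubling, how often did it double?
years = 2003 - 1990     # assumption: whole calendar years
doublings = math.log2(speedup)
months_per_doubling = years * 12 / doublings

print(f"speedup: about {speedup:.0f}x")
print(f"implied doubling time: about {months_per_doubling:.1f} months")
```

With these rounded inputs the ratio is 360, which is where "more than 300 times faster" comes from, and the implied doubling time works out to roughly a year and a half.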

Quirk is interested in what this means for the conduct of computational science. "What should the computational science community be doing over and above scaling up the sizes of the problems it computes?" That is the heart of his paper, and much of the motivation behind AMRITA.

January 17, 2008 5:24 PM

A Tale of Three Days

Yesterday was the sort of day that makes my CS friends and colleagues ask if I am crazy for being department head. It was the third day of classes this semester. A dozen students came by, for advising on course selection, for help switching sections, and the like. I produced a schedule mapping graduate assistants to open lab hours, ran it past the GAs and the faculty, and then distributed it. The phone rang repeatedly, with calls from other offices on campus and from off-campus folks asking questions about scholarship application deadlines.

Every time I started a new train of thought, an interrupt occurred. Context switch, new process, and return. Each task was, individually, just fine. In fact, I enjoy talking to students, new and returning, and helping them make choices about their studies. But little or no computer science happened.

Today was my teaching day, so I got to spend plenty of time thinking about shell scripts. That's not Computer Science, but it's computer science, and as a hacker I loved it. Of course, yesterday's interrupt-fest cut into my prep time enough that I didn't feel as prepared for class as I like to be. But I got to think about software tools, writing code, duplication, abstraction -- many of the things that make me a happy computer scientist.

Tomorrow I travel to Des Moines to help select the winners of the 2008 Prometheus Awards, the Academy Awards of IT in my state. Four hours on the road. A great outreach activity, an important role for my university, and conversation with some sharp, interesting people who are involved in my discipline's industry -- but little or no computer science.

The weekend will be here soon.

January 16, 2008 12:02 PM

An Open-Source Repository for Course Projects

I don't write many entries for the purpose of passing on a link, but I am making an exception for the Repository for Open Software Education (ROSE), being hosted by a group at North Carolina State University that includes Laurie Williams. You know I am a big fan of project-based courses, and one of the major impediments to faculty teaching courses this way is creating suitable projects. For example, every time I teach compilers, I face the task of choosing or creating a programming language for students to use as their source. I like to use a language that is at least a little different than languages I've used in the past, for a couple of reasons, and that is hard work.

For more generic project courses in software engineering, the problem is compounded by the fact that you may want your students to work on an existing system, so that they can encounter issues raised by a larger system than they might write from scratch themselves. But where will such large software come from? Sites like SourceForge offer plenty of systems, but they come at so many levels of completeness, complexity, documentation, and accessibility. Finding projects suitable for a one-semester undergraduate course in the morass is daunting.

ROSE aims to host open-source projects that are of suitable scope and can grow slowly as different project teams extend them. Being targeted at educators, the repository aims to record information about requirements, design, and testing that are often missing or inscrutable in other open-source projects. At this point, ROSE contains only one project larger than 5K lines of code, but that will change as others contribute their projects to the repository.

As Laurie noted in her announcement of ROSE, for a few years now the CS education community has had a Nifty Assignments repository, which offers instructors a set of fun, challenging, and battle-tested programming assignments to use in lower-division courses. ROSE will host larger open-source projects for use in a variety of ways. It is a welcome addition.

January 14, 2008 2:25 PM

Planning and the Project Course

After "worrying out loud" in a recent entry, I suppose I should report that our faculty retreat went well. We talked about several different topics over the course of the day, especially long-term goals, outcomes assessment, and tactics for the next few months. The biggest part of our goals discussion related to broadening our reach to more students who are not and may never want to be CS majors. That includes science majors but also social science, business, humanities, and education students.

While discussing how to determine whether or not our courses and programs of study were accomplishing what we want them to accomplish, we had what was in many ways the most interesting discussion of the day. It dealt with our "capstone" project course.

In all of the CS majors we offer, each student is required to complete a "project course" in one of the areas of study we offer. This will be the third course the student takes in that area, which is one way that we try to require students to gain some depth in at least one area of computing. When we added this requirement to our programs, the primary goal was to give students a chance to work in teams on a large piece of software, something bigger than they'd worked on in previous courses and bigger than one person could complete alone.

Some of our project courses are "content-heavy", in that they introduce new topical content while students are working on the project. The compilers course is an example of such a course; "Real-Time Embedded Systems" is another. Others do not introduce much new content and focus on the team project experience. "Projects in Systems" (for the OS/networking crowd) and "Projects in Information Science" (for the database/IR crowd) are examples. Over the years, as we've broadened our faculty and the areas we teach, we've added new project courses to broaden the type of experience offered as well. In some of these courses, code is not the primary deliverable. For example, in "Software Testing", students might develop a testing environment, a suite of test plans, and documentation; in "User Interface Design", students focus on developing a front end to an existing system or to a lighter proof-of-concept back end that they write. Some of these courses implement looser versions of the original idea in other ways, too. My compiler students usually work in pairs or threes, rather than the four or five that we imagined when we designed this part of the curriculum over a decade ago.

Our outcomes assessment discussion turned quickly to the nature of a project course and in particular the diversity we now offer under this banner. We can't write outcomes for the project requirement that refer to code as a primary deliverable if, in fact, several courses do not require that of students. Well, we could, but then we would have to change how we teach the courses -- perhaps drastically. The question was, is code an essential outcome of this part of our curriculum?

We faced similar questions as we explored the other elements of diversity. How much new content should a project introduce? Must students write prose, in the form of documentation, etc.? Should they give oral presentations? If so, should these be public, or is an internal presentation to the class or instructor sufficient? What about the structured demos that our interface design students give as a part of an end-of-the-term open house?

I enjoyed listening to all of my colleagues describe their courses in more detail than I had heard in a while. After our discussion, we decided for the most part to preserve the rich ecology of our project course landscape. Test plans and UIs are okay in place of code in certain courses; the essential outcome is that students be expected to produce multiple deliverables across the life of the project. We also found some common themes that we could agree on, even if it meant tweaking our courses. For example, whatever kind of presentation our students give at the end of the project, it should be open to the public and allow public questioning. We will be writing these outcomes up more formally as we proceed this semester and use them as we evaluate the efficacy of our curriculum.

I was impressed with the diversity of ideas in practice in our project courses. Perhaps the results of this discussion were interesting mostly because we have not had this sort of conversation in a long time, at least not as a whole faculty. It's funny, but "shared knowledge" isn't always what we think it is. Sometimes we don't share as much as we think we do, at least on the surface. When we dig deeper, we can find common themes and also fundamental differences, which we can then explore explicitly and in the context of the shared knowledge. It was neat to see how each of us learned a little something new about the goals of the project course and left the conversation with an idea for improving our own courses. My compiler students certainly don't write enough technical prose in my course, certainly not as much or as structured as students in some of the other project courses. I can make my course stronger, and more consistent with the other options, by changing how I teach the course next time, and what I require of the class.

Our retreat didn't answer all my questions about the direction of the department or our strategic and tactical plans. Only in the fantasy of a manager could it! My job now is to take what we learned and decided about ourselves that day, help craft from that a coherent plan of action for the department, and begin to take concrete actions to move us in the direction we want to go. I hope that, as in most other endeavors, we will do some things, learn from the feedback, and adjust course as we go.

Posted by Eugene Wallingford | Permalink | Categories: Managing and Leading, Software Development, Teaching and Learning

January 09, 2008 4:31 PM

Follow Up to Recent Entry on Intro Courses

After writing my recent entry on some ideas about CS intro courses gleaned from a few of the columns and editorials in the latest edition of inroads, I had a few stray thoughts that seem worth expressing.

On Debate

I always feel a little uneasy when I write a piece like that. It comments specifically on an article written by a CS colleague, and in particular criticizes some of the ideas expressed in the article. Even when I include disclaimers to the effect that I really like an article, or respect the author's work, there is a chance that the author will take offense. That happens in the case even of more substantial written works, and I think that the looser, more "thinking out loud" nature of a blog only compounds the chance of a misunderstanding.

I certainly mean no offense when I write such pieces. Almost always, I am grateful for the author having triggered me to think and write something down. And always I intend for my articles to play a part in a healthy academic debate on an issue that both the author and I think is important.

Without healthy discussion of what we do -- and sometimes even contentious debate -- how can we hope to know whether we are on a good path? Or get onto a good path in the first place? Debate, or the friendlier "discussion", is one of the best ways we have for getting feedback on our thinking and for improving our ideas.

I like healthy discussion, even contentious debate, because I don't mean or take personal offense. I've always liked a good hearty discussion, but still I am indebted to my graduate advisor for modeling how academics approach ideas. In meetings of our research group and in seminars, he was often like a bulldog, challenging claims and offering counterarguments. At times, he could be contentious, and often the room became a lot hotter as he, I, and a couple of likewise combative fellow grad students went at it.

But those discussions were always about ideas. When the meeting was up, the discussion was over, and we all went back to being friends and colleagues. My advisor never held a grudge, even if I spent an hour telling him he was wrong, wrong, wrong. He enjoyed the debate, and knew that we all were getting better from the discussion.

Sometimes we have to work not to take personal offense, and to express our thoughts in a way that won't cause offense to a reasonable person. Sometimes, we even have to adjust our approach to account for the people in the room. But the work is worth it, and debate is necessary.

On Exciting Bright Minds

When deciding how to teach our intro courses, we need to ask several questions. What works best for our student population overall? What helps our weakest students learn as much of value as they can? What helps our strongest students see the exciting ideas of computing deeply and come to love the power they afford us?

My dream is that we can offer all of our students, but especially our brightest ones, the sort of excitement that Jon Bentley felt, as he writes in his Communications of the ACM 50th anniversary piece In the realm of insight and creativity. The pages of each issue ignited a young mind that helped to define our discipline. Maybe our courses can do the same.

On Simplicity

In my article, I withheld comment on one of the "language war" elements of Manaris's argument, namely the argument for using a simpler language. In the editorial spirit of his article, I will share my own opinions, based almost wholly in personal preference and anecdote.

I remain a strong believer that OOP can be the foundation of a solid CS curriculum, but Java and certainly C++ are not the best vehicles for learning to program. I agree with Manaris that a conceptually simpler language is preferred. He cites Alan Kay, whom regular readers here know I cite frequently. I agree with Bill and Alan! Then again, Smalltalk or a variant thereof might be an even better choice for CS1 than Python. It has a simpler grammar, no goofy indentation rules, no strange __this__ operator, ... but it is quite different from most mainstream languages in feel, style, and environment. Scheme? Can't get much simpler than that. We have learned, though, that simplicity itself can create a different sort of overload difficulty for students while learning. Many folks are successful teaching Scheme early, and I wish we had more evidence on Smalltalk's use in CS 1.

Conceptual simplicity is a good thing, but it is but one of several forces at play in the decision.

For what it's worth, as I mentioned in the same entry, we will be offering a Python-based media computation CS 1 course this semester. I am eager to see how it goes, both on its own and in comparison to the Java-based media comp CS 1 we taught once before.

On Patterns

Finally, Manaris quotes Alan Kay on the non-obvious ideas that can trip up a novice programmer, some of which are

... like the concept of the arch in building design: very hard to discover, if you don't already know them.

I do not advocate hanging novices up on non-obvious ideas, but it occurs to me that instruction driven by elementary patterns would address this difficulty head-on. Sometimes, a concept we think obvious is not so to a student, and other times we want students to encounter a non-obvious idea as a part of their growth. Patterns are all about communicating such ideas, in context, with explanation of some of the thinking that underlies their utility.

January 08, 2008 5:05 PM

Admin Pushing Teaching to the Side

I have always liked the week before classes start for a new semester. There is a freshness to a new classroom of students, a new group of minds, a new set of lectures and assignments. Of course, most of these aren't really new. Many of my students this semester will have had me for class before, and most semesters I teach a course I've taught before, reusing at least some of the materials and ideas from previous offerings. Yet the combination is fresh, and there is a sense of possibility. I liked this feeling as a student, and I like it as a prof. It is one of the main reasons that I have always preferred a quarter system to a semester system: more new beginnings.

Since becoming department head, the joy is muted somewhat. For one thing, I teach one course instead of three, and instead of taking five. Another is that this first week is full of administrivia. There are graduate assistantship assignments to prepare, lab schedules to produce, last-chance registration sessions to run. Paperwork to be completed. These aren't the sort of tasks that can be easily automated or delegated or shoved aside. So they capture mindshare -- and time.

This week I have had two other admin-related items on my to-do list. First is an all-day faculty retreat my department is having later this week. The faculty actually chose to get together for what is in effect an extended meeting, to discuss the sort of issues that can't be discussed very easily during periodic meetings during the semester, which are both too short for deep discussion and too much dominated by short-term demands and deadlines. As strange as it sounds, I am looking forward to the opportunity to talk with my colleagues about the future of our department and about some concrete next actions we can take to move in the desired direction. There is always a chance that retreats like this can fall flat, and I bear some responsibility in trying to avoid that outcome, but as a group I think we can chart a strong course. One good side effect is that we will go off campus for a day and get away from the same old buildings and rooms that will fill our senses for much of the next sixteen weeks.

Second is the dean's announcement of my third-year review. Department heads here are reviewed periodically, typically every five years. I came into this position after a couple of less-than-ideal experiences for most of the faculty, so I am on a 3-year term. This will be similar to the traditional end-of-the-term student evaluations, only done by faculty of an administrator. In some ways, faculty can be much sharper critics than students. They have a lot of experience and a lot of expectations about how a department should be run. They are less likely to "be polite" out of habits learned as a child. I've been a faculty member and do recall how picky I was at times. And this evaluation will drag out for longer than a few minutes at the end of one class period, so I have many opportunities to take a big risk inadvertently. I'm not likely to pander, though; that's not my style.

I'm not all that worried. The summative part of the evaluation -- the part that judges how well I have done the job I'm assigned to do -- is an essential part of the dean determining whether he would like for me to continue. While it's rarely fun to receive criticism, it's part of life. I care what the faculty think about my performance so far, flawed as we all know it's been. Their feedback will play a large role in my determining whether I would like for me to continue in this capacity. The formative part of the evaluation -- the part that gives me feedback on how I can do my job better -- is actually something I look forward to. Participating in writers' workshops at PLoP long ago helped me to appreciate the value of suggestions for improvement. Sometimes they merely confirm what we already suspect, and that is valuable. Other times they communicate a possible incremental improvement, and that is valuable. At other times still they open doors that we did not even know were available, and that is really valuable.

I just hope that this isn't the sort of finding that comes out of the evaluation. Though I suppose that that would be valuable in its own way!

Posted by Eugene Wallingford | Permalink | Categories: Managing and Leading, Personal, Teaching and Learning

January 07, 2008 7:07 PM

Teaching Compilers by Example

The most recent issue of inroads contained more than just some articles on how to introduce computer science to majors and non-majors alike. It also contained an experience report at the intersection of topics I've discussed recently: a compiler course using a sequence of examples.

José de Oliveira Guimarães describes a course he has taught annually since 2002. In this course, he presents a sequence of ten compilers, each of which introduces a new idea or ideas beyond the previous. The "base case" isn't a full compiler but rather a parser for a Scheme-like expression language. Students see these examples -- full code for compilers that can be executed -- before they learn any theory behind the techniques they embody. All ten are implemented from scratch, using recursive descent for parsing.
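To make the "base case" concrete, here is a minimal sketch of what a recursive descent parser for a Scheme-like expression language can look like. It is not de Oliveira Guimarães's code, and I am not claiming it matches his first example; the function names (tokenize, parse, parse_program) and the choice to represent a parsed expression as nested Python lists are my own assumptions for illustration.

```python
import re


def tokenize(text):
    """Split source text into parentheses and atoms (numbers, symbols)."""
    return re.findall(r"[()]|[^\s()]+", text)


def atom(token):
    """Turn a token into an int if it looks like one, else keep it as a symbol."""
    try:
        return int(token)
    except ValueError:
        return token


def parse(tokens):
    """Parse one expression from the front of the token list, consuming it.

    Grammar (assumed for this sketch):
        expr ::= atom | "(" expr* ")"
    The function calls itself once for each nested expression.
    """
    if not tokens:
        raise SyntaxError("unexpected end of input")
    token = tokens.pop(0)
    if token == "(":
        items = []
        while tokens and tokens[0] != ")":
            items.append(parse(tokens))
        if not tokens:
            raise SyntaxError("missing ')'")
        tokens.pop(0)  # discard the closing ")"
        return items
    if token == ")":
        raise SyntaxError("unexpected ')'")
    return atom(token)


def parse_program(text):
    """Parse exactly one expression and reject any leftover input."""
    tokens = tokenize(text)
    tree = parse(tokens)
    if tokens:
        raise SyntaxError("extra input after expression")
    return tree
```

Calling parse_program("(+ 1 (* 2 3))") yields a nested list that mirrors the structure of the expression. The appeal for a first example is that the shape of the code follows the shape of the grammar, so students can read it before they have seen any parsing theory.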

If I understand the paper correctly, de Oliveira Guimarães teaches these examples, followed by five examples implemented using a parser generator, in the first eight weeks of a sixteen-week course. The second half of the course teaches the theory that underlies compiler construction. This seems to be a critical feature of a by-example course: The students study examples first and only then learn theory -- but now in the context of the code that they have already seen.

One thing that makes this course different than mine is that students do not implement a compiler during this semester. Instead, they take a second course in which they do a traditional compiler project. I don't have this luxury -- I'm lucky to be able to teach one compiler course at all, let alone a two-semester sequence. I also feel so strongly about the power of students working on a major project while learning about compilers that I'm not sure how I feel about the examples-and-theory first course. But what a neat idea! I look forward to studying de Oliveira Guimarães's code in order to understand his course better. (He has all of his code on-line, along with the beginnings of a descriptive "textbook" for the course, translated into English.)

In some ways, I suppose that what I do here bears some resemblance to this approach.

In the first semester, students study programming languages using an interpreter-based approach, which gives them a low-level understanding of techniques for processing programs. They see several small program processors and implement a few others, in order to understand these techniques. They then take the compiler course, where we "go deep" both in implementation and theory. But there is no question that their compiler project requires some of the theory we study that semester.

At the beginning of the second semester, before students begin work on their project and before I introduce any theory of lexical or syntax analysis, I spend two sessions presenting a whole compiler. This compiler is my implementation of a variant of a compiler described by Fischer, LeBlanc, and Cytron. I present this example first in order for students to see all of the phases of a compiler working in concert to translate a program. Because it comes so early in the semester, it is necessarily a simple example, but it does give me and the students a reference point for most of what we see later in the term.

This is only one example, but I work incremental refinements to it as we go along. As we learn new techniques for each phase of the compiler, I try to plug a new component into the reference compiler that demonstrates the theory we have just learned. For example, my initial example uses an ad hoc scanner and a recursive descent parser; later, I plug in a regular expression-based scanner and a table-driven parser. Again, the language compiled by the reference program is pretty simple, so these new components are still quite simple, but I hope they give the students some grounding before they implement more complex versions in their project.
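The "plug in a new component" idea can be sketched with two scanners that share one interface. What follows is a hypothetical illustration in Python, not the reference compiler described above and not code from Fischer, LeBlanc, and Cytron; the token kinds (NUM, ID, OP), the tiny token set, and the function names are all assumptions made for the sketch.

```python
import re
import string

DIGITS = string.digits
LETTERS = string.ascii_letters
OPERATORS = "+-*/=()"


def adhoc_scan(text):
    """Ad hoc scanner: walk the characters one at a time by hand."""
    tokens, i = [], 0
    while i < len(text):
        ch = text[i]
        if ch.isspace():
            i += 1
        elif ch in DIGITS:
            start = i
            while i < len(text) and text[i] in DIGITS:
                i += 1
            tokens.append(("NUM", text[start:i]))
        elif ch in LETTERS:
            start = i
            while i < len(text) and (text[i] in LETTERS or text[i] in DIGITS):
                i += 1
            tokens.append(("ID", text[start:i]))
        elif ch in OPERATORS:
            tokens.append(("OP", ch))
            i += 1
        else:
            raise SyntaxError(f"unexpected character {ch!r} at position {i}")
    return tokens


# One named group per token kind; leading whitespace is skipped by \s*.
TOKEN_RE = re.compile(
    r"\s*(?:(?P<NUM>[0-9]+)|(?P<ID>[A-Za-z][A-Za-z0-9]*)|(?P<OP>[-+*/=()]))"
)


def regex_scan(text):
    """Regular expression-based scanner with the same interface as adhoc_scan."""
    text = text.rstrip()  # so trailing whitespace is not reported as an error
    tokens, pos = [], 0
    while pos < len(text):
        match = TOKEN_RE.match(text, pos)
        if match is None:
            raise SyntaxError(f"unexpected input at position {pos}")
        kind = match.lastgroup  # name of the group that matched
        tokens.append((kind, match.group(kind)))
        pos = match.end()
    return tokens
```

Because both functions take a string and return the same list of (kind, text) pairs, a parser written against one works unchanged with the other. That is the property that lets a component be swapped out after the class has studied the theory behind its replacement.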

All that said, de Oliveira Guimarães's approach takes the idea of examples to another level. I look forward to digging into it further and seeing what I can learn from it.

January 03, 2008 3:25 PM

New Year, Old Topics

I have had a more relaxing break than last year. With no traveling and only an occasional hour or two in my office knocking around, not working, my mind has had a chance to clear out a bit. This is a good way to start the year.

I've even managed not to do much professional reading these past two weeks. About all I've read is a stack of old MacWorld magazines. Every time I or my department buys a Mac, I receive a complimentary 6-month subscription. I like to read about and try out all sorts of software, and this magazine is full of links. But I don't take time to read all of the issues as they roll in, which explains why I still had a couple of issues from late 2005 in my stack! (My favorite new app from this expedition is CocoThumbX.)

New Year's Eve did bring in my mail the latest issue of inroads, the periodic bulletin of SIGCSE. Rather than add it to my stack of things to read, I decided to browse through it while watching college football on TV. I found a few items of value to my work. In the first twenty pages or so, I encountered several short articles that set the stage for ongoing discussion in the new year of issues that have been capturing mind share among CS educators for the last year or so.

(Unfortunately, the latest issue of inroads is not yet available in the ACM Digital Library, so I'll have to add later the links to the articles discussed below.)

First was Bill Manaris's Dropping CS Enrollments: Or The Emperor's New Clothes, which claims that the drop in CS enrollments may well be a result of the switch to C++, Java, and object-oriented programming in introductory courses. He includes a form of disclaimer late in his article by saying that his argument "is about the possibility that there is no absolute best language/paradigm for CS1". This is now an almost standard disclaimer in papers about the choice of language and paradigm for introductory CS courses, perhaps as an attempt to avoid the contention of the debates that swirl around the topic. But the heart of Manaris's article is a claim that what most of us do in CS1 these days is not good, disclaimer notwithstanding. That's okay; ideas are meant to be expressed, examined, and evaluated.

Manaris backs his position from an interesting angle: the use of usability to judge programming languages. He quotes one of Jakob Nielsen's papers:

On the Web, usability is a necessary condition for survival. If a website is difficult to use, people leave. If the homepage fails to clearly state what a company offers and what users can do on the site, people leave. If users get lost on a website, they leave. If a website's information is hard to read or doesn't answer users' key questions, they leave. Note a pattern here? There's no such thing as a user reading a website manual or otherwise spending much time trying to figure out an interface. There are plenty of other websites available; leaving is the first line of defense when users encounter a difficulty.

Perhaps usability is a necessary condition for CS1 to retain students? Maybe students approach their courses and majors in the same way? They certainly have many choices, and if our first course raises unexpected difficulties, maybe students will exercise their choices.

Manaris moves quickly from this notion to the idea that we should consider the usability of the programming language we adopt for our intro courses. The idea of studying how novices learn a language and using that knowledge to inform language design and choice isn't new. The psychology of programming folks have been asking these questions for many years, and Brad Myers's research group at CMU has published widely in this area. But Manaris is right that not enough folks take this kind of research into account when we think about CS1-2. And when we do discuss the ideas of usability and learnability, we usually rely on our own biases and interests as "evidence".

Relying more on usability studies of programming languages would be a good thing. But they need to be real studies, not just more of the same old "here's why I think my favorite language (or paradigm) is best..." Unfortunately, both of the examples Manaris gives in his article are the sort we see too often in SIGCSE circles: anecdotal reports of personal experiences, which are unavoidably biased toward one person's knowledge and preferences. In one, he tells of his experience coding some task in languages A and B and reports that he had "9 compiler errors, one semantic error, and one 'headache' error" in A. (Hmm, could A be Java?) But I wonder if even some of my CS1 students would encounter these same difficulties; the good ones can be pretty good.

In the other, a single evaluator collects data from his own experiences solving a simple task in several different programming environments. I believe in reporting quantitative results, but quantitative data from one individual's experience is of limited value. How many introductory CS1 students would feel the same? Or intro instructors? We are the ones who usually make claims about languages and paradigms based almost solely on our experiences and the experiences of like-minded colleagues. (Guess what? The folks I meet with at the OOPSLA educators' symposium find OOP to be a great way to teach CS1. Shocking!)

And we always need to keep in mind the difference between essential and gratuitous complexity. I am often reminded of Alan Kay's story about learning to play violin. Of course it's hard to learn. But the payoff is huge.

To be fair, Manaris is writing an editorial, not a research paper, so his examples can be taken as hints, not exact recommendations. When conducting usability studies of languages, we need to be sure to seek answers to several different questions. What works best for the general population of students? What helps the weakest students learn as much of value as they can? What helps the strongest students come to see and appreciate -- even love -- the deep ideas and power of our discipline?

Manaris closes his paper with an interesting claim:

In the minds of our beginning students, the programming language/paradigm we expose them to in CS1 is computer science.

A good CS education should help students overcome this limited mindset as soon as possible, but for students who are living through an introduction to computing, this limitation is reality. Most importantly, for students who never go beyond CS1 -- and this includes students who might have gone on but who have an unsatisfying experience and leave -- it is a reality that defines our discipline for much of the rest of the world.

This idea leads nicely into Henry Walker's column, What Image Do CS1/CS2 Present to Our Students? a few pages later in the issue. Walker contrasts the excitement many practitioners and instructors express about computing with the reality of most introductory courses for our students, which are often inward-looking and duller than they should be. We define our discipline for students in the paradigm and language we teach them, but also in many other ways: in the approach to programming we model, in the kinds of assignments we give, and in the kinds of exam questions we ask. We also define it in the ideas we expose them to. Is computing "just programming"? If that's all we show students, then for them it may well be. What ideas do we talk about in class? What activities do we ask students to do? How much creativity do we allow them?

CS1 can't be and do everything, but it should be something more than just a programming language and an arcane set of rules for formatting and commenting code.

Finally, this idea leads naturally into Owen Astrachan's Head in the Clouds only two pages later. In the last few years, Owen has become an evangelist for the position that computing education has to be grounded in real problems -- not the Towers of Hanoi, but problems that real people (not computer scientists) solve in the world as a part of doing their jobs and living their lives. This is a way to get out of the inward-looking mindset that dominates many of our intro courses -- by designing them to look outward at the world of problems just waiting to be attacked in a computational way.

Owen also has been railing against the level of discourse in which CS educators partake, the sort of discourse that asks "What language should I use in CS1?" rather than "How can I help my students use computing as a tool to solve problems?" In that sense, he may not have much interest in Manaris's article, though he may appreciate the fact that the article seeks to put our focus on how the students who take our courses learn a language, rather than on the language itself.

I think that we should still start from courses designed in a meaningful context. There is a lot of power in context, both for course design and for motivation. Besides, working from a problem-driven focus gives us an interesting opportunity for evaluating the effects of features such as a language's usability. Consider Mark Guzdial's media computation approach. His team has developed course materials for complete CS1 courses in two languages, Python and Java (which are, not coincidentally, two of the languages that Manaris discusses in his article). These materials have been developed by the creators of the approach, with equal care and concern for the success of students using the approach. Python is in many ways the simpler language, so it will be interesting to see whether students find one version of the course more attractive than the other. I have taught media comp in Java, as has a colleague, and this semester he will teach it using Python. While preparing for the semester, he has already commented on some of the ways in which Python "gets out of the way" and lets the class get down to the business of computing with images and sounds sooner. But that is early anecdote; what will students think and learn?

I hope that 2008 finds the CS education community asking the right questions and moving toward answers.