November 30, 2009 10:04 PM

Agile Themes: Organic Planning and the Cost of Change

My previous agile theme, on organic planning, has implications for how we design and implement solutions -- for making decisions that "stick". Derek Sivers recently used an apocryphal story about walkways to express a similar principle:

So when should you make business decisions? When you have the most information, when you're at your smartest: as late as possible.

My first thought was that this principle follows from adaptive planning, but that confuses causal order with temporal order. Sivers's conclusion assumes that we are "at our dumbest at the beginning, and at our smartest at the end". This is the same context in which organic planning applies. It is not always the context in which we work, but when it is, then late binding -- delaying lock-in -- is valuable.

One of the reasons I like to use dynamic programming languages is that they give me late binding in two dimensions: at programming time and at run time. When I'm coding in a domain where I'm not very smart at the outset and become smarter with experience, late binding at programming time seems to make me more productive. Allowing my programs to make decisions as late as possible means that I can imbue my code with the same sense of committing at the right time to an object or function, not sooner.
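Here is a minimal sketch of late binding at run time, in Python. The names `Circle`, `Square`, `Triangle`, and `total_area` are hypothetical, chosen only for illustration. The call `shape.area()` is not tied to any particular method until it executes, so the code commits to an object's behavior as late as possible.

```python
import math


class Circle:
    def __init__(self, radius):
        self.radius = radius

    def area(self):
        return math.pi * self.radius ** 2


class Square:
    def __init__(self, side):
        self.side = side

    def area(self):
        return self.side ** 2


def total_area(shapes):
    # Which area() runs is decided per object, at call time.
    return sum(shape.area() for shape in shapes)


class Triangle:
    # Added later; total_area needs no change to accept it.
    def __init__(self, base, height):
        self.base = base
        self.height = height

    def area(self):
        return 0.5 * self.base * self.height
```

`Triangle` arrives after `total_area` was written, yet `total_area([Square(2), Triangle(4, 3)])` works without touching the earlier code. That is the run-time half of the story; the programming-time half is that I never had to declare up front what a "shape" is.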

Implicit in this notion is that our designs and programs will change as we move forward, as we learn more. In the domain of new business ideas, where Sivers works, change and growth are almost unavoidable. Those of us who program in interesting new domains experience a similar pattern of learning and evolution. If change is going to happen, the question becomes, should we load our change into the beginning or end of our development process? Seth Godin knows the answer, whether you work in software, business, or any other creative endeavor:

You must thrash at the beginning, because thrashing at the beginning is cheap.

Some people object to the notion of change as "thrashing", because it sounds unskilled, unprofessional, even undignified. Godin uses the term to indicate the kind of frantic activity that some organizations undertake as they near a deadline and are trying desperately to finish a product that is worthy of delivery. In that context, "thrashing" is a great term. That sort of frantic activity is not wrong in and of itself -- it reflects the group's attempt to incorporate all that it has learned in the course of development. The problem is in the timing: when too many sticky decisions have been made, changing a product to incorporate what we have learned is expensive.

Rather than try to fight against the thrashing, let's instead move it to the beginning of the process, when change is less expensive and when we are still figuring out which of our decisions will stick over the long term. This is how reactive planning, change, and late binding can come together to make us more effective developers.

November 27, 2009 12:40 PM

Agile Themes: Organic Planning

When I encounter people who are skeptical about agile software development, one of the common concerns I hear is about the lack of planning and design in advance. How can we create software with a coherent design when we don't "really" design? I commented on this concern a while back when I mused about reactive planning in AI and software.

Reading last week, I ran across a passage from Lewis Mumford's book "The City in History" that brought to mind a similar trade-off between planning upfront and planning as a system evolves:

Organic planning does not begin with a preconceived goal; it moves from need to need, from opportunity to opportunity, in a series of adaptations that themselves become increasingly coherent and purposeful, so that they generate a complex final design, hardly less unified than a pre-formed geometric pattern.

One thing I like about this passage is its recognition that adaptive planning can produce something that is coherent and purposeful. Indeed, many of us prefer to live in places that have grown organically, with a minimal amount of planning and oversight to keep growth from going off-track. The result can still feel whole in a way that other city designs do not.

I know it is dangerous to extrapolate too casually from other domains into software development, because the analogy may not be a solid one. What this passage offers is something of an existence proof that adaptation through carefully reactive planning can produce solid designs in a domain that people know and understand. This may help skeptics to overcome their initial resistance to the idea of agile planning of software long enough to give it a try. The real proof comes on software projects -- and many of us have experienced that.

November 23, 2009 2:53 PM

Personality and Perfection

Ward Cunningham recently tweeted about his presentation at Ignite Portland last week. I enjoyed both his video and his slides.

Brian Marick has called Ward a "gentle humanist", which seems so apt. Ward's Ignite talk was about a personal transformation in his life, from driver to cyclist, but as is often the case he uncovers patterns and truths that transcend a single experience. I think that is why I always learn so much from him, whether he is talking about software or something else.

From this talk, we can learn something about change in habit, thinking, and behavior. Still, one nugget from the talk struck me as rather important for programmers practicing their craft:

Every bike has personality. Get to know lots of them. Don't search for perfection. Enjoy variety.

This is true about bikes and also true about programming languages. Each has a personality. When we know but one or two really well, we have missed out on much of what programming holds. When we approach a new language expecting perfection -- or, even worse, that it have the same strengths, weaknesses, and personality as one we already know -- we cripple our minds before we start.

When we get to know many languages personally, down to their personalities, we learn something important about "paradigms" and programming style: They are fluid concepts, not rigid categories. Labels like "OO" and "functional" are useful from some vantage points and exceedingly limiting from others. That is one of the truths underlying Anton van Straaten's koan about objects and closures.
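A small sketch shows how the labels blur. It assumes Python and two hypothetical counters, one written as a closure and one as a class. Both hide a piece of state behind a single operation, and a caller cannot tell them apart.

```python
def make_counter():
    count = 0

    def increment():
        nonlocal count  # the closure captures and updates `count`
        count += 1
        return count

    return increment


class Counter:
    def __init__(self):
        self._count = 0

    def __call__(self):
        # The object stores the same state in an attribute.
        self._count += 1
        return self._count


def count_three_times(counter):
    # Works with either implementation; only the behavior matters.
    return [counter() for _ in range(3)]
```

Whether we call the first "functional" and the second "OO" depends mostly on where we are standing when we look at them.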

We should not let our own limitations limit how we learn and use our languages -- or our bikes.

November 21, 2009 5:54 AM

Quotes of the Day

The day was yesterday.

I am large, I contain multitudes.

The to-do list is a time capsule, containing missives and pleas to your future selves. ... Why is it not trivially easy to carry out items on your own to-do list? And the answer is: Because the one writing the list, and the one carrying it out are two different people.

Now I understand the problem... my to-do list is a form of time travel.

Open to Multitudes

It's the kind of culture that can tolerate rap music and extreme sports that can also create space for guys like Page and Brin and Google. That's one of our hidden strengths.

This is from economist Paul Romer, as quoted by Tyler Cowen. I agree. We need to try out lots of ideas to find the great ones.

Going to an Extreme

I'm not interested in writing short stories. Anything that doesn't take years of your life and drive you to suicide hardly seems worth doing.

Cormac McCarthy must live on the edge. This is one of those romantic notions that has never appealed to me. I've never been so driven -- nor felt like I wanted to be.

A Counterproposal

6. MAKE MANY SKETCHES

Join the best sketches to produce others and improve them until the result is satisfactory.

To make sketches is a humble and unpretentious approach toward perfection.

... says composer Arnold Schonberg, as quoted at peripatetic axiom. This is more my style.

Speaking of Perfection

My perfect day is sitting in a room with some blank paper. That's heaven. That's gold and anything else is just a waste of time.

Again from Cormac McCarthy. Unlike McCarthy, I do not think that everything else is a waste of time. Yet I feel a kinship with his sense of a perfect day. To sit in a room, alone, with an open terminal. To write, whether prose or code. But especially code.

November 20, 2009 3:35 PM

Learning Through Crisis

... an author never does more damage to his readers

than when he hides a difficulty.

-- Évariste Galois

Like many of the aphorisms we quote for guidance, this one is true, but not quite true if taken with the wrong sense of its words or at the wrong scale.

First, there are different senses of the word "difficulty". Some difficulties are incidental, and some are essential. An author should indeed hide incidental difficulties; they only get in the way. However, the author must not hide essential difficulty. Part of the author's job is to help the readers overcome the difficulty.

Second, we need to consider the scale of revelation and hiding. Authors who expose difficulties too soon only confuse their readers. Part of the author's job is to prepare the reader, to explain, inspire, and lead readers from their initial state into a state where they are ready to face the difficulty. At that moment, the author is ready to bring the difficulty out into the open. The readers are ready.

What if the reader has already uncovered the difficulty before meeting the author? In that case, the author must not try to hide it, to fool his readers. He must attack it head on -- perhaps with the same deliberation in explaining, inspiring, and leading, but without artifice. It is this sense in which Galois has nailed a universal truth.

If we replace "author" with "teacher" in this discussion we still have truths. The teacher's job is to eliminate incidental difficulties while exposing essential ones. Yet the teacher must be deliberate, too, and prepare the reader, the student, to overcome the difficulty. Indeed, a large part of the teacher's craft is the judicious use of simplification and unfolding, leading students to a deeper understanding.

Sometimes, we teachers can use difficulty to our advantage. As I discussed recently, the brain often learns best when it encounters its own limitations. Some say that is the only way we learn, but I don't think I believe the notion when taken to this extreme. But I think that difficulty is often the teacher's best source of leverage. Confront students with difficulty, and then help them to find resolution.

Ben Blum-Smith expresses a similar viewpoint in his recent nugget on teaching students to do proofs in mathematics. He launches his essay with remarks by Paul Lockhart, whose essay I discussed last summer. Blum-Smith's teaching nugget is this:

The impulse toward rigorous proof comes about when your intuition fails you. If your intuition is never given a chance to fail you, it's hard to see the point of proof.

This is just as true for us as we learn to create programs as it is when we learn to create proofs. If our intuition and our current toolbox never fail us, it's hard to see the point of learning a new tool -- especially one that is difficult to learn.

Blum-Smith then quotes Lockhart:

Rigorous formal proof only becomes important when there is a crisis -- when you discover that your imaginary objects behave in a counterintuitive way; when there is a paradox of some kind.

This quote doesn't inspire cool thoughts in me the way so many other passages in Lockhart's paper do, but one word stands way out on this reading: crisis. It inspires Blum-Smith as well:

... what happens is that when kids reach a point in their mathematical education where they are asked to prove things, they find

- that they have no idea how to accomplish what is being asked of them, and

- that they don't really get why they're being asked to do it in the first place.

The way out of this is to give them a crisis. We need to give them problems where the obvious pattern is not the real pattern. What you see is not the whole story! Then, there is a reason to prove something.

We need to give our programming students problems in which the obvious solution, the solution that flows naturally from their fingers onto the keyboards, doesn't feel right, or maybe even doesn't work at all. There is more to the story; there is reason to learn something new.

Teachers who know a lot and can present useful knowledge to students can be quite successful, and every teacher really needs to be able to play this role sometime. But that is not enough, especially in a world where knowledge is increasingly a plentiful commodity. Great teachers have to know how to create in the minds of their students a crisis: a circumstance in which they doubt what they know just enough to spur the hard work needed to learn.

A good writer can do this in print, but I think that this is a competitive advantage available to classroom teachers: they operate in a more visceral environment, in which they can create safe and reliably effective crises in their students' minds. If face-to-face university courses with domain experts are to thrive in the new, connected world, it will be because they are able to exploit this advantage.

~~~~

Postscript: Galois, the mathematician quoted at the top of this article, was born on October 25. That was the date of one of my latest confrontations with difficulty. Let me assure you: You can run, but you cannot hide!

November 18, 2009 6:45 AM

The Gang-of-Four Book at Fifteen

One of the fun parts of teaching software engineering this semester has been revisiting some basic patterns, first in the design part of the course and now as we discuss refactoring in the part of the course that deals with implementation and maintenance. 2009 is the 15th anniversary of the publication of Design Patterns, the book that launched software patterns into the consciousness of mainstream developers. Some folks reminisced about the event at OOPSLA this year, but I wasn't able to make it to Orlando. OOPSLA 2004 had a great 10th-anniversary celebration, which I had the good fortune to attend and write about.

I wasn't present at OOPSLA in 1994, when the book created an unprecedented spectacle in the exhibit hall; that just predates my own debut at OOPSLA. But I wish I had been!

InformIT recently ran a series of interviews with OO and patterns luminaries, sharing their thoughts on the book and on how patterns have changed the landscape of software development. The interview with Brian Foote had a passage that I really liked:

InformIT: How has Design Patterns changed your impressions about the way software is built?

The vision of reuse that we had in the object-oriented community in hindsight seems like a God that Failed. Just as the Space Shuttle never lived up to its promised reuse potential, libraries, frameworks, and components, while effective in as far as they went, never became foundations of routine software reuse that many had envisioned and hoped.

Instead, designs themselves, design ideas, patterns became the loci of reuse. We craft our ideas, by hand, into each new artifact we build.

This insight gets to the heart of why patterns matter. Other forms of reuse have their place and use, but they operate at a code level that is ultimately fragile in the face of the different contexts in which our programs operate. So they are, by necessity, limited as vehicles for reuse.

Design ideas are less specific, more malleable. They apply in a million contexts, though never in quite the same way. We mold them to the context of our program. The patterns we see in our designs and tests and implementations give us the abstract raw material out of which to create our programs. We still strive for reuse, but at a different level of thinking and working.

Read the full interview linked above. It is typical Brian Foote: entertaining and full of ideas presented slightly askew from the typical vantage point. That twist helps me to think differently about things that may have otherwise become commonplace. And as so often happens, I had to look a word up in the dictionary before I reached the end. I always seem to learn something from Brian!

November 16, 2009 8:32 PM

Towards Software that Improves on Index Cards

Who would have thought that this would turn out to be a major challenge to software developers, software to improve on index cards?

Like a dog gnawing on a bone, I had planned to write more about the topic of software for XP planning. The thread on the XP list just kept on going, and I was sure that I needed to rebut some of the attitude about index cards being the perfect technology for executing XP. I'm not sure why I felt this need. I mostly agree that small cards and fat pens are the best way for us to implement story planning and team communication in XP. Maybe it's an academic's vice to drive any topic into the ground on a technicality.

Fortunately, though, I came to realize that I was punching a strawman. Most of the folks on the list who are talking up the use of low-fi technology don't take a hard stance on reality versus simulation as discussed in my previous post, even when it colors their rhetoric. Most would simply say something like this: "Index cards and felt-tip markers simply work better for us right now than anything else. If someone wants to claim that a software tool can do as well or better, they'll have to show us."

Skepticism and asking for evidence -- many of us can do better with such an attitude.

I also realized what has been the most interesting result of this discussion thread for me: a chance to see what XP practitioners consider to be the essential features of a planning tool for agile teams. Which characteristics of cards and markers make them so useful? Which characteristics of existing software tools get in the way of doing the job as well as index cards? This includes general-purpose software that we use for XP, say, a spreadsheet, and software built explicitly for XP teams (there are many).

As a part of the XP list discussion, Ilja Preuss posted a link to his blog entry on criteria for XP team tools. Here is the start of a feature list for XP planning software that I gleaned from that entry and from a few of the articles in the thread:

- easy to see all the stories at once

- easy to move the stories around

- easy to make notations of various sorts on the stories

- provides visual cues of the size of the system and what stories are most important

- makes all of the people related to the project comfortable with making changes

The overarching themes in this discussion are high visibility and strong collaboration. In this context, good tools provide more than a one- or even two-way communication medium. In addition to what they communicate, they must communicate in a way that is visible to as many team members as possible at all times. This is the first step toward enabling and encouraging interactivity. One of the most powerful roles that cards play in software development is that of tokens in a cooperative game -- several games, really -- that moves a project forward. Without interactivity, the communication that makes it possible for projects to succeed tends to die off.

Some people are trying to build better tools, and I applaud them. I hope they draw on their own experience and on the experiences we find shared in forums such as the XP list. One tool-in-progress that caught my attention was Taskboardy, which builds on Google Wave. Kent Beck recently tweeted and blogged that he had not yet grokked the need to be satisfied by Wave. Without a killer itch to be scratched, it is hard for a new technology, especially a radically different one, to become indispensable and displace other tools. Maybe the high degree of communication and interactivity demanded by agile software teams is just the sort of need that Wave can satisfy? I don't know, but the best way to find out is for someone to try.

November 15, 2009 8:02 PM

Knowledge Arbitrage

A couple of weeks back, Brian Foote tweeted:

Ward Cunningham: Pure Knowledge arbitrageurs will no longer gain by hoarding as knowledge increasingly becomes a plentiful commodity #oopsla

This reminds me of a "quought" of the day that I read a couple of years ago. Paraphrased, it asked marketers: What will you do when all of your competitors know all of the same things you do? Ward's message broadens the implication from marketers to any playing field on which knowledge drives success. If everyone has access to the same knowledge, how do you distinguish yourself? Your product? The future looks a bit more imposing when no one starts with any particular advantage in knowledge.

Ward's own contributions to the world -- the wiki and extreme programming among them -- give us a hint as to what this new future might look like. Hoarding is not the answer. Sharing and building together might be.

The history of the internet and the web tells us that the result of collaboration and open knowledge may well be a net win for all of us over a world in which knowledge is hoarded and exploited for gain in controlled bursts.

Part of the ideal of the academy has always been the creation and sharing of knowledge. But increasingly its business model has been exposed as depending on the sort of knowledge arbitrage that Ward warns against. Universities now compete in a world of knowledge more plentiful and open than ever before. What can they do when all of their customers have access to much of the same knowledge that they hope to disseminate? Taking a cue from Ward, universities probably need to be thinking hard about how they share knowledge, how they help students, professors, and industry build knowledge together, and how they add value in their unique way through academic inquiry.

November 13, 2009 2:18 PM

Learning via Solutions to our Limitations

Yesterday I introduced refactoring in my software engineering course. Near the beginning of my code demo, I got sidetracked a bit when I mentioned that I would be using JUnit to run some automated tests. We have not talked about testing yet, automated or otherwise, and I thought that refactoring might be a good way to show its value.

One student wondered why he should go to the trouble; why not just write a few lines of code to do his own testing? My initial response turned too quickly to the idea of automation, which seemed natural given the context of refactoring. Automating tests is essential when we are working in a tight cycle of code-test-refactor-test. This wasn't all that persuasive to the student, who had not seen us refactor yet. Fortunately, another student, who has used testing frameworks at work, jumped in to point out the real flaw in what the first student had proposed: interspersing test code and production code. I think that was more persuasive to the class, and we moved on.
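The second student's point can be sketched in a few lines. This example uses Python's standard `unittest` module as a stand-in for JUnit; the function `letter_grade`, its thresholds, and the helper `run_tests` are hypothetical. The production code contains no test logic, and the tests live in their own class that a runner can execute automatically after every refactoring step.

```python
import unittest


# Production code: no test logic mixed in.
def letter_grade(score):
    if score >= 90:
        return "A"
    if score >= 80:
        return "B"
    return "C"


# Test code: kept separate, runnable on demand.
class LetterGradeTest(unittest.TestCase):
    def test_boundaries(self):
        self.assertEqual(letter_grade(90), "A")
        self.assertEqual(letter_grade(89), "B")
        self.assertEqual(letter_grade(80), "B")

    def test_low_score(self):
        self.assertEqual(letter_grade(10), "C")


def run_tests():
    # Load and run the suite, returning the result object.
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(LetterGradeTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

With "a few lines of code to do his own testing" scattered through `letter_grade` itself, every refactoring would have to step around the checks. Here the checks stay put while the production code changes underneath them.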

That got me to thinking about a different way to introduce both testing frameworks and refactoring next time. The key pedagogical idea is to focus on students' current experience and why they need something new. Necessity gives birth not only to invention but also to the desire to learn.

Some days, I think the web is magic. This popped into my newsfeed when I refreshed this morning:

whenever possible, introduce new skills and new knowledge as the solution to the limitations of old skills and old knowledge

Meyer, who teaches HS math, has a couple of images contrasting the typical approach to lesson planning (introduce concept, pay "brief homage to workers who use it", work sample problems) to an approach based on the limitations of old skills:

- summarize briefly relevant prior skills

- show a "sample problem that renders those skills pretty well useless"

- describe the new skill

I like to teach design patterns using a more active version of this approach:

- give the students a problem to solve, preferably one that looks like a good fit for their current skill set

- as a group, explore the weaknesses in their solutions or the difficulties they had creating them

- introduce a pattern that balances the forces in this problem, and then discuss the more general context in which it applies

I need to remember to use this strategy with more of the new skills and techniques I teach. It's hard to do this in the small for all techniques, but when I can tie the new idea to an error students make or a difficulty they have, I usually have better success. (My favorite success story with this approach was helping students to learn selection patterns -- ways to use if statements -- in CS1 back in the mid-1990s.)

November 11, 2009 1:08 PM

Time Waits for No One

OR: for all p, ◇passed(p)

~~~~

Last week saw the passing of computer scientist Amir Pnueli. Even though Pnueli received the Turing Award, I do not have the impression that many computer scientists know much about his work. That is a shame. Pnueli helped to invent an important new sub-discipline of computing:

Pnueli received ACM's A. M. Turing Award in 1996 for introducing temporal logic, a formal technique for specifying and reasoning about the behavior of systems over time, to computer science. In particular, the citation lauded his landmark 1977 paper, "The Temporal Logic of Programs," as a milestone in the area of reasoning about the dynamic behavior of systems.

I was fortunate to read "The Temporal Logic of Programs" early in my time as a graduate student. When I started at Michigan State, most of its AI research was done in the world-class Pattern Recognition and Image Processing lab. That kind of AI didn't appeal to me much, and I soon found myself drawn to the Design Automation Research Group, which was working on ways to derive hardware designs from specs and to prove assertions about the behavior of systems from their designs. This was a neat application area for logic, modeling, and reasoning about design. I began to work under Anthony Wojcik, applying the idea of modal logics to reasoning about hardware design. That's where I encountered the work of Pnueli, which was still relatively young and full of promise.

Classical propositional logic allows us to reason about the truth and falsehood of assertions. It assumes that the world is determinate and static: each assertion must be either true or false, and the truth value of an assertion never changes. Modal logic enables us to express and reason about contingent assertions. In a modal logic, one can assert "John might be in the room" to demonstrate the possibility of John's presence, regardless of whether he is or is not in the room. If John were known to be out of the country, one could assert "John cannot be in the room" to denote that it is necessarily true that he is not in the room. Modal logic is sometimes referred to as the logic of possibility and necessity.

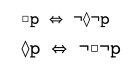

These notions of contingency are formalized in the modal operators ◇p, "possibly p," and □p, "necessarily p." Much like the propositional operators "and" and "or", ◇ and □ can be used to express the other in combination with ¬, because necessity is really nothing more than possibility "turned inside out". The fundamental identities of modal logic embody this relationship:

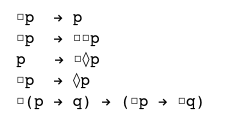
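In standard notation, with $\Box$ for necessity and $\Diamond$ for possibility, the duality described above is usually written as follows. This is a reconstruction from the usual textbook statement, not a copy of the original figure.

```latex
\begin{align*}
\Box p     &\equiv \neg \Diamond \neg p \\
\Diamond p &\equiv \neg \Box \neg p
\end{align*}
```

Read aloud: p is necessary exactly when it is not possible for p to be false, and p is possible exactly when it is not necessary for p to be false.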

Modal logic extends the operator set of classical logic to permit contingency. All the basic relationships of classical logic are also present in modal logic. ◇ and □ are not themselves truth functions but quantifiers over possible states of a contingent world.

When you begin to play around with modal operators, you start to discover some fun little relationships. Here are a few I remember enjoying:

The last of those is an example of a distributive property for modal operators. Part of my master's research was to derive or discover other properties that would be useful in our design verification tasks.

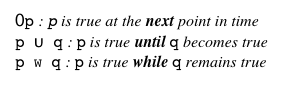

The notion of contingency can be interpreted in many ways. Temporal logic interprets the operators of modal logic as reasoning over time. □p becomes "always p" or "henceforth p," and ◇p becomes "sometimes p" or "eventually p." When we use temporal logic to reason over circuits, we typically think in terms of "henceforth" and "eventually." The states of the world represent discrete points in time at which one can determine the truth value of individual propositions. One need not assume that time is discrete by its nature, only that we can evaluate the truth value of an assertion at distinct points in time. The fundamental identities of modal logic hold in this temporal logic as well.
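Here is a minimal sketch of "henceforth" and "eventually" in Python. It assumes a finite trace of states sampled at discrete points in time, which is a simplification: temporal logics usually reason over unbounded sequences. The function names `always`, `eventually`, and `negate`, and the sample `trace`, are hypothetical. Each operator quantifies over the current and all later states, and the fundamental identity can be checked directly.

```python
def always(prop, trace, start=0):
    # "henceforth p": p holds at every point from `start` onward.
    return all(prop(state) for state in trace[start:])


def eventually(prop, trace, start=0):
    # "eventually p": p holds at some point from `start` onward.
    return any(prop(state) for state in trace[start:])


def negate(prop):
    return lambda state: not prop(state)


# A hypothetical signal sampled at six points in time.
trace = [0, 0, 1, 1, 1, 1]


def is_high(state):
    return state == 1


# Duality: always p  ==  not eventually (not p), at every start point.
duality_holds = all(
    always(is_high, trace, t) == (not eventually(negate(is_high), trace, t))
    for t in range(len(trace))
)
```

On this trace the signal is eventually high from time 0 but not always high; from time 2 onward it is always high.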

In temporal logic, we often define other operators that have specific meanings related to time. Among the more useful temporal logical connectives are:

My master's research focused specifically on applications of interval temporal logic, a refinement of temporal logic that treats sequences of points in time as the basic units of reasoning. Interval logics consider possible states of the world from a higher level. They are especially useful for computer science applications, because hardware and software behavior can often be expressed in terms of nested time intervals or sequences of intervals. For example, the change in the state of a flip-flop can be characterized by the interval of time between the instant that its input changes and the instant at which its output reflects the changed input.
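To make the flip-flop example concrete, here is a rough sketch that assumes input and output signals sampled at the same discrete time points. The function `response_interval` and the sample traces are hypothetical, and the sketch is only an illustration of the idea of an interval, not of the formal logic itself. It finds the interval that starts when the input first changes and ends when the output first matches the new input value.

```python
def response_interval(inputs, outputs):
    """Return (start, end) indices of the first input-to-output interval,
    or None if the input never changes or the output never follows."""
    for start in range(1, len(inputs)):
        if inputs[start] != inputs[start - 1]:
            new_value = inputs[start]
            for end in range(start, len(outputs)):
                if outputs[end] == new_value:
                    return (start, end)
            return None
    return None


# Input rises at time 2; output reflects it at time 4.
d_input = [0, 0, 1, 1, 1, 1]
q_output = [0, 0, 0, 0, 1, 1]
```

An interval logic lets us make assertions about the whole span returned by `response_interval(d_input, q_output)` as a unit, rather than about each time point inside it.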

Though I ultimately moved into the brand-new AI/KBS Lab for my doctoral work, I have the fondest memories of my work with Wojcik and the DARG team. It resulted in my master's paper, "Temporal Logic and its Use in the Symbolic Verification of Hardware", from which the above description is adapted. While Pnueli's passing was a loss for the computer science community, it inspired me to go back to that twenty-year-old paper and reminisce about the research a younger version of myself did. In retrospect, it was a pretty good piece of work. Had I continued to work on symbolic verification, it might have produced an interesting result or two.

Postscript. When I first read of Pnueli's passing, I didn't figure I had a copy of my master's paper. After twenty years of moving files from machine to machine, OS to OS, and external medium to medium, I figured it would have been lost in the ether. Yet I found both a hardcopy in my filing cabinet and an electronic version on disk. I wrote the paper in nroff format on an old Sparc workstation. nroff provided built-in char sequences for all of the special symbols I needed when writing about modal logic that worked perfectly -- unlike HTML, whose codes I've been struggling with for this entry. Wonderful! I'll have to see whether I can generate a PDF document from the old nroff source. I am sure you all would love to read it.

November 09, 2009 9:54 PM

Reality versus Simulation

A recent thread on the XP mailing list discussed the relative merits of using physical cards for story planning versus a program, even something as simple as a spreadsheet. Someone had asked, "why not use a program?", and lots of XP aficionados explained why not.

I mostly agree with the explanations, but one undercurrent in the discussion bothered me. It is best captured in this comment:

The software packages are simulations. The board and cards are the real thing.

I was immediately transported twenty years back, to a set of old arguments against artificial intelligence. They went something like this... If we write a program to simulate a rainstorm, we will not get wet; it is just a simulation. By the same token, we can write a program to simulate symbol processing the way we think people do it, but it's not real symbol processing; it is just a simulation. We can write a program to simulate human thought, but it's not real; it's just simulated thought. Just as a simulated rainstorm will not make us wet, simulated thought can't enlighten us. Only human thought is real.

That always raised my hackles. I understand the difference between a physical phenomenon like rain and a simulation of it. But symbol processing and thought are something different. They are physical in our brains, but they manifest themselves in our interactions with the exterior world, including other symbol processors and thinkers. Turing's insight in his seminal paper Computing Machinery and Intelligence was to separate the physical instantiation of intelligent behavior from the behavior itself. The essence of the behavior is its ability to communicate ideas to other agents. If a program can carry on such communication in a way indistinguishable from how humans communicate, then on what grounds are we to say that the simulation is any less real than the real thing?

That seems like a long way to go back for a connection, but when I read the above remark, from someone whose work I greatly respect, it, too, raised my hackles. Why would a software tool that supports an XP practice be "only" a simulation and current practice be the real thing?

The same person prefaced his conclusion above with this, which explains the reasoning behind it:

Every software package out there has to "simulate" some definite subset of these opportunities, and the more of them the package chooses to support the more complex to learn and operate it becomes. Whereas with a physical board and cards, the opportunities to represent useful information are just there, they don't need to be simulated.

The current way of doing things -- index cards and post-it notes on pegboards -- is a medium of expression. It is an old medium, familiar, comfortable, and well understood, but a medium nonetheless. So is a piece of software. Maybe we can't express as much in our program, or maybe it's not as convenient to say what we want to say. This disadvantage is about what we can say or say easily. It's not about reality.

The same person has the right idea elsewhere in his post:

Physical boards and cards afford a much larger world of opportunities for representing information about the work as it is getting done.

Ah... The physical medium fits better into how we work. It gives us the ability to easily represent information as the work is being done. This is about work flow, not reality.

Another poster gets it right, too:

It may seem counterintuitive for those of us who work with technology, but the physical cards and boards are simply more powerful, more expressive, and more useful than electronic storage. Maybe because it's not about storage but communication.

The physical medium is more expressive, which makes it more powerful. More power combined with greater convenience makes the physical medium more useful. This conclusion is about communication. It doesn't make the software tool less real, only less useful or effective.

You will find that communication is often the bottom line when we are talking about software development. The agile approaches emphasize communication and so occasionally reach what seems to be a counterintuitive result for a technical profession.

I agree with the XP posters about the use of physical cards and big, visible boards for displaying them. This physical medium encourages and enhances human communication in a way that most software does not -- at least for now. Perhaps we could create better software tools to support our work? Maybe computer systems will evolve to the point that a live display board will dynamically display our stories, tasks, and status in a way that meshes as nicely with human workflow and teamwork as physical displays do now. Indeed, this is probably possible now, though not as inexpensively or as conveniently as a stash of index cards, a cheap box of push pins, and some cork board.

I am open to a new possibility. Framing the issue as one of reality versus simulation seems to imply that it's not possible. I think that perspective limits us more than it helps us.

November 04, 2009 7:33 PM

Wherefore Art Thou Agile?

In recent years, my favorite conference, OOPSLA, has been suffering a strange identity crisis. When the conference began in the mid-1980s, object-oriented programming was a relatively new idea, known to some academics but unheard of by most in industry. By the early 2000s, it had gone mainstream. Most developers understand OOP now, or think they do. Our languages and tools make it seem second nature. We teach it to freshmen in many universities. Who needs a big conference devoted to objects?

As I've written before, say, here, this reflects a fundamental misunderstanding about what OOPSLA has always been about. It's a natural misunderstanding, given the name and the marketing hype around it through the 1990s, but a misunderstanding nonetheless. It's hard to unbrand a well-known conference and re-brand it once its existing brand loses its purpose or zip. So OOPSLA struggles a bit. I hear that next year it will become one attraction under the umbrella of a new event, "SPLASH". (More on that later!)

In the last few years, people have begun to notice something similar going on with a younger spinoff from OOPSLA. Agile software development has started to become mainstream. It is over the initial hump of awareness and acceptance. Most everyone knows what 'agile' is, or thinks he does. Its practices have begun to seep into a large number of software houses, whether from Scrum or XP. Our languages and tools now make it possible to work in shorter iterations with continuous feedback and more confidence. We teach it in universities, sometimes even to freshmen. Agile has become old school.

Maybe I'm overstating things a bit, but the buzz has certainly died off. Now we are more likely to hear grumbling. I don't know that many people are asking whether we need conferences devoted to agile approaches yet. But I am starting to read articles that second guess the "movement" and to see a lot of revisionist history. Perhaps this is what happens when the excitement of newness fades into the blandness of same-old, same-old. The excitement passes, and people begin to pay attention more to the chinks in the armor than the strengths that gave the idea birth.

Let's not forget what made agile development and especially XP so galvanizing. William Caputo hasn't forgotten:

But why Agile sells isn't why I liked it. I liked it *because* it put the programmer at the center of the effort of programming (crazy notion that), I didn't need the manifesto to tell me that I had to find ways to make the person paying the money delighted with my efforts, what I needed was a way to tell them that I would gladly do so, if they would just let me.

(The asterisks are his. The bold is mine.)

This is what made XP and the other agile approaches so very important. Focusing on the buyers' needs is easy to do. They pay for software and so have an opportunity to affect strongly the nature of software development. What was missing pre-agile was attention to the developer. I still prefer the term "programmer", with all it implies. It was easy to find ways in which programmers were marginalized, buried beneath processes, documentation, and tools that seemed primarily to serve purposes other than to trust and help programmers to make great software.

The agile movement helped to change that, to shift the perspective from inhuman processes that expected the worst from programmers to practices that celebrate programmers and their love for making software. That some people are now even talking about restating the Agile Manifesto's values toward maximizing shareholder value is a tribute to agile's success at changing the overarching story of the industry.

All this reminds me of an essay by Brian Marick on ease and joy. Agile is about joy at work. We programmers love to make software -- software that improves the lives of its users. Great software. Let us love writing programs, and we can do great things for you.

November 03, 2009 7:48 PM

Parts of Speech in Programming Languages

I enjoyed Reg Braithwaite's talk Ruby.rewrite(Ruby) (slides available on-line). It gives a nice survey of some metaprogramming hacks related to Ruby's syntactic and semantic structure.

To me, one of the most thought-provoking things Reg says is actually a rather small point in the overall message of the talk. Object-oriented programming is, he summarizes, basically a matter of nouns and verbs, objects and their behaviors. What about other parts of speech? He gives a simple example of an adverb:

blitz.not.blank?

In this expression, not is an adverb that modifies the behavior of blank?. At the syntactic level, we are really telling blitz to behave differently in response to the next message, which happens to be blank?, but from the programmer's semantic level not modifies the predicate blank?. It is an adverb!

Reg notes that some purists might flag this code as a violation of the Law of Demeter, because it sends a message to an object received from another message send. But it doesn't! It just looks that way at the syntax level. We aren't chaining two requests together; we are modifying how one of the requests works, how its result is to be interpreted. While this may look like a violation of the Law of Demeter, it isn't. Being able to talk about adverbs, and thus to distinguish among different kinds of message, helps to make this clear.
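To make the adverb idea concrete, here is a minimal sketch of one way such a not could be built. It is not taken from Reg's talk: the Negator class and the Adverbs module are hypothetical names, and the example uses core Ruby's empty? in place of blank?, which comes from a library rather than the core language. The proxy returned by not accepts exactly one message, forwards it to the original object, and negates the answer, which is why the expression modifies a single request rather than chaining two.

```ruby
# Hypothetical proxy: forwards one message to the subject and negates the answer.
class Negator < BasicObject
  def initialize(subject)
    @subject = subject
  end

  # Any predicate sent to the proxy is passed to the subject, then negated.
  def method_missing(name, *args, &block)
    !@subject.public_send(name, *args, &block)
  end
end

# Hypothetical mixin that supplies the adverb. define_method is used so the
# reserved word `not` is only ever written as a symbol or after a dot.
module Adverbs
  define_method(:not) { Negator.new(self) }
end

# For illustration only: make the adverb available to strings.
class String
  include Adverbs
end

blitz = "Blitz"
puts blitz.not.empty?   # true, because "Blitz".empty? is false
puts "".not.empty?      # false, because "".empty? is true
```

Subclassing BasicObject keeps the proxy nearly free of methods of its own, so almost every message falls through to method_missing and on to the subject.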

It also helps us to program better in at least two ways. First, we are able to use our tools without unnecessary guilt at breaking the letter of a law that doesn't really apply. Second, we are freed to think more creatively about how our programs can say what we mean. I love that Ruby allows me to create constructs such as not and weave them seamlessly into my code. Many of my favorite gems and apps use this feature to create domain-specific languages that look and feel like what they are and look and feel like Ruby -- at the same time. Treetop is an example. I'd love to hear about your favorite examples.

So, our OO programs have nouns and verbs and adverbs. What about other parts of speech? I can think of at least two from Java. One is pronouns. In English, this is a demonstrative pronoun. It is in Java, too. I think that super is also a demonstrative pronoun, though it's not a word we use similarly in English. As an object, I consist of this part of me and that (super) part of me.

Another is adjectives. When I teach Java to students, I usually make an analogy from access modifiers -- public, private, and protected -- to adjectives. They modify the variables and methods which they accompany. So do synchronized and volatile.

Once we free ourselves to think this way, though, I think there is something more powerful afoot. We can begin to think about creating and using our own pronouns and adjectives in code. Do we need to say something in which another part of speech helps us to communicate better? If so, how can we make it so? We shouldn't be limited to the keywords defined for us five or fifteen or fifty years ago.
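As one illustration of a home-made adjective, here is a small sketch in Ruby. Everything in it is an assumption made for the example: the Adjectives module, the memoized modifier, and the Report class are hypothetical names, and the trick relies on def returning the method's name as a symbol, which holds in Ruby 2.1 and later. The modifier sits in front of the definition, much as private or public would.

```ruby
# Hypothetical "adjective": `memoized` modifies the method definition that follows it.
module Adjectives
  # Replaces the named method with a wrapper that computes each distinct
  # argument list only once per object and returns the cached value afterward.
  def memoized(method_name)
    original = instance_method(method_name)
    define_method(method_name) do |*args|
      @__memo ||= {}
      key = [method_name, args]
      @__memo[key] = original.bind(self).call(*args) unless @__memo.key?(key)
      @__memo[key]
    end
    method_name
  end
end

# Hypothetical class that uses the adjective.
class Report
  extend Adjectives

  attr_reader :computations

  def initialize
    @computations = 0
  end

  # Reads as "a memoized total": the adjective precedes the definition.
  memoized def total(n)
    @computations += 1
    (1..n).sum
  end
end

report = Report.new
report.total(100)
report.total(100)
puts report.total(100)     # 5050
puts report.computations   # 1, since the sum was computed only once
```

The adjective is an ordinary class-level method, so adding another one requires no change to the language, only a few more lines of code.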

Thinking about adverbs in programming languages reminds me of a wonderful Onward! talk I heard at the OOPSLA 2003 conference. Cristina Lopes talked about naturalistic programming. She suggested that this was a natural step in the evolution from aspect-oriented programming, which had delocalized references within programs in a new way, to code that is concise, effective, and understandable. Naturalistic programming would seek to take advantage of elements in natural language that humans have been using to think about and describe complex systems for thousands of years. I don't remember many of the details of the talk, but I recall discussion of how we could use anaphora (repetition for the sake of emphasis) and temporal references in programs. Now that my mind is tuned to this wavelength, I'll go back to read the paper and see what other connections it might trigger. What other parts of speech might we make a natural part of our programs?

(While writing this essay, I have felt a strong sense of deja vu. Have I written a blog entry on this before? If so, I haven't found it yet. I'll keep looking.)

November 02, 2009 6:59 PM

It's All Just Programming

One of my colleagues is an old-school C programmer. He can make the machine dance using C. When C++ came along, he tried it for a while, but many of the newly-available features seemed like overkill to him. I think templates fell into that category. Other features disturbed him. I remember him reporting some particularly bad experiences with operator overloading. They made code unreadable! Unmaintainable! You could never be sure what + was doing, let alone operators like () and casts. His verdict: Operator overloading and its ilk are too powerful. They are fine in theory, but real languages should not provide so much freedom.

Some people don't like languages with features that allow them to reconfigure how the language looks and works. I may have been in that group once, long ago, but then I met Lisp and Smalltalk. What wonderful friends they were. They opened themselves completely to me; almost nothing was off limits. In Lisp, most everything was open to inspection, code was data that I could process, and macros let me define my own syntax. In Smalltalk, everything was an object, including the integers and the classes and the methods. Even better, most of Smalltalk was implemented in Smalltalk, right there for me to browse and mimic... and change.

Once I was shown a world bigger than Fortran, PL/I, and Pascal, I came to learn something important, something Giles Bowkett captures in his inimitable, colorful style:

There is no such thing as metaprogramming. It's all just programming.

(Note: "Colorful" is a euphemism for "not safe to read aloud at work, nor to be read by those with tender sensibilities".)

Ruby fits nicely with languages such as Common Lisp, Scheme, and Smalltalk. It doesn't erect too many boundaries around what you can do. The result can be disorienting to someone coming from a more mainstream language such as Java or C, where boundaries between "my program" and "the language" are so much more common. But to Lispers, Schemers, and Smalltalkers, the freedom feels... free. It empowers them to express their ideas in code that is direct, succinct, and powerful.
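A few lines of Ruby show how thin the boundary is. This is only a sketch; the doubled method is a hypothetical name added for illustration and is not part of Ruby.

```ruby
# Integers, classes, and methods are all ordinary objects that answer messages.
puts 42.class          # Integer
puts Integer.class     # Class
plus = 40.method(:+)   # a Method object wrapping integer addition
puts plus.call(2)      # 42

# A core class can be reopened like any class of our own.
class Integer
  # Hypothetical addition, for illustration only.
  def doubled
    self * 2
  end
end

puts 21.doubled        # 42
```

Reopening a core class is the kind of freedom that can disorient newcomers, which is why many Rubyists use it sparingly.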

Actually, when you program in C, you learn the same lesson, only in a different way. It's all just programming. Good C programmers often implement their own little interpreters and their own higher-order procedures as a part of larger programs. To do so, they simply create their own data structures and code to manipulate them. This truth is the raw material out of which Greenspun's Tenth Rule of Programming springs. And that's the point. In languages like C, if you want to use more powerful features, and you will, you have to roll them for yourself. My friends who are "C weenies" -- including the aforementioned colleague -- take great pride in their ability to solve any problem with just a bit more programming, and they love to tell us the stories.
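The flavor of such a home-grown interpreter can be sketched in a few lines. The example below is written in Ruby only to stay consistent with the other code on this page, and it is a toy of my own devising rather than anyone's production code: the OPERATIONS table and the run function are hypothetical names. The dispatch table plays the role that an array of function pointers would play in C, and the program being interpreted is nothing more than a list of data.

```ruby
# Dispatch table: opcode => operation on the stack
# (the analogue of a C table of function pointers).
OPERATIONS = {
  add: ->(stack) { b = stack.pop; a = stack.pop; stack.push(a + b) },
  mul: ->(stack) { b = stack.pop; a = stack.pop; stack.push(a * b) },
  neg: ->(stack) { stack.push(-stack.pop) }
}.freeze

# Runs a program given as plain data: numbers are pushed onto the stack,
# symbols are looked up in the table and applied to the stack.
def run(program)
  stack = []
  program.each do |instruction|
    if instruction.is_a?(Numeric)
      stack.push(instruction)
    else
      operation = OPERATIONS.fetch(instruction) do
        raise ArgumentError, "unknown opcode: #{instruction}"
      end
      operation.call(stack)
    end
  end
  stack.pop
end

puts run([2, 3, :add, 4, :mul])   # 20, that is, (2 + 3) * 4
```

Nothing here is "meta" in any special sense: a hash, an array, and a loop are enough to give a program its own little language.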

Metaprogramming is not magic. It is simply another tool in the prepared programmer's toolbox. It's awfully nice when that tool is also part of the programming language we use. Otherwise, we are limited in what we can say conveniently in our programs by the somewhat arbitrary lines drawn between real and meta.

You know what? Almost everything in programming looks like magic to me. That may seem like an overstatement, but it's not. When I see a program of a few thousand lines or more generate music, play chess, or even do mundane tasks like display text, images, and video in a web browser, I am amazed. When I see one program convert another into the language of a particular machine, I am amazed. When people show me shorter programs that can do these things, I am even more amazed.

The beauty of computer science is that we dig deeper into these programs, learn their ideas, and come to understand how they work. We also learn how to write them ourselves.

It may still feel like magic to me, but in my mind I know better.

Whenever I bump into a new bit of sorcery, a new illusion or a new incantation, I know what I need to do. I need to learn more about how to write programs.