July 30, 2010 2:36 PM

Notes on Entry Past

I've been killing loose minutes this week by going through my stuff folder, moving files I want to keep to permanent homes and pitching files I have lost interest in or won't have time for anytime soon. As I sometimes do, I've run across quotes I stashed away for use in blog entries. Alas, some of the quotes would have been useful in pieces I wrote recently, but now they aren't likely to find a home any time soon.

I recall reading this quote from A. E. Stallings in a short essay by Tim O'Reilly on the value of a classical education:

[The ancients] showed me that technique was not the enemy of urgency, but the instrument.

But it would have been perfect in Form Matters. Improving your form doesn't slow you down in the long run; it makes you faster.

I read this in an entry by Philip Windley about how he had averted a potential disaster:

Automate everything.

... and this in a response to that entry by Gordon Weakliem:

You are not a machine, so stop repeating yourself.

Of course, I was immediately reminded of my own disaster, unaverted. As I look back at Weakliem's article, it is interesting to see how programming principles such as "don't repeat yourself" and practices such as pair programming show up in different contexts outside of software.

Finally, I found this snippet, a tweet by @KentBeck:

as your audience grows, the cost of failure rises. put positively, it'll never be cheaper to fail than today.

This would have been a great part of any number of entries about my agile software development course in May and my software engineering course last fall. Kent is a master of crystallizing ideas into neat little catchphrases I never forget. Perhaps this one would have stuck with a few students as they tried to move toward shorter and shorter iterations of the test-code-refactor cycle.

July 28, 2010 2:26 PM

Sending Bad Signals about Recursion

A couple of weeks ago there was a sustained thread on the SIGCSE mailing list on the topic of teaching recursion. Many people expressed strongly held opinions, though most of those were not applicable outside the poster's own context. Only a few of the views expressed were supported by educational research.

My biggest take-away from the discussion was this: I can understand why CS students at so many schools have a bad impression of recursion. Like so many students I encounter, the faculty who responded expressed admiration for the "beauty and elegance" of recursion but seemed to misunderstand at a fundamental level how to use it as a practical tool.

The discussion took a brief side excursion into the merits of Towers of Hanoi as a useful example for teaching recursion to students in the first year. It is simple and easy for students to understand, said the proponents, so that makes it useful. In my early years of teaching CS1/CS2, I used Towers as an example, but long ago I came to believe that real problems are more compelling and provide richer context for learning. (My good friend Owen Astrachan has been sounding the clarion call on this topic for a few years now, including a direct dig on the Towers of Hanoi!)

My concern with the Towers is more specific when we talk about recursion. One poster remarked that this problem helped students to see that recursion can be slow:

Recursion *is* slow if you're solving a problem that is exponentially hard like Hanoi. You can't solve it faster than the recursive solution, so I think Hanoi is a perfectly fine example of recursion.

This, I think, is one of the attitudes that gives our students an unduly bad impression of recursion, because it confounds problem and solution. Most students leave their first-year courses thinking that recursion is slow and computationally expensive. This is in part an effect of the kinds of problems we solve with recursion there. The first examples we show students of loops tend not to solve exponentially hard problems. This leads students to infer that loops are fast and recursion is slow, when the computational complexity was a feature of the problems, not the solutions. A loop-based solution to Towers would be slow and use a lot of space, too! We can always tell our students about the distinction, but they see so few examples of recursion that they are surely left with a misimpression, through no fault of their own.
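A minimal Python sketch of the point about problem versus solution. The function names and peg labels are illustrative, not from the SIGCSE thread. Both versions produce the same 2^n - 1 moves; the loop simply manages its own stack of pending work instead of relying on the call stack.

```python
def hanoi_recursive(n, src="A", dst="C", via="B"):
    """Return the list of (from_peg, to_peg) moves for n disks."""
    if n == 0:
        return []
    return (hanoi_recursive(n - 1, src, via, dst)    # clear the way
            + [(src, dst)]                           # move the largest disk
            + hanoi_recursive(n - 1, via, dst, src)) # restack on top of it


def hanoi_loop(n, src="A", dst="C", via="B"):
    """Same moves, using a loop and an explicit stack of pending tasks."""
    moves = []
    stack = [(n, src, dst, via)]
    while stack:
        k, s, d, v = stack.pop()
        if k == 0:
            continue
        if k == 1:
            moves.append((s, d))
            continue
        # Push in reverse order so the tasks are popped in the right order.
        stack.append((k - 1, v, d, s))
        stack.append((1, s, d, v))
        stack.append((k - 1, s, v, d))
    return moves
```

For any n, the two functions return identical move lists of length 2**n - 1, so the exponential cost belongs to the problem, not to recursion.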

Another poster commented that he had once been a consultant on a project at a nuclear reactor. One of the programmers proudly showed one of their programs that used recursion to solve one of their problems. By using recursion, they had been able to construct a straightforward inductive proof of the code's correctness. The poster chided the programmer, because the code was able to overflow the run-time stack and fail during execution. He encouraged them to re-write the code using a subset of looping constructs that enables proofs over the limited set of programs it generates. Recursion cannot be used in real-time systems, he asserted, for just this reason.

Now, I don't want run-time errors in the code that runs our nuclear reactors or any other real-time system, for that matter, but that conclusion is a long jump from the data. I wrote to this faculty member off-list and asked whether the programming language in question forbids, allows, or requires the compiler to optimize recursive code or, more specifically, tail calls. With tail call optimization, a compiler can convert a large class of recursive functions to a non-recursive run-time implementation. This means that the programmer could have both a convincing inductive proof of the code's correctness and a guarantee that the run-time stack will never grow beyond the initial stack frame.

The answer was, yes, this is allowed, and the standard compilers provide this as an option. But he wasn't interested in discussing the idea further. Recursion is not suitable for real-time systems, and that's that.

It's hard to imagine students developing a deep appreciation for recursion when their teachers believe that recursion is inappropriate independent of any evidence otherwise. Recursion has strengths and weaknesses, but the only strengths most students seem to learn about are its beauty and its elegance. Those are code words in many students' minds for "impractical" and, when combined with a teacher's general attitude toward the technique, surely limit our students' ability to get recursion.

I'm not claiming that it's easy for students to learn recursion, especially in the first year, when we tend to work with data that make it hard to see when recursion really helps. But it's certainly possible to help students move from naive recursive solutions to uses of an accumulator variable that enable tail-recursive implementations. Whether that is a worthwhile endeavor in the first year, given everything else we want to accomplish there, is the real question. It is also the question that underlay the SIGCSE thread. But we need to make sure that our students understand recursion and know how to use it effectively in code before they graduate. It's too powerful a tool to be missing from their skill set when they enter the workforce.
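As a rough illustration of that move from naive recursion to an accumulator, here is a sketch in Python with hypothetical function names. One caveat: CPython does not eliminate tail calls, so the loop version is written out by hand to show what a compiler with tail call optimization could effectively produce from the accumulator version.

```python
def sum_naive(items):
    """Naive recursion: the addition happens after the recursive call returns."""
    if not items:
        return 0
    return items[0] + sum_naive(items[1:])


def sum_acc(items, acc=0):
    """Accumulator version: the recursive call is the last thing done (a tail call)."""
    if not items:
        return acc
    return sum_acc(items[1:], acc + items[0])


def sum_loop(items):
    """What tail call optimization amounts to: rebind the arguments and jump back."""
    acc = 0
    while items:
        items, acc = items[1:], acc + items[0]
    return acc
```

All three compute the same result. Only the last runs in constant stack space in Python; in a language whose compiler optimizes tail calls, the second would as well.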

As I said at the start of this entry, though, I left the discussion not very hopeful. The general attitude of many instructors may well get in the way of achieving that goal. When confronted with evidence that one of their beliefs is a misconception, too many of them shrugged their shoulders or actively disputed the evidence. The facts interfered with what they already know to be true!

There is hope, though. One of Ursula Wolz's messages was my favorite part of the conversation. She described a study she conducted in grad school teaching recursion to middle-schoolers using simple turtle graphics. From the results of that study and her anecdotal experiences teaching recursion to undergrads, she concluded:

Recursion is not HARD. Recursion is accessible when good models of abstraction are present, students are engaged and the teacher has a broad rather than narrow agenda.

Two important ideas stand out in this quote for me. First, students need to have access to good models of abstraction. I think this can be aided by using problems that are rich enough to support abstractions our students can comprehend. Second, the teacher must have a broad agenda, not a narrow one. To me, this agenda includes not only the educational goals for the lesson but also the general message that we want to send our students. Even young learners are pretty good at sensing what we think about the material we are teaching. If we convey to them that recursion is beautiful, elegant, hard, and not useful, then that's what they will learn.

July 26, 2010 3:28 PM

Digital Cameras, Electric Guitars, and Programming

I often write here about programming for everyone, or at least for certain users. Won't that put professional programmers out of business? Here is a great answer to a related question from a screenwriter using an analogy to music:

Well, if I gave you an electric guitar, would you instantly become Eric Clapton?

There is always room for specialists and artists, people who take literacy to a higher level. We all can write a little bit, and pretty soon everyone will be blogging, tweeting, or Facebooking, but we still need Shakespeare, James Joyce, and Kurt Vonnegut. There is more to writing than letters and sentences. There is more to programming than tokens and procedures. A person with ideas can create things we want to read and use.

Sometimes the idea is as simple as hooking up two existing ideas. I may be late to the party, but @bc_l is simply too cool:

I'm GNU bc on twitter! DM me your math and I'll tell you the answer. (by @hmason)

@hmason is awesome.

On a more practical note, I use dc as the target language for a simple demo compiler in my compilers course, following the lead of Fischer, Cytron, and LeBlanc in Crafting a Compiler. I'm considering using the new edition of this text in my course this fall, in part because of its support for virtual machines as targets and especially the JVM. I like where my course has been the last couple of offerings, but this seems like an overdue change in my course's content. I may as well start moving the course. Eventually, targeting multi-core architectures will be essential.
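To give a flavor of what targeting dc means, here is a small sketch in Python. It is not the demo compiler from the course or the textbook: it borrows Python's own `ast` parser for the front end, and the `to_dc` name and the supported subset (non-negative integer literals and the four basic operators) are assumptions made for illustration. The back end just walks the tree in postorder, which is exactly the order dc's stack machine wants.

```python
import ast

# Map Python AST operator node types to the matching dc commands.
DC_OPS = {ast.Add: "+", ast.Sub: "-", ast.Mult: "*", ast.Div: "/"}


def to_dc(expr):
    """Translate an infix arithmetic expression into a dc program string."""
    def emit(node):
        # Non-negative integer literals are pushed as-is.
        if (isinstance(node, ast.Constant)
                and type(node.value) is int and node.value >= 0):
            return [str(node.value)]
        # Binary operators: code for left, code for right, then the operator.
        if isinstance(node, ast.BinOp) and type(node.op) in DC_OPS:
            return emit(node.left) + emit(node.right) + [DC_OPS[type(node.op)]]
        raise ValueError("unsupported expression")

    tree = ast.parse(expr, mode="eval")
    # 'p' tells dc to print the value on top of the stack.
    return " ".join(emit(tree.body) + ["p"])
```

For example, `to_dc("2 + 3 * 4")` yields `2 3 4 * + p`, which can be piped to dc. Note that dc's `/` truncates at its default scale of 0, so division does not behave like Python's.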

If I want to help students who dream of being Eric Clapton with a keyboard, I gotta keep moving.

~~~~

(The image above is courtesy of PedalFreak at flickr, with an Attribution-NoDerivs 2.0 Generic license.)

July 23, 2010 2:29 PM

Agile Moments: Values and Integrity Come Before Practices

As important as the technical practices of the agile software developer are, it is good to keep in mind that they are a means to an end. Jeff Langr and Tim Ottinger do a great job of summarizing the characteristics of agile development teams and reminding us that they are not specific practices. Ottinger boiled it down to a simple phrase in a recent discussion of this piece on the XP mailing list: "... start with the values."

Later in the same thread, Langr commented on what made teaching XP practices so frustrating:

... I am very happy now to be developing in a team doing TDD, and to not be debating daily with apathetic and/or duplicitous people who want to make excuses. You're right, you can reach the lazier folks, given enough time.... It's the sly ones who spent more time crafting excuses, instead of earnestly trying to learn, who drove me nuts.

Few people can learn something entirely new to them without making a good-faith effort. When someone pretends to make a good-faith effort but then schemes not to learn, everyone's time is wasted -- and a lot of the coach's or teacher's limited energy, too. I have been fortunate in my years teaching CS to encounter very few students who behave this way. Most do make an effort to learn, and their struggles are signs that I need to try something different. And every once in a while I run into a student who is honest and says outright, "I'm not going to do that." This lets us both move straight on to more productive uses of our time.

On the same theme of values and integrity, I love this line from John Cook:

If the smart thing to do doesn't scale, maybe we shouldn't scale.

His entry is in part about values. When someone says that we can't treat people well "because that doesn't scale", they are doing what many managerial strategies and software development processes do to people. It also reminds me of why many in the agile development community favor small teams. Not everything that we value scales, and we value those things enough that we are willing to look for ways to work "small".

July 22, 2010 4:19 PM

Days of Future Passed: Standards of Comparison and The Value of Limits

This morning, a buddy of mine said something like this as part of a group e-mail discussion:

For me, the Moody Blues are a perfect artist for iTunes. Obviously, "Days of Future Passed" is a classic, but everything else is pretty much in the "one good song on an album" category.

This struck me as a reflection of an interesting way in which iTunes has re-defined how we think about music. There are a lot of great albums that everyone should own, but even for fans most artists produce only a song or two worth keeping over the long haul. iTunes makes it possible to cherry-pick individual songs in a way that relegates albums to an afterthought. A singer or band has achieved something notable if people want to buy the whole album.

That's not the only standard measure I encountered in that discussion.

After the same guy said, "The perfect Moody Blues disc collection is a 2-CD collection with the entirety of 'Days of Future Passed' and whatever else you can fit", another buddy agreed and went further (again paraphrased):

"Days of Future Passed" is just over half an 80-minute CD, and then I grabbed another 8 or 9 songs. That worked out right for me.

Even though CDs are semi-obsolete in this context, they still serve a purpose, as a sort of threshold for asking the question "How much music from this band do I really want to rip?"

When I was growing up, the standard was the 90-minute cassette tape. Whenever I created a collection for a band from a set of albums I did not want to own, I faced two limits: forty-five minutes on a side, and ninety minutes total. Those constraints caused me many moments of uncertainty as I tried to cull my list of songs into two lists that fit. Those moments were fun, though, too, because I spent a lot of time thinking about the songs on the bubble, listening and re-listening until I could make a comfortable choice. Some kids love that kind of thing.

Then, for a couple of decades the standard was the compact disc. CDs offered better quality with no halfway cut, but only about eighty minutes of space. I had to make choices.

When digital music leapt from the CD to the hard drive, something strange happened. Suddenly we were talking about gigabytes. And small numbers of gigabytes didn't last long. From 4- and 8-gigabyte devices we quickly jumped to iPods with a standard capacity of 160GB. That's several tens of thousands of songs! People might fill their iPods with movies, but most people won't ever need to fill them with the music they listen to on any regular basis. If they do, they always have the hard drive on the computer they sync the iPod with. Can you say "one terabyte", boys and girls?

The computer drives we use for music got so large so fast that they are no longer useful as the arbitrary limit on our collections. In the long run, that may well be a good thing, but as someone who has lived on both sides of the chasm, I feel a little sadness. The arbitrary limits imposed by LPs, cassettes, and CDs caused us to be selective and sometimes even creative. This is the same thing we hear from programmers who had to write code for machines with 128K of memory and 8 MHz processors. Constraints are a source of creativity and freedom.

It's funny how the move to digital music has created one new standard of comparison via the iTunes store and destroyed another via effectively infinite hard drives. We never know quite how we and our world will change in response to the things we build. That's part of the fun, I think.

July 21, 2010 4:17 PM

Two Classic Programs Available for Study

I just learned that the Computer History Museum has worked with Apple Computer to make source code for MacPaint and QuickDraw available to the public. Both were written by Bill Atkinson for the original Mac, drawing on his earlier work for the Lisa. MacPaint was the iconic drawing program of the 1980s. The utility and quality of this one program played a big role in the success of the Mac. Andy Hertzfeld, another Apple pioneer, credited QuickDraw for the success of the Mac, citing its speed at producing the novel interface that defined the machine to the public. These programs were engineering accomplishments of a different time:

MacPaint was finished in October 1983. It coexisted in only 128K of memory with QuickDraw and portions of the operating system, and ran on an 8 Mhz processor that didn't have floating-point operations. Even with those meager resources, MacPaint provided a level of performance and function that established a new standard for personal computers.

Though I came to Macs in 1988 or so, I was never much of a MacPaint user, but I was aware of the program through friends who showed me works they created using it. Now we can look under the hood to see how the program did what it did. Atkinson implemented MacPaint in one 5,822-line Pascal program and four assembly language files for the Motorola 68000 totaling 3,583 lines. QuickDraw consists of 17,101 lines of Motorola 68000 assembly in thirty-seven modules.

I speak Pascal fluently and am eager to dig into the main MacPaint program. What patterns will I recognize? What design features will surprise me, and teach me something new? Atkinson is a master programmer, and I'm sure to learn plenty from him. He was working in an environment that so constrained his code's size that he had to do things differently than I ever think about programming.

This passage from the Computer History Museum piece shares a humorous story that highlights how Atkinson spent much of his time tightening up his code:

When the Lisa team was pushing to finalize their software in 1982, project managers started requiring programmers to submit weekly forms reporting on the number of lines of code they had written. Bill Atkinson thought that was silly. For the week in which he had rewritten QuickDraw's region calculation routines to be six times faster and 2000 lines shorter, he put "-2000" on the form. After a few more weeks the managers stopped asking him to fill out the form, and he gladly complied.

This reminded me of one of my early blog entries about refactoring. Code removed is code earned!

I don't know assembly language nearly as well as I know Pascal, let alone Motorola 68000 assembly, but I am intrigued by the idea of being able to study more than 20,000 lines of assembly language that work together on a common task and that also expose a library API for other graphics programs. Sounds like great material for a student research project, or five...

I am a programmer, and I love to study code. Some people ask why anyone would want to read listings of any program, let alone a dated graphics program from more than twenty-five years ago. If you use software but don't write it, then you probably have no reason to look under this hood. But keep in mind that I study how computation works and how it solves problems in a given context, especially when it has limited access to time, space, or both.

But... People write programs. Don't we already know how they work? Isn't that what we teach CS students, at least ones in practical undergrad departments? Well, yes and no. Scientists from other disciplines often ask this question, not as a question but as an implication that CS is not science. I have written on this topic before, including this entry about computation in nature. But studying even human-made computation is a valuable activity. Building large systems and building tightly resource-constrained programs are still black arts.

Many programmers could write a program with the functionality of MacPaint these days, but only a few could write a program that offers such functionality under similar resource limitations. That's true even today, more than two decades after Atkinson and others of his era wrote programs like this one. Knowledge and expertise matter, and most of it is hidden away in code that most of us never get to see. Many of the techniques used by masters are documented either not well or not at all. One of the goals of the software patterns community is to document techniques and the design knowledge needed to use them effectively. And one of the great services of the free and open-source software communities is to make programs and their source code accessible to everyone, so that great ideas are available to anyone willing to work to find them -- by reading code.

Historically, engineering has almost always run ahead of science. Software scientists study source code in order to understand how and why a program works, in a qualitatively different way than is possible by studying a program from the outside. By doing so, we learn about both engineering (how to make software) and science (the abstractions that explain how software works). Whether CS is a "natural" science or not, it is science, and source code embodies what it studies.

For me, encountering the release of source code for programs such as MacPaint feels something like a biologist discovering a new species. It is exciting, and an invitation to do new work.

Update: a portrait of Bill Atkinson created in MacPaint. Well done.

July 20, 2010 6:59 PM

Running on the Road: The Oxford, Ohio, Area

(The eighth stop in the Running on the Road series. The first seven were Allerton Park, Illinois; Muncie, Indiana; Vancouver, British Columbia; St. Louis, Missouri; Houston, Texas; Carefree, Arizona; and Greenfield, Indiana.)

Much time has passed since my last Running on the Road report, written three years ago after a high school reunion. My conference travel schedule has not been as extensive in the interim, but I have been to SIGCSE in places such as Milwaukee and Portland, a couple of SECANT workshops at Purdue, and a few other meetings. I've also vacationed in places such as San Diego and St. Louis. I've run at least a little bit in all these places, though persistent illness often had me running far fewer miles and so not seeking out routes more adventurous than short loops near my hotels. Still, Portland, Milwaukee, San Diego, and even West Lafayette deserve reports of their own. Time and inclination to write them have been scarce at the times they needed to be written.

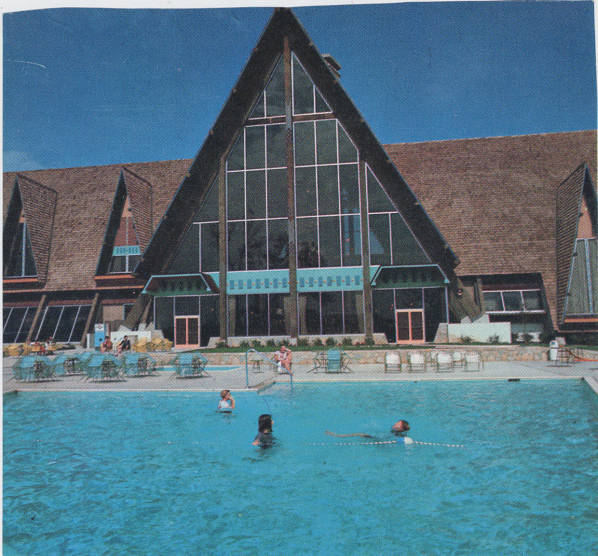

Here in the dog days of summer, I find a few moments where writing about running on the road seems the right thing to do, even though the location isn't a big city or common conference or vacation destination. Over the weekend, I attended an informal reunion of college friends, which we held at Hueston Woods State Park, not too far from the college town of Oxford, Ohio. This park has, in addition to all the features one expects from a nature refuge, a first-rate lodge that lives more like a resort center or a vacation hotel. From that base, I had a chance to run two different routes that I will want to remember and which other runners might enjoy.

I have just begun training for a fall marathon, about which I will surely have something to say in the coming months. For this trip, though, it meant that I had a 7-mile run planned for Friday morning and a 14-mile long run planned for Sunday. Between the kind of resort we had planned for the reunion and the eight-hour drive to it from my home, I decided to save my Friday AM run for the evening and the park and to hit the road sooner. Why run the same old 7-mile loops at home when the prospect of beautiful new scenery called from Ohio?

Like many parks of this kind, Hueston Woods offers quite a selection of off-road trails for walkers, hikers, and mountain bikers. At the right time of day and under the right conditions, these trails might be perfect for a runner, too. I decided to play it conservatively and stick to the roads in and around the park, which themselves offered relaxing views without the risks of trails: bad footing, slopes that are occasionally too steep for running, and the potential of running into people who are taking a more leisurely trek through the wilderness. So I used the lodge itself as the starting point for both of my runs.

Main Loop Road

After a long and tiring day driving, I decided not to venture too far from the known for my evening run. I picked up a map of the park, saw that there is a nice loop that circles Acton Lake, and asked the staff at the front desk about its length. They gave me an idea of driving distances to various attractions around the lake, and from those numbers I extrapolated that the main Loop Road must be in the 6-7 mile range. Perfect. So off I headed.

Perhaps I should have played it safer by driving the loop myself or at least trying to use the scale marker on the map to estimate the road's length more accurately. First, the road from the lodge to the loop was longer than I anticipated, about 1/2 mile. Once on the loop, I found myself running, and running, and still running. After 50 minutes, I started to worry that I had not seen the last landmark along the way. After 60 minutes, I knew that my estimate was way too low -- but by how much? After 70 minutes, I decided that I should limit my losses and stop soon. At the 78:00 mark, I stopped running and began walking. I was not too far from home... I walked about seven minutes before finishing the loop. When I got back to the lodge, I asked a different staff person, who told me the loop is 11 miles long. Given my times (73 minutes running and 7 minutes walking), the conditions (lots of hills to slow me down and a day spent sitting stiffly in a car), and my fitness level, I doubt strongly that I ran seven-minute miles. So I estimate that that loop is nine miles, certainly no more than 9.5 miles.

Were I a better reporter, I would have driven the loop myself before coming home Sunday to confirm my estimate. But in the end I enjoyed the route enough to recommend it for future runs. The scenery is simple but pleasant. As the park's main road, it offers many options for side trips to extend or replace part of the loop, including access to the park's dozen or so trails at several points along the way. There are several hills of varying length and grade, which added a bit of spice to the run. This road makes for a solid basic component on which to build runs at Hueston Woods.

The Road to Oxford

Once I saw the kind of roads that lead to the state park, I quickly decided to do my long run by running from the park to Oxford and back. Oxford is home to Miami University, an athletic rival of my alma mater and a strong academic school. I'd never spent much time in Oxford, and that was over twenty years ago. My group dined in town Saturday night and found a surprising variety of cuisine, including an Indian restaurant, a couple of sushi places, and several small bistros with the sort of trendy food one finds in college towns for students and faculty desiring worldliness.

Hueston Woods State Park is 5 miles up the road from Oxford on State Road 732. The jog from the front door of the lodge to the main Loop Road is 0.5 miles, and the road from there to the park entrance is 1.7 miles. I confirmed all of these numbers with the help of one of my buddies on the drive back from the restaurant Saturday night. Thus I was able to go out with confidence early Sunday morning and run an out-and-back route totaling 14.3 miles.

This route offers a rural vista common to Ohio, my home state of Indiana, and the rest of the Midwest. Being in southern Ohio, it also presents many curves, few straightaways of any note, and a half dozen or so hills of varying length and grade that challenge any runner accustomed to training and racing on flat land. There are stands of trees, farms with corn and cows, and even a covered bridge that merits a paragraph in Wikipedia.

One warning for my friends from other parts of the country: Do not expect shoulders alongside the road. Rarely on SR 732 is there room on the pavement for cars in both directions and a runner on one side. When my future wife first visited my family in Indiana, one of the first things she noticed was that there were no shoulders on the country roads leading to our house, or anywhere in our county. Ohio seems to have the same feature. The roads in the park were somewhat better, but only because they tended to be wider. I did not have any trouble on the state road leading into and out of Oxford early on a Sunday morning, but I remained alert throughout.

Even with the hills and the curves, I surprised myself with a brisk two-hour, three-minute long run. I enjoyed each minute along the way and will happily run this route again when I next visit Butler County. Next time, I wouldn't mind adding some mileage to explore the Miami U. campus or to press on south of Oxford back into the country.

I'm back home now, on my usual routes. Hueston Woods gave me a welcome break and a boost of energy as I press on with the beginning of marathon training.

July 19, 2010 4:48 PM

"I Blame Computers."

I spent the weekend in southwestern Ohio at Hueston Woods State Park lodge with a bunch of friends from my undergrad days. This group is the union of two intersecting groups of friends. I'm a member of only one but was good friends with the two main folks in the intersection. After over twenty years, with close contact every few years, we remain bonded by experiences we shared -- and created -- all those years ago.

The drives to and from the gathering were more eventful than usual. I was stopped by a train at the same railroad crossing going both directions. On the way there, a semi driver intentionally ran me off the road while I was passing him on the right. I don't usually do that, but he had been driving in the left lane for quite a while, and none too fast. Perhaps I upset him, but I'm not sure how. Then, on the way back, I drove through one of the worst rainstorms I've encountered in a long while. It was scarier than most because it hit while I was on a five-lane interstate full of traffic in Indianapolis. The drivers of my hometown impressed me by slowing down, using their hazard lights, and cooperating. That was a nice counterpoint to my experience two days earlier.

Long ago, my mom gave me the New Testament of the Bible on cassette tape. (I said it was long ago!) When we moved to a new house last year, I came across the set again and have had it in a pile of stuff to handle ever since. I was in an unusual mood last week while packing for the trip and threw the set in the car. On the way to Ohio, I listened to the Gospel of Matthew. I don't think I have ever heard or read an entire gospel in one sitting before. After hearing Matthew, I could only think, "This is a hard teaching." (That is a line from another gospel, by John, the words and imagery of which have always intrigued me more than the other gospels.)

When I arrived on Friday, I found that the lodge did offer internet service to the rooms, but at an additional cost. That made it easier for me to do what I intended, which was to spend the weekend off-line and mostly away from the keyboard. I enjoyed the break. I filled my time with two runs (more on them soon) and long talks with friends and their families.

Ironically, conversation late on Saturday night turned to computers. The two guys I was talking with are lawyers, one for the Air Force at Wright-Patterson Air Force Base and one for a U.S. district court in northern Indiana. Both lamented the increasing pace of work expected by their clients. "I blame computers," said one of the guys.

In the old days, documents were prepared, duplicated, and mailed by hand. The result was slow turnaround times, so people came to expect slow turnaround. Computers in the home and office, the Internet, and digital databases have made it possible to prepare and communicate documents almost instantly. This has contributed to two problems they see in their professional work. First, the ease of copy-and-paste has made it even easier to create documents that are bloated or off-point. This can be used to mislead, but in their experience the more pernicious problem is lack of thoughtfulness and understanding.

Second, the increased speed of communication has led to a change in people's expectations about response. "I e-mailed you the brief this morning. Have you resolved the issue this morning?" There is increasing pressure to speed up the work cycle and respond faster. Fortunately, both report that these pressures come only from outside. Neither the military brass nor the circuit court judges push them or their staff to work faster, and in fact encourage them to work with prudence and care. But the pressure on their own staff from their clients grows.

Many people lash out and blame "computers" for whatever ills of society trouble them. These guys are bright, well-read, and thoughtful, and I found their concerns about our legal system to be well thought out. They are deeply concerned by what the changes mean for the cost and equitability of the justice the system can deliver. The problem, of course, is not with the computers themselves but with how we use them, and perhaps with how they change us. For me as a computer scientist, that conversation was a reminder that writing a program does not always solve our problems, and sometimes it creates new ones. The social and cultural environments in which programs operate are harder to understand and control than our programs. People problems can be much harder to solve than technical problems. Often, when we solve technical problems, we need to be prepared for unexpected effects on how people work and think.

July 11, 2010 11:59 AM

Form Matters

While reading the July/August 2010 issue of Running Times, I ran across an article called "Why Form Matters" that struck me as just as useful for programmers as for runners. Unfortunately, the new issue has not been posted on-line yet, so I can't link to the article. Perhaps I can make some of the connections for you.

For runners, form is the way our body works when we run: the way we hold our heads and arms; the way our feet strike the ground; the length and timing of our strides. For programmers, I am thinking of what we often call 'process', but also the smaller habitual practices we follow when we code, from how we engage a new feature to how and when we test our code, to how we manage our code repository. Like running, the act of programming is full of little features that just happen when we work. That is form.

The article opened with a story about a coach trying to fix Bill Rodgers' running form at a time when he was the best marathoner in the world. The result was surprising: textbook form, but lower efficiency. Rodgers changed his form to something better and became a worse runner.

Some runners take this to mean, "Don't fix what works. My form works for me, however bad it is." I always chuckle when I hear this and think, "When you are the best marathoner in the world, let's talk. Until then, you might want to consider ways that you can get better." And you can be sure that Bill Rodgers was always looking for ways that he could get better.

There are a lot of programmers who resist changing style or form because, hey, what I do works for me. But just as all top running coaches ask their pupils -- even the best runners -- to work on their form, all programmers should work on their form, the practices they use in the moment-to-moment activity of writing code. Running form is sub-conscious, but so is the part of our programming practice that has the biggest effect on our productivity. These are the habits and the default answers that pop into our head as we work.

If you buy this connection between running form and programming practice, then there is a lot for programmers to learn from this article. First, what of that experiment with Bill Rodgers?

No reputable source claims that, at any one instant, significantly altering your form from what your body is used to will make you faster.

If you decide to try out a new set of practices, say, to go agile and practice XP, you probably won't be faster at the end of the day. New habits take time. The body and mind require practice and acclimation. When we work in teams to build software, we have to go through a process of acculturation. Time.

But that doesn't mean ... that the form your body naturally gravitates toward is what will make you fastest.

There are many reasons that you may have fallen into the practices you use now. The courses and instructors you had in school, the language(s) you learned first, and the programming culture you cut your professional teeth in all lead you in a particular direction. You will naturally try to get better within the context of these influences.

Even when you have been working to get better, you may (in AI terms) reach a local max biased by the initial conditions on the search. So:

"... there is a difference between doing something reasonably well and maximizing performance."

Sometimes, we need a change in kind rather than yet another change in degree.

Nor does it mean that your "natural" form is in your best long-term interest.

Initial conditions really do have a huge effect on how we develop as runners. When we start running, our muscles are weak and we have little stamina. This affects our initial running form, which we then rehearse slowly over many months as we become better runners. The result is often that we now have stronger muscles, more stamina, and bad form!

The same is true for programmers, both solo and in teams. If we are bad at testing and refactoring when we start, we develop our programming skills and get better while not testing and refactoring. What we practice is what we become.

Now, consider this cruel irony faced by runners:

"This belief system that just doing it over and over is somehow going to make us better is really crazy. Longtime runners actually suffer from the body's ability to become efficient. You become so efficient that you start recruiting fewer muscle fibers to do the same exercise, and as you begin using [fewer] muscle fibers you start to get a little bit weaker. Over time, that can become significant. Once you've stopped recruiting as many fibers you start exerting too much pressure on the fibers you are recruiting to perform the same action. And then you start getting muscle imbalance injuries...."

We programmers may not have to worry about muscle imbalance injuries, but we can find ourselves putting all of our emphasis on our mastery of a small set of coding skills, which then become responsible for all facets of quality in the software we produce. There may be no checks and balances, no practices that help reinforce the quality we are trying to wring out of our coding skills.

How do runners break out of this rut, which is the result of locally maximizing performance? They do something wildly different. Elites might start racing at a different distance or even move to the mountains, where they can run on hills and at altitude. We duffers can also try a race at a new distance, which will encourage us to train differently. Or we might simply change our training regimen: add a track workout once a week, or join a running group that will challenge us in new ways.

Sometimes we just need a change, something new that will jolt us out of our equilibrium and stress our system in a new way. Programmers can do this, too, whether it's by learning a new language every year or by giving a whole new style a try.

"Running is the one sport where people think, 'I don't have to worry about my technique. ...' We also have a sport where people don't listen to what the top people are doing. ..."

... I can't think of one top runner in the last two decades who hasn't worked on form, either directly through technique drills, indirectly through strength work or simply by being mindful of it while running.

The best runners work on their form. So do the best programmers. You and I should, too. Of course,

It's important when discussing running form to remember that there's no "perfect" form that we should all aspire to.

Even though I'm a big fan of XP and other approaches, I know that there are almost as many reliable ways to deliver great software as there are programmers. The key for all of us is to keep getting better -- not just strengthening our strengths, which can lead to the irony of overtraining, but also finding our weaknesses and building up those muscles. If you tend toward domains and practices where up-front plans work best for you, great. Just don't forget to work on practices that can make you better. And, every once in a while, try something crazy new. You never know where that might lead you.

"... if I went out and said we're going to do functional testing on a set of people, you're going to find weaknesses in every single one of them. The body has adapted to who you are, but has the body adapted to the best possible thing you can offer it? No."

Runners owe it to their bodies to try to offer them the best form possible. Programmers owe it to themselves, their employers, and their customers to try to find the best techniques and process for writing code. Sometimes, that requires a little hill climbing in the search, jumping off into some foreign territory and seeing how much better we can get from there. For runners, this may literally be hill climbing!

After the opening of the Running Times article, it turned to discussion of problems and techniques very specific to running. Even I didn't want to overburden my analogy by trying to connect those passages to software development. But then the article ended with a last bit of motivation for skeptical runners, and I think it's perfect for skeptical programmers, too:

If you're thinking, "That's all well and good for college runners and pros who have all day for their running, but I have only an hour a day total for my running, so I'm better off spending that time just getting in the miles," [Pete] Magill has an answer for you.

"... if you have only an hour a day to devote to your running, the first thing you've got to do is learn to run. If you bring bad form into your running, all you're going to be doing for that hour a day is reinforcing bad form. ..."

"A lot of people waste far more time being injured from running with muscle imbalances and poorly developed form than they do spending time doing drills or exercises or short hills or setting aside a short period each week to work on form itself."

Sure, practicing and working to get better is hard and takes time. But what is the alternative? Think about all the years, days, and minutes you spend making software. If you do it poorly -- or even well, but less efficiently than you might -- how much time are you wasting? Practice is an investment, not a consumable.

We programmers are not limited to improving our form by practicing off-line. We can also change what we do on-line: we can write a test, take a short step, and refactor. We can speed up the cycle between requirement and running code, learn from the feedback we get -- and get better at the same time.

The next time you are writing code, think about your form. Surprise yourself.

July 06, 2010 1:03 PM

Vindicated

By H. G. Wells, no less:

"You have turned your back on common men, on their elementary needs and their restricted time and intelligence," H.G. Wells complained to Joyce after reading "Finnegans Wake." That didn't faze him. "The demand that I make of my reader," Joyce said, "is that he should devote his whole life to reading my works." To which the obvious retort is: Life's too short.

This passage comes from an article on the complexity of modern art. Some modern art works for me, but I long ago lost interest in writers who complicate their work seemingly with the goal of proving to me how smart they are. Some of my friends love such writers and look at me in the same way they look at children and puppies. I must admit, with no small measure of guilt, that I have occasionally wondered how much their interest in these writers rested in a hidden desire to show how smart they are.

I've mentioned at least a couple of times that I prefer small books to large, and on that criterion alone I could bypass "Ulysses" and "Finnegans Wake". Joyce compounds their length with sentence structures and made-up words that numb my small brain and squander my limited time. Their complexity and deeply-woven literary allusions may well reward the reader who devotes his life to studying Joyce. But for me, life is indeed too short.

I must admit that I very much enjoyed Joyce's comparatively svelte "Portrait Of The Artist As A Young Man". I also enjoyed H. G. Wells's science fiction, though as literature it never rises anywhere near the level of "Portrait".

July 04, 2010 3:49 PM

Sharing Skills and Joys with Teachers

For many of the departments in my college, a big part of summer is running workshops for K-12 teachers. Science education programs reside in the science departments here, and summer is the perfect time for faculty in biology, physical science, and the like to work with their K-12 colleagues and keep them up to date on both their science and the teaching of it.

Not so in Computer Science; we don't have a CS education program. My state does not certify CS teachers, so there is no demand from teachers for graduate credits to keep licenses up to date. Few schools teach any CS or even computer programming these days at all, so there aren't even many teachers interested in picking up CS content or methods for their own courses. My department did offer a teaching minor for undergrads and a master's degree in CS education for many years in the 1980s and 1990s, but the audience slowly dried up and we dropped the programs.

Still, several CS faculty and I have long talked about how valuable it might be for recruiting to share the thrill of computing with high school teachers. With a little background, they might be more motivated -- and better equipped -- to share that excitement with their students.

That's what makes this summer so exciting. With support from Google's CS4HS program, we are offering a workshop for middle and high school teachers, Introducing Computing via Scratch and Simulation. Because there are not many CS-only teachers in our potential audience, we pitched the workshop to science and math teachers, with a promise that the workshop would help them learn how to use computing to demonstrate concepts in their disciplines and to build simple simulations for their students. We have also had some positive experiences working with middle-school social science students at the local lab school, so we included social science and humanities teachers in our call for participants. (Scratch is a great tool for story telling!)

Our workshop will reflect a basic tenet my colleagues and I hold: the best way to excite people about computing is to show them its power. Our main focus is on how they can use CS to teach their science, math, and other courses better. But we will also begin to hint at how they can use simple scripts to make their lives better, whether to prepare data for classroom examples or to handle administrative tasks. Scratch will be the main medium we teach them, but we will also point them toward something like Python. Glitz gets people's attention, but glitz fades quickly when the demands of daily life return to the main stage. The power of computing may help keep their attention.

Google is expanding this program, which they piloted last year at a couple of top schools. This year, several elite schools are offering workshops, but so are several schools like mine, schools working in the trenches of both CS and teacher preparation. As the old teachers' college in my state, we prepare a large percentage of the K-12 teachers here.

The grant from Google, awarded through a competitive proposal process, helped us to take the big step of developing a workshop and trying to sell it to the teachers of our area. We were not sure how many teachers would be willing to spend four days (three this summer and a follow-up day in the fall) to study computer science. The Google award allowed us to offer small stipends to lower the barrier to attendance. We also kept expenses low by donating instructor time, which allowed us to offer the workshop at the lowest cost possible to teachers. The result was promising: more teachers signed up than we have stipends for.

Next comes the fun part: preparing and teaching the workshop!