June 30, 2012 10:52 AM

"What Were Alleged to be Ideas"

James Webb Young begins his book A Technique for Producing Ideas with a prefatory note:

The subject is properly one which belongs to the professional psychologist, which I am not. This treatment of it, therefore, can have value only as an expression of the personal experience of one who has had to earn his living by producing what were alleged to be ideas.

With a little tweaking, such as occasionally substituting a different profession for psychologist, this would make a nice disclaimer for many of my blog entries.

Come to think of it, with a little tweaking, this could serve as the basis of a disclaimer for about 98% of the web.

Thanks to David Schmüdde for a pointer to Young's delightful little book.

June 28, 2012 4:13 PM

"Doing research is therefore writing software."

The lede from RA Manual: Notes on Writing Code, by Gentzkow and Shapiro:

Every step of every research project we do is written in code, from raw data to final paper. Doing research is therefore writing software.

The authors are economists at the University of Chicago. I have only skimmed the beginning of the paper, but I like what little I've seen. They take seriously the writing of computer programs.

- "This document lays out some broad principles we should all follow."

- "We encourage you to invest in reading more broadly about software craftsmanship, looking critically at your own code and that of your colleagues, and suggesting improvements or additions to the principles below."

- "Apply these principles to every piece of code you check in without exception."

- "You should also take the time to improve code you are modifying or extending even if you did not write the code yourself."

...every piece of code you check in... Source code management and version control? They are a couple of steps up on many CS professors and students.

Thanks to Tyler Cowen for the link.

June 28, 2012 12:37 PM

What Big Software Needs

Unix guru Rob Pike, on "programming in the large":

There's this idea about "programming in the large" and somehow C++ and Java own that domain. I believe that's just a historical accident, or perhaps an industrial accident. But the widely held belief is that it has something to do with object-oriented design.

Big software needs methodology to be sure, but not nearly as much as it needs strong dependency management and clean interface abstraction and superb documentation tools, none of which is served well by C++ (although Java does noticeably better).

That is as succinct a summary as I've seen of what people need from a language in order to write and maintain large programs: strong dependency management, clean interface abstraction, and superb documentation tools. I think that individuals and small teams need them as much as large teams, but that you experience the pain of not having them much sooner when you work on larger teams.

The quoted passage is from Less is exponentially more, the text of a talk he gave this month about the biggest surprise he experienced from the rolling out of Go, the programming language he and several colleagues created at Google. He had expected Go to attract C and C++ programmers, because Go was designed to do the things that C++ is used for. Instead, it attracts programmers from Python and Ruby. I'm tempted to quote Pike's conclusion, because it's so succinct, but instead I'll let you read his blog post yourself.

It was interesting to read this paper the day after seeing Leo Meyerovich's blog post on the sociology of programming languages. After reading Pike's thoughts on the spread of Go, I'm more motivated to read the paper Meyerovich introduces, on the principles for programming language adoption.

Irrespective of the adoption question: Pike's talk has no code in it, yet it conveys the spirit of Go better than anything I had read before.

~~~~

Go logo comes courtesy of the project's open-source repository at Google Code.

June 26, 2012 4:23 PM

Adventures in Advising

Student brings me a proposed schedule for next semester.

Me: "Are you happy with this schedule?"

Student: "If I weren't, why would I have made it?"

All I can think is, "Boy, are you gonna have fun as a programmer."

June 25, 2012 12:11 PM

Test-First Development and Implementation of Processing.js

While describing the lessons learned by the team that wrote Processing.js, Mike Kamermans talks about one of the benefits of writing tests before writing code:

The usual process, in which code is written and then test cases are written for that code, actually creates biased tests. Rather than testing whether or not your code does what it should do, according to the specification, you are only testing whether your code is bug-free. In Processing.js, we instead start by creating test cases based on what the functional requirements for some function or set of functions is, based on the documentation for it. With these unbiased tests, we can then write code that is functionally complete, rather than simply bug-free but possibly deficient.

When you implement a language processor, you can use the language specification as a primary guide. Testing your code efficiently, though, means translating the features of the spec into test cases -- code. When you port a language from one platform to another, you can usually use the documentation of the original implementation as a guide, too. The Processing.js team had the benefit that the original implementation also came with a large set of test cases. This allowed them to write code against tests from the beginning, and then write their own tests before writing code that went beyond the scope of the original.
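To make "translating the features of the spec into test cases" concrete, here is a minimal sketch in Ruby. Everything in it is hypothetical: the constrain function, its one-sentence spec, and the SPEC_CASES table were invented for illustration and do not come from Processing.js or its test suite. The point is only the order of work: the cases are read off the spec first, and the implementation is written afterward to satisfy them.

```ruby
# Hypothetical spec, invented for illustration:
#   "constrain(value, low, high) returns value if it lies between low
#    and high inclusive, low if value is smaller, high if it is larger."

# Test cases translated directly from the sentences of the spec,
# written before the implementation exists.
SPEC_CASES = [
  { args: [5, 0, 10],  expected: 5 },   # inside the range
  { args: [-3, 0, 10], expected: 0 },   # below the range
  { args: [42, 0, 10], expected: 10 },  # above the range
  { args: [0, 0, 10],  expected: 0 },   # lower bound is inclusive
  { args: [10, 0, 10], expected: 10 }   # upper bound is inclusive
]

# Implementation written afterward, to satisfy the cases above.
def constrain(value, low, high)
  [[value, low].max, high].min
end

# Returns the spec cases that the current implementation gets wrong.
def failing_cases
  SPEC_CASES.reject { |c| constrain(*c[:args]) == c[:expected] }
end
```

Calling failing_cases returns an empty array once the implementation meets every sentence of the spec. A real project would more likely use a test framework, but the table of cases is the part that comes from the spec.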

The next time I teach our compiler course, I hope to do a better job getting students to write tests sooner, if not first, as a matter of habit. Perhaps I will seed the teams with a few tests to help them get started.

~~~~

The passage above comes from the chapter on Processing.js in Volume 2 of The Architecture of Open Source Applications. This was a good read that taught me a bit about JavaScript, HTML 5, and the web browser as a platform. But most of all it explained the thought process that went into porting a powerful Java package to an architecture with considerably different strengths and weaknesses. Language theory is all fine and good, but language implementors have to make pragmatic choices in the service of users. It's essential to remember that, in the end, what matters is that the compiler or interpreter produce correct results -- and not, in the case of a port, that the resulting code resemble the original (another lesson the Processing.js team learned).

Another lesson this chapter teaches is to acknowledge when your program doesn't meet everyone's expectations. This was a serious challenge for Processing.js, because Java makes possible behaviors that a web browser does not. When you can't make something work the way people expect, tell them. Processing.js provides documentation for people who come to it with a Processing background, and documentation for people who come to it with a JavaScript background.

Next up on my reading list from AOSA: the design of LLVM.

June 20, 2012 4:54 PM

Becoming Engrossed

Moneyball author Michael Lewis gave the graduation address at his alma mater, Princeton, this spring. Unlike so many others, his address is short and to the point. He wants us to remember the role that luck and accident play in our lives, and not to assume that every time life presents us with a gift we deserve it. It's worth a quick read.

The teacher in me was struck by a line about something else. It appears in the background story that describes Lewis's own good fortune. As an undergrad, Lewis wrote his senior thesis under the direction of archaeologist William Childs, about whom he says:

God knows what Professor Childs actually thought of [my thesis], but he helped me to become engrossed.

"He helped me to become engrossed." What a fine compliment to pay a teacher.

It's not easy to help students become engrossed in a project, a topic, or a discipline. It requires skill. I think I'm pretty good at working with students who are already engrossed, but then so are a lot of people, I imagine. These students make it easy for us.

I want to get better at helping students become engrossed, to help light the new fire. I try all the time, and every once in a while I succeed. I'd like to be more reliable at it.

Whatever else goes into this skill, I'm pretty sure that connecting with students and their interests is usually a good first step, and that being curious myself is a good next step. I also think it's good for students to see me being engrossed with a problem. In fact, being engrossed is almost certainly more important than being engrossing.

June 19, 2012 3:04 PM

Basic Arithmetic, APL-Style, and Confident Problem Solvers

After writing last week about a cool array manipulation idiom, motivated by APL, I ran across another reference to "APL style" computation yesterday while catching up with weekend traffic on the Fundamentals of New Computing mailing list. And it was cool, too.

Consider the sort of multi-digit addition problem that we all spend a lot of time practicing as children:

  365
+ 366
-----

The technique requires converting two-digit sums, such as 6 + 5 = 11 in the rightmost column, into a units digit and carrying the tens digit into the next column to the left. The process is straightforward but creates problems for many students. That's not too surprising, because there is a lot going on in a small space.

David Leibs described a technique, which he says he learned from something Kenneth Iverson wrote, that approaches the task of carrying somewhat differently. It takes advantage of the fact that a multi-digit number is a vector of digits times another vector of powers.

First, we "spread the digits out" and add them, with no concern for overflow:

    3   6   5
+   3   6   6
-------------
    6  12  11

Then we normalize the result by shifting carries from right to left, "in fine APL style".

6  12  11
6  13   1
7   3   1

According to Leibs, Iverson believed that this two-step approach was easier for people to get right. I don't know if he had any empirical evidence for the claim, but I can imagine why it might be true. The two-step approach separates into independent operations the tasks of addition and carrying, which are conflated in the conventional approach. Programmers call this separation of concerns, and it makes software easier to get right, too.
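The two steps can be sketched in a few lines of Ruby. This is only an illustration of the idea, not Iverson's or Leibs's formulation: the method names digits_of, spread_add, and normalize are made up here, and spread_add assumes the two numbers have the same number of digits, as in the example.

```ruby
# Convert a non-negative integer into a vector of its decimal digits,
# most significant digit first.
def digits_of(n)
  n.digits.reverse
end

# Step 1: add column by column, with no concern for overflow.
# Assumes both vectors have the same length.
def spread_add(a, b)
  a.zip(b).map { |x, y| x + y }
end

# Step 2: normalize by shifting carries from right to left.
def normalize(columns)
  carry = 0
  digits = columns.reverse.map do |column|
    carry, digit = (column + carry).divmod(10)
    digit
  end
  # Any carry left over becomes new leading digits.
  digits.concat(carry.digits) if carry > 0
  digits.reverse
end

sums = spread_add(digits_of(365), digits_of(366))   # [6, 12, 11]
normalize(sums)                                     # [7, 3, 1]
```

Each method does one job, so each can be checked on its own: spread_add never thinks about carries, and normalize never thinks about addends.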

Multiplication can be handled in a conceptually similar way. First, we compute an outer product by building a digit-by-digit times table for the digits:

+---+------------+
|   |  3   6   6 |
+---+------------+
| 3 |  9  18  18 |
| 6 | 18  36  36 |
| 5 | 15  30  30 |
+---+------------+

This is straightforward, simply an application of the basic facts that students memorize when they first learn multiplication.

Then we sum the diagonals running southwest to northeast, again with no concern for carrying:

(9)  (18+18)  (18+36+15)  (36+30)  (30)
 9      36        69         66     30

In the traditional column-based approach, we do this implicitly when we add staggered columns of digits, only we have to worry about the carries at the same time -- and now the carry digit may be something other than one!

Finally, we normalize the resulting vector right to left, just as we did for addition:

    9  36  69  66  30
    9  36  69  69   0
    9  36  75   9   0
    9  43   5   9   0
   13   3   5   9   0
1   3   3   5   9   0

Again, the three components of the solution are separated into independent tasks, enabling the student to focus on one task at a time, using for each a single, relatively straightforward operator.
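Here is a rough Ruby sketch of the three tasks, kept as three separate methods. The names times_table, diagonal_sums, and normalize are invented for this illustration. The only trick is in the middle step: the entry in row i and column j of the table lies on diagonal i + j.

```ruby
# Step 1: the outer product, a digit-by-digit times table.
def times_table(a, b)
  a.map { |x| b.map { |y| x * y } }
end

# Step 2: sum the southwest-to-northeast diagonals, with no concern
# for carrying. The entry in row i, column j lies on diagonal i + j.
def diagonal_sums(table)
  sums = Array.new(table.length + table.first.length - 1, 0)
  table.each_with_index do |row, i|
    row.each_with_index { |product, j| sums[i + j] += product }
  end
  sums
end

# Step 3: normalize right to left, just as for addition.
def normalize(columns)
  carry = 0
  digits = columns.reverse.map do |column|
    carry, digit = (column + carry).divmod(10)
    digit
  end
  # Any carry left over becomes new leading digits.
  digits.concat(carry.digits) if carry > 0
  digits.reverse
end

table = times_table([3, 6, 5], [3, 6, 6])
sums  = diagonal_sums(table)    # [9, 36, 69, 66, 30]
normalize(sums)                 # [1, 3, 3, 5, 9, 0]
```

Unlike in addition, the carry here can be larger than one, but normalize does not care: divmod hands back whatever carry the column produces.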

(Does this approach remind some of you of Cannon's algorithm for matrix multiplication in a two-dimensional mesh architecture?)

Of course, Iverson's APL was designed around vector operations such as these, so it includes operators that make implementing such algorithms as straightforward as the calculate-by-hand technique. Three or four Greek symbols and, voilà, you have a working program. If you are Dave Ungar, you are well on your way to a compiler!

I have a great fondness for alternative ways to do arithmetic. One of the favorite things I ever got from my dad was a worn copy of Lester Meyers's High-Speed Math Self-Taught. I don't know how many hours I spent studying that book, practicing its techniques, and developing my own shortcuts. Many of these techniques have the same feel as the vector-based approaches to addition and multiplication: they seem to involve more steps, but the steps are simpler and easier to get right.

A good example of this I remember learning from High-Speed Math Self-Taught is a shortcut for multiplying a number by 12.5: first multiply by 100, then divide by 8. How can a multiplication and a division be faster than a single multiplication? Well, multiplying by 100 is trivial: just add two zeros to the number, or shift the decimal point two places to the right. The division that remains involves a single-digit divisor, which is much easier than multiplying by a three-digit number in the conventional way. The three-digit number even has its own decimal point, which complicates matters further!
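The shortcut works because 12.5 is exactly 100/8. A tiny Ruby check of the idea follows; the method name times_twelve_and_a_half is made up, and Rational is used only so that the division by 8 stays exact.

```ruby
# Multiply by 12.5 using the shortcut: multiply by 100, then divide by 8.
# Rational keeps the division exact for any integer input.
def times_twelve_and_a_half(n)
  Rational(n * 100, 8)
end

times_twelve_and_a_half(48)   # 4800 / 8 = 600
times_twelve_and_a_half(7)    #  700 / 8 = 175/2, that is, 87.5
```

By hand, 48 becomes 4800 with no effort at all, and 4800 divided by 8 is a single pass of short division.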

To this day, I use shortcuts that Meyers taught me whenever I'm updating the balance in my checkbook register, calculating a tip in a restaurant, or doing any arithmetic that comes my way. Many people avoid such problems, but I seek them out, because I have fun playing with the numbers.

I am able to have fun in part because I don't have to worry too much about getting a wrong answer. The alternative technique allows me to work not only faster but also more accurately. Being able to work quickly and accurately is a great source of confidence. That's one reason I like the idea of teaching students alternative techniques that separate concerns and thus offer hope for making fewer mistakes. Confident students tend to learn and work faster, and they tend to enjoy learning more than students who are handcuffed by fear.

I don't know if anyone has tried teaching Iverson's APL-style basic arithmetic to children to see if it helps them learn faster or solve problems more accurately. Even if not, it is both a great demonstration of separation of concerns and a solid example of how thinking about a problem differently opens the door to a new kind of solution. That's a useful thing for programmers to learn.

~~~~

Postscript. If anyone has a pointer to a paper or book in which Iverson talks about this approach to arithmetic, I would love to hear from you.

IMAGE: the cover of Meyers's High-Speed Math Self-Taught, 1960. Source: OpenLibrary.org.

Posted by Eugene Wallingford | Permalink | Categories: Computing, Software Development, Teaching and Learning

June 13, 2012 2:19 PM

The First Rule of Programming in Ruby

When I was thinking about implementing the cool programming idiom I blogged about yesterday, I forgot the first rule of programming in Ruby:

It's already in there.

It didn't take long after my post went live for readers to point out that Ruby already offers the functionality of my sub and J's { operator, via Array#values_at:

>> [10, 5, 9, 6, 20, 17, 1].values_at(6, 1, 3, 2, 0, 5, 4)
=> [1, 5, 6, 9, 10, 17, 20]

I'm not surprised. I should probably spend a few minutes every day browsing the documentation for a randomly-chosen Ruby class. There's so much to find! There's also too much to remember out of context, but I never know when I'll come across a need and have a vague recollection that Ruby already does what I need.
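The permuted index list itself can also be computed with pieces that are already in there. The sketch below is one possible way, not something from Hague's post or from my readers: the method name grade is made up, borrowed loosely from APL's grade-up, and it relies only on each_with_index, sort, and values_at.

```ruby
# Pair each value with its index, sort the pairs, and keep the indices.
# Ties are broken by index, because the [value, index] pairs are
# compared element by element.
def grade(values)
  values.each_with_index.sort.map(&:last)
end

data  = [10, 5, 9, 6, 20, 17, 1]
order = grade(data)         # [6, 1, 3, 2, 0, 5, 4]
data.values_at(*order)      # [1, 5, 6, 9, 10, 17, 20]
```

The splat in values_at(*order) is needed because values_at expects loose indices, not a single array of them.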

Reader Gary Wright pointed out that I can get closer to J's syntax by aliasing values_at with an unused array operator, say:

class Array
  def %(*args)
    values_at(*args.first)
  end
end

Now my use of % is as idiomatic in Ruby as { is in J:

>> [10, 5, 9, 6, 20, 17, 1] % [6, 1, 3, 2, 0, 5, 4]
=> [1, 5, 6, 9, 10, 17, 20]

I am happy to learn that Ruby already has my method and am just as happy to have spent time thinking about and implementing it on my own. I like to play with the ideas as much as I like knowing the vast class library of a modern scripting language.

(If I were writing Ruby code for a living, my boss might not look upon my sense of exploration so favorably... There are advantages to being an academic!)

June 12, 2012 3:44 PM

Faking a Cool Programming Idiom in Ruby

Last week, James Hague blogged about a programming idiom you've never heard of: fetching multiple items from an array with a single operation.

Let's say the initial array is this:

10 5 9 6 20 17 1

Fetching the values at indices 0, 1, 3, and 6, gives:

10 5 6 1

You can do this directly in APL-style languages such as J and R. In J, for example, you use the { operator:

0 1 3 6 { 10 5 9 6 20 17 1

Such an operator enables you to do some crazy things, like producing a sorted array by accessing it with a permuted set of indices. This:

6 1 3 2 0 5 4 { 10 5 9 6 20 17 1

produces this:

1 5 6 9 10 17 20

When I saw this, my first thought was, "Very cool!" It's been a long time since I programmed in APL, and if this is even possible in APL, I'd forgotten.

One of my next thoughts was, "I bet I can fake that in Ruby...".

I just need a way to pass multiple indices to the array, invoking a method that fetches one value at a time and returns them all. So I created an Array method named sub that takes the indices as an array.

class Array
  def sub slots
    slots.map {|i| self[i] }
  end
end

Now I can say:

[10, 5, 9, 6, 20, 17, 1].sub([6, 1, 3, 2, 0, 5, 4])

and produce [1, 5, 6, 9, 10, 17, 20].

The J solution is a little cleaner, because my method requires extra syntax to create an array of indices. We can do better by using Ruby's splat operator, *. Splat gathers up loose arguments into a single collection.

class Array
  def sub(*slots)   # splat the parameter
    slots.map {|i| self[i] }
  end
end

This definition allows us to send sub any number of integer arguments, all of which will be captured into the parameter slots.

Now I can produce the sorted array by saying:

[10, 5, 9, 6, 20, 17, 1].sub(6, 1, 3, 2, 0, 5, 4)

Of course, Ruby allows us to omit the parentheses when we send a message as long as the result is unambiguous. So we can go one step further:

[10, 5, 9, 6, 20, 17, 1].sub 6, 1, 3, 2, 0, 5, 4

Not bad. We are pretty close to the APL-style solution now. Instead of {, we have .sub. And Ruby requires comma-separated argument lists, so we have to use commas when we invoke the method. These are syntactic limitations placed on us by Ruby.

Still, with relatively simple code we are able to fake Hague's idiom quite nicely. With a touch more complexity, we could write sub to allow either the unbundled indices or a single array containing all the indices. This would make the code fit nicely with other Ruby idioms that produce array values.
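One way to get that touch more complexity is to flatten the splatted parameter, so that both argument shapes collapse into one flat list of indices. This is a sketch of one possible approach, with the caveat that flatten also unpacks any deeper nesting, which may or may not be what you want.

```ruby
class Array
  # Accept either loose indices or a single array of indices.
  # flatten turns both argument shapes into one flat list.
  def sub(*slots)
    slots.flatten.map { |i| self[i] }
  end
end

[10, 5, 9, 6, 20, 17, 1].sub(6, 1, 3, 2, 0, 5, 4)     # loose indices
[10, 5, 9, 6, 20, 17, 1].sub([6, 1, 3, 2, 0, 5, 4])   # one array of indices
```

Both calls produce [1, 5, 6, 9, 10, 17, 20], so sub can now consume the result of any expression that yields an array of indices.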

If you have stronger Ruby-fu than I and can suggest a more elegant implementation, please share. I'd love to learn something new!

June 06, 2012 3:33 PM

Advice, Platitudes, and Reasonable Decisions

I recently listened to a short clip from Seth Godin's book "The Dip". In it, he quotes Vince Lombardi as saying, "winners never quit, and quitters never win", and then says something to the effect of:

Winners quit all the time. They just quit the right stuff at the right time.

This reminded me of my recent entry, Good Ideas Aren't Always Enough, in which I talk briefly about Ward Cunningham's experience trying to create a universal mark-up language for wiki.

How did Ward know it was the right time to stop pushing for a universal mark-up? Perhaps success was right around the corner. Maybe he just needed a better argument, or a better example, or a better mark-up language.

Inherent in this sort of lesson is a generic variation of the Halting Problem. You can't be sure that an effort will fail until it fails. But the process may never fail explicitly, simply churning on forever. What then?

That's one of the problems with giving advice of the sort my entry gave, or of the sort that Godin gives in his book. The advice itself is empty, because the opposite advice is also true. You only know which advice is right in any given context after the fact -- if ever.

How did Ward know? I'm guessing a combination of:

- knowledge about the problem,

- experience with this problem and others like it,

- relationship with the community of people involved,

- and... a little luck.

And someone may come along some day with a better argument, or a better example, or a better mark-up language, and succeed. We won't know until it happens.

Maybe such advice is nothing more than platitude. Without any context, it isn't all that helpful, except as motivation to persevere in the face of a challenge (if you want to push on) or consolation in the face of a setback (if you want to focus your energy elsewhere). Still, I think it's useful to know that other people -- accomplished people -- have faced the same choice. Both outcomes are possible. Knowing that, we can use our knowledge, experience, and relationships to make choices that make sense in our current circumstances and live with the outcomes.

June 05, 2012 3:33 PM

Writing and Rewriting

An interviewer once asked writer Stephen King about extensive rewrites, and King responded:

One of the ways the computer has changed the way I work is that I have a much greater tendency to edit "in the camera" -- to make changes on the screen. With 'Cell' that's what I did. I read it over, I had editorial corrections, I was able to make my own corrections, and to me that's like ice skating. It's an OK way to do the work, but it isn't optimal. With 'Lisey' I had the copy beside the computer and I created blank documents and retyped the whole thing. To me that's like swimming, and that's preferable. It's like you're writing the book over again. It is literally a rewriting.

The idea of typing an existing text made me think of Zed Shaw's approach to teaching programming, which has grown into Learn Code the Hard Way. You can learn a lot about a body of words or code by reading it just enough to type it, and letting your brain do the rest. I'm not sure how well this approach would work for a group of complete novices. I suspect that a few would like it and that many would not. I like having it around, though, because I like having as diverse a set of tools as possible for reaching students.

For someone who already knows how to write -- or, in King's case, who actually wrote the text he is retyping -- the act offers a different set of trade-offs than rewriting or refactoring in place. It also offers a very different experience from (re)writing from scratch, or deleting text so you won't be tempted to keep it.

June 01, 2012 4:39 PM

Good Ideas Aren't Always Enough

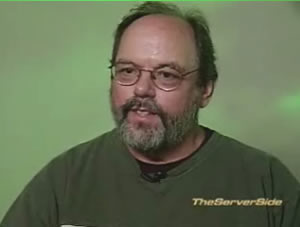

In his recent Dr. Dobb's interview, Ward Cunningham talked about the wiki community's efforts to create a universal mark-up language. Despite the many advantages of a common language, the idea never took hold. Ward's post-mortem:

So the only thing I can conclude is that as nice as having a universal or portable mark-up would be, it's not nice enough to cause people to give up what they're working on when they work on their wiki.

This is an important lesson to learn, whatever your discipline or your community. It's especially important if you hope to be an agent of change. Good ideas aren't always enough to induce change, even in a community of people working together in an explicit effort to create better ideas. There needs to be enough energy to overcome the natural inertia associated with any set of practices.

Ward's next sentence embodies even more wisdom:

I accept that as the state of nature and don't worry about it too much anymore.

Denial locks you up. Either you continue in vain to push the rejected idea, or you waste precious time and energy lamenting the perceived injustice of the failure.

Acceptance frees you to move on to your project in peace.