September 28, 2013 12:17 PM

StrangeLoop: This and That, Volume 2

[My notes on StrangeLoop 2013: Table of Contents]

I am at a really good talk and look around the room. So many people are staring at their phones, scrolling away. So many others are staring at their laptops, typing away. The guy next to me: doing both at the same time. Kudos, sir. But you may have missed the point.

Conference talks are a great source of homework problems. Sometimes, the talk presents a good problem directly. Other times, watching the talk sets my subconscious mind in motion, and it creates something useful. My students thank you. I thank you.

Jenny Finkel talked about the difference between two kinds of recommenders: explorers, who forage for new content, and exploiters, who want to see what's already popular. The former discovers cool new things occasionally but fails occasionally, too. The latter is satisfied most of the time but rarely surprised. As a conference goer, I felt this distinction at play in my own head this year. When selecting the next talk to attend, I have to take a few risks if I ever hope to find something unexpected. But when I fail, a small regret tugs at me.

We heard a lot of confident female voices on the StrangeLoop stages this year. Some of these speakers have advanced academic degrees, or at least experience in grad school.

The best advice I received on Day 1 perhaps came not from a talk but from the building:

"Please do not climb on bears." That sounds like a good idea most everywhere, most all the time.

September 27, 2013 4:26 PM

StrangeLoop: Add All These Things

[My notes on StrangeLoop 2013: Table of Contents]

I took a refreshing walk in the rain over the lunch hour on Friday. I managed to return late and, as a result, missed the start of Avi Bryant's talk on algebra and analytics. Only a few minutes, though, which is good. I enjoyed this presentation.

Bryant didn't talk about the algebra we study in eighth or ninth grade, but the mathematical structure math students encounter in a course called "abstract" or "modern" algebra. A big chunk of the talk focused on an even narrower topic: why +, and operators like it, are cool.

One reason is that grouping doesn't matter. You can add 1 to 2, and then add 4 to the result, and have the same answer as if you added 2 to 4 first, and then added 1 to that result: (1 + 2) + 4 = 1 + (2 + 4). This is, of course, the associative property.

Another is that order doesn't matter. 1 + 2 is the same as 2 + 1. That's the commutative property.

Yet another is that, if you have nothing to add, you can add nothing and have the same value you started with. 4 + 0 = 4. 0 is the identity element for addition.

Finally, when you add two numbers, you get a number back. This is not quite as true in computers as in math, because an operation can cause an overflow or underflow and create an error. But looked at through fuzzy lenses, this is true in our computers, too. This is the closure property for addition of integers and real numbers.

Addition isn't the only operation on numbers that has these properties. Finding the maximum value in a set of numbers does, too. The maximum of two numbers is a number. max(x,y) = max(y,x), and if we have three or more numbers, it doesn't matter how we group them; max will find the maximum among them. The identity value is tricky -- there is no smallest number... -- but in practice we can finesse this by using the smallest number of a given data type, or even allowing max to take "nothing" as a value and return its other argument.

When we see a pattern like this, Bryant said, we should generalize:

- We have a function f that takes two values from a set and produces another member of the same set.

- The order of f's arguments doesn't matter.

- The grouping of f's arguments doesn't matter.

- There is some identity value, a conceptual "zero", that doesn't matter, in the sense that f(i,zero) for any i is simply i.

There is a name for this pattern. When we have such a set and operation, we have a commutative monoid.

S ⊕ S → S

x ⊕ y = y ⊕ x

x ⊕ (y ⊕ z) = (x ⊕ y) ⊕ z

x ⊕ 0 = x
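These properties can be spot-checked in code. The following is a minimal sketch in Python, not anything shown in the talk; the helper names `is_commutative_monoid` and `max_or_nothing` are hypothetical. It tests commutativity, associativity, and the identity on a handful of sample values, which is evidence rather than proof, and it takes closure for granted.

```python
from itertools import product


def max_or_nothing(x, y):
    """max that accepts None ("nothing") as its identity value."""
    if x is None:
        return y
    if y is None:
        return x
    return max(x, y)


def is_commutative_monoid(op, zero, samples):
    """Spot-check the monoid laws on a finite list of sample values."""
    values = list(samples) + [zero]
    for x, y, z in product(values, repeat=3):
        if op(x, y) != op(y, x):                # order doesn't matter
            return False
        if op(x, op(y, z)) != op(op(x, y), z):  # grouping doesn't matter
            return False
    return all(op(x, zero) == x for x in values)  # zero changes nothing


def add(x, y):
    return x + y


def subtract(x, y):
    return x - y


adds_ok = is_commutative_monoid(add, 0, [1, 2, 4])
max_ok = is_commutative_monoid(max_or_nothing, None, [1, 2, 4])
subtract_ok = is_commutative_monoid(subtract, 0, [1, 2, 4])
```

Addition and the "nothing"-aware max pass the check; subtraction fails it at the first test, because order matters.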

I learned about this and other such patterns in grad school when I took an abstract algebra course for kicks. No one told me at the time that I'd be seeing them again as soon as someone created the Internet and it unleashed a torrent of data on everyone.

Just why we are seeing the idea of a commutative monoid again was the heart of Bryant's talk. When we have data coming into our company from multiple network sources, at varying rates of usage and data flow, and we want to extract meaning from the data, it can be incredibly handy if the meaning we hope to extract -- the sum of all the values, or the largest -- can be computed using a commutative monoid. You can run multiple copies of your function at the entry point of each source, and combine the partial results later, in any order.
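To make the "combine the partial results later, in any order" idea concrete, here is a small Python sketch. The function `combine_sources` and the sample data are made up for illustration; the shuffle stands in for partial results arriving from the network in an unpredictable order.

```python
import random
from functools import reduce


def combine_sources(op, zero, sources):
    """Reduce each source on its own, then merge the partial results."""
    partials = [reduce(op, source, zero) for source in sources]
    random.shuffle(partials)  # arrival order of the partials is arbitrary
    return reduce(op, partials, zero)


# Four hypothetical data sources, one of them currently empty.
sources = [[3, 1, 4], [1, 5], [], [9, 2, 6]]

total = combine_sources(lambda x, y: x + y, 0, sources)
# Use the smallest float as the stand-in identity value for max.
largest = combine_sources(max, float("-inf"), sources)
```

Because the operator is commutative and associative, `total` is 31 and `largest` is 9 no matter how the shuffle comes out, and the empty source contributes only the identity value.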

Bryant showed this much more attractively than that, using cute little pictures with boxes. But then, there should be an advantage to going to the actual talk... With pictures and fairly straightforward examples, he was able to demystify the abstract math and deliver on his talk's abstract:

A mathematician friend of mine tweeted that anyone who doesn't understand abelian groups shouldn't build analytics systems. I'd turn that around and say that anyone who builds analytics systems ends up understanding abelian groups, whether they know it or not.

That's an important point. Just because you haven't studied group theory or abstract algebra doesn't mean you shouldn't do analytics. You just need to be prepared to learn some new math when it's helpful. As programmers, we are all looking for opportunities to capitalize on patterns and to generalize code for use in a wider set of circumstances. When we do, we may re-invent the wheel a bit. That's okay. But also look for opportunities to capitalize on patterns recognized and codified by others already.

Unfortunately, not all data analysis is as simple as summing or maximizing. What if I need to find an average? The average operator doesn't form a commutative monoid with numbers. It falls short in almost every way. But, if you switch from the set of numbers to the set of pairs [n, c], where n is a number and c is a count of how many times you've seen n, then you are back in business. Counting is addition.

So, we save the average operation itself as a post-processing step on a set of number/count pairs. This turns out to be a useful lesson, as finding the average of a set is a lossy operation: it loses track of how many numbers you've seen. Lossy operations are often best saved for presenting data, rather than building them directly into the system's computation.
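Here is one way to read the pair trick, as a hedged Python sketch: each number n becomes the pair (n, 1), pairs combine by adding componentwise into a running (sum, count), and the division happens only at presentation time. The names `prepare`, `combine`, `present`, and `summarize` are my own choices, not code from the talk.

```python
from functools import reduce

ZERO = (0, 0)  # identity: sum of nothing, count of nothing


def prepare(n):
    """Move a number into the space of (sum, count) pairs."""
    return (n, 1)


def combine(a, b):
    """Componentwise addition: commutative, associative, ZERO is identity."""
    return (a[0] + b[0], a[1] + b[1])


def present(pair):
    """The lossy step, saved for last."""
    total, count = pair
    return total / count if count else None


def summarize(numbers):
    return reduce(combine, map(prepare, numbers), ZERO)


left = summarize([1, 2, 3])    # (6, 3)
right = summarize([10])        # (10, 1)
merged = combine(left, right)  # (16, 4)
average = present(merged)      # 4.0
```

Averaging the two partial averages directly would give (2 + 10) / 2 = 6, which is wrong; the pairs keep the counts that the average throws away.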

Likewise, finding the top k values in a set of numbers (a generalized form of maximum) can be handled just fine as long as we work on lists of numbers, rather than numbers themselves.
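A sketch of the top-k case in Python, under the assumption that the "lists of numbers" are lists of at most k values kept in descending order. The constant `K` and the function names are illustrative only.

```python
import heapq
from functools import reduce

K = 3      # how many of the largest values to keep
ZERO = []  # identity: the empty list


def prepare(n):
    """Move a number into the space of short descending lists."""
    return [n]


def combine(a, b):
    """Merge two top-K lists and keep only the K largest values."""
    return heapq.nlargest(K, a + b)


def summarize(numbers):
    return reduce(combine, map(prepare, numbers), ZERO)


left = summarize([5, 1, 9])   # [9, 5, 1]
right = summarize([3, 7])     # [7, 3]
top = combine(left, right)    # [9, 7, 5]
```

Merging `right` into `left` or `left` into `right` gives the same list, so partial top-k results from different sources can be combined in any order.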

This is actually one of the Big Ideas of computer science. Sometimes, we can use a tool or technique to solve a problem if only we transform the problem into an equivalent one in a different space. CS theory courses hammer this home, with oodles of exercises in which students are asked to convert every problem under the sun into 3-SAT or the clique problem. I look for chances to introduce my students to this Big Idea when I teach AI or any programming course, but the lesson probably gets lost in the noise of regular classwork. Some students seem to figure it out by the time they graduate, though, and the ones who do are better at solving all kinds of problems (and not by converting them all to 3-SAT!).

Sorry for the digression. Bryant didn't talk about 3-SAT, but he did demonstrate several useful problem transformations. His goal was more practical: how can we use this idea of a commutative monoid to extract as many interesting results from the stream of data as possible?

This isn't just an academic exercise, either. When we can frame several problems in this way, we are able to use a common body of code for the processing. He called this body of code an aggregator, comprising three steps:

- prepare the data by transforming it into the space of a commutative monoid

- reduce the data to a single value in that space, using the appropriate operator

- present the result by transforming it back into its original space
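The three steps above can be sketched as a small Python class. This is my own illustration of the shape of the idea, not Bryant's code; the class name `Aggregator` follows his term, but the field names and the three example aggregators are assumptions.

```python
from dataclasses import dataclass
from functools import reduce
from typing import Any, Callable


@dataclass
class Aggregator:
    prepare: Callable[[Any], Any]       # into the monoid's space
    combine: Callable[[Any, Any], Any]  # the monoid's operator
    zero: Any                           # the monoid's identity value
    present: Callable[[Any], Any]       # back out of the monoid's space

    def run(self, items):
        prepared = (self.prepare(item) for item in items)
        reduced = reduce(self.combine, prepared, self.zero)
        return self.present(reduced)


total = Aggregator(lambda n: n, lambda a, b: a + b, 0, lambda s: s)

average = Aggregator(
    lambda n: (n, 1),
    lambda a, b: (a[0] + b[0], a[1] + b[1]),
    (0, 0),
    lambda p: p[0] / p[1] if p[1] else None,
)

# Exact count of distinct values: singleton sets combined by union.
uniques = Aggregator(lambda x: frozenset([x]), lambda a, b: a | b,
                     frozenset(), len)

results = (total.run([1, 2, 4]),
           average.run([1, 2, 3, 10]),
           uniques.run("abracadabra"))
```

The `run` method never changes; only the four pieces plugged into it do. Here `results` is `(7, 4.0, 5)`.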

In practice, transforming the problem into the space of a monoid presents challenges in the implementation. For example, it is straightforward to compute the number of unique values in a collection of streams by transforming each item into a set of size one and then using set union as the operator. But union requires unbounded space, and this can be inconvenient when dealing with very large data sets.

One approach is to compute an estimated number of uniques using a hash function and some fancy arithmetic. We can make the expected error in the estimate smaller and smaller by using more and more hash functions. (I hope to write this up in simple code and blog about it soon.)
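As a rough illustration of the flavor of such an estimate, here is a Python sketch of a simple minimum-hash estimator. It is an assumption on my part, not necessarily the technique Bryant presented: each of several hash functions maps an item to a number in [0, 1), we keep only the smallest value seen per hash function, and we use the fact that the minimum of n uniform values has expected value 1/(n + 1). Salting SHA-256 with a seed stands in for "more hash functions"; all names are hypothetical.

```python
import hashlib


def unit_hash(item, seed):
    """Hash an item to a float in [0, 1); each seed acts as its own hash."""
    digest = hashlib.sha256(f"{seed}:{item}".encode()).digest()
    return int.from_bytes(digest[:8], "big") / 2**64


def zero(num_hashes):
    """Identity value: nothing seen, so every minimum is still 1.0."""
    return [1.0] * num_hashes


def prepare(item, num_hashes):
    return [unit_hash(item, seed) for seed in range(num_hashes)]


def combine(a, b):
    """Elementwise min: commutative, associative, and fixed in size."""
    return [min(x, y) for x, y in zip(a, b)]


def present(minima):
    """Estimate the number of distinct items from the average minimum."""
    mean_min = sum(minima) / len(minima)
    return 1.0 / mean_min - 1.0


def sketch(items, num_hashes=256):
    result = zero(num_hashes)
    for item in items:
        result = combine(result, prepare(item, num_hashes))
    return result


# Two overlapping streams that together hold 1000 distinct values.
merged = combine(sketch(range(0, 600)), sketch(range(400, 1000)))
estimate = present(merged)
```

The sketch uses the same fixed amount of space however many items arrive, duplicates never change it, and merging two sketches gives exactly the sketch of the combined stream. The price is that `estimate` is only approximately 1000.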

Bryant looked at one more problem, computing frequencies, and then closed with a few more terms from group theory: semigroup, group, and abelian group. Knowing these terms -- actually, simply knowing that they exist -- can be useful even for the most practical of practitioners. They let us know that there is more out there, should our problems become harder or our needs become larger.

That's a valuable lesson to learn, too. You can learn all about abelian groups in the trenches, but sometimes it's good to know that there may be some help out there in the form of theory. Reinventing wheels can be cool, but solving the problems you need solved is even cooler.

September 24, 2013 4:38 PM

StrangeLoop: Compilers, Compilers, Compilers

[My notes on StrangeLoop 2013: Table of Contents]

I went to a lot of talks about compilation. There seemed to be more this year than last, but perhaps I was suffering from a perception bias. I'm teaching compilers this semester and have been reading a bit about V8 and Crankshaft on the elliptical of late.

Many of the talks I saw revolved around a common theme: dynamic run-time systems. Given the prominence these days of JavaScript, Python, Ruby, Lua, and their like, it's not surprising that finding better ways to organize dynamic run-times and optimize their performance is receiving a lot of attention.

The problem of optimizing dynamic run-time systems is complicated by the wide range of tasks being performed dynamically: type checking, field access, function selection, and the most common whipping horse of my static-language friends, garbage collection. Throw in eval, which allows the execution of arbitrary code, possibly changing even the definition of core classes, and it's amazing that our dynamic languages can run in human time at all. That's a tribute to the people who have been creating compilers for us over the years.

As I listened to these talks, my ears were tuned to ideas and topics that I need to learn more about. That's what my notes captured best. Here are a few ideas that stood out.

The JavaScript interpreter, interpreted. Martha Girdler gave a quick, jargon-free tour of how JavaScript works, using JavaScript code to illustrate basic ideas like contexts. This sort of talk can help relatively inexperienced developers understand the common "pain points" of the language, such as variable hoisting.

Fast and Dynamic. Maxime Chevalier-Boisvert went a level deeper, tracing some of the fundamental ideas used to implement run-time systems from their historical roots in Lisp, Smalltalk, and Self up to research prototypes such as Chevalier-Boisvert's own Higgs compiler.

Many of the ideas are familiar to anyone who has had an undergrad compiler course, such as type tagging and microcoded instructions. Others are simple extensions of such ideas, such as inline caching, which is a workhorse in any dynamic compiler. Still others have entered popular discussion only recently. Maps, which are effectively hidden classes, originated in Self and are now being applied and extended in a number of interesting ways.

Two ideas from this talk that I would like to learn more about are hybrid type inference, which Chevalier-Boisvert mentioned in the context of Chrome and Firefox, and basic block versioning, a technique being explored in the Higgs compiler.

In closing, the speaker speculated on the better compilers of the future. Some of the advances will come from smarter CPUs, which might execute potential future paths in parallel, and more principled language design. But many will come from academic research that discovers new techniques and improves existing ones.

Some of the ideas of the future are probably already available and simply haven't caught on yet. Chevalier-Boisvert offered three candidates: direct manipulation of the AST, pattern matching, and the persistent image. I certainly hear a lot of people talking about the first of these, but I've yet to see a compelling implementation.

Ruby Doesn't Have to Be Slow. In this session, Alex Gaynor explained why dynamic languages don't have to be slow. Though Ruby was his working example, everything he said applies to JavaScript, Python, Lua, and other dynamic languages. He then talked about how he is putting these ideas to work in Topaz, a fast Ruby interpreter written in RPython. Topaz uses a number of advanced techniques, including a tracing JIT, type-specialized field look-up, maps, quasi-immutable fields, and escape analysis. It supports a subset of Ruby, though much of what is missing now is simply standard library classes and methods.

Two of the more interesting points of this talk for me were about meta-issues. First, he opened with an elaboration of the claim, "Ruby is slow", which he rightfully rejected as too imprecise to be meaningful. What people probably mean is something like, "Code written in Ruby executes CPU-bound tasks slower than other languages." I would add that, for many of my CS colleagues, the implicit benchmark is compiled C.

Further, Ruby users tend to respond to this claim poorly. Rather than refute it, they accept its premise and dance around its edges. Saddest, he says, is when they say, "If it turns out to matter, we can rewrite the program in some serious language." The compiler nerd in him says, "We can do this." Topaz is, in part, an attempt to support that claim.

Second, in response to an audience question, he claimed that people responsible for Java got something right fifteen years ago: they convinced people to abandon their C extensions. If the Ruby world followed suit, and moved away from external dependencies that restrict what the compiler and run-time system can know, then many performance improvements would follow.

Throughout this talk, I kept coming back to JRuby in my mind...

The Art of Finding Shortcuts. Vyacheslav "@mraleph" Egorov's talk was ostensibly about an optimizing compiler for Dart, but like most of the compiler talks this year, it presented ideas of value for handling any dynamic language. Indeed, this talk gave a clear introduction to what an optimizing compiler does, what in-line caching is, and different ways that the compiler might capitalize on them.

According to Egorov, writing an optimizing compiler for a language like Dart is the art of finding -- and taking -- shortcuts. The three key issues to address are representation, resolution, and redundancy. You deal with representation when you design your run-time system. The other two fall to the optimizing compiler.

Resolution is fundamentally a two-part question. Given the expression obj.prop,

- What is obj?

- Where is prop?

In-line caches eliminate redundancy by memoizing where/what pairs. The goal is to use the same hidden class maps to resolve property access whenever possible. Dart's optimizer uses in-line caching to give type feedback for use in improving the performance of loads and stores.
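To fix the idea of memoizing where/what pairs in my own head, here is a toy model in Python of a one-entry (monomorphic) in-line cache at a single obj.prop site. It is only a sketch of the general technique; it is not how Dart or any real VM implements it, and the class names `HiddenClass`, `Obj`, and `PropertySite` are invented for the example.

```python
class HiddenClass:
    """A "map": the layout shared by all objects with the same properties."""

    def __init__(self, slots):
        self.slots = slots  # property name -> index into an object's storage


class Obj:
    def __init__(self, hidden_class, values):
        self.hidden_class = hidden_class
        self.values = values


class PropertySite:
    """One obj.prop site in a program, with a one-entry in-line cache."""

    def __init__(self, prop):
        self.prop = prop
        self.cached_class = None  # the remembered answer to "what is obj?"
        self.cached_slot = None   # the remembered answer to "where is prop?"
        self.misses = 0

    def load(self, obj):
        if obj.hidden_class is self.cached_class:
            return obj.values[self.cached_slot]   # fast path: no lookup
        self.misses += 1
        slot = obj.hidden_class.slots[self.prop]  # slow path: full lookup
        self.cached_class, self.cached_slot = obj.hidden_class, slot
        return obj.values[slot]


point = HiddenClass({"x": 0, "y": 1})
points = [Obj(point, [i, i * 2]) for i in range(5)]

site = PropertySite("y")
loaded = [site.load(p) for p in points]  # one miss, then four hits
misses_same_shape = site.misses

other = Obj(HiddenClass({"y": 0}), [42])
loaded_other = site.load(other)          # different shape: a second miss
```

As long as every object reaching the site shares one hidden class, only the first load pays for the lookup. An object with a different shape forces a miss and replaces the cached pair.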

Egorov was one of the most quotable speakers I heard at StrangeLoop this year. In addition to "the art of finding shortcuts", I noted several other pithy sayings that I'll probably steal at some point, including:

- "If all you have is an in-line cache, then everything looks like an in-line cache stub."

- "In-lining is a Catch-22." You can't know if you will benefit from inlining unless you try, but trying (and undoing) is expensive.

Two ideas I plan to read more about after hearing this talk are allocation sinking and load forwarding.

~~~~

I have a lot of research to do now.

September 23, 2013 4:22 PM

StrangeLoop: This and That, Volume 1

[My notes on StrangeLoop 2013: Table of Contents]


I'm working on a post about the compiler talks I attended, but in the meantime here are a few stray thoughts, mostly from Day 1.

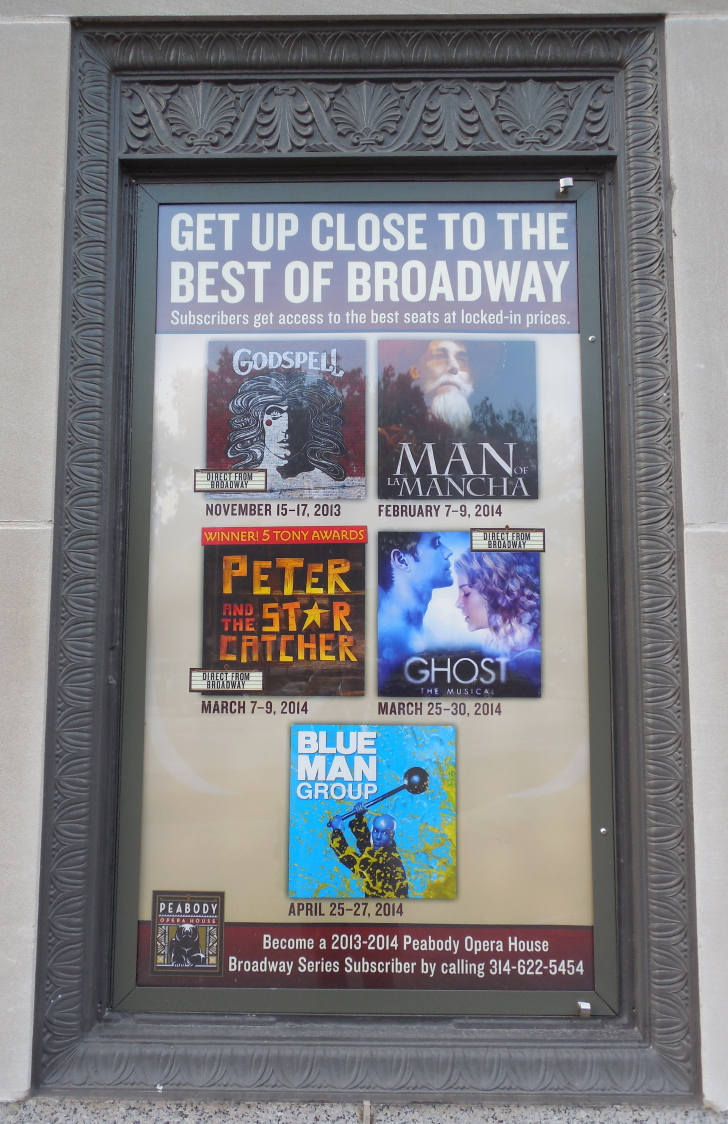

The Peabody Opera House really is a nice place to hold a conference of this size. If StrangeLoop were to get much larger, it might not fit.

I really don't like the word "architected".

The talks were scheduled pretty well. Only once in two days did I find myself really wanting to go to two talks at the same time. And only once did I hear myself thinking, "I don't want to hear any of these...".

My only real regret from Day 1 was missing Scott Vokes's talk on data compression. I enjoyed the talk I went to well enough, but I think I would have enjoyed this one more.

What a glorious time to be a programming language theory weenie. Industry practitioners are going to conferences and attending talks on dependent types, continuations, macros, immutable data structures, and functional reactive programming.

Moon Hooch? Interesting name, interesting sound.

September 22, 2013 3:51 PM

StrangeLoop: Jenny Finkel on Machine Learning at Prismatic

[My notes on StrangeLoop 2013: Table of Contents]

The conference opened with a talk by Jenny Finkel on the role machine learning plays at Prismatic, the customized newsfeed service. It was a good way to start the conference, as it introduced a few themes that would recur throughout, had a little technical detail but not too much, and reported a few lessons from the trenches.

Prismatic is trying to solve the discovery problem: finding content that users would like to read but otherwise would not see. This is more than simply a customized newsfeed from a singular journalistic source, because it draws from many sources, including other readers' links, and because it tries to surprise readers with articles that may not be explicitly indicated by their profiles.

The scale of the problem is large, but different from the scale of the raw data facing Twitter, Facebook, and the like. Finkel said that Prismatic is processing only about one million timely docs at a time, with the set of articles turning over roughly weekly. The company currently uses 5,000 categories to classify the articles, though that number will soon go up to the order of 250,000.

The complexity here comes from the cross product of readers, articles, and categories, along with all of the features used to try to tease out why readers like the things they do and don't like the others. On top of this are machine learning algorithms that are themselves exponentially expensive to run. And with articles turning over roughly weekly, they have to be amassing data, learning from it, and moving on constantly.

The main problem at the heart of a service like this is: What is relevant? Everywhere one turns in AI, one sees this question, or its more general cousin, Is this similar? In many ways, this is the problem at the heart of all intelligence, natural and artificial.

Prismatic's approach is straight from AI, too. They construct a feature vector for each user/article pair and then try to learn weights that, when applied to the values in a given vector, will rank desired articles high and undesired articles low. One of the key challenges when doing this kind of work is to choose the right features to use in the vector. Finkel mentioned a few used by Prismatic, including "Does the user follow this topic?", "How many times has the reader read an article from this publisher?", and "Does the article include a picture?"
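A minimal Python sketch of the scoring half of this idea, using the three features mentioned above. The weights and article data are made up for illustration, the learning step that would produce the weights is not shown, and nothing here reflects Prismatic's actual code.

```python
def score(weights, features):
    """Dot product of learned weights and one user/article feature vector."""
    return sum(w * x for w, x in zip(weights, features))


def rank(weights, candidates):
    """Order article names from highest score to lowest."""
    return sorted(candidates,
                  key=lambda name: score(weights, candidates[name]),
                  reverse=True)


# Feature order: follows topic? (0 or 1), number of articles read from
# this publisher, includes a picture? (0 or 1). All values hypothetical.
weights = [2.0, 0.5, 0.3]

candidates = {
    "article_a": [1, 0, 1],  # followed topic, new publisher, has picture
    "article_b": [0, 3, 0],  # unfollowed topic, familiar publisher
    "article_c": [0, 0, 1],  # only a picture going for it
}

ranking = rank(weights, candidates)
```

With these numbers the scores are 2.3, 1.5, and 0.3, so `ranking` is `["article_a", "article_b", "article_c"]`. The hard parts, choosing the features and learning the weights, live outside this sketch.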

With a complex algorithm, lots of data, and a need to constantly re-learn, Prismatic has to make adjustments and take shortcuts wherever possible in order to speed up the process. This is a common theme at a conference where many speakers are from industry. First, learn your theory and foundations; then learn the pragmatics and heuristics needed to turn basic techniques into the backbone of practical applications.

Finkel shared one pragmatic idea of this sort that Prismatic uses. They look for opportunities to fold user-specific feature weights into user-neutral features. This enables their program to compute many user-specific dot products using a static vector.

She closed the talk with five challenges that Prismatic has faced that other teams might be on the lookout for:

Bugs in the data. In one case, one program was updating a data set before another program could take a snapshot of the original. With the old data replaced by the new, they thought their ranker was doing better than it actually was. As Finkel said, this is pretty typical for an error in machine learning. The program doesn't crash; it just gives the wrong answer. Worse, you don't even have reason to suspect something is wrong in the offending code.

Presentation bias. Readers tend to look at more of the articles at the top of a list of suggestions, even if they would have enjoyed something further down the list. This is a feature of the human brain, not of computer programs. Any time we write programs that interact with people, we have to be aware of human psychology and its effects.

Non-representative subsets. When you are creating a program that ranks things, its whole purpose is to skew a set of user/article data points toward the subset of articles that the reader most wants to read. But this subset probably doesn't have the same distribution as the full set, which hampers your ability to use statistical analysis to draw valid conclusions.

Statistical bleeding. Sometimes, one algorithm looks better than it is because it benefits from the performance of another. Consider two ranking algorithms, one an "explorer" that seeks out new content and one an "exploiter" that recommends articles that have already been found to be popular. When we compare their performances, the exploiter will tend to look better than it is because it benefits from the successes of the explorer without being penalized for its failures. It is crucial to recognize when one feature you measure is not independent of another. (Thanks to Christian Murphy for the prompt!)

Simpson's Paradox. The iPhone and the web have different clickthrough rates. They once found themselves in a situation where one recommendation algorithm performed worse than another on both platforms, yet better overall. This can really disorient teams who follow up experiments by assessing the results. The issue here is usually a hidden variable that is confounding the results.

(I remember discussing this classic statistical illusion with a student in my early years of teaching, when we encountered a similar illusion in his grade. I am pretty sure that I enjoyed our discussion of the paradox more than he did...)
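The paradox is easy to reproduce with made-up numbers. In the Python sketch below, the algorithm names X and Y and all of the click counts are invented; they are not Prismatic's data. Each entry is (articles clicked, articles shown).

```python
from fractions import Fraction

# Hypothetical results: X was shown mostly on the web, where everyone
# clicks more; Y was shown mostly on the iPhone.
clicks = {
    "X": {"web": (300, 1000), "iphone": (10, 100)},
    "Y": {"web": (35, 100), "iphone": (150, 1000)},
}


def rate(algorithm, platform):
    clicked, shown = clicks[algorithm][platform]
    return Fraction(clicked, shown)


def overall(algorithm):
    clicked = sum(c for c, _ in clicks[algorithm].values())
    shown = sum(s for _, s in clicks[algorithm].values())
    return Fraction(clicked, shown)


x_worse_on_web = rate("X", "web") < rate("Y", "web")           # 30% vs 35%
x_worse_on_iphone = rate("X", "iphone") < rate("Y", "iphone")  # 10% vs 15%
x_better_overall = overall("X") > overall("Y")                 # 310 vs 185 of 1100
```

All three comparisons come out true. The hidden variable is the traffic mix: X saw most of its traffic on the platform where clickthrough is higher for everyone.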

This part of a talk is of great value to me. Hearing about another team's difficulties rarely helps me avoid the same problems in my own projects, but it often does help me recognize those problems when they occur and begin thinking about ways to work around them. This was a good way for me to start the conference.

September 22, 2013 10:27 AM

Back from StrangeLoop 2013


I'm back home from StrangeLoop 2013. It was, again, an outstanding conference: a great location, excellent amenities, fun side events, and -- most importantly -- a solid set of talks: diverse topics, strong technical content, and some very good speakers. Alex Miller and his team put on a good conference.

This year, I went to the talks old school: with a steno notebook and no technology but a camera. As a result, a couple of things are different about how I'm blogging the conference. First, I did not write or post any entries during the event itself. Second, my notes are a bit shorter than usual and will need to be typed up before they become blog entries. I'll write my thoughts up over the next week or so and post the entries as they emerge.

This entry will serve as a table of contents for my StrangeLoop posts, a home base for readers who might stumble onto one post and care to read more. For now, I'll list a few entries I expect to write, but I'll only know what belongs here after I have written them.

Primary entries:

- Jenny Finkel on Machine Learning at Prismatic

- Compilers, Compilers, Compilers

- Add All These Things, Avi Bryant's talk on data analytics and... monoids

- Rich Hickey on channels and program design

- Exercises in Programming Style, by Crista Lopes

- Martin Odersky on the trouble with types

- a bag of unrelated talks

Ancillary entries:

Is it too early to start looking forward to StrangeLoop 2014?

September 15, 2013 10:25 AM

I am Going to PLoP 2013. Yeah!


On Friday, an e-mail message was sent to a number of mailing lists inviting people to register for the 20th Conference on Pattern Languages of Programs, also known as PLoP 2013. In part, it said:

... this time a lot of old-timers will also participate, among them Ward Cunningham, Peter Sommerlad, Kyle Brown, Joshua Kerievsky, Eugene Wallingford, Jenny Quillien, Joe Yoder, Ralph Johnson, Richard Gabriel, Robert Hanmer, Rebecca Wirfs-Brock, Lise Hvatum, and Ademar Aguiar.

When I saw this, I felt as if I were a prop in a game of "One of these things is not like the others...". My name will surely raise a few "Huh?"s in a list that contains Ward Cunningham, Ralph Johnson, Richard Gabriel, Rebecca Wirfs-Brock, and a number of other well-known book authors. I am, however, willing to admit that I am now an old-timer!

Yes, I am going to PLoP again this year. After attending for ten straight years, 1996-2005, chairing the 2000 conference, serving on a number of program committees, and chairing a few ChiliPLoPs, school duties pulled me away. Becoming department head ate a lot of my time, and I found myself writing less. Also, in 2006, PLoP left Allerton Park and collocated with OOPSLA/SPLASH for a few years. That made going to PLoP a bigger time commitment for me than driving a few hours to the southeast.

This year, PLoP returns to Allerton Park, which is an awesome site for a conference: remote, relaxing, unusual, and stimulating. It is also a great place to run and ride.

I am excited to be going back to Allerton and to PLoP this year. I look forward to seeing so many of the old gang. I also look forward to making new friends among the software practitioners who are working hard to document the deep design and implementation knowledge of how to write programs. So much of this knowledge is created in the trenches, after we graduate from school and start making things under varied constraints. Fortunately, there are developers out there who are trying to write that knowledge down -- and write it well.

Sadly, I don't have a paper in a workshop this year. The writers' workshop is one of the key innovations brought to the software world by the patterns community, and I enjoy them.

I will be participating in some of the 20th anniversary events, I'm sure, as well as helping with a working group on pedagogical patterns. There has been a lot of interesting work going on in this space over the last few years, especially in Europe, under the leadership of Christian Köppe, Christian Kohls, and others. PLoP will give me a chance to meet the people doing this new work and to work with them.

So, count this patterns old-timer as excited about the chance to renew friendships with other old-timers and the chance to make new friendships with the new standard bearers. And about the chance to savor again the unique atmosphere of Allerton Park.

First, though, I head to StrangeLoop 2013 later this week. I am psyched.

September 10, 2013 3:40 PM

A Laugh at My Own Expense

This morning presented a short cautionary tale for me and my students, from a silly mistake I made in a procmail filter.

Back story: I found out recently that I am still subscribed to a Billy Joel fan discussion list from the 1990s. The list has been inactive for years, or I would have been filtering its messages to a separate mailbox. Someone has apparently hacked the list, as a few days ago it started spewing hundreds of spam messages a day.

I was on the road for a few days after the deluge began and was checking mail through a shell connection to the mail server. Because I was busy with my trip and checking mail infrequently, I just deleted the messages by hand. When I got back, Mail.app soon learned they were junk and filtered them away for me. But the spam was still hitting my inbox on the mail server, where I read my mail occasionally even on campus.

After a session on the server early this morning, I took a few minutes to procmail them away. Every message from the list has a common pattern in the Subject: line, so I copied it and pasted it into a new procmail recipe to send all list traffic to /dev/null :

:0

* ^Subject.*[billyjoel]

/dev/null

Do you see the problem? Of course you do.

I didn't at the time. My blindness probably resulted from a combination of the early hour, a rush to get over to the gym, and the tunnel vision that comes from focusing on a single case. It all looked obvious.

This mistake offers programming lessons at several different levels.

The first is at the detailed level of the regular expression. Pay attention to the characters in your regex -- all of them. Those brackets really are in the Subject: line, but by themselves mean something else in the regex. I need to escape them:

* ^Subject.*\[billyjoel\]

This relates to a more general piece of problem-solving advice. Step back from the individual case you are solving and think about the code you are writing more generally. Focused on the annoying messages from the list, the brackets are just characters in a stream. Looked at from the perspective of the file of procmail recipes, they are control characters.

The second is at the level of programming practice. Don't /dev/null something until you know it's junk. Much better to send the offending messages to a junk mbox first:

* ^Subject.*\[billyjoel\]

in.tmp.junk

Once I see that all and only the messages from the list are being matched by the pattern, I can change that line to send list traffic where it belongs. That's a specific example of the sort of defensive programming that we all should practice. Don't commit to solutions too soon.

This, too, relates to more general programming advice about software validation and verification. I should have exercised a few test cases to validate my recipe before turning it loose unsupervised on my live mail stream.
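The test cases I should have written would have taken a minute. Here is a sketch using Python's `re` module as a stand-in for procmail's matcher, which is an approximation: the two regex dialects are not identical, though both treat unescaped brackets as a character class, and procmail matches case-insensitively by default. The sample Subject: lines are invented.

```python
import re

# The pattern as I first wrote it, and the pattern as I meant it.
unescaped = re.compile(r"^Subject.*[billyjoel]", re.IGNORECASE)
escaped = re.compile(r"^Subject.*\[billyjoel\]", re.IGNORECASE)

headers = [
    "Subject: [billyjoel] Re: set list",  # list traffic
    "Subject: Lunch on Friday?",          # ordinary mail
    "Subject: 2013 budget",               # ordinary mail
]

caught_by_unescaped = [bool(unescaped.match(h)) for h in headers]
caught_by_escaped = [bool(escaped.match(h)) for h in headers]
```

The unescaped pattern matches all three headers, because `[billyjoel]` means "any one of the letters b, i, l, y, j, o, e". The escaped pattern matches only the first.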

I teach my students this mindset and program that way myself, at least most of the time. Of course, the time you most need test cases will be the time you don't write them.

The day provided a bit of irony to make the story even better. The topic of today's session in my compilers course? Writing regular expressions to describe the tokens in a language. So, after my mail admin colleague and I had a good laugh at my expense, I got to tell the story to my students, and they did, too.

Posted by Eugene Wallingford | Permalink | Categories: Computing, Software Development, Teaching and Learning

September 07, 2013 9:02 PM

Riding in Muncie


It's been three years since my most recent Running on the Road entry, from the Oxford, Ohio, area. Little did I know at the time it would be my last. I've started cycling in place of running, though until now when I've traveled I've either hit the hotel's fitness room or walked outdoors.

This is the first trip since Oxford that I have taken my exercise with me. I put a Thule bike rack on the trunk of my Taurus and brought my bike with me.

I'm back again in Muncie, which I reported in my second Running on the Road entry back in October 2004. This gave me a chance to revisit the Cardinal Greenway, an old favorite for marathon training. I've several times done 20-milers by running from the Wysor Depot north to Gaston and back. This time, with my bike, I headed south to see new ground. The result was a ride down past Losantville and 50 miles total. A good ride.

Biking when I travel is tougher than running. I need so much more equipment, and it takes so much more space to pack. Unless I can find ways to rent or borrow a bike at my destination, I will be limited to riding in places to which I can drive. But I'm willing to give it a try. If things go well on upcoming trips this fall to St. Louis and Allerton Park, Riding on the Road may become its own series of posts.

September 06, 2013 8:37 AM

Old Enough to Know

A trip to my alma mater for a reunion this weekend brings to mind these words from Roger Ebert:

There is a part of me that will forever want to be walking under autumn leaves, carrying a briefcase containing the works of Shakespeare and Yeats and a portable chess set. I will pass an old tree under which once on a summer night I lay on the grass with a fragrant young woman and we quoted e.e. cummings back and forth.

I was more likely carrying Keats than Yeats and quoting Voltaire than cummings, but the feeling's the same. There is something about the age as we enter adulthood that becomes permanent in us, more so than any other time. I'm old enough to know that these memories can't hurt a thing.

I sent one daughter off to college a couple of years ago and will send another next year. In this experience, I feel more like the wistful father who penned My Dear Son. Indeed, "every age has its gifts for the man who is willing to work for them and use them temperately".

Attributions. The Ebert passage comes from his review of the film Liberal Arts. (Ebert calls it "an almost unreasonable pleasure"; I agree.) John Mellencamp wrote the line "old enough to know...".

September 05, 2013 3:13 PM

Code Should Speak for Itself

Matt Welsh recently posted a blog entry on experiences rewriting a large system in Go and some of his thoughts about the language afterwards. One of the few shortcomings in his mind had to do with how Go's type inference made it hard for him to know the type of a variable. Sure, an IDE or other tool could help, but Welsh says:

I staunchly refuse to edit code with any tool that requires using a mouse.

That's mostly how I feel, too, though I'm more an emacs man. I use IDEs and appreciate what they can give. I used Eclipse a fair amount back when it was young, but it's gotten so big and complex these days that I shudder at the thought of starting it up. RubyMine gave me many pleasant moments when I used it for a while a couple of years ago.

When I use IDEs, I prefer simpler IDEs, such as Dr. Racket or even Dr. Java, to complex ones anyway. They don't generally provide as much support, but they do help. When not helping, they mostly stay out of the way while I am writing code.

For me, the key word in Welsh's refusal is "require". If I *need* a mouse or a lot of IDE support just to use a language, that's a sign that the code either isn't telling me everything it should, or there's too much to think about.

Code should speak for itself, and it should say only the things that the programmer needs to know.