March 30, 2015 3:33 PM

Reminiscing on the Effects of Photoshop

Thomas Knoll, one of the creators of Adobe Photoshop, reminisces on the insight that gave rise to the program. His brother, John, worked on analog image composition at Industrial Light and Magic, where they had just begun to experiment with digital processing.

[ILM] had a scanner that could scan in frames from a movie, digitally process them, and then write the images out to film again.

My brother saw that and had a revelation. He said, "If we convert the movie footage into numbers, and we can convert the numbers back into movie footage, then once it's in the numerical form we could do anything to it. We'd have complete power."

I bought my first copy of Photoshop in the summer of 1992, as part of my start-up package for new faculty. In addition to the hardware and software I needed to do my knowledge-based systems research, we also outfitted the lab with a number of other tools, including Aldus Persuasion, a LaCie digital scanner, OmniPage Pro software for OCR, Adobe Premiere, and Adobe Photoshop. I felt like I could do anything I wanted with text, images, and video. It was a great power.

In truth, I barely scratched the surface of what was possible. Others took Photoshop and went places that even Adobe didn't expect them to go. The Knoll brothers sensed what was possible, but it must have been quite something to watch professionals and amateurs alike use the program to reinvent our relationship with images. Here is Thomas Knoll again:

Photoshop has so many features that make it extremely versatile, and there are artists in the world who do things with it that are incredible. I suppose that's the nature of writing a versatile tool with some low-level features that you can combine with anything and everything else.

Digital representation opens new doors for manipulation. When you give users control at both the highest levels and the lowest, who knows what they will do. Stand back and wait.

March 24, 2015 3:40 PM

Some Thoughts on How to Teach Programming Better

In How Stephen King Teaches Writing, Jessica Lahey asks Stephen King why we should teach grammar:

Lahey: You write, "One either absorbs the grammatical principles of one's native language in conversation and in reading or one does not." If this is true, why teach grammar in school at all? Why bother to name the parts?

King: When we name the parts, we take away the mystery and turn writing into a problem that can be solved. I used to tell them that if you could put together a model car or assemble a piece of furniture from directions, you could write a sentence. Reading is the key, though. A kid who grows up hearing "It don't matter to me" can only learn doesn't if he/she reads it over and over again.

There are at least three nice ideas in King's answer.

- It is helpful to beginners when we can turn writing into a problem that can be solved. Making concrete things out of ideas in our head is hard. When we give students tools and techniques that help them to create basic sentences, paragraphs, and stories, we make the process of creating a bit more concrete and a bit less scary.

- A first step in this direction is to give names to the things and ideas students need to think about when writing. We don't want students to memorize the names for their own sake; that's a step in the wrong direction. We simply need to have words for talking about the things we need to talk about -- and think about.

- Reading is, as the old slogan tells us, fundamental. It helps to build knowledge of vocabulary, grammar, usage, and style in a way that the brain absorbs naturally. It creates habits of thought that are hard to undo later.

All of these are true of teaching programmers, too, in their own way.

- We need ways to demystify the process and give students concrete steps they can take when they encounter a new problem. The design recipe used in the How to Design Programs approach is a great example. Naming recipes and their steps makes them a part of the vocabulary teachers and students can use to make programming a repeatable, reliable process.

- I've often had great success by giving names to design and implementation patterns, and having those patterns become part of the vocabulary we use to discuss problems and solutions. I have a category for posts about patterns, and a fair number of those relate to teaching beginners. I wish there were more.

- Finally, while it may not be practical to have students read a hundred programs before writing their first, we should not underestimate the importance of students reading code in parallel with learning to write code. Reading lots of good examples is a great way for students to absorb ideas about how to write their own code. It also gives them the raw material they need to ask questions. I've long thought that Clancy's and Linn's work on case studies of programming deserves more attention.
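As a minimal sketch of what a named recipe can look like in practice, here is a small Python function written by following steps loosely modeled on the design recipe. The function names `area_of_disk` and `area_of_ring` and the step labels are illustrative assumptions, not taken from any particular course or from How to Design Programs itself.

```python
import math

# Step 1 (data): a radius is a non-negative float.

# Step 2 (signature and purpose): say what goes in, what comes out, and why.
def area_of_disk(radius: float) -> float:
    """Return the area of a disk with the given radius."""
    return math.pi * radius * radius


def area_of_ring(outer: float, inner: float) -> float:
    """Return the area of a ring with the given outer and inner radii."""
    # Step 4 (body): a ring is a large disk with a smaller disk removed.
    return area_of_disk(outer) - area_of_disk(inner)


# Step 3 (examples): worked out by hand before writing the bodies,
# then kept around as tests.
assert math.isclose(area_of_disk(1.0), math.pi)
assert math.isclose(area_of_ring(2.0, 1.0), 3 * math.pi)
```

Because each step has a name, a student who is stuck can say which step is the problem, which is usually a better question than "it doesn't work".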

Finding ways to integrate design recipes, patterns, and case studies is an area I'd like to explore more in my own teaching.

Posted by Eugene Wallingford | Permalink | Categories: Patterns, Software Development, Teaching and Learning

March 13, 2015 3:07 PM

Two Forms of Irrelevance

When companies become irrelevant to consumers.

From The Power of Marginal, by Paul Graham:

The big media companies shouldn't worry that people will post their copyrighted material on YouTube. They should worry that people will post their own stuff on YouTube, and audiences will watch that instead.

You mean Grey's Anatomy is still on the air? (Or, as today's teenagers say, "Grey's what?")

When people become irrelevant to intelligent machines.

From Outing A.I.: Beyond the Turing Test, by Benjamin Bratton:

I argue that we should abandon the conceit that a "true" Artificial Intelligence must care deeply about humanity -- us specifically -- as its focus and motivation. Perhaps what we really fear, even more than a Big Machine that wants to kill us, is one that sees us as irrelevant. Worse than being seen as an enemy is not being seen at all.

Our new computer overlords indeed. This calls for a different sort of preparation than studying lists of presidents and state capitals.

March 11, 2015 4:15 PM

If Design is Important, Do It All The Time

And you don't have to be in software. In Jonathan Ive and the Future of Apple, Ian Parker describes how the process of developing products at Apple has changed during Ive's tenure.

... design had been "a vertical stripe in the chain of events" in a product's delivery; at Apple, it became "a long horizontal stripe, where design is part of every conversation." This cleared a path for other designers.

By the time the iPhone launched, Ive had become "the hub of the wheel".

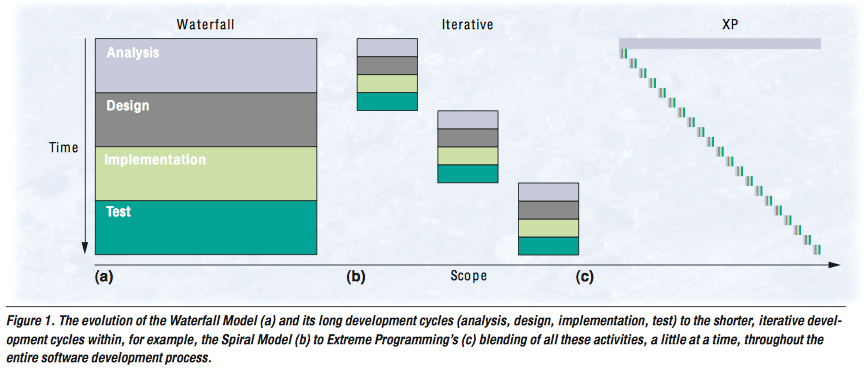

The vertical stripe/horizontal stripe image brought to mind Kent Beck's reimagining of the software development cycle in XP. I was thinking the image in my head came from Extreme Programming Explained, but the closest thing to my memory I can find is in his IEEE Computer article, Embracing Change with Extreme Programming:

My mental image has time on the x-axis, though, which meshes better with the vertical/horizontal metaphor of Robert Brunner, the designer quoted in the passage above.

If analysis is important, do it all the time. If design is important, do it all the time. If implementation is important, do it all the time. If testing is important, do it all the time.

Ive and his team have shown that there is value in making design an ongoing part of the process for developing hardware products, too, where "design is part of every conversation". This kind of thinking is not just for software any more.

March 10, 2015 4:45 PM

Learning to Program is a Loser's Game

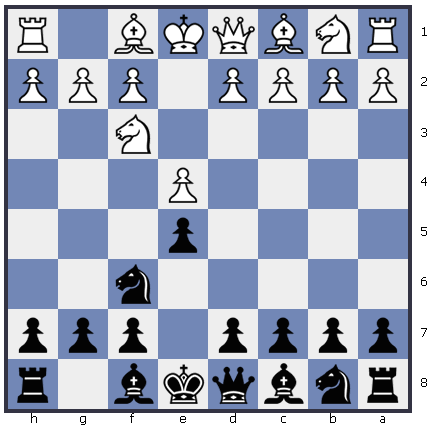

After a long break from playing chess, I recently played a few games at the local club. Playing a couple of games twice in the last two weeks has reminded me that I am very rusty. I've only made two horrible blunders in four games, but I have made many small mistakes, the kind of errors that accumulate over time and make a position hard to defend, even untenable. Having played better in years past, I find these inaccuracies irksome.

Still, I managed to win all four games. As I've watched games at the club, I've noticed that most games are won by the player who makes the second-to-last blunder. Most of the players are novices, and they trade mistakes: one player leaves his queen en prise; later, his opponent launches an underprepared attack that loses a rook; then the first player trades pieces and leaves himself with a terrible pawn structure -- and so on, the players trading weak or bad moves until the position is lost for one of them.

My secret thus far has been one part luck, one part simple strategy: winning by not losing.

This experience reminded me of a paper called The Loser's Game, which in 1975 suggested that it was no longer possible for a fund manager to beat market averages over time because most of the information needed to do well was available to everyone. To outperform the market average, a fund manager has to profit from mistakes made by other managers, sufficiently often and by a sufficient margin to sustain a long-term advantage. Charles Ellis, the author, contrasts this with the bull markets of the 1960s. Then, managers made profits based on the specific winning investments they made; in the future, though, the best a manager could hope for was not to make the mistakes that other investors would profit from. Fund management had transformed from being a Winner's Game to a Loser's Game.

Ellis drew his inspiration from another world, too. Simon Ramo had pointed out the differences between a Winner's Game and a Loser's Game in Extraordinary Tennis for the Ordinary Tennis Player. Professional tennis players, Ramo said, win based on the positive actions they take: unreturnable shots down the baseline, passing shots out of the reach of a player at the net, service aces, and so on. We duffers try to emulate our heroes and fail... We hit our deep shots just beyond the baseline, our passing shots just wide of the sideline, and our killer serves into the net. It turns out that mediocre players win based on the errors they don't make. They keep the ball in play, and eventually their opponents make a mistake and lose the point.

Ramo saw that tennis pros are playing a Winner's Game, and average players are playing a Loser's Game. These are fundamentally different games, which reward different mindsets and different strategies. Ellis saw the same thing in the investing world, but as part of a structural shift: what had once been a Winner's Game was now a Loser's Game, to the consternation of fund managers whose mindset is finding the stocks that will earn them big returns. The safer play now, Ellis says, is to minimize mistakes. (This is good news for us amateur investors!)

This is the same phenomenon I've been seeing at the chess club recently. The novices there are still playing a Loser's Game, where the greatest reward comes to those who make the fewest and smallest mistakes. That's not very exciting, especially for someone who fancies herself to be Adolf Anderssen or Mikhail Tal in search of an immortal game. The best way to win is to stay alive, making moves that are as sound as possible, and wait for the swashbuckler across the board from you to lose the game.

What does this have to do with learning to program? I think that, in many respects, learning to program is a Loser's Game. Even a seemingly beginner-friendly programming language such as Python has an exacting syntax compared to what beginners are used to. The semantics seem foreign, even opaque. It is easy to make a small mistake that chokes the compiler, which then spews an error message that overwhelms the new programmer. The student struggles to fix the error, only to find another error waiting somewhere else in the code. Or he introduces a new error while eliminating the old one, which makes even debugging seem scary. Over time, this can dishearten even the heartiest beginner.

What is the best way to succeed? As in all Loser's Games, the key is to make fewer mistakes: follow examples closely, pay careful attention to syntactic details, and otherwise not stray too far from what you are reading about and using in class. Another path to success is to make the mistakes smaller and less intimidating: take small steps, test the code frequently, and grow solutions rather than write them all at once. It is no accident that the latter sounds like XP and other agile methods; they help to guard us from the Loser's Game and enable us to make better moves.
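Here is a minimal sketch of what "take small steps, test the code frequently" might look like for a beginner. The function `count_words` and its examples are hypothetical, chosen only for illustration. The point is the order of the checks: each assertion was the next small step, added only after the previous one passed.

```python
def count_words(text: str) -> dict:
    """Count how often each word appears in text, ignoring case."""
    counts = {}
    for word in text.lower().split():
        counts[word] = counts.get(word, 0) + 1
    return counts


# Step 1: the smallest case that can possibly work.
assert count_words("") == {}

# Step 2: a single word.
assert count_words("chess") == {"chess": 1}

# Step 3: repeats and mixed case, tried only after steps 1 and 2 pass.
assert count_words("Chess chess club") == {"chess": 2, "club": 1}
```

If a step fails, the mistake is almost certainly in the few lines written since the last passing check, which keeps each error small and less intimidating.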

Just as playing the Loser's Game in tennis or investing calls for a different mindset, so, too, does learning to program. Some beginners seem to grok programming quickly and move on to designing and coding brilliantly, but most of us have to settle in for a period of discipline and growth. It may not be exciting to follow examples closely when we want to forge ahead quickly to big ideas, but the alternative is to take big shots and let the compiler win all the battles.

Unlike tennis and Ellis's view of stock investing, programming offers us hope: Nearly all of us can make the transition from the Loser's Game to the Winner's Game. We are not destined to forever play it safe. With practice and time, we can develop the discipline and skills necessary to make bold, winning moves. We just have to be patient and put time and energy into the process of becoming less mistake-prone. By adopting the mindset needed to succeed in a Loser's Game, we can eventually play the Winner's Game.

I'm not too sure about the phrases "Loser's Game" and "Winner's Game", but I think that this analogy can help novice programmers. I'm thinking of ways that I can use it to help my students survive until they can succeed.

Posted by Eugene Wallingford | Permalink | Categories: General, Software Development, Teaching and Learning

March 06, 2015 11:29 AM

A Brief Return to the 18th Century

I recently discovered that the students at my university have a chess club, so I stopped over yesterday to play a couple of games. In the first, my opponent played Philidor's Defense. In the second, I played Petrov's Defense. For a moment, I felt as if we had drifted in time to a Parisian cafe, circa 1770.

Then I looked up and saw a bank of TV screens surrounded by students who were drinking lattes and using cell phones to scroll through photos. I was back from my reverie.

March 04, 2015 3:28 PM

Code as a Form of Expression, Even Spreadsheets

Even formulas in spreadsheets, even back in the early 1980s:

Spreadsheet models have become a form of expression, and the very act of creating them seems to yield a pleasure unrelated to their utility. Unusual models are duplicated and passed around; these templates are sometimes used by other modelers and sometimes only admired for their elegance.

People love to make and share things. Computation has given us another medium in which to work, and the things people make with it are often very cool.

The above passage comes from Steven Levy's A Spreadsheet Way of Knowledge, which appeared originally in Harper's magazine in November 1984. He re-published it on Medium this week in belated honor of Spreadsheet Day last October 17, which was the 35th anniversary of VisiCalc, "the Apple II program that started it all". It's a great read, both as history and as a look at how new technologies create unexpected benefits and dangers.

March 02, 2015 4:14 PM

Knowing When We Don't Understand

Via a circuitous walk of web links, this morning I read an old piece called Two More Things to Unlearn from School, which opens:

I suspect the *most* dangerous habit of thought taught in schools is that even if you don't really understand something, you should parrot it back anyway. One of the most fundamental life skills is realizing when you are confused, and school actively destroys this ability -- teaches students that they "understand" when they can successfully answer questions on an exam, which is very very very far from absorbing the knowledge and making it a part of you. Students learn the habit that eating consists of putting food into mouth; the exams can't test for chewing or swallowing, and so they starve.

Schools don't teach this habit explicitly, but they allow it to develop and grow without check. This is one of the things that makes computer science hard for students. You can only get so far by parroting back answers you don't understand. Eventually, you have to write a program or prove an assertion, and all the memorization of facts in the world can't help you.

That said, though, I think students know very well when they don't understand something. Many of my students struggle with the things they don't understand. But, as Yudkowsky says, they face the time constraints of a course fitting into a fifteen-week window and of one course competing with others for their time. The habit they have developed over the years is to think that, in the end, not understanding is okay, or at least an acceptable outcome of the course. As long as they get the grade they need to move on, they'll have another chance to get it later. And maybe they won't ever need to understand this thing ever again...

One of the best things we can do for students is to ask them to make things and to discuss with them the things they made, and how they made them. This is a sort of intellectual work that requires a deeper understanding than merely factual. It also forces them to consider the choices and trade-offs that characterize real knowledge.