September 30, 2018 6:40 PM

Strange Loop 3: David Schmüdde and the Art of Misuse


This talk, the first of the afternoon on Day 1, opened with a familiar image: René Magritte's "this is not a pipe" painting, next to a picture of an actual pipe from some e-commerce site. Throughout the talk, speaker David Schmüdde returned to the distinction between thing and referent as he looked at the phenomenon of software users who used -- misused -- software to do something other than intended by the designer. The things they did were, or became, art.

First, a disclaimer: David is a former student of mine, now a friend, and one of my favorite people in the world. I still have in my music carousel a CD labeled "Schmudde Music!!" that he made for me just before he graduated and headed off to a master's program in music at Northwestern.

I often say in my conference reports that I can't do a talk justice in a blog entry, but it's even more true of a talk such as this one. Schmüdde demonstrated multiple works of art, both static and dynamic, which created a vibe that loses most of its zing when linearized in text. So I'll limit myself here to a few stray observations and impressions from the talk, hoping that you'll be intrigued enough to watch the video when it's posted.

Art is a technological endeavor. Rembrandt and hip hop don't exist without advances in art-making technology.

Misuse can be a form of creative experimentation. Check out Jodi, a website created in 1995 and still available. In the browser, it seems to be a work of ASCII art, but show the page source... (That's a lot harder these days than it was in 1995.) Now that is ASCII art.

Schmüdde talked about another work from the same era, entitled Rain. It used slowness -- of the network, of the browser -- as a feature. Old HTML (or was it a bug in an old version of Netscape Navigator?) allowed one HEAD tag in a file with multiple BODY tags. The artist created such a document that, when loaded in sequence, gave the appearance of rain falling in the browser. Misusing the tools under the conditions of the day enabled the artist to create an animation before animated GIFs, Flash, and other forms of animation existed.

The talk followed with examples and demos of other forms of software misuse, which could:

- find bugs in a system

- lead to new system features

- illuminate a system in ways not anticipated by the software's creator

Accidental misuse is life. We expect it. Intentional misuse is, or can be, art. It can surprise us.

What does art preservation look like for these works? The original hardware and software systems often are obsolete or, more likely, gone. To me, this is one of the great things about computers: we can simulate just about anything. Digital art preservation becomes a matter of simulating the systems or interactions that existed at the time the art was created. We are back to Magritte's pipe... This is not a work of art; it is a pointer to a work of art.

It is, of course, harder to recreate the experience of the art from the time it was created, but isn't this true of all art? Each of us experiences a work of art anew each time we encounter it. Our experience is never the same as the experience of those who were present when the work was first unveiled. It's often not even the same experience we ourselves had yesterday.

Schmüdde closed with a gentle plea to the technologists in the room to allow more art into their process. This is a new talk, and he was a little concerned about his ending. He may find a less abrupt way to end in the future, but to be honest, I thought what he did this time worked well enough for the day.

Even taking my friendship with the speaker into account, this was the talk of the conference for me. It blended software, users, technology, ideas, programming, art, the making of things, and exploring software at its margins. These ideas may appear at the margin, but they often lie at the core of the work. And even when they don't, they surprise us or delight us or make us think.

This talk was a solid example of what makes Strange Loop a great conference every year. There were a couple of other talks this year that gave me a similar vibe, for example, Hannah Davis's "Generating Music..." talk on Day 1 and Ashley Williams's "A Tale of Two asyncs" talk on Day 2. The conference delivers top-notch technical content but also invites speakers who use technology, and explore its development, in ways that go beyond what you find in most CS classrooms.

For me, Day One of the conference ended better than most: over a beer with David at Flannery's with good conversation, both about ideas from his talk and about old times, families, and the future. A good day.

September 30, 2018 10:31 AM

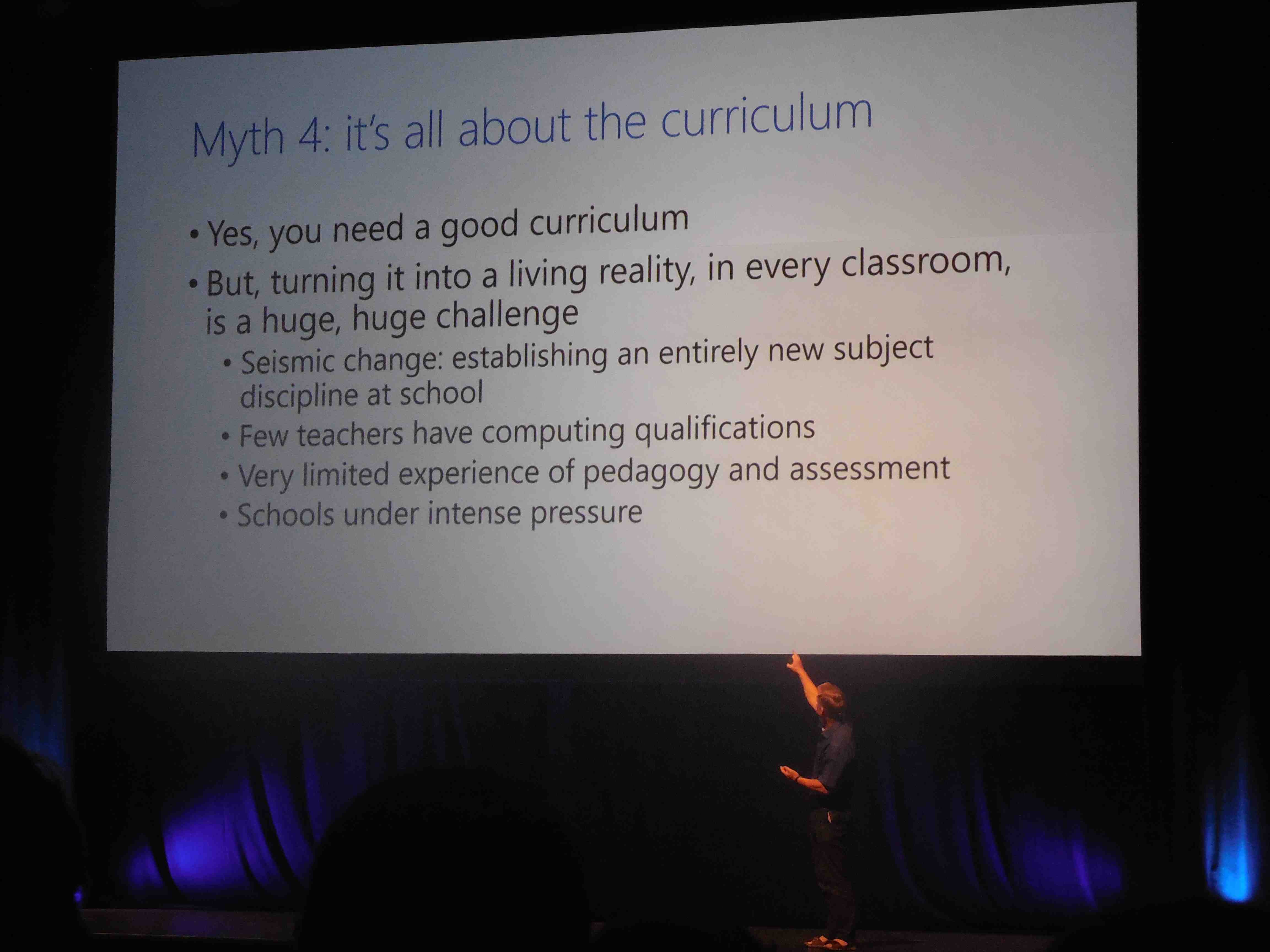

Strange Loop 2: Simon Peyton Jones on Teaching CS in the Schools


The opening keynote this year was by Simon Peyton Jones of Microsoft Research, well known in the programming languages community for Haskell and many other things. But his talk was about something considerably less academic: "Shaping Our Children's Education in Computing", a ten-year project to reform the teaching of computing in the UK primary and secondary schools. It was a wonderful talk, full of history, practical advice, lessons learned, and philosophy of computing. Rather than try to summarize everything Peyton Jones said, I will let you watch the video when it is posted (which will be as early as next week, I think).

I would, though, like to highlight one particular part of the talk, the way he describes computer science to a non-CS audience. This is an essential skill for anyone who wants to introduce CS to folks in education, government, and the wider community who often see CS as either hopelessly arcane or as nothing more than a technology or a set of tools.

Peyton Jones characterized computing as being about information, computation, and communication. For each, he shared one or two ways to discuss the idea with an educated but non-technical audience. For example:

- Information. Show two images, say the Mona Lisa and a line drawing of a five-pointed star. Ask which contains more information. How can we tell? How can we compare the amounts? How might we write that information down?

- Computation. Use a problem that everyone can relate to, such as planning a trip to visit all the US state capitals in the fewest miles or sorting a set of numbers. For the latter, he used one of the activities from CS Unplugged on sorting networks as an example.

- Communication. Here, Peyton Jones used the elegant and simple idea underlying the Diffie-Hellman algorithm for sharing a secret as his primary example. It is simple and elegant, yet it's not at all obvious to most people who don't already know it that the problem can be solved at all!
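To make the Diffie-Hellman idea concrete, here is a minimal Python sketch of the exchange. The tiny prime, the generator, and the two private values are illustrative assumptions chosen so the arithmetic can be checked by hand; they are not from the talk, and real systems use very large parameters and vetted libraries.

```python
# Toy Diffie-Hellman key exchange (illustrative values only; NOT secure).
P = 23  # public prime modulus (assumed, far too small for real use)
G = 5   # public generator (assumed)


def public_value(private_key: int) -> int:
    """Value a party can send in the open: G^private_key mod P."""
    return pow(G, private_key, P)


def shared_secret(their_public: int, my_private: int) -> int:
    """Secret computed from the other party's public value and my own key."""
    return pow(their_public, my_private, P)


alice_private, bob_private = 4, 3  # hypothetical secrets, never transmitted
alice_public = public_value(alice_private)
bob_public = public_value(bob_private)

# Each side combines the other's public value with its own private key.
alice_secret = shared_secret(bob_public, alice_private)
bob_secret = shared_secret(alice_public, bob_private)
print(alice_secret == bob_secret)
```

Only the two public values cross the wire, yet both parties arrive at the same number. An eavesdropper who sees the public values would have to recover one of the private exponents, which is believed to be infeasible at realistic sizes.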

In all three cases, it helps greatly to use examples from many disciplines and to ask questions that encourage the audience to ask their own questions, form their own hypotheses, and create their own experiments. The best examples and questions actually enable people to engage with computing through their own curiosity and inquisitiveness. We are fascinated by computing; other people can be, too.

There is a huge push in the US these days for everyone to learn how to program. This creates a tension among many of us computer scientists, who know that programming isn't everything that we do and that its details can obscure CS as much as they illuminate it. I thought that Peyton Jones used a very nice analogy to express the relationship between programming and CS more broadly: Programming is to computer science as lab work is to physics. Yes, you could probably take lab work out of physics and still have physics, but doing so would eviscerate the discipline. It would also take away a lot of what draws people to the discipline. So it is with programming and computer science. But we have to walk a thin line, because programming is seductive and can ultimately distract us from the ideas that make programming so valuable in the first place.

Finally, I liked Peyton Jones's simple summary of the reasons that everyone should learn a little computer science:

- Everyone should be able to create digital media, not just consume it.

- Everyone should be able to understand their tools, not just use them.

- People should know that technology is not magic.

Oh, and yes, a few people will get jobs that use programming skills and computing knowledge. People in government and business love to hear that part.

Regular readers of this blog know that I am a sucker for aphorisms. Peyton Jones dropped a few on us, most earnestly when encouraging his audience to participate in the arduous task of introducing and reforming the teaching of CS in the schools:

- "If you wait for policy to change, you'll just grow old. Get on with it."

- "There is no 'them'. There is only us."

It's easy to admire great researchers who have invested so much time and energy into solving real-world problems, especially in our schools. As long as this post is, it covers only a few minutes from the middle of the talk. My selection and bare-bones outline don't do justice to Peyton Jones's presentation or his message. Go watch the talk when the video goes up. It was a great way to start Strange Loop.

September 29, 2018 6:19 PM

Strange Loop 1: Day One


Last Wednesday morning, I hopped in my car and headed south to Strange Loop 2018. It had been a few years since I'd listened to Zen and the Art of Motorcycle Maintenance on a conference drive, so I popped it into the tapedeck (!) once I got out of town and fell into the story. My top-level goal while listening to Zen was similar to my top-level goal for attending Strange Loop this year: to experience it at a high level; not to get bogged down in so many details that I lost sight of the bigger messages. Even so, though, a few quotes stuck in my mind from the drive down. The first is an old friend, one of my favorite lines from all of literature:

Assembly of Japanese bicycle require great peace of mind.

The other was the intellectual breakthrough that unified Phaedrus's philosophy:

Quality is not an object; it is an event.

This idea has been on my mind in recent months. It seemed a fitting theme, too, for Strange Loop.

On the first day of the conference, I saw mostly a mixture of compiler talks and art talks, including:

• @mraleph's "Six Years of Dart", in which he reminisced on the evolution of the language, its ecosystem, and its JIT. I took at least one cool idea from this talk. When he compared the performance of two JITs, he gave a histogram comparing their relative performances, rather than an average improvement. A new system often does better on some programs and worse on others. An average not only loses information; it may mislead.

• Jason Dagit's "Your Secrets are Safe with Julia", about a system that explores the use of homomorphic encryption to compile secure programs. In this context, the key element of security is privacy. As Dagit pointed out, "trust is not transitive", which is especially important when it comes to sharing a person's health data.

• I just loved Hannah Davis's talk on "Generating Music From Emotion". She taught me about data sonification and its various forms. She also demonstrated some of her attempts to tease multiple dimensions of human emotion out of large datasets and to use these dimensions to generate music that reflects the data's meaning. Very cool stuff. She also showed the short video Dragon Baby, which made me laugh out loud.

• I also really enjoyed "Hackett: A Metaprogrammable Haskell", by Alexis King. I've read about this project on the Racket mailing list for a few years and have long admired King's ability in posts there to present complex ideas clearly and logically. This talk did a great job of explaining that Haskell deserves a powerful macro system like Racket's, that Racket's macro system deserves a powerful type system like Haskell's, and that integrating the two is more challenging than simply adding a stage to the compiler pipeline.
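The point from the Dart talk about histograms versus averages is easy to see with a small sketch. The speedup ratios below are invented for illustration and do not come from the talk; the bucket width is likewise an arbitrary choice.

```python
from collections import Counter
from statistics import mean

# Hypothetical speedup ratios (old time / new time), one per benchmark.
# Values above 1.0 mean the new JIT is faster; below 1.0, slower.
speedups = [1.8, 1.6, 1.5, 0.7, 0.6, 1.9, 1.7, 0.8]


def bucket(ratio: float, width: float = 0.5) -> float:
    """Lower edge of the histogram bucket that contains ratio."""
    return int(ratio / width) * width


average = mean(speedups)
histogram = Counter(bucket(r) for r in speedups)
regressions = sum(1 for r in speedups if r < 1.0)

print(f"average speedup: {average:.2f}x")
for edge in sorted(histogram):
    print(f"{edge:.1f}-{edge + 0.5:.1f}x: {'#' * histogram[edge]}")
print(f"benchmarks that got slower: {regressions}")
```

The average alone suggests a uniform improvement, while the histogram shows a cluster of programs that run slower under the new system.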

I saw two other talks the first day:

- the opening keynote address by Simon Peyton Jones, "Shaping Our Children's Education in Computing"

- David Schmüdde, "Misuser"

I had almost forgotten how many different kinds of cool ideas I can encounter in a single day at Strange Loop. Thursday was a perfect reminder.

September 20, 2018 4:44 PM

Special Numbers in a Simple Language

This fall I am again teaching our course in compiler development. Working in teams of two or three, students will implement from scratch a complete compiler for a simple functional language that consists of little more than integers, booleans, an if statement, and recursive functions. Such a language isn't suitable for much, but it works great for writing programs that do simple arithmetic and number theory. In the past, I likened it to an integer assembly language. This semester, my students are compiling a Pascal-like language of this sort that I call Flair.

If you've read my blog much in the falls over the last decade or so, you may recall that I love to write code in the languages for which my students write their compilers. It makes the language seem more real to them and to me, gives us all more opportunities to master the language, and gives us interesting test cases for their scanners, parsers, type checkers, and code generators. In recent years I've blogged about some of my explorations in these languages, including programs to compute Farey numbers and excellent numbers, as well as trying to solve one of my daughter's AP calculus problems.

When I run into a problem, I usually get an itch to write a program, and in the fall I want to write it in my students' language.

Yesterday, I began writing my first new Flair program of the semester. I ran across this tweet from James Tanton, which starts:

N is "special" if, in binary, N has a 1s and b 0s and a & b are each factors of N (so non-zero).

So, 10 is special because:

- In binary, 10 is 1010.

- 1010 contains two 1s and two 0s.

- Two is a factor of 10.

9 is not special because its binary rep also contains two 1s and two 0s, but two is not a factor of 9. 3 is not special because its binary rep has no 0s at all.
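As a quick check of the definition, here is a short Python sketch. It is not the Flair program, just a direct transcription of the tweet's rule, using Python's built-in `bin` and string counting, which Flair does not have.

```python
def is_special(n: int) -> bool:
    """True if the counts of 1s and 0s in n's binary form both divide n."""
    bits = bin(n)[2:]  # strip the "0b" prefix, e.g. 10 -> "1010"
    ones = bits.count("1")
    zeros = bits.count("0")
    # Zero cannot be a factor, so both counts must be non-zero.
    return ones > 0 and zeros > 0 and n % ones == 0 and n % zeros == 0


for n in (10, 9, 3):
    print(n, bin(n)[2:], is_special(n))
```

This agrees with the three cases above: 10 passes, 9 fails the divisibility test, and 3 fails because its binary form has no 0s.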

My first thought upon seeing this tweet was, "I can write a Flair program to determine if a number is special." And that is what I started to do.

Flair doesn't have loops, so I usually start every new program by mapping out the functions I will need simply to implement the definition. This makes sure that I don't spend much time implementing loops that I don't need. I ended up writing headers and default bodies for three utility functions:

- convert a decimal number to binary

- count the number of times a particular digit occurs in a number

- determine if a number x divides evenly into a number n

With these helpers, I was ready to apply the definition of specialness:

return divides(count(1, to_binary(n)), n)
   and divides(count(0, to_binary(n)), n)

Calling to_binary on the same argument is wasteful, but Flair doesn't have local variables, either. So I added one more helper to implement the design pattern "Function Call as Variable Assignment", apply_definition:

function apply_definition(binary_n : integer, n : integer) : boolean

and called it from the program's main:

return apply_definition(to_binary(n), n)

This is only the beginning. I still have a lot of work to do to implement to_binary, count and divides, using recursive function calls to simulate loops. This is another essential design pattern in Flair-like languages.
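One way the helpers might look when written in the same restricted style (integers and booleans only, no loops, no local variables) is sketched below in Python. The function bodies are assumptions for illustration, not the Flair code from this post, and they lean on Python's `//` and `%` operators, which Flair may or may not provide.

```python
def to_binary(n: int) -> int:
    """Binary digits of n packed into a decimal integer, e.g. 10 -> 1010."""
    return n if n < 2 else to_binary(n // 2) * 10 + n % 2


def count(digit: int, number: int) -> int:
    """How many times digit occurs among the decimal digits of number."""
    return (1 if number % 10 == digit else 0) + (
        0 if number < 10 else count(digit, number // 10)
    )


def divides(x: int, n: int) -> bool:
    """True if x is a non-zero factor of n."""
    return x != 0 and n % x == 0


def apply_definition(binary_n: int, n: int) -> bool:
    # binary_n arrives as a parameter so to_binary runs once per number;
    # the divisibility tests are against the decimal n, not binary_n.
    return divides(count(1, binary_n), n) and divides(count(0, binary_n), n)


def main(n: int) -> bool:
    return apply_definition(to_binary(n), n)


print(main(10), main(9), main(3))
```

Each recursive call peels off one digit, which is the job a loop body would do in a language that had loops.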

As I prepared to discuss my new program in class today, I found a bug: My divides test was checking for factors of binary_n, not the decimal n. I also renamed a function and one of its parameters. Explaining my programs to students, a generalization of rubber duck debugging, often helps me see ways to make a program better. That's one of the reasons I like to teach.

Today I asked my students to please write me a Flair compiler so that I can run my program. The course is officially underway.

September 13, 2018 3:50 PM

Legacy

In an interview at The Great Discontent, designer John Gall is asked, "What kind of legacy do you hope to leave?" He replies:

I have no idea; it's not something I think about. It's the thing one has the least control over. I just hope that my kids will have nice things to say about me.

I admire this answer.

No one is likely to ask me about my legacy; I'm just an ordinary guy. But it has always seemed strange when people -- presidents, artists, writers, film stars -- are asked this question. The idea that we can or should craft our own legacy like a marketing brand seems venal. We should do things because they matter, because they are worth doing, because they make the world better, or at least better than it would be without us. It also seems like a waste of time. The simple fact is that most of us won't be remembered long beyond our deaths, and only then by close family members and friends. Even presidents, artists, writers, and film stars are mostly forgotten.

To the extent that anyone will have a legacy, it will be decided in the future by others. As Gall notes, we don't have much control over how that will turn out. History is full of people whose place in the public memory turned out much differently than anyone might have guessed at the time.

When I am concerned that I'm not using my time well, it's not because I am thinking of my legacy. It's because I know that time is a precious and limited resource and I feel guilty for wasting it.

About the most any of us can hope is that our actions in this life leave a little seed of improvement in the world after we are gone. Maybe my daughters and former students and friends can make the world better in part because of something in the way I lived. If that's what people mean by their legacy, great, but it's likely to be a pretty nebulous effect. Not many of us can be Einstein or Shakespeare.

All that said, I do hope my daughters have good things to say about me, now and after I'm gone. I love them, and like them a lot. I want to make their lives happier. Being remembered well by them might also indicate that I put my time on Earth to good use.

September 05, 2018 3:58 PM

Learning by Copying the Textbook

Or: How to Learn Physics, Professional Golfer Edition

Bryson DeChambeau is a professional golfer, in the news recently for consecutive wins in the FedExCup playoff series. But he can also claim an unusual distinction as a student of physics:

In high school, he rewrote his physics textbook.

DeChambeau borrowed the textbook from the library and wrote down everything from the 180-page book into a three-ring binder. He explains: "My parents could have bought one for me, but they had done so much for me in golf that I didn't want to bother them in asking for a $200 book. ... By writing it down myself I was able to understand things on a whole comprehensive level."

I imagine that copying texts word-for-word was a more common learning strategy back when books were harder to come by, and perhaps it will become more common again as textbook prices rise and rise. There is certainly something to be said for it. Writing by hand takes time, and all the while our brains can absorb terms, make connections among concepts, and process the material into long-term memory. Zed Shaw argues for this as a great way to learn computer programming, implementing it as a pedagogical strategy in his "Learn <x> the Hard Way" series of books. (See Learn Python the Hard Way as an example.)

I don't think I've ever copied a textbook word-for-word, and I never copied computer programs from "Byte" magazine, but I do have similar experiences in note taking. I took elaborate notes all through high school, college, and grad school. In grad school, I usually rewrote all of my class notes -- by hand; no home PC -- as I reviewed them in the day or two after class. My clean, rewritten notes had other benefits, too. In a graduate graph algorithms course, they drew the attention of a classmate who became one of my best friends and were part of what attracted the attention of the course's professor, who asked me to consider joining his research group. (I was tempted... Graph algorithms was one of my favorite courses and research areas!)

I'm not sure many students these days benefit from this low-tech strategy. Most students who take detailed notes in my course seem to type rather than write which, if what I've read is correct, has fewer cognitive advantages. But at least those students are engaging with the material consciously. So few students seem to take detailed notes at all these days, and that's a shame. Without notes, it is harder to review ideas, to remember what they found challenging or puzzling in the moment, and to rehearse what they encounter in class into their long-term memories. Then again, maybe I'm just having a "kids these days" moment.

Anyway, I applaud DeChambeau for saving his parents a few dollars and for the achievement of copying an entire physics text. He even realized, perhaps after the fact, that it was an excellent learning strategy.

(The above passage is from The 11 Most Unusual Things About Bryson DeChambeau. He sounds like an interesting guy.)

September 03, 2018 7:24 AM

Lay a Split of Good Oak on the Andirons

There are two spiritual dangers in not owning a farm. One is the danger of supposing that breakfast comes from the grocer, and the other that heat comes from the furnace.

The remedy for the first, according to Aldo Leopold, is to grow a garden, preferably in a place without the temptation and distraction of a grocery store. The remedy for the second is to "lay a split of good oak on the andirons" and let it warm your body "while a February blizzard tosses the trees outside".

I ran across Leopold's The Sand County Almanac in the local nature center late this summer. After thumbing through the pages during a break in a day-long meeting indoors, I added it to my long list of books to read. My reading list is actually a stack, so there was some hope that I might get to it soon -- and some danger that it would be buried before I did.

Then an old high school friend, propagating a meme on Facebook, posted a picture of the book and wrote that it had changed his life, changed how he looked at the world. That caught my attention, so I anchored it atop my stack and checked a copy out of the university library.

It now serves as a quiet read for this city boy on a dark and rainy three-day weekend. There are no February blizzards here yet, of course, but autumn storms have lingered for days. In an important sense, I'm not a "city boy", as my big-city friends will tell me, but I've lived my life mostly sheltered from the reality of growing my own food and heating my home by a wonderful and complex economy of specialized labor that benefits us all. It's good to be reminded sometimes of that good fortune, and also to luxuriate in the idea of experiencing a different kind of life, even if only for a while.