February 29, 2024 3:45 PM

Finding the Torture You're Comfortable With

At some point last week, I found myself pointed to this short YouTube video of Jerry Seinfeld talking with Howard Stern about work habits. Seinfeld told Stern that he was essentially always thinking about making comedy. Whatever situation he found himself in, even with family and friends, he was thinking about how he could mine it for new material. Stern told him that sounded like torture. Jerry said, yes, it was, but...

Your blessing in life is when you find the torture you're comfortable with.

This is something I talk about with students a lot.

Sometimes it's a current student who is worried that CS isn't for them because too often the work seems hard, or boring. Shouldn't it be easy, or at least fun?

Sometimes it's a prospective student, maybe a high school student on a university visit or a college student thinking about changing their major. They worry that they haven't found an area of study that makes them happy all the time. Other people tell them, "If you love what you do, you'll never work a day in your life." Why can't I find that?

I tell them all that I love what I do -- studying, teaching, and writing about computer science -- and even so, some days feel like work.

I don't use torture as an analogy the way Seinfeld does, but I certainly know what he means. Instead, I usually think of this phenomenon in terms of drudgery: all the grunt work that comes with setting up tools, and fiddling with test cases, and formatting documentation, and ... the list goes on. Sometimes we can automate one bit of drudgery, but around the corner awaits another.

And yet we persist. We have found the drudgery we are comfortable with, the grunt work we are willing to do so that we can be part of the thing it serves: creating something new, or understanding one little corner of the world better.

I experienced the disconnect between the torture I was comfortable with and the torture that drove me away during my first year in college. As I've mentioned here a few times, most recently in my post on Niklaus Wirth, from an early age I had wanted to become an architect (the kind who design houses and other buildings, not software). I spent years reading about architecture and learning about the profession. I even took two drafting courses in high school, including one in which we designed a house and did a full set of plans, with cross-sections of walls and eaves.

Then I got to college and found two things. One, I still liked architecture in the same way as I always had. Two, I most assuredly did not enjoy the kind of grunt work that architecture students had to do, nor did I relish the torture that came with not seeing a path to a solution for a thorny design problem.

That was so different from the feeling I had writing BASIC programs. I would gladly bang my head on the wall for hours to get the tiniest detail just the way I wanted it, either in the code or in the output. When the torture ended, the resulting program made all the pain worth it. Then I'd tackle a new problem, and it started again.

Many of the students I talk with don't yet know this feeling. Even so, it comforts some of them to know that they don't have to find The One Perfect Major that makes all their boredom go away.

However, a few others understand immediately. They are often the ones who learned to play a musical instrument or who ran cross country. The pianists remember all the boring finger exercises they had to do; the runners remember all the wind sprints and all the long, boring miles they ran to build their base. These students stuck with the boredom and worked through the pain because they wanted to get to the other side, where satisfaction and joy are.

Like Seinfeld, I am lucky that I found the torture I am comfortable with. It has made this life a good one. I hope everyone finds theirs.

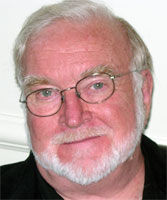

Posted by Eugene Wallingford | Permalink | Categories: Computing, Personal, Running, Software Development, Teaching and Learning

January 27, 2024 7:10 PM

Today in "It's not the objects; it's the messages"

Alan Kay is fond of saying that object-oriented programming is not about the objects; it's about the messages. He also looks to the biological world for models of how to think about and write computer programs.

This morning I read two things on the exercise bike that brought these ideas to mind, one from the animal kingdom and one from the human sphere.

First was a surprising little article on how an invasive ant species is making it harder for Kenyan lions to hunt zebras, with elephants playing a pivotal role in the story, too. One of the scientists behind the study said:

"We often talk about conservation in the context of species. But it's the interactions which are the glue that holds the entire system together."

It's not just the animals. It's the interactions.

Then came @jessitron reflecting on what it means to be "the best":

And then I remembered: people are people through other people. Meaning comes from between us, not within us.

It's not just the people. It's the interactions.

Both articles highlighted that we are usually better served by thinking about the interactions within a system, and not simply its components. That way lies a more reliable approach to building robust software. Alan Kay is probably somewhere nodding his head.

The ideas in Jessitron's piece fit nicely into the software analogy, but they mean even more in the world of people that she is reflecting on. It's easy for each of us to fall into the habit of walking around the world as an I and never quite feeling whole. Wholeness comes from connection to others. I occasionally have to remind myself to step back and see my day in terms of the students and faculty I've interacted with, whom I have helped and who have helped me.

It's not (just) the people. It's the interactions.

November 24, 2023 12:17 PM

And Then Came JavaScript

It's been a long time again between posts. That seems to be the new normal. On top of that, though, teaching web development this fall for the first time has been soaking up all of my free hours in the evenings and over the weekends, which has left me little time to be sad about not blogging. I've certainly been writing plenty of text and a bit of code.

The course started off with a lot of positive energy. The students were excited to learn HTML and CSS. I made a few mistakes in how I organized and presented topics, but things went pretty well. By all accounts, students seemed to enjoy what they were learning and doing.

And then came JavaScript.

Well, along came real computer programming. It could have been Python or some other language, I think, but the transition to writing programs, even short bits of code, took the wind out of the students' excitement.

I was prepared for the possibility that the mood of the course would change when we shifted from CSS to JavaScript. A previous offering of the course had encountered a similar obstacle. Learning to program is a challenge. I'm still not convinced that learning to program is that much harder than a lot of things people learn to do, but it does take time.

As I prepared for the course last summer, I saw so many different approaches to teaching JavaScript for web development. Many assumed a lot of HTML/CSS/DOM background, certainly more than my students had picked up in six weeks. Others assumed programming experience most of my students didn't have, even when the approach said 'no experience necessary!'. So I had to find a middle path.

My main source of inspiration in the first half of the course was David Humphrey's WEB 222 course, which was explicitly aimed at an audience of CS students with programming experience. So I knew that I had to do something different with my students, even as I used his wonderful course materials whenever I could.

My department had offered this course once many years ago, aimed at much the same kind of audience as mine, and the instructor — a good friend — shared all of his materials. I used that offering as a primary source of ideas for getting started with JavaScript, and I occasionally adapted examples for use in my class.

The results were not ideal. Students don't seem to have enjoyed this part of the course much at all. Some acknowledged that to me directly. Even the most engaged students seemed to lose a bit of their energy for the course. Performance also sagged. Based on homework solutions and a short exam, I would say that only one student has achieved the outcomes I had originally outlined for this unit.

I either expected too much or did not do a good enough job helping students get to where I wanted them to be.

I have to do better next time.

But how?

Programming isn't as hard as some people tell us, but most of us can't learn to do it in five or six weeks, at least not enough to become very productive. We don't expect students to master all of CSS or even HTML in such a short time, so we can't expect them to master JavaScript either. The difference is that there seems to be a smooth on-ramp for learning HTML and CSS on the way to mastery, while JavaScript (or any other programming language) presents a steep climb, with occasional plateaus.

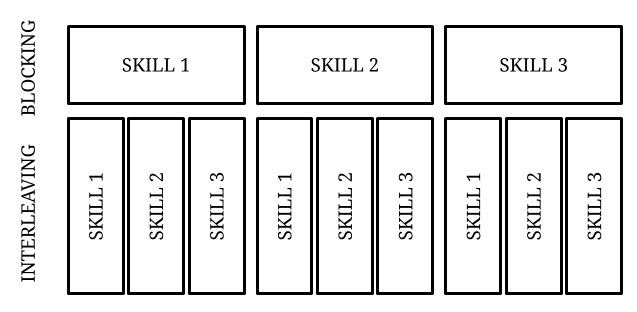

For now, I am thinking that the key to doing better is to focus on an even narrower set of concepts and skills.

If people starting from scratch can't learn all of JavaScript in five or six weeks, or even enough to be super-productive, what useful skills can they learn in that time? For this course I considerably trimmed the set of topics we might cover in an intro CS course, but I think I need to trim even more and, more importantly, choose topics and examples that are even more embedded in the act of web development.

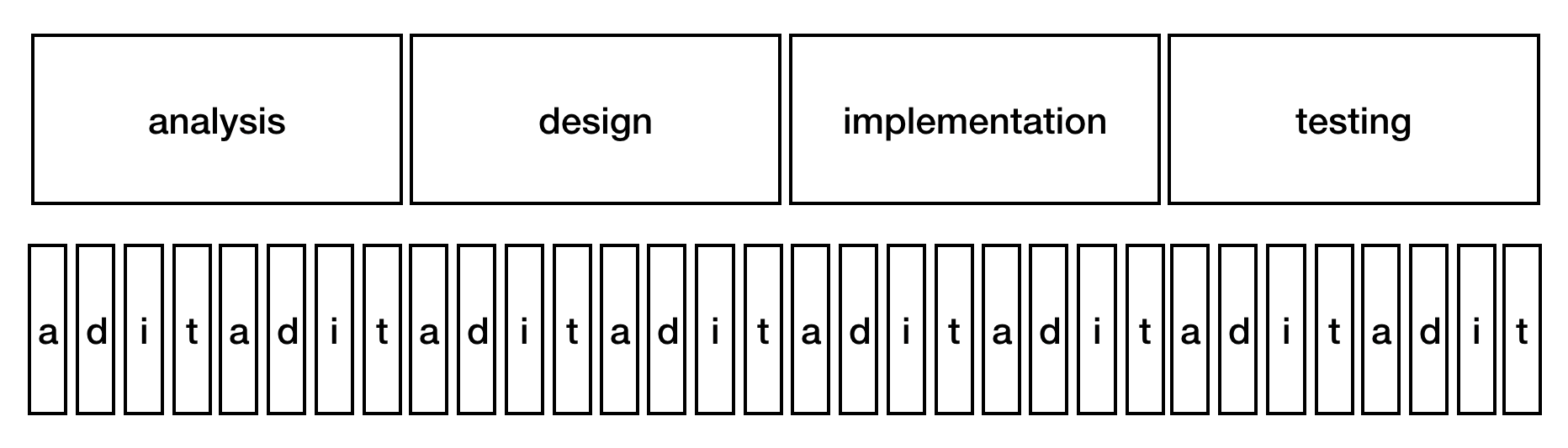

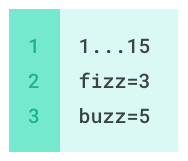

Earlier this week, a sudden burst of thought outlined something like this:

- document.querySelector() to select an element in a page

- simple assignment statements to modify innerText, innerHTML, and various style attributes

- parameterizing changes to an element to create a function

- document.querySelectorAll() to select collections of elements in a page

- forEach to process every element in a collection

- guarded actions to select items in the collection using if statements, without else clauses
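Here is a minimal sketch of the sort of code that narrower set of topics might let students write. It is my illustration, not code from the course: the function names setMessage and highlightMatches, the #greeting id, and the yellow highlighting are all hypothetical. The functions take the element or the page as a parameter, and the calls at the bottom run only when a browser document is present.

```javascript
// Parameterizing a change to one element turns it into a reusable function.
// (setMessage is a hypothetical name chosen for this example.)
function setMessage(element, message, color) {
  element.innerText = message;  // simple assignment to modify the text
  element.style.color = color;  // ...and one of its style attributes
}

// Select a collection of elements, process each one with forEach,
// and use a guarded action (an if with no else) to change only some of them.
function highlightMatches(page, selector, word) {
  page.querySelectorAll(selector).forEach((item) => {
    if (item.innerText.includes(word)) {
      item.style.backgroundColor = "yellow";
    }
  });
}

// In a browser, call the functions on the live page. The typeof check
// keeps this file from failing if it is loaded somewhere without a DOM.
if (typeof document !== "undefined") {
  const greeting = document.querySelector("#greeting");  // hypothetical id
  if (greeting !== null) {
    setMessage(greeting, "Hello, JavaScript!", "darkblue");
  }
  highlightMatches(document, "li", "CSS");
}
```

Every construct in the sketch comes from the list above: one selection, a few assignments, a function with parameters, a collection processed with forEach, and an if with no else.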

That is a lot to learn in five weeks! Even so, it cuts way back on several topics I tried to cover this time, such as a more general discussion of objects, arrays, and boolean values, and a deeper look at the DOM. And it eliminates even mentioning several topics altogether:

- if-else statements

- while statements

- counted for loops and, more generally, map-like behavior

- any fiddling with numbers and arithmetic, which are often used to learn assignment statements, if statements, and functions

There are so many things a programmer can't do without these concepts, such as writing an indefinite data validation loop or really understanding what's going on in the DOM tree. But trying to cover all of those topics too did not result in students being able to do them either! I think it left them confused, with so many new ideas jumbled in their minds, and a general dissatisfaction at being unable to use JavaScript effectively.

Of course I would want to build hooks into the course for students who want to go deeper and are ready to do so. There is so much good material on the web for people who are ready for more. Providing more enriched opportunities for advanced students is easier than designing learning opportunities for beginners.

Can something like this work?

I won't know for a while. It will be at least a year before I teach this course again. I wish I could teach it again sooner, so that I could try some of my new ideas and get feedback on them sooner. Such is the curse of a university calendar and once-yearly offerings.

It's too late to make any big changes in trajectory this semester. We have only two weeks after the current one-week Thanksgiving break. Next week, we will focus on input forms (HTML), styling (CSS), and a little data validation (HTML+JavaScript). I hope that this return to HTML+CSS helps us end the course on a positive note. I'd like for students to finish with a good feeling about all they have learned and now can do.

October 31, 2023 7:12 PM

The Spirit of Spelunking

Last month, Gus Mueller announced v7.4.3 of his image-editing tool Acorn. This release has a fun little extra built in:

One more super geeky thing I've added is a JavaScript Console:

...

This tool is really meant for folks developing plugins in Acorn, and it is only accessible from the Command Bar, but a part of me absolutely loves pointing out little things like this. I was just chatting with Brent Simmons the other day at Xcoders how you can't really spelunk in apps any more because of all the restrictions that are (justifiably) put on recent MacOS releases. While a console isn't exactly a spelunking tool, I still think it's kind of cool and fun and maybe someone will discover it accidentally and that will inspire them to do stupid and entertaining things like we used to do back in the 10.x days.

I have had JavaScript consoles on my mind a lot in the last few weeks. My students and I have used the developer tools in our browsers as part of my web development course for non-majors. To be honest, I had never used a JavaScript console until this summer, when I began preparing for the course in earnest. REPLs are, of course, a big part of my programming background, from Lisp to Racket to Ruby to Python, so I took to the console with ease and joy. (My past experience with JavaScript was mostly in Node.js, which has its own REPL.)

We just started our fourth week studying JavaScript in class, so my students have started getting used to the console. At the outset, the console was new to most of them, since most have never programmed before. Our attention has now turned to interacting with the DOM and manipulating the content of a web page.

It's been a lot of fun for me. I'm not sure how it feels for all of my students, though. Many came to the course for web design and really enjoy HTML and CSS. JavaScript, on the other hand, is... programming: more syntax, more semantics, and a lot of new details just to select, say, the third h3 on the page.
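To show what I mean, here is one way to select the third h3 on a page. It is a sketch, and thirdHeading is a hypothetical helper name. Even this small task involves a method call, a collection of elements, and zero-based indexing.

```javascript
// Select the third h3 on a page. querySelectorAll returns the matches
// in document order, and indexes start at 0, so the third one is at index 2.
// (thirdHeading is a hypothetical name chosen for this example.)
function thirdHeading(page) {
  const headings = page.querySelectorAll("h3");
  if (headings.length < 3) {
    return null;  // the page has fewer than three h3 elements
  }
  return headings[2];
}

// In the browser console: thirdHeading(document)
```

A tempting shortcut, document.querySelector("h3:nth-of-type(3)"), does not always give the same answer. The :nth-of-type selector counts an element's position among its siblings, so it finds an h3 that is the third h3 within its own parent, not the third h3 on the whole page.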

Sometimes, you just gotta work over the initial hump to sense the power and fun. Some of them are getting there.

Today I had great fun showing them how to add some simple search functionality to a web page. It was our first big exercise using document.querySelectorAll() and processing a collection of HTML elements. Soon we'll learn about text fields and buttons and events, at which point my page The Books of Bokonon will become much more useful to the many readers who still find pleasure in it. Just last night that page got its first modern web styling in the form of a CSS style sheet. For its first twenty-four years of existence, it was all 1990s-era HTML and pseudo-layout using <center>, <hr>, and <br> tags.
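A simple version of that kind of search might look something like this. It is a sketch, not the code we wrote in class: searchPage is a hypothetical name, and it assumes the searchable text sits in elements matched by a single CSS selector, such as p. Once we reach text fields, buttons, and events, the search term could come from an input box instead of being typed into the console.

```javascript
// Show only the elements whose text contains the search term, ignoring case,
// and hide the rest. Returns how many elements matched.
// (searchPage is a hypothetical name chosen for this example.)
function searchPage(page, selector, term) {
  const needle = term.toLowerCase();
  let matches = 0;
  page.querySelectorAll(selector).forEach((element) => {
    const found = element.textContent.toLowerCase().includes(needle);
    // Setting display to "" removes the inline style, so the element
    // goes back to however the style sheet displays it.
    element.style.display = found ? "" : "none";
    if (found) {
      matches += 1;
    }
  });
  return matches;
}

// In the browser console, for example: searchPage(document, "p", "truth")
```

The sketch reads textContent rather than innerText so that it sees the raw text of each element without depending on how that element is currently rendered.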

Anyway, I appreciate Gus's excitement at adding a console to Acorn, making his tool a place to play as well as work. Spread the joy.

September 22, 2023 8:38 PM

Time and Words

Earlier this week, I posted an item on Mastodon:

After a few months studying and using CSS, every once in a while I am able to change a style sheet and cause something I want to happen. It feels wonderful. Pretty soon, though, I'll make a typo somewhere, or misunderstand a property, and not be able to make anything useful happen. That makes me feel like I'm drowning in complexity.

Thankfully, the occasional wonderful feelings — and my strange willingness to struggle with programming errors — pull me forward.

I've been learning a lot while preparing to teach our web development course. Occasionally, I feel incompetent, or not very bright. It's good for me.

I haven't been blogging lately, but I've been writing lots of words. I've also been re-purposing and adapting many other words that are licensed to be reusable (thanks, David Humphrey and John Davis). Prepping a new course is usually prime blogging time for me, with my mind in a constant state of churn, but this course has me drowning in work to do. There is so much to learn, and so much new material — readings, session plans and notes, examples, homework assignments — to create.

I have made a few notes along the way, hoping to expand them into posts. Today they become part of this post.

VS Code

This is my first time in a long time using an editor configured to auto-complete and do all the modern things that developers expect these days. I figured, new tool, why not try a new workflow...

After a short period of breaking in, I'm quite enjoying the experience. One feature I didn't expect to use so much is the ability to collapse an HTML element. In a large HTML file, this has been a game changer for me. Yes, I know, this is old news to most of you. But as my brother loves to say when he gets something used or watches a movie everyone else has already seen, "But, hey, it's new to me!" VS Code's auto-complete across HTML, CSS, and JavaScript, with built-in documentation and links to MDN's more complete documentation, lets me type code much faster than ever before. It made me think of one of my favorite Kent Beck lines:

As the speed of development approaches infinity, reuse becomes irrelevant.

When programming, I often copy, paste, and adapt previous code. In VS Code, I have found myself moving fast enough that copy, paste, and adapt would slow me down. That sort of reuse has become irrelevant.

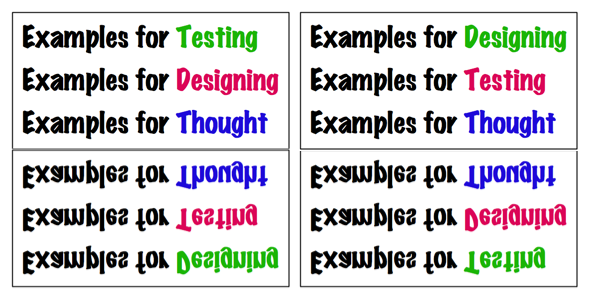

Examples > Prose

The class sessions for which I have written the longest and most complete notes for my students (and me) tend to be the ones for which I have the fewest, or least well-developed, code examples. The reverse is also true: lots of good examples and code tends to mean smaller class notes. Sometimes that is because I run out of time to write much prose to accompany the session. Just as often, though, it's because the examples give us plenty to do live in class, where the learning happens in the flow of writing and examining code.

This confirms something I've observed over many years of teaching: Examples First tends to work better for most students, even people like me who fancy themselves as top-down thinkers. Good examples and code exercises can create the conditions in which abstract knowledge can be learned. This is a sturdy pedagogical pattern.

Concepts and Patterns

There is so, so much to CSS! HTML itself has a lot of details for new coders to master before they reach fluency. Many of the websites aimed at teaching and presenting these topics quickly begin to feel like a downpour of information, even when the authors try to organize it all. It's too easy to fall into, "And then there's this other property...".

After a few weeks, I've settled into trying to help students learn two kinds of things for each topic:

- a couple of basic concepts or principles

- a few helpful patterns

~~~~~

We are only five weeks into a fifteen-week semester, so take any conclusions I draw with a grain of salt. We also haven't gotten to JavaScript yet, the teaching and learning of which will present a different sort of challenge than HTML and CSS with students who have little or no experience programming. Maybe I will make time to write up our experiences with JavaScript in a few weeks.

Posted by Eugene Wallingford | Permalink | Categories: Patterns, Software Development, Teaching and Learning

July 31, 2023 2:35 PM

Learning CSS By Doing It

I ran across this blog post earlier this summer when browsing articles on CSS, as one does while learning CSS for a fall course. Near the end, the writer says:

This is thoroughly exciting to me, and I don't wanna whine about improvements in CSS, but it's a bit concerning since I feel like what the web is now capable of is slipping through my fingers. And I guess that's what I'm worried about; I no longer have a good idea of how these things interact with each other, or where the frontier is now.

The map of CSS in my mind is real messy, confused, and teetering with details that I can't keep straight in my head.

Imagine how someone feels as they learn CSS basically from the beginning and try to get a handle both on how to use it effectively and on how to teach it effectively. There is so much there... The good news, of course, is that our course is for folks with no experience, learning the basics of HTML, CSS, and JavaScript from the beginning, so there is only so far we can hope to go in fifteen weeks anyway.

My impressions of HTML and CSS at this point are quite similar: very little syntax, at least for big chunks of the use cases, and lots and lots of vocabulary. Having convenient access to documentation such as that available at developer.mozilla.org via the web and inside VS Code makes exploring all of the options more manageable in context.

I've been watching Dave Humphrey's videos for his WEB 222 course at Seneca College and learning tons. Special thanks to Dave for showing me a neat technique to use when learning -- and teaching -- web development: take a page you use all the time and try to recreate it using HTML and CSS, without looking at the page's own styles. He has done that a couple times now in his videos, and I was able to integrate the ideas we covered about the two languages in previous videos as Dave made the magic work. I have tried it once on my own. It's good fun and a challenging exercise.

Learning layout by viewing page source used to be easier in the old days, when pages were simpler and didn't include dozens of CSS imports or thousands of scripts. Accepting the extra challenge of not looking at a page's styles in 2023 is usually the simpler path.

Two re-creations I have on tap for myself in the coming days are a simple Wikipedia-like page for myself (I'm not notable enough to have an actual Wikipedia page, of course) and a page that acts like my Mastodon home page, with anchored sidebars and a scrolling feed in between. Wish me luck.

July 10, 2023 12:28 PM

What We Know Affects What We See

Last time I posted this passage from Shop Class as Soulcraft, by Matthew Crawford:

Countless times since that day, a more experienced mechanic has pointed out to me something that was right in front of my face, but which I lacked the knowledge to see. It is an uncanny experience; the raw sensual data reaching my eye before and after are the same, but without the pertinent framework of meaning, the features in question are invisible. Once they have been pointed out, it seems impossible that I should not have seen them before.

We perceive in part based on what we know. A lack of knowledge can prevent us from seeing what is right in front of us. Our brains and eyes work together, and without a perceptual frame, they don't make sense of the pattern. Once we learn something, our eyes -- and brains -- can.

This reminds me of a line from the movie The Santa Clause, which my family watched several times when my daughters were younger. The new Santa Claus is at the North Pole, watching magical things outside his window, and comments to the elf who's been helping him, "I see it, but I don't believe it." She replies that adults don't understand: "Seeing isn't believing; believing is seeing." As a mechanic, Crawford came to understand that knowing is seeing.

Later in the book, Crawford describes another way that knowing and perceiving interact with one another, this time with negative results. He had been struggling to figure out why there was no spark at the spark plugs in his VW Bug, and his father -- an academic, not a mechanic -- told him about Ohm's Law:

Ohm's law is something explicit and rulelike, and is true in the way that propositions are true. Its utter simplicity makes it beautiful; a mind in possession of this equation is charmed with a sense of its own competence. We feel we have access to something universal, and this affords a pleasure that is quasi-religious, perhaps. But this charm of competence can get in the way of noticing things; it can displace, or perhaps hamper the development of, a different kind of knowledge that may be difficult to bring to explicit awareness, but is superior as a practical matter. Its superiority lies in the fact that it begins with the typical rather than the universal, so it goes more rapidly and directly to particular causes, the kind that actually tend to cause ignition problems.

Rule-based, universal knowledge imposes a frame on the scene. Unfortunately, its universal nature can impede perception by blinding us to the particular details of the situation we are actually in. Instead of seeing what is there, we see the scene as our knowledge would have it.


This reminds me of a story and a technique from the book Drawing on the Right Side of the Brain, by Betty Edwards, which I first wrote about in the earliest days of this blog. When asked to draw a chair, most people barely even look at the chair in front of them. Instead, they start drawing their idea of a chair, supplemented by a few details of the actual chair they see. That works about as well as diagnosing an engine by studying your mind's-eye image of an engine, rather than the mess of parts in front of you.

In that blog post, I reported my experience with one of Edwards's techniques for seeing the chair, drawing the negative space:

One of her exercises asked the student to draw a chair. But, rather than trying to draw the chair itself, the student is to draw the space around the chair. You know, that little area hemmed in between the legs of the chair and the floor; the space between the bottom of the chair's back and its seat; and the space that is the rest of the room around the chair. In focusing on these spaces, I had to actually look at the space, because I don't have an image in my brain of an idealized space between the bottom of the chair's back and its seat. I had to look at the angles, and the shading, and that flaw in the seat fabric that makes the space seem a little ragged.

Sometimes, we have to trick our eyes into seeing, because otherwise our brains tell us what we see before we actually look at the scene. Abstract universal knowledge helps us reason about what we see, but it can also impede us from seeing in the first place.

What we know both enables and hampers what we perceive. This idea has me thinking about how my students this fall, non-CS majors who want to learn how to develop web sites, will encounter the course. Most will be novice programmers who don't know what they see when they are looking at code, or perhaps even at a page rendered in the browser. Debugging code will be a big part of their experience this semester. Are there exercises I can give them to help them see accurately?

As I said in my previous post, there's lots of good stuff happening in my brain as I read this book. Perhaps more posts will follow.

Posted by Eugene Wallingford | Permalink | Categories: Patterns, Software Development, Teaching and Learning

May 19, 2023 2:57 PM

Help! Teaching Web Development in 2023

With the exception of teaching our database course during the COVID year, I have been teaching a stable pair of courses for the last many semesters: Programming Languages in the spring and our compilers course, Translation of Programming Languages, in the fall. That will change this fall due to some issues with enrollments and course demands. I'll be teaching a course in client-side web development.

The official number and title of the course are "CS 1100 Web Development: Client-Side Coding". The catalog description for the course was written years ago by committee:

Client-side web development adhering to current Web standards. Includes by-hand web page development involving basic HTML, CSS, data acquisition using forms, and JavaScript for data validation and simple web-based tools.

As you might guess from the 1000-level number, this is an introductory course suitable for even first-year students. Learning to use HTML, CSS, and JavaScript effectively is the focal point. It was designed as a service course for non-majors, with the primary audience these days being students in our Interactive Digital Studies program. Students in that program learn some HTML and CSS in another course, but that course is not a prerequisite to ours. A few students will come in with a little HTML5+CSS3 experience, but not all.

So that's where I am. As I mentioned, this is one of my first courses to design from scratch in a long time. Other than Database Systems, we have to go back to Software Engineering in 2009. Starting from scratch is fun but can be daunting, especially in a course outside my core competency of hard-core computer science.

The really big change, though, was mentioned two paragraphs ago: non-majors. I don't think I've taught non-majors since teaching my university's capstone 21 years ago -- so long ago that this blog did not yet exist. I haven't taught a non-majors' programming course in even longer, 25 years or more, when I last taught BASIC. That is so long ago that there was no "Visual" in the language name!

So: new area, new content, new target audience. I have a lot of work to do this summer.

I could use some advice from those of you who do web development for a living, who know someone who does, or who are more up to date on the field than I.

Generally, what should a course with this title and catalog description be teaching to beginners in 2023?

Specifically, can you point me toward...

- similar courses with material online that I could learn from?

- resources for students to use as they learn: websites, videos, even books?

For example, a former student and now friend mentioned that the w3schools website includes a JavaScript tutorial which allows students to type and test code within the web browser. That might simplify practice greatly for non-CS students while they are learning other development tools.

I have so many questions to answer about tools in particular right now: Should we use an IDE or a simple text editor? Which one? Should we learn raw JavaScript or a simple framework? If a framework, which one?

This isn't a job-training course, but to the extent that's reasonable I would like for students to see a reasonable facsimile of what they might encounter out in industry.

Thinking back, I guess I'm glad now that I decided to play around some with JavaScript in 2022... At least I now have more context for evaluating the options available for this course.

If you have any thoughts or suggestions, please do send them along. Sending email or replying on Mastodon or Twitter all work. I'll keep you posted on what I learn.

April 24, 2023 2:54 PM

PyCon Day 3

The last day of a conference is often a wildcard. If it ends early enough, I can often drive or fly home afterward, which means that I can attend all of the conference activities. If not, I have to decide between departing the next day or cutting out early. When possible, I stay all day. Sometimes, as with StrangeLoop, I can stay all day, skip only the closing keynote, and drive home into the night.

With virtual PyCon, I decided fairly early on that I would not be able to attend most of the last day. This is Sunday, I have family visiting, and work looms on the horizon for Monday. An afternoon talk or two is all I will be able to manage.

Talk 1: A Pythonic Full-Text Search

The idea of this talk was to use common Python tools to implement full-text search of a corpus. It turns out that the talk focused on websites, and thus on tools common to Python web developers: PostgreSQL and Django. It also turns out that django.contrib.postgres provides lots of features for doing search out of the box. This talk showed how to use them.

This was an interesting talk, but not immediately useful to me. I don't work with Django, and I use SQLite more often than PostgreSQL for my personal work. There may be some support for search in django.contrib.sqlite, if it exists, but the speaker said that I'd likely have to implement most of the search functionality myself. Even so, I enjoyed seeing what was possible with modules already available.

Talk 2: Using Python to Help the Unhoused

I thought I was done for the conference, but I decided I could listen in on one more talk while making dinner. This non-technical session sounded interesting:

How a group of volunteers from around the globe use Python to help an NGO in Victoria, BC, Canada to help the unhoused. By building a tool to find social media activity on unhoused in the Capitol Region, the NGO can use a dashboard of results to know where to move their limited resources.

With my attention focused on the Sri Lankan dal with coconut-lime kale in my care, I didn't take detailed notes this time, but I did learn about the existence of Statistics Without Borders, which sounds like a cool public service group that needs to exist in 2023. Otherwise, the project involved scraping Twitter as a source of data about the needs of the homeless in Victoria, and using sentiment analysis to organize the data. Filtering the data to zero in on relevant data was their biggest challenge, as keyword filters passed through many false positives.
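It's easy to see why keyword filtering leaks. In this toy sketch, the keywords and posts are invented for illustration; I don't know what terms the project actually used. A whole-word match still can't tell a person seeking shelter from someone seeking a tax shelter.

```python
import re

# Hypothetical keywords, not the project's actual filter terms.
KEYWORDS = {"shelter", "homeless", "tent"}

def keyword_filter(posts, keywords=KEYWORDS):
    """Keep posts containing any keyword as a whole word (case-insensitive)."""
    kept = []
    for post in posts:
        words = set(re.findall(r"[a-z']+", post.lower()))
        if words & keywords:
            kept.append(post)
    return kept

posts = [
    "The downtown shelter is full again tonight",
    "Looking for a tax shelter before the deadline",
    "Pitched a tent at the music festival this weekend",
    "Great coffee downtown this morning",
]
for post in keyword_filter(posts):
    print(post)  # the first three posts match, but only one is relevant
```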

At this point, the developers have given their app to the NGO and are looking forward to receiving feedback, so that they can make any improvements that might be needed.

This is a nice project by folks giving back to their community, and a nice way to end the conference.

~~~~~

I was a PyCon first-timer, attending virtually. The conference talks were quite good. Thanks to everyone who organized the conference and created such a complete online experience. I didn't use all of the features available, but what I did use worked well, and the people were great. I ended up with links to several Python projects to try out, a few example scripts for PyScript and Mermaid that I cobbled together after the talks, and lots of syntactic abstractions to explore. Three days well spent.

April 23, 2023 12:09 PM

PyCon Day 2

Another way that attending a virtual conference is like the in-person experience: you can oversleep, or lose track of time. After a long morning of activity before the time-shifted start of Day 2, I took a nap before the 11:45 talk, and...

Talk 1: Python's Syntactic Sugar

Grrr. I missed the first two-thirds of this talk, which I had greatly anticipated, because I slept longer than I planned. My body must have needed more than I was giving it.

I saw enough of the talk, though, to know I want to watch the video on YouTube when it shows up. This topic is one of my favorite topics in programming languages: What is the smallest set of features we need to implement the rest of the language? The speaker spent a couple of years implementing various Python features in terms of others, and arrived at a list of only ten that he could not translate away. The rest are sugar. I missed the list at the beginning of the talk, but I gathered a few of its members in the ten minutes I watched: while, raise, and try/except.

I love this kind of exercise: "How can you translate the statement if X: Y into one that uses only core features?" Here's one attempt the speaker gave:

try:
    while X:
        Y
        raise _DONE
except _DONE:
    None
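To convince myself the translation works, I wrapped it in a runnable sketch. It assumes _DONE is a private exception class (I don't know exactly how the speaker defined it), and it passes X and Y in as functions so they can be any condition and body. The raise inside the loop is what keeps while from repeating: the body runs at most once, just like if.

```python
class _DONE(Exception):
    """Private exception used only to escape the loop after one pass."""

def sugar_if(x, y):
    """Run y() when x() is truthy, using only while, raise, and try/except."""
    try:
        while x():
            y()
            raise _DONE  # leave the loop after a single pass
    except _DONE:
        None  # nothing left to do; `pass` would work as well

log = []
sugar_if(lambda: True, lambda: log.append("ran"))
sugar_if(lambda: False, lambda: log.append("skipped?"))
print(log)  # ['ran']
```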

I was today years old when I learned that Python's bool subclasses int, that True == 1, and that False == 0. That bit of knowledge was worth interrupting my nap to catch the end of the talk. Even better, this talk was based on a series of blog posts. Video is okay, but I love to read and play with ideas in written form. This series vaults to the top of my reading list for the coming days.
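A few quick experiments confirm it and show where it leaks into everyday code, such as counting with sum() or, more surprisingly, dictionary keys:

```python
print(issubclass(bool, int))      # True
print(True == 1, False == 0)      # True True
print(True + True)                # 2
print(sum([True, False, True]))   # 2: a common idiom for counting matches
print({1: "one", True: "true"})   # {1: 'true'}: 1 and True are the same key
```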

Talk 2: Subclassing, Composition, Python, and You

Okay, so this guy doesn't like subclasses much. Fair enough, but... some of his concerns seem to be more about the way Python classes work (open borders with their super- and subclasses) than with the idea itself. He showed a lot of ways one can go wrong with arcane uses of Python subclassing, things I've never thought to do with a subclass in my many years doing OO programming. There are plenty of simpler uses of inheritance that are useful and understandable.

Still, I liked this talk, and the speaker. He was honest about his biases, and he clearly cares about programs and programmers. His excitement gave the talk energy. The second half of the talk included a few good design examples, using subclassing and composition together to achieve various ends. It also recommended the book Architecture Patterns with Python. I haven't read a new software patterns book in a while, so I'll give this one a look.
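The basic trade-off shows up even in a small example. This one is my own, not from the talk: a hypothetical stack built once by subclassing list and once by wrapping a list. The subclass gets push-adjacent behavior for free but also inherits every list method, including ones that break stack discipline; the composed version exposes only what a stack needs.

```python
class Stack(list):
    """Subclassing: inherits pop(), but also the entire list interface."""

    def push(self, item):
        self.append(item)

class ComposedStack:
    """Composition: wraps a list and exposes only stack operations."""

    def __init__(self):
        self._items = []

    def push(self, item):
        self._items.append(item)

    def pop(self):
        return self._items.pop()

    def __len__(self):
        return len(self._items)

s = Stack()
s.push(1)
s.push(2)
s.insert(0, 99)  # allowed, because list's whole interface leaks through

c = ComposedStack()
c.push(1)
c.push(2)
print(hasattr(c, "insert"))  # False
print(c.pop(), len(c))       # 2 1
```

Neither choice is always right; the talk's better examples used the two together.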

Toward the end, the speaker referenced the line "Software engineering is programming integrated over time." Apparently, this is a Google thing, but it was new to me. Clever. I'll think on it.

Talk 3: How We Are Making CPython Faster -- Past, Present and Future

I did not realize that, until Python 3.11, efforts to make the interpreter faster had been rather limited. The speaker mentioned one improvement made in 3.7 to optimize the typical method invocation, obj.meth(arg), and one in 3.8 that sped up global variable access by using a cache. There are others, but nothing systematic.

At this point, the talk became mutually recursive with the Friday talk "Inside CPython 3.11's New Specializing, Adaptive Interpreter". The speaker asked us to go watch that talk and return. If I were more ambitious, I'd add a link to that talk now, but I'll let any of you who are interested visit yesterday's post and scroll down two paragraphs.

He then continued with improvements currently in the works, including:

- efforts to optimize over larger regions, such as the different elements of a function call

- use of partial evaluation when possible

- specialization of code

- efforts to speed up memory management and garbage collection

He also mentioned possible improvements related to C extension code, but I didn't catch the substance of this one. The speaker offered the audience a pithy takeaway from his talk: Python is always getting faster. Do the planet a favor and upgrade to the latest version as soon as you can. That's a nice hook.

There was lots of good stuff here. Whenever I hear compiler talks like this, I almost immediately start thinking about how I might work some of the ideas into my compiler course. To do more with optimization, we would have to move faster through construction of a baseline compiler, skipping some or all of the classic material. That's a trade-off I've been reluctant to make, given the course's role in our curriculum as a compilers-for-everyone experience. I remain tempted, though, and open to a different treatment.
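For example, the simplest form of partial evaluation, constant folding, fits in a few dozen lines using Python's standard ast module. This is a classroom-sized sketch of my own, not how CPython's optimizer is organized. It folds only +, -, and * on literal operands, assumes Python 3.9+ for ast.unparse, and skips safeguards a real compiler needs, such as refusing to build a huge constant from something like 'x' * 10**9.

```python
import ast
import operator

# Operators this toy folder knows how to evaluate at compile time.
FOLDABLE = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
}

class ConstantFolder(ast.NodeTransformer):
    """Replace BinOp nodes whose operands are both constants with their value."""

    def visit_BinOp(self, node):
        self.generic_visit(node)  # fold children first (post-order)
        op = FOLDABLE.get(type(node.op))
        if (op is not None
                and isinstance(node.left, ast.Constant)
                and isinstance(node.right, ast.Constant)):
            value = op(node.left.value, node.right.value)
            return ast.copy_location(ast.Constant(value), node)
        return node

def fold(source):
    """Parse source, fold constant subexpressions, and return the new source."""
    tree = ConstantFolder().visit(ast.parse(source))
    return ast.unparse(ast.fix_missing_locations(tree))

print(fold("x = 2 * 3 + y"))           # x = 6 + y
print(fold("z = (1 + 2) * (10 - 4)"))  # z = 18
```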

Talk 4: The Lost Art of Diagrams: Making Complex Ideas Easy to See with Python

Early on, this talk contained a line that programmers sometimes need to remember: Good documentation shows respect for users. Good diagrams, said the speaker, can improve users' lives. The talk was a nice general introduction to some of the design choices available to us as we create diagrams, including the use of color, shading, and shapes (Venn diagrams, concentric circles, etc.). It then discussed a few tools one can use to generate better diagrams. The one that appealed most to me was Mermaid.js, which uses a Markdown-like syntax that reminded me of GraphViz's Dot language. My students and I use GraphViz, so picking up Mermaid might be a comfortable task.
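The resemblance is easy to see if you generate both from the same data. This is a minimal sketch of my own, not code from the talk: it renders a list of hypothetical (source, target) edges, here the stages of a compiler, as Mermaid flowchart text and as a Dot digraph. Node names are assumed to be simple identifiers with no spaces.

```python
def to_mermaid(edges, direction="LR"):
    """Render (source, target) pairs as Mermaid flowchart text."""
    lines = [f"flowchart {direction}"]
    for source, target in edges:
        lines.append(f"    {source} --> {target}")
    return "\n".join(lines)

def to_dot(edges, name="G"):
    """Render the same edges in GraphViz's Dot language, for comparison."""
    lines = [f"digraph {name} {{"]
    for source, target in edges:
        lines.append(f"    {source} -> {target};")
    lines.append("}")
    return "\n".join(lines)

stages = [
    ("Scanner", "Parser"),
    ("Parser", "SemanticAnalyzer"),
    ("SemanticAnalyzer", "CodeGenerator"),
]
print(to_mermaid(stages))
print(to_dot(stages))
```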

~~~~~

My second day at virtual PyCon confirmed that attending was a good choice. I've seen enough language-specific material to get me thinking new thoughts about my courses, plus a few other topics to broaden the experience. A nice break from the semester's grind.

Posted by Eugene Wallingford | Permalink | Categories: Computing, Software Development, Teaching and Learning

April 22, 2023 6:38 PM

PyCon Day 1

One of the great benefits of a virtual conference is accessibility. I can hop from Iowa to Salt Lake City with the press of a button. The competing cost of a virtual conference is that I am also accessible ... from elsewhere.

On the first day of PyCon, we had a transfer orientation session that required my presence in virtual Iowa from 10:00 AM-12:00 noon local time. That's 9:00-11:00 Mountain time, so I missed Ned Batchelder's keynote and the opening set of talks. The rest of the day, though, I was at the conference. Virtual giveth, and virtual taketh away.

Talk 1: Inside CPython 3.11's New Specializing, Adaptive Interpreter

As I said yesterday, I don't know Python -- tools, community, or implementation -- intimately. That means I have a lot to learn in any talk. In this one, Brandt Bucher discussed the adaptive interpreter that is part of Python 3.11, in particular how the interpreter uses specialization to improve its performance based on run-time usage of the code.

Midway through the talk, he referred us to a talk on tomorrow's schedule. "You'll find that the two talks are not only complementary, they're also mutually recursive." I love the idea of mutually recursive talks! Maybe I should try this with two sessions in one of my courses. To make it fly, I will need to make some videos... I wonder how students would respond?

This online Python disassembler by @pamelafox@fosstodon.org popped up in the chat room. It looks like a neat tool I can use in my compiler course. (Full disclosure: I have been following Pamela on Twitter and Mastodon for a long time. Her posts are always interesting!)
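Python's standard dis module offers a similar view from the REPL. A minimal sketch, assuming Python 3.11 or later for the adaptive flag on dis.get_instructions; add_all is a made-up function. After a warm-up, the listing may include specialized instructions (BINARY_OP_ADD_INT is one such name in 3.11), but exactly which ones appear depends on the version and the warm-up, so I don't show expected output.

```python
import dis
import sys

def add_all(xs):
    total = 0
    for x in xs:
        total = total + x
    return total

# Warm the function up so the adaptive interpreter has a chance to specialize it.
for _ in range(100):
    add_all(range(10))

if sys.version_info >= (3, 11):
    # adaptive=True shows the (possibly specialized) instructions actually in use.
    ops = [ins.opname for ins in dis.get_instructions(add_all, adaptive=True)]
else:
    ops = [ins.opname for ins in dis.get_instructions(add_all)]
print(ops)
```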

Talk 2: Build Yourself a PyScript

PyScript is a JavaScript module that enables you to embed Python in a web page, via WebAssembly. This talk described how PyScript works and showed some of the technical issues in writing web apps.

Some of this talk was over my head. I also do not have deep experience programming for the web. It looks like I will end up teaching a beginning web development course this fall (more later), so I'll definitely be learning more about HTML, CSS, and JavaScript soon. That will prepare me to be more productive using tools like PyScript.

Talk 3: Kill All Mutants! (Intro to Mutation Testing)

Our test suites are often not strong enough to recognize changes in our code. The talk introduced mutation testing, which makes small deliberate changes ("mutants") to the code and checks whether the test suite catches them. I didn't take a lot of notes on this one, but I did make a note to try mutation testing out, maybe in Racket.
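The core idea is small enough to sketch by hand before reaching for a real tool. This minimal version, in Python rather than Racket, uses the standard ast module to swap one comparison operator at a time in a hypothetical is_adult function, then reruns a deliberately weak test suite against each mutant. A mutant that survives points to a gap in the tests.

```python
import ast

SOURCE = """
def is_adult(age):
    return age >= 18
"""

def run_tests(namespace):
    """A deliberately weak test suite: it never checks the boundary age of 18."""
    f = namespace["is_adult"]
    return f(30) is True and f(5) is False

# Each mutation swaps one comparison operator for another.
MUTATIONS = {ast.GtE: [ast.Gt, ast.LtE, ast.Eq]}

class SwapCompare(ast.NodeTransformer):
    """Replace every occurrence of old_op in Compare nodes with new_op."""

    def __init__(self, old_op, new_op):
        self.old_op, self.new_op = old_op, new_op

    def visit_Compare(self, node):
        self.generic_visit(node)
        node.ops = [self.new_op() if isinstance(op, self.old_op) else op
                    for op in node.ops]
        return node

def mutation_test(source):
    """Return (mutant description, killed?) pairs; each mutant starts from fresh source."""
    results = []
    for old_op, new_ops in MUTATIONS.items():
        for new_op in new_ops:
            tree = SwapCompare(old_op, new_op).visit(ast.parse(source))
            namespace = {}
            exec(compile(ast.fix_missing_locations(tree), "<mutant>", "exec"), namespace)
            killed = not run_tests(namespace)
            results.append((f"{old_op.__name__} -> {new_op.__name__}", killed))
    return results

for mutant, killed in mutation_test(SOURCE):
    print(mutant, "killed" if killed else "SURVIVED")
```

Here the >= to > mutant survives because no test checks an age of exactly 18. Adding that one case to the suite would kill it.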

Talk 4: Working with Time Zones: Everything You Wish You Didn't Need to Know

Dealing with time zones is one of those things that every software engineer seems to complain about. It's a thorny problem with both technical and social dimensions, which makes it really interesting for someone who loves software design to think about.

This talk opened with example after example of how time zones don't behave as straightforwardly as you might think, and then discussed Python's newest time zone library, zoneinfo, which joined the standard library in Python 3.9.

My main takeaways from this talk: zoneinfo looks useful, and I'm glad I don't have to deal with time zones on a regular basis.
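Here is one small example of the kind of surprise the talk catalogued, sketched with the standard library's zoneinfo module (Python 3.9+). It assumes a time zone database is available, either on the system or via the tzdata package; the dates are my own choice, straddling the start of US daylight saving time on March 12, 2023.

```python
from datetime import datetime, timedelta, timezone
from zoneinfo import ZoneInfo

central = ZoneInfo("America/Chicago")  # Iowa's time zone

# Noon on the day before daylight saving time began.
before = datetime(2023, 3, 11, 12, 0, tzinfo=central)
after = before + timedelta(days=1)  # wall-clock arithmetic: noon the next day
print(before.tzname(), after.tzname())  # CST CDT

# Subtracting datetimes that share a tzinfo ignores their UTC offsets...
print(after - before)  # 1 day, 0:00:00
# ...so convert to UTC to measure the time that actually elapsed.
elapsed = after.astimezone(timezone.utc) - before.astimezone(timezone.utc)
print(elapsed)  # 23:00:00
```

The first subtraction isn't a bug so much as a documented rule: when two aware datetimes have the same tzinfo, Python subtracts them as wall-clock times.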

Talk 5: Pythonic Functional (iter)tools for your Data Challenges

This is, of course, a topic after my own heart. Functional programming is a big part of my programming languages course, and I like being able to show students Python analogues to the Racket ideas they are learning. There was not much new FP content for me here, but I did learn some new Python functions from itertools that I can use in class -- and in my own code. I enjoyed the Advent of Code segment of the talk, in which the speaker applied Python to some of the 2021 challenges. I use an Advent of Code challenge or two each year in class, too. The early days of the month usually feature fun little problems that my students can understand quickly. They know how to solve them imperatively in Python, but we tackle them functionally in Racket.

Most of the FP ideas needed to solve them in Python are similar, so it was fun to see the speaker solve them using itertools. Toward the end, the solutions got heavy quickly, which must be how some of my students feel when we are solving these problems in class.

~~~~~

Between work in the morning and the conference in the afternoon and evening, this was a long day. I have a lot of new tools to explore.

April 21, 2023 3:33 PM

Headed to PyCon, Virtually

Last night, I posted on Mastodon:

Heading off to #PyConUS in the morning, virtually. I just took my first tour of Hubilo, the online platform. There's an awful lot going on, but you need a lot of moving parts to produce the varied experiences available in person. I'm glad that people have taken up the challenge.

Why PyCon? I'm not an expert in the language, or as into the language as I once was with Ruby. Long-time readers may recall that I blogged about attending JRubyConf back in 2012. Here is a link to my first post from that conference. You can scroll up from there to see several more posts from the conference.

However, I do write and read a lot of Python code, because our students learn it early and use it quite a bit. Besides, it's a fun language and has a large, welcoming community. Like many language-specific conferences, PyCon includes a decent number of talks about interpreters, compilers, and tools, which are a big part of my teaching portfolio these days. The conference offers a solid virtual experience, too, which makes it attractive to attend while the semester is still going on.

My goals for attending PyCon this year include:

- learning some things that will help me improve my programming languages and compiler courses,

- learning some things that make me a better Python programmer, and

- simply enjoying computer science and programming for a few days, after a long and occasionally tedious year. The work doesn't go away while I am at a conference, but my mind gets to focus on something else -- something I enjoy!

More about today's talks tomorrow.

April 09, 2023 8:24 AM

It Was Just Revision

There are several revised approaches to "what's the deal with the ring?" presented in "The History of The Lord of the Rings", and, as you read through the drafts, the material just ... slowly gets better! Bit by bit, the familiar angles emerge. There seems not to have been any magic moment: no electric thought in the bathtub, circa 1931, that sent Tolkien rushing to find a pen.

It was just revision.

Then:

... if Tolkien can find his way to the One Ring in the middle of the fifth draft, so can I, and so can you.

-- Robin Sloan, How The Ring Got Good

Posted by Eugene Wallingford | Permalink | Categories: General, Software Development, Teaching and Learning

April 06, 2023 2:59 PM

The Two Meanings of Grace, in Software

In a recent blog post, Why Grace Matters (for Software Development), Avdi Grimm tells the story of how he came to name his training site "Graceful.Dev". Check it out. This passage resolves into the answer:

You know, the word "grace" is interesting, because it has two different meanings. On the one hand, it means beauty in lines or in motion. But if you were raised with a religious background anything like mine, you know that grace is also something that saves you.

And in that moment on the dance floor, I realized that these two meanings of grace are really one and the same thing. Because grace is something that makes space for you to screw up, and then turns it into something beautiful.

I don't think I was raised in the same religious tradition as Avdi, but I was raised in a tradition that valued deeply the notion of grace. Grace manifest in sacrament was a powerful notion to me, one of the religious ideas I found most compelling as I was growing up.

That's probably why Avdi's realization strikes close to home for me. I carry the idea of grace, present in other parts of my life, as part of my cultural DNA. His connection of grace to software feels right. "Grace makes space for you to screw up, and then turns it into something beautiful." -- I imagine that many programmers know this feeling, in a non-religious way, if only vaguely.

March 31, 2023 3:57 PM

"I Just Need a Programmer, er, Writer"

This line from Chuck Wendig's post on AI tools and writing:

Hell, it's the thing every writer has heard from some jabroni who tells you, "I got this great idea, you write it, we'll split the money 50/50, boom."

... brought to mind one of my most-read blog posts ever, "I Just Need a Programmer":

As head of the Department of Computer Science at my university, I often receive e-mail and phone calls from people with The Next Great Idea. The phone calls can be quite entertaining! The caller is an eager entrepreneur, drunk on their idea to revolutionize the web, to replace Google, to top Facebook, or to change the face of business as we know it. ...

They just need a programmer. ...

The opening of that piece sounds a little harsh more than a decade later, but the basic premise holds. And, as Wendig notes, it holds beyond the software world. I even once wrote a short follow-up when accomplished TV writer Ken Levine commented on his blog about the same phenomenon in screenwriting.

Some misconceptions are evergreen.

Adding AI to the mix adds a new twist. I do think human execution in telling stories will still matter, though. I'm not yet convinced that the AI tools have the depth of network to replace human creativity.

However, maybe tools such as ChatGPT can be the programmer people need. A lot of folks are putting these tools to good use creating prototypes, and people who know how to program are using them effectively as accelerators. Execution will still matter, but these programs may be useful contributors on the path to a product.

March 12, 2023 9:00 AM

A Spectator to Phase Change

Robin Sloan speculates that large language models like ChatGPT have gone through a phase change in what they can accomplish.

AI at this moment feels like a mash-up of programming and biology. The programming part is obvious; the biology part becomes apparent when you see AI engineers probing their own creations the way scientists might probe a mouse in a lab.

Like so many people, I find my social media and blog feeds filled with ChatGPT and LLMs and DALL-E and ... speculation about what these tools mean for (1) the production of text and code, and (2) learning to write and program. A lot of that speculation is tinged with fear.

I admire Sloan's effort to be constructive in his approach to the uncertainty:

I've found it helpful, these past few years, to frame my anxieties and dissatisfactions as questions. For example, fed up with the state of social media, I asked: what do I want from the internet, anyway?

It turns out I had an answer to that question.

Where the GPT-alikes are concerned, a question that's emerging for me is:

What could I do with a universal function — a tool for turning just about any X into just about any Y with plain language instructions?

I admit that I am reacting to these developments slowly compared to many people. That's my style in most things: I am more likely to under-react to a change than to over-react, especially at the onset of the change. In this case, there is no chance of immediate peril, so waiting to see what happens as people use these tools seems like a reasonable response. I haven't made any effort to use these tools actively (or even been moved to), so any speculating I do would be uninformed by personal experience.

Instead, I read as people whose work I respect experiment with these tools and try to make sense of them. Occasionally, I draw a small, tentative conclusion, such as that prompting these generators is a lot like prompting students. After a few months of reading and a little reflection, I still think the biggest risk we face is probably that we tend to change the world around us to accommodate our technologies. If we put these tools to work for us in ways that enhance what we do, then the accommodation will pay off. If not, then we may, as Daniel Steinberg wrote in one of his newsletters, stop asking the questions we want to ask and start asking only the questions these tools can answer.

Professionally, I think most about the effect that ChatGPT and its ilk will have on programming and CS education. In these regards, I've been paying special attention to reports from David Humphrey, such as this blog post on his university's attempt to grapple with the widespread availability of these tools. David has approached these tools with an open mind and written thoughtfully about the promise and the risk. For example, he has written a lot of code with an LLM assistant and found it improving his ability both to write code and to think about problems. Advanced CS students can benefit from this kind of assistance, too, but David wonders how such tools might interfere with students first learning to program.

What do we educators want from generative programming tools anyway? What do I as a programmer and educator want from them?

So, at this point, my personal interaction with the phase change that Sloan describes has been mostly passive: I read about what others are doing and think about the results of their exploration. Perhaps this post is a guilty conscience asserting that I should be doing more. Really, though, I think of it more as an active form of inaction: an effort to collect some of my thinking as I slowly respond to the changes that are coming. Perhaps some day soon I will feel moved to use some of these tools as I write code of my own. For now, though, I am content to watch from the sidelines. You can learn a lot just by watching.

Posted by Eugene Wallingford | Permalink | Categories: Personal, Software Development, Teaching and Learning

March 05, 2023 9:47 AM

Context Matters

In this episode of Conversations With Tyler, Cowen asks economist Jeffrey Sachs if he agrees with several other economists' bearish views on a particular issue. Sachs says they "have been wrong ... for 20 years", laughs, and goes on to say:

They just got it wrong time and again. They had failed to understand, and the same with [another economist]. It's the same story. It doesn't fit our model exactly, so it can't happen. It's got to collapse. That's not right. It's happening. That's the story of our time. It's happening.

"It doesn't fit our model, so it can't happen." But it is happening.

When your model keeps saying that something can't happen, but it keeps happening anyway, you may want to reconsider your model. Otherwise, you may miss the dominant story of the era -- not to mention being continually wrong.

Sachs spends much of his time with Cowen emphasizing the importance of context in determining which model to use and which actions to take. This is essential in economics because the world it studies is simply too complex for the models we have now, even the complex models.

I think Sachs's insight applies to any discipline that works with people, including education and software development.

The topic of education even comes up toward the end of the conversation, when Cowen asks Sachs how to "fix graduate education in economics". Sachs says that one of its problems is that they teach econ as if there were "four underlying, natural forces of the social universe" rather than studying the specific context of particular problems.

He goes on to highlight an approach that is affecting every discipline now touched by data analytics:

We have so much statistical machinery to ask the question, "What can you learn from this dataset?" That's the wrong question because the dataset is always a tiny, tiny fraction of what you can know about the problem that you're studying.

Every interesting problem is bigger than any dataset we build from it. The details of the problem matter. Again: context. Sachs suggests that we shouldn't teach econ like physics, with Maxwell's overarching equations, but like biology, with the seemingly arbitrary details of DNA.

In my mind, I immediately began thinking about my discipline. We shouldn't teach software development (or econ!) like pure math. We should teach it as a mix of principles and context, generalities and specific details.

There's almost always a tension in CS programs between timeless knowledge and the details of specific languages, libraries, and tools. Most of our students don't go on to become theoretical computer scientists; they go out to work in the world of messy details, details that keep evolving and morphing into something new.

That makes our job harder than teaching math or some sciences because, like economics:

... we're not studying a stable environment. We're studying a changing environment. Whatever we study in depth will be out of date. We're looking at a moving target.

That dynamic environment creates a challenge for those of us teaching software development or any computing as practiced in the world. CS professors have to constantly be moving, so as not to fall out of date. But they also have to try to identify the enduring principles that their students can count on as they go on to work in the world for several decades.

To be honest, that's part of the fun for many of us CS profs. But it's also why so many CS profs can burn out after 15 or 20 years. A never-ending marathon can wear anyone out.

Anyway, I found Cowen's conversation with Jeffrey Sachs to be surprisingly stimulating, both for thinking about economics and for thinking about software.

Posted by Eugene Wallingford | Permalink | Categories: Computing, Software Development, Teaching and Learning

March 01, 2023 2:26 PM

Have Clojure and Racket Overcome the Lisp Curse?

I finally read Rudolf Winestock's 2011 essay The Lisp Curse, which is summarized in one line:

Lisp is so powerful that problems which are technical issues in other programming languages are social issues in Lisp.

It seems to me that Racket and Clojure have overcome the curse. Racket was built by a small team that grew up in academia. Clojure was designed and created by an individual. Yet they are both 100% solutions, not the sort of one-off 80% personal solutions that tend to plague the Lisp world.

But the creators went further: They also attracted and built communities.

The Racket and Clojure communities consist of programmers who care about the entire ecosystem. The Racket community welcomes and helps newcomers. I don't move in Clojure circles, but I see and hear good things from people who do.

Clojure has made a bigger impact commercially, of course. Offering a high level of performance and running on the JVM have their advantages. I doubt either will ever displace Java or the other commercial behemoths, but they appear to have staying power. They earned that status by solving both technical issues and social issues.

February 11, 2023 1:53 PM

What does it take to succeed as a CS student?

Today I received an email message similar to this:

I didn't do very well in my first semester, so I'm looking for ways to do better this time around. Do you have any ideas about study resources or tips for succeeding in CS courses?

As an advisor, I'm occasionally asked by students for advice of this sort. As department head, I receive even more queries, because early on I am the faculty member students know best, from campus visits and orientation advising.

When such students have already attempted a CS course or two, my first step is always to learn more about their situation. That way, I can offer suggestions suited to their specific needs.

Sometimes, though, the request comes from a high school student, or a high school student's parent: What is the best way to succeed as a CS student?

To be honest, most of the advice I give is not specific to a computer science major. At a first approximation, what it takes to succeed as a CS student is the same as what it takes to succeed as a student in any major: show up and do the work. But there are a few things a CS student does that are discipline-specific, most of which involve the tools we use.

I've decided to put together a list of suggestions that I can point our students to, and to which I can refer occasionally in order to refresh my mind. My advice usually includes some or all of these suggestions, with a focus on students at the beginning of our program:

- Go to every class and every lab session. This comes first because it should go without saying, but sometimes saying it helps. Students don't always have to go to their other courses every day in order to succeed.

- Work steadily on a course. Do a little work on your course, both programming and reading or study, frequently -- every day, if possible. This gives your brain a chance to see patterns more often and learn more effectively. Cramming may help you pass a test, but it doesn't usually help you learn how to program or make software.

- Ask your professor questions sooner rather than later. Send email. Visit office hours. This way, you get answers sooner and don't end up spinning your wheels while doing homework. Even worse, feeling confused can lead you to shy away from doing the work, which gets in the way of working steadily.

- Get to know your programming environment. When programming in Python, simply feeling comfortable with IDLE, and with the file system where you store your programs and data, can make everything else seem easier. Your mind doesn't have to look up basic actions or worry about details, which enables you to make the most of your programming time: working on the assigned task.

- Spend some of your study time with IDLE open. Even when you aren't writing a program, the REPL can help you! It lets you try out snippets of code from your reading, to see them work. You can run small experiments of your own, to see whether you understand syntax and operators correctly. You can make up your own examples to fill in the gaps in your understanding of the problem.

Getting used to trying things out in the interactions window can be a huge asset. This is one of the touchstones of being a successful CS student.
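For example, here are a few experiments a beginning student might run to check their understanding of Python's division operators and a classic floating-point surprise. I've written them as a script with print calls; at IDLE's >>> prompt you would type just the expressions.

```python
print(7 / 2)             # 3.5: true division always produces a float
print(7 // 2)            # 3: floor division
print(-7 // 2)           # -4: floors toward negative infinity, not toward zero
print(-7 % 2)            # 1: the remainder takes the sign of the divisor
print(0.1 + 0.2 == 0.3)  # False: binary floating point is approximate
print("ab" * 3)          # ababab
```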

That's what came to mind at the end of a Friday, at the end of a long week, when I sat down to give advice to one student. I'd love to hear your suggestions for improving the suggestions in my list, or other bits of advice that would help our students. Email me your ideas, and I'll make my list better for anyone who cares to read it.

Posted by Eugene Wallingford | Permalink | Categories: Computing, Software Development, Teaching and Learning

January 10, 2023 2:20 PM

Are Major Languages' Parsers Implemented By Hand?

Someone on Mastodon posted a link to a 2021 survey of how the parsers for major languages are implemented. Are they written by hand, or automated by a parser generator? The answer was mixed: a few are generated by yacc-like tools (some of which were custom built), but many are written by hand, often for speed.

My two favorite notes:

Julia's parser is handwritten but not in Julia. It's in Scheme!

Good for the Julia team. Scheme is a fine language in which to write -- and maintain -- a parser.

Not only [is Clang's parser] handwritten but the same file handles parsing C, Objective-C and C++.

I haven't clicked through to the source code for Clang yet but, wow, that must be some file.

Finally, this closing comment in the paper hit close to the heart:

Although parser generators are still used in major language implementations, maybe it's time for universities to start teaching handwritten parsing?

I have persisted in having my compiler students write table-driven parsers by hand for over fifteen years. As I noted in this post at the beginning of the 2021 semester, my course is compilers for everyone in our major, or potentially so. Most of our students will not write another compiler in their careers, and traditional skills like implementing recursive descent and building a table-driven program are valuable to them more generally than knowing yacc or bison. Any of my compiler students who do eventually want to use a parser generator are well prepared to learn how, and they'll understand what's going on when they do, to boot.
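For readers who haven't written one, here is what "handwritten" most often means in practice: recursive descent, with one function per grammar rule. This is a minimal sketch for a toy arithmetic grammar of my own choosing, and it evaluates as it parses rather than building a tree. My course's table-driven parsers work differently, driving an explicit stack from a parse table instead of relying on the call stack.

```python
import re

class Parser:
    """Recursive descent over: expr -> term (('+'|'-') term)*
                               term -> factor (('*'|'/') factor)*
                               factor -> NUMBER | '(' expr ')' | '-' factor"""

    def __init__(self, text):
        self.tokens = re.findall(r"\d+|\S", text)  # integers or single characters
        self.pos = 0

    def peek(self):
        return self.tokens[self.pos] if self.pos < len(self.tokens) else None

    def advance(self):
        token = self.peek()
        self.pos += 1
        return token

    def expect(self, expected):
        if self.advance() != expected:
            raise SyntaxError(f"expected {expected!r}")

    def parse(self):
        value = self.expr()
        if self.peek() is not None:
            raise SyntaxError(f"unexpected token {self.peek()!r}")
        return value

    def expr(self):
        value = self.term()
        while self.peek() in ("+", "-"):  # the loop makes + and - left-associative
            if self.advance() == "+":
                value += self.term()
            else:
                value -= self.term()
        return value

    def term(self):
        value = self.factor()
        while self.peek() in ("*", "/"):
            if self.advance() == "*":
                value *= self.factor()
            else:
                value /= self.factor()
        return value

    def factor(self):
        token = self.advance()
        if token is None:
            raise SyntaxError("unexpected end of input")
        if token.isdecimal():
            return int(token)
        if token == "(":
            value = self.expr()
            self.expect(")")
            return value
        if token == "-":
            return -self.factor()
        raise SyntaxError(f"unexpected token {token!r}")

print(Parser("2 + 3 * 4").parse())    # 14
print(Parser("(2 + 3) * 4").parse())  # 20
```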

My course is so old school that it's back to the forefront. I just had to be patient.

(I posted the seeds of this entry on Mastodon. Feel free to comment there!)

Posted by Eugene Wallingford | Permalink | Categories: Computing, Software Development, Teaching and Learning

January 09, 2023 12:19 PM

Thoughts on Software and Teaching from Last Week's Reading

I'm trying to get back into the habit of writing here more regularly. In the early days of my blog, I posted quick snippets every so often. Here's a set to start 2023.

• Falsework

From A Bridge Over a River Never Crossed:

Funnily enough, traditional arch bridges were built by first having a wood framing on which to lay all the stones in a solid arch (YouTube). That wood framing is called falsework, and is necessary until the arch is complete and can stand on its own. Only then is the falsework taken away. Without it, no such bridge would be left standing. That temporary structure, even if no trace is left of it at the end, is nevertheless critical to getting a functional bridge.

Programmers sometimes write a function or an object that helps them build something else that they couldn't easily have built otherwise, then delete the bridge code after they have written the code they really wanted. A big step in the development of a student programmer is when they do this for the first time, and feel in their bones why it was necessary and good.

• Repair as part of the history of an object

From The Art of Imperfection and its link back to a post on making repair visible, I learned about Kintsugi, a practice in Japanese art...

that treats breakage and repair as part of the history of an object, rather than something to disguise.

I have this pattern around my home, at least on occasion. I often repair my backpack, satchel, or clothing and leave evidence of the repair visible. My family thinks it's odd, but they figure it's just me.

Do I do this in code? I don't think so. I tend to like clean code, with no distractions for future readers. The closest thing to Kintsugi I can think of now is a comment that mentions where some bit of code came from, especially if the current code is not intuitive to me at the time. Perhaps my memory is failing me, though. I'll be on the watch for this practice as I program.

• "It is good to watch the master."

I've been reading a rundown of the top 128 tennis players of the last hundred years, including this one about Pancho Gonzalez, one of the great players of the 1940s, '50s, and '60s. He was forty years old when the Open Era of tennis began in 1968, well past his prime. Even so, he could still compete with the best players in the game.

Even his opponents could appreciate the legend in their midst. Denmark's Torben Ulrich lost to him in five sets at the 1969 US Open. "Pancho gives great happiness," he said. "It is good to watch the master."

The old masters give me great happiness, too. With any luck, I can give a little happiness to my students now and then.

Posted by Eugene Wallingford | Permalink | Categories: Personal, Software Development, Teaching and Learning

December 11, 2022 9:09 AM

Living with AI in a World Where We Change the World to Accommodate Our Technologies

My social media feeds are full of ChatGPT screenshots and speculation these days, as they have been with LLMs and DALL-E and other machine learning-based tools for many months. People wonder what these tools will mean for writers, students, teachers, artists, and anyone who produces ordinary text, programs, and art.

These are natural concerns, given their effect on real people right now. But if you consider the history of human technology, they miss a bigger picture. Technologies often eliminate the need for a certain form of human labor, but they just as often create a new form of human labor. And sometimes, they increase the demand for the old kind of labor! If we come to rely on LLMs to generate text for us, where will we get the text with which to train them? Maybe we'll need people to write even more replacement-level prose and code!

As Robin Sloan reminds us in the latest edition of his newsletter, A Year of New Avenues, we redesign the world to fit the technologies we create and adopt.

Likewise, here's a lesson from my work making olive oil. In most places, the olive harvest is mechanized, but that's only possible because olive groves have been replanted to fit the shape of the harvesting machines. A grove planted for machine harvesting looks nothing like a grove planted for human harvesting.

Which means that our attention should be on how programs like GPT-2 might lead us to redesign the world we live and work in better to accommodate these new tools:

For me, the interesting questions sound more like:

- What new or expanded kinds of human labor might AI systems demand?

- What entirely new activities do they suggest?

- How will the world now be reshaped to fit their needs?

That last question will, on the timescale of decades, turn out to be the most consequential, by far. Think of cars ... and of how dutifully humans have engineered a world just for them, at our own great expense. What will be the equivalent, for AI, of the gas station, the six-lane highway, the parking lot?

Many professors worry that ChatGPT makes their homework assignments and grading rubrics obsolete, which is a natural concern in the short run. I'm old enough that I may not live to work in a world with the AI equivalent of the gas station, so maybe that world seems too far in the future to be my main concern. But the really interesting questions for us to ask now revolve around how tools such as these will lead us to redesign our worlds to accommodate and even serve them.

Perhaps, with a little thought and a little collaboration, we can avoid engineering a world for them at our own great expense. How might we benefit from the good things that our new AI technologies can provide us while sidestepping some of the highest costs of, say, the auto-centric world we built? Trying to answer that question is a better long-term use of our time and energy than fretting about our "Hello, world!" assignments and advertising copy.

Posted by Eugene Wallingford | Permalink | Categories: Computing, Software Development, Teaching and Learning

December 04, 2022 9:18 AM

If Only Ants Watched Netflix...

In the essay "On Societies as Organisms", Lewis Thomas says that we "violate science" when we try to read human meaning into the structures and behaviors of insects. But it's hard not to:

Ants are so much like human beings as to be an embarrassment. They farm fungi, raise aphids as livestock, launch armies into wars, use chemical sprays to alarm and confuse enemies, capture slaves. The families of weaver ants engage in child labor, holding their larvae like shuttles to spin out the thread that sews the leaves together for their fungus gardens. They exchange information ceaselessly. They do everything but watch television.

I'm not sure if humans should be embarrassed for still imitating some of the less savory behaviors of insects, or if ants should be embarrassed for reflecting some of the less savory behaviors of humans.

Biology has never been my forte, so I've read and learned less about it than about many other sciences. Enjoying chemistry a bit at least helped keep me within range of the life sciences. I was fortunate to grow up in the Digital Age.

But with many people thinking the 21st century will be the Age of Biology, I feel like I should get more in tune with the times. I picked up Thomas's now-classic The Lives of a Cell, in which the quoted essay appears, as a brief foray into biological thinking about the world. I'm only a few pages in, but it is striking a chord. I can imagine so many parallels with computing and software. Perhaps I can be as at home in the 21st century as I was in the 20th.

November 27, 2022 9:38 AM

I Toot From the Command Line, Therefore I Am

Like so many people, I have been checking out new social media options in the face of Twitter's upheaval. None are ideal, but for now I have focused most of my attention on Mastodon, a federation of servers implemented using the ActivityPub protocol. Mastodon has an open API, which makes it attractive to programmers. I've had an account there for a few years (I like to grab username wallingf whenever a new service comes out) but, like so many people, hadn't really used it. Now feels more like the time.

On Friday, I spent a few minutes writing a small script that posts to my Mastodon account from the command line. I occasionally find that sort of thing useful, so the script has practical value. Really, though, I just wanted to play a bit in code and take a look at Mastodon's API.

Several people in my feed posted, boosted, and retweeted a link to this DEV Community article, which walks readers through the process of posting a status update using curl or Python. Everything worked exactly as advertised, with one small change: the Developers link that used to be in the bottom left corner of one's Mastodon home page is now a Development link on the Preferences page.

I've read a lot in the last few weeks about how the culture of Mastodon is different from the culture of Twitter. I'm trying to take the different culture seriously. One concrete example is the use of content warnings or spoiler alerts to hide content behind a brief phrase or tag. This seems like a really valuable practice, useful in a number of different contexts. At the very least, it feels like the Subject: line on an email message or a Usenet News post. So I looked up how to post content warnings with my command-line script. It was dead simple, all done in a few minutes.
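Here is a minimal sketch of such a request, using only Python's standard library. It is an illustration, not the article's code or the script described here. It assumes Mastodon's `POST /api/v1/statuses` endpoint, an access token sent as a bearer token, and the `spoiler_text` form field for the content warning. The server name and token are placeholders, and the function names are hypothetical.

```python
import json
import urllib.parse
import urllib.request


def build_status_request(server, token, status, content_warning=None):
    """Build (but do not send) a POST to Mastodon's /api/v1/statuses endpoint."""
    fields = {"status": status}
    if content_warning:
        # spoiler_text is shown as the content warning; the status hides behind it.
        fields["spoiler_text"] = content_warning
    data = urllib.parse.urlencode(fields).encode("utf-8")
    return urllib.request.Request(
        f"https://{server}/api/v1/statuses",
        data=data,
        headers={"Authorization": f"Bearer {token}"},
        method="POST",
    )


def post_status(server, token, status, content_warning=None):
    """Send the request and return the server's JSON reply as a dict."""
    request = build_status_request(server, token, status, content_warning)
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

Separating the builder from the sender makes it possible to inspect the request without touching the network. A call like `post_status("mastodon.example", "YOUR-TOKEN", "Hello!", "greetings")` would post only with a real server and token.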

There may be efficiency problems under the hood with how Mastodon requests work, or so I've read. The public interface seems well done, though.

I went with Python for my script, rather than curl. That fits better with most of my coding these days. It also makes it easier to grow the script later, if I want. bash is great for a few lines, but I don't like to live inside bash for very long. On any code longer than a few lines, I want to use a programming language. At a couple of dozen lines, my script was already long enough to merit a real language. I went mostly YAGNI this time around. There are no classes, just a sequence of statements that build the HTTP request from some constants (server name, authorization token) and command-line args (the post, the content warning). I did factor the server name and authorization token out of the longer strings, and I included an option to write the post via stdin. I want the flexibility of writing longer toots now, and I don't like magic constants: if I ever need to change servers or tokens, I won't have to look past the first few lines of the file.
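A sketch of the command-line layer described above might look like the following. It is a hypothetical illustration rather than the actual script: the constants `SERVER` and `TOKEN`, the function `parse_post`, and the `-w/--warning` flag are all assumed names. The constants sit at the top of the file, the post comes from an argument or from stdin, and an optional flag supplies the content warning.

```python
import argparse
import sys

# Hypothetical placeholders, factored out so they live in one place.
SERVER = "mastodon.example"
TOKEN = "YOUR-ACCESS-TOKEN"
STATUSES_URL = f"https://{SERVER}/api/v1/statuses"
HEADERS = {"Authorization": f"Bearer {TOKEN}"}


def parse_post(argv, read_stdin=sys.stdin.read):
    """Return (status, content_warning) from command-line arguments.

    If no status text is given, or it is "-", the post is read from stdin,
    which makes longer toots easy to write.
    """
    parser = argparse.ArgumentParser(description="Post a status to Mastodon.")
    parser.add_argument("status", nargs="?", default="-",
                        help='text of the post, or "-" to read it from stdin')
    parser.add_argument("-w", "--warning", default=None,
                        help="optional content warning (spoiler text)")
    args = parser.parse_args(argv)
    status = read_stdin().strip() if args.status == "-" else args.status
    return status, args.warning
```

Passing `read_stdin` as a parameter keeps the function testable without a terminal. In a real script, `parse_post(sys.argv[1:])` would feed the status and warning into a request sent to `STATUSES_URL` with `HEADERS`.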

As I briefly imagined morphing the small but growing script into a Toot class, I recalled a project I gave my Intermediate Computing students back in 2009 or so: implement the barebones framework of a Twitter-like application. That felt cutting edge back then, and most of the students really liked putting their new OO design and programming skills to use in a program that seemed to matter. It was good fun, and a great playground for so many of the ideas they had learned that semester.
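The core of a barebones Twitter-like framework fits in a few dozen lines. The sketch below is a hypothetical in-memory design, not the 2009 assignment. Users follow one another, each post carries an increasing sequence number, and a timeline merges a user's own posts with those of everyone they follow, newest first. The class and method names are illustrative assumptions.

```python
from dataclasses import dataclass, field
from itertools import count

_sequence = count(1)  # global, increasing post numbers give a total order


@dataclass
class Toot:
    author: str
    text: str
    seq: int = field(default_factory=lambda: next(_sequence))


class Network:
    """A barebones, in-memory Twitter-like service (hypothetical design)."""

    def __init__(self):
        self.following = {}  # user -> set of users they follow
        self.posts = {}      # user -> list of that user's Toots, oldest first

    def join(self, user):
        self.following.setdefault(user, set())
        self.posts.setdefault(user, [])

    def follow(self, follower, followee):
        self.following[follower].add(followee)

    def post(self, user, text):
        toot = Toot(user, text)
        self.posts[user].append(toot)
        return toot

    def timeline(self, user, limit=10):
        """Newest-first toots from the user and everyone the user follows."""
        authors = self.following[user] | {user}
        toots = [t for author in authors for t in self.posts[author]]
        toots.sort(key=lambda t: t.seq, reverse=True)
        return toots[:limit]
```

Even this much leaves room for the OO design questions a class can chew on: where following relationships should live, how to order posts, and what a timeline should cost to compute.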

All in all, this was a great way to spend a few minutes on a free afternoon. The API was simple to use, and the result is a usable new command. I probably should've been grading or doing some admin work, but profs need a break, too. I'm thankful to enjoy little programming projects so much.

October 31, 2022 6:11 PM

The Inventor of Assembly Language

This weekend, I learned that Kathleen Booth, a British mathematician and computer scientist, invented assembly language. An October 29 obituary reported that Booth died on September 29 at the age of 100. By 1950, when she received her PhD in applied mathematics from the University of London, she had already collaborated on building at least two early digital computers. But her contributions weren't limited to hardware:

As well as building the hardware for the first machines, she wrote all the software for the ARC2 and SEC machines, in the process inventing what she called "Contracted Notation" and would later be known as assembly language.

Her 1958 book, Programming for an Automatic Digital Calculator, may have been the first one on programming written by a woman.

I love the phrase "Contracted Notation".

Thanks to several people in my Twitter feed for sharing this link. Here's hoping that Twitter doesn't become uninhabitable, or that a viable alternative arises; otherwise, I'm going to miss out on a whole lotta learning.

October 23, 2022 9:54 AM

Here I Go Again: Carmichael Numbers in Graphene

I've been meaning to write a post about my fall compilers course since the beginning of the semester but never managed to set aside time to do anything more than jot down a few notes. Now we are at the end of Week 9 and I just must write. Long-time readers know what motivates me most: a fun program to write in my students' source language!

TFW you run across a puzzle and all you want to do now is write a program to solve it. And teach your students about the process.

-- https://twitter.com/wallingf/status/1583841233536884737

Yesterday, it wasn't a puzzle so much as discovering a new kind of number, Carmichael numbers. Of course, I didn't discover them (neither did Carmichael, though); I learned of them from a Quanta article about a recent proof about these numbers that masquerade as primes. One way of defining this set comes from Korselt:

A positive composite integer n is a Carmichael number if and only if it has multiple prime divisors, no prime divisor repeats, and for each prime divisor p, p-1 divides n-1.
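Korselt's criterion translates almost directly into code. Here is a sketch in Python rather than Graphene, using simple trial-division factoring, which is fine for small n and far too slow for large ones. The function names are hypothetical. `passes_fermat` shows the masquerade: for a Carmichael number, `pow(b, n - 1, n) == 1` for every base b coprime to n, just as Fermat's little theorem promises for a prime.

```python
from math import gcd


def prime_factors(n):
    """Return the prime factorization of n as a dict {prime: exponent}."""
    factors = {}
    d = 2
    while d * d <= n:
        while n % d == 0:
            factors[d] = factors.get(d, 0) + 1
            n //= d
        d += 1
    if n > 1:  # whatever remains is a prime factor
        factors[n] = factors.get(n, 0) + 1
    return factors


def is_carmichael(n):
    """Korselt: multiple prime divisors, none repeated, and p-1 | n-1 for each."""
    if n < 2:
        return False
    factors = prime_factors(n)
    if len(factors) < 2:                       # rules out primes and prime powers
        return False
    if any(e > 1 for e in factors.values()):   # some prime divisor repeats
        return False
    return all((n - 1) % (p - 1) == 0 for p in factors)


def passes_fermat(n):
    """True if b^(n-1) = 1 (mod n) for every base b in 2..n-1 coprime to n."""
    return all(pow(b, n - 1, n) == 1 for b in range(2, n) if gcd(b, n) == 1)
```

Running `[n for n in range(2, 10000) if is_carmichael(n)]` yields 561, 1105, 1729, 2465, 2821, 6601, and 8911. The smallest, 561, is 3 × 11 × 17, and 560 is divisible by 2, 10, and 16.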