April 21, 2024 12:41 PM

The Truths We Express To Children Are Really Our Hopes

In her Conversation with Tyler, scholar Katherine Rundell said something important about the books we give our children:

Children's novels tend to teach the large, uncompromising truths that we hope exist. Things like love will matter, kindness will matter, equality is possible. I think that we express them as truths to children when what they really are are hopes.

This passage immediately brought to mind Marick's Law: In software, anything of the form "X's Law" is better understood by replacing the word "Law" with "Fervent Desire". (More on this law below.)

Though they comment on different worlds, these two ideas are very much in sync. In software and in so many other domains, we coin laws that are really expressions of our aspirations. This is no less true of how we interact with young people.

We usually think that our job is to teach children the universal truths we have discovered about the world, but what we really teach them is our view of how the world can or should be. We can do that by our example. We can also do that with good books.

But aren't the universal truths in our children's literature true? Sometimes, perhaps, but not all of them are true all of the time, or for all people. When we tell stories, we are describing the world we want for our children, and giving them the hope, and perhaps the gumption, to make our truths truer than we ourselves have been able to.

I found myself reading lots of children's books and YA fiction when my daughters were young: to them, and with them, and on their recommendation. Some of them affected me enough that I quoted them in blog posts. There are so many good books for our youth in the library: honest, relevant to their experiences, aspirational, exemplary. I concur with Rundell's suggestion that adults should read children's fiction occasionally, both for pleasure and "for the unabashed politics of idealism that they have".

More on Marick's Law and Me

I remember posting Marick's Law on this blog in October 2015, when I wanted to share a link to it with Mike Feathers. Brian had tweeted the law in 2009, but a link to a tweet didn't feel right, not at a time when the idealism of the open web was still alive. In my post, I said "This law is too important to be left vulnerable to the vagaries of an internet service, so let's give it a permanent home".

In 2015, the idea that Twitter would take a weird turn, change its name to X, and become a place many of my colleagues don't want to visit anymore seemed far-fetched. Fortunately, Brian's tweet is still there and, at least for now, publicly viewable via redirect. Even so, given the events of the last couple of years, I'm glad I trusted my instincts and gave the law a more permanent home on Knowing and Doing. (Will this blog outlive Twitter?)

The funny thing, though, is that that wasn't its first appearance here. I found the 2015 URL for use in this post by searching for the word "fervent" in my Software category. That search also brought up a Posts of the Day post from April 2009 — the day after Brian tweeted the law. I don't remember that post now, and I guess I didn't remember it in 2015 either.

Sometimes, "Great minds think alike" doesn't require two different people. With a little forgetfulness, they can be Past Me and Current Me.

March 31, 2024 9:18 AM

A Man to Go to Work, A Man to Stay at Home

I was listening to some music from the 1970s yesterday morning while doing some academic bookkeeping. As happens occasionally, the lyrics of one of the songs jerked me out of my bureaucratic trance by echoing my subconscious:

I need to be three men in one

To get my job done

I need a thirty hour day

Two jobs with double pay

I need a man to go to work

A man to stay at home

That's William Bell in his 1977 R&B crossover hit "Tryin' to Love Two" [ YouTube ].

I love only one, truly, but... University work has been unusually busy the last couple of weeks, and now we enter April, which is always a hyperactive month on campus. Add to that regular life — tax season and plans for May travel and wanting to spend time with the one I love — and I empathize with Bell wanting to be two or three people all at once. A doppelgänger to attend all my extra meetings would certainly be welcome some days!

At times like this, though, it's good to remember how lucky I am that this is the biggest predicament I face. So: hello, April.

February 29, 2024 3:45 PM

Finding the Torture You're Comfortable With

At some point last week, I found myself pointed to this short YouTube video of Jerry Seinfeld talking with Howard Stern about work habits. Seinfeld told Stern that he was essentially always thinking about making comedy. Whatever situation he found himself in, even with family and friends, he was thinking about how he could mine it for new material. Stern told him that sounded like torture. Jerry said, yes, it was, but...

Your blessing in life is when you find the torture you're comfortable with.

This is something I talk about with students a lot.

Sometimes it's a current student who is worried that CS isn't for them because too often the work seems hard, or boring. Shouldn't it be easy, or at least fun?

Sometimes it's a prospective student, maybe a high school student on a university visit or a college student thinking about changing their major. They worry that they haven't found an area of study that makes them happy all the time. Other people tell them, "If you love what you do, you'll never work a day in your life." Why can't I find that?

I tell them all that I love what I do -- studying, teaching, and writing about computer science -- and even so, some days feel like work.

I don't use torture as an analogy the way Seinfeld does, but I certainly know what he means. Instead, I usually think of this phenomenon in terms of drudgery: all the grunt work that comes with setting up tools, and fiddling with test cases, and formatting documentation, and ... the list goes on. Sometimes we can automate one bit of drudgery, but around the corner awaits another.

And yet we persist. We have found the drudgery we are comfortable with, the grunt work we are willing to do so that we can be part of the thing it serves: creating something new, or understanding one little corner of the world better.

I experienced the disconnect between the torture I was comfortable with and the torture that drove me away during my first year in college. As I've mentioned here a few times, most recently in my post on Niklaus Wirth, from an early age I had wanted to become an architect (the kind who design houses and other buildings, not software). I spent years reading about architecture and learning about the profession. I even took two drafting courses in high school, including one in which we designed a house and did a full set of plans, with cross-sections of walls and eaves.

Then I got to college and found two things. One, I still liked architecture in the same way as I always had. Two, I most assuredly did not enjoy the kind of grunt work that architecture students had to do, nor did I relish the torture that came with not seeing a path to a solution for a thorny design problem.

That was so different from the feeling I had writing BASIC programs. I would gladly bang my head on the wall for hours to get the tiniest detail just the way I wanted it, either in the code or in the output. When the torture ended, the resulting program made all the pain worth it. Then I'd tackle a new problem, and it started again.

Many of the students I talk with don't yet know this feeling. Even so, it comforts some of them to know that they don't have to find The One Perfect Major that makes all their boredom go away.

However, a few others understand immediately. They are often the ones who learned to play a musical instrument or who ran cross country. The pianists remember all the boring finger exercises they had to do; the runners remember all the wind sprints and all the long, boring miles they ran to build their base. These students stuck with the boredom and worked through the pain because they wanted to get to the other side, where satisfaction and joy are.

Like Seinfeld, I am lucky that I found the torture I am comfortable with. It has made this life a good one. I hope everyone finds theirs.

Posted by Eugene Wallingford | Permalink | Categories: Computing, Personal, Running, Software Development, Teaching and Learning

February 09, 2024 3:45 PM

Finding Cool Ideas to Play With

In a recent post on Computational Complexity, Bill Gasarch wrote up the solution to a fun little dice problem he had posed previously. Check it out. After showing the solution, he answered some meta-questions. I liked this one:

How did I find this question, and its answer, at random? I intentionally went to the math library, turned my cell phone off, and browsed some back issues of the journal Discrete Mathematics. I would read the table of contents and decide what article sounded interesting, read enough to see if I really wanted to read that article. I then SAT DOWN AND READ THE ARTICLES, taking some notes on them.

He points out that turning off his cell phone isn't the secret to his method.

It's allowing yourself the freedom to NOT work on a paper for the next ... conference and just read math for FUN without thinking in terms of writing a paper.

Slack of this sort used to be one of the great attractions of the academic life. I'm not sure it is as much a part of the deal as it once was. The pace of the university seems faster these days. Many of the younger faculty I follow out in the world seem always to be hustling for the next conference acceptance or grant proposal. They seem truly joyous when an afternoon turns into a serendipitous session of debugging or reading.

Gasarch's advice is wise, if you can follow it: Set aside time to explore, and then do it.

It's not always easy fun; reading some articles is work. But that's the kind of fun many of us signed up for when we went into academia.

~~~~~

I haven't made enough time to explore recently, but I did get to re-read an old paper unexpectedly. A student came to me to discuss possible undergrad research projects. He had recently been noodling around, implementing his own neural network simulator. I've never been much of a neural net person, but that reminded me of this paper on PushForth, a concatenative language in the spirit of Forth and Joy designed as part of an evolutionary programming project. Genetic programming has always interested me, and concatenative languages seem like a perfect fit...

I found the paper in a research folder and made time to re-read it for fun. This is not the kind of fun Gasarch is talking about, as it had potential use for a project, but I enjoyed digging into the topic again nonetheless.

The student looked at the paper and liked the idea, too, so we embarked on a little project -- not quite serendipity, but a project I hadn't planned to work on at the turn of the new year. I'll take it!

January 06, 2024 10:41 AM

end.

My social media feed this week has included many notes and tributes on the passing of Niklaus Wirth, including his obituary from ETH Zurich, where he was a professor. Wirth was, of course, a Turing Award winner for his foundational work designing a sequence of programming languages.

Wirth's death reminded me of END DO, my post on the passing of John Backus, and before that a post on the passing of Kenneth Iverson. I have many fond memories related to Wirth as well.

Pascal

Pascal was, I think, the fifth programming language I learned. After that, my language-learning history starts to speed up and blur. (I do think APL and Lisp came soon after.)

I learned BASIC first, as a junior in high school. This ultimately changed the trajectory of my life, as it planted the seeds for me to abandon a lifelong dream to be an architect.

Then at university, I learned Fortran in CS 1, PL/I in Data Structures (you want pointers!), and IBM 360/370 assembly language in a two-quarter sequence that also included JCL. Each of these languages expanded my mind a little.

Pascal was the first language I learned "on my own". The fall of my junior year, I took my first course in algorithms. On Day 1, the professor announced that the department had decided to switch to Pascal in the intro course, so that's what we would use in this course.

"Um, prof, that's what the new CS majors are learning. We know Fortran and PL/I." He smiled, shrugged, and turned to the chalkboard. Class began.

After class, several of us headed immediately to the university library, checked out one Pascal book each, and headed back to the dorms to read. Later that week, we were all using Pascal to implement whatever classical algorithm we learned first in that course. Everything was fine.

I've always treasured that experience, even if it was a little scary for a week or so. And don't worry: That professor turned out to be a good guy with whom I took several courses. He was a fellow chess player and ended up being the advisor on my senior project: a program implementing the Swiss system commonly used to run chess tournaments. I wrote that program in... Pascal. Up to that point, it was the largest and most complex program I had ever written solo. I still have the code.

The first course I taught as a tenure-track prof was my university's version of CS 1 -- using Pascal.

Fond memories all. I miss the language.

Wirth sightings in this blog

I did a quick search and found that Wirth has made an occasional appearance in this blog over the years.

• January 2006: Just a Course in Compilers

This was written at the beginning of my second offering of our compiler course, which I have taught and written about many times since. I had considered using as our textbook Wirth's Compiler Construction, a thin volume that builds a compiler for a subset of Wirth's Oberon programming language over the course of sixteen short chapters. It's a "just the facts and code" approach that appeals to me most days.

I didn't adopt the book for several reasons, not least of which was that at the time Amazon showed only four copies available, starting at $274.70 each. With two decades of experience teaching the course now, I don't think I could ever really use this book with my undergrads, but it was a fun exercise for me to work through. It helped me think about compilers and my course.

Note: A PDF of Compiler Construction has been posted on the web for many years, but every time I link to it, the link ultimately disappears. I decided to mirror the files locally, so that the link will last as long as this post lasts:

[ Chapters 1-8 | Chapters 9-16 ]

• September 2007: Hype, or Disseminating Results?

... in which I quote Wirth's thoughts on why Pascal spread widely in the world but Modula and Oberon didn't. The passage comes from a short historical paper he wrote called "Pascal and its Successors". It's worth a read.

• April 2012: Intermediate Representations and Life Beyond the Compiler

This post mentions how Wirth's P-code IR ultimately lived on in the MIPS compiler suite long after the compiler which first implemented P-code.

• July 2016: Oberon: GoogleMaps as Desktop UI

... which notes that the Oberon spec defines the language's desktop as "an infinitely large two-dimensional space on which windows ... can be arranged".

• November 2017: Thousand-Year Software

This is my last post mentioning Wirth before today's. It refers to the same 1999 SIGPLAN Notices article that tells the P-code story discussed in my April 2012 post.

I repeat myself. Some stories remain evergreen in my mind.

The Title of This Post

I titled my post on the passing of John Backus END DO in homage to his intimate connection to Fortran. I wanted to do something similar for Wirth.

Pascal has a distinguished sequence to end a program: "end.". It seems a fitting way to remember the life of the person who created it and who gave the world so many programming experiences.

December 31, 2023 1:35 PM

"I Want to Find Something to Learn That Excites Me"

In his year-end wrap-up, Greg Wilson writes:

I want to find something to learn that excites me. A new musical instrument is out because of my hand; I've thought about reviving my French, picking up some Spanish, diving into Postgres or machine learning (yeah, yeah, I know, don't hate me), but none of them are making my heart race.

What he said. I want to find something to learn that excites me.

I just spent six months immersed in learning more about HTML, CSS, and JavaScript so that I could work with novice web developers. Picking up that project was one part personal choice and one part professional necessity. It worked out well. I really enjoyed studying the web development world and learned some powerful new tools. I will continue to use them as time and energy permit.

But I can't say that I am excited enough by the topic to keep going in this area. Right now, I am still burned out from the semester on a learning treadmill. I have a followup post to my early reactions about the course's JavaScript unit in the hopper, waiting for a little desire to finish it.

What now? There are parallels between my state and Wilson's.

- After my first-ever trip to Europe in 2019, for a Dagstuhl seminar (brief mention here), my wife and I talked about a return trip, with a focus this time on Italy. Learning Italian was part of the nascent plan. Then came COVID, along with a loss of energy for travel. I still have learning Italian in my mind.

- In the fall of 2020, the first full semester of the pandemic, I taught a database course for the first time (bookend posts here and here). I still have a few SQL projects and learning goals hanging around from that time, but none are calling me right now.

- LLMs are the main focus of so many people's attention these days, but they still haven't lit me up. In some ways, I envy David Humphrey, who fell in love with AI this year. Maybe something about LLMs will light me up one of these days. (As always, you should read David's stuff. He does neat work and shares it with the world.)

Unlike Wilson, I do not play a musical instrument. I did, however, learn a little basic piano twenty-five years ago when I was a Suzuki piano parent with my daughters. We still have our piano, and I harbor dreams of picking it back up and going farther some day. Right now doesn't seem to be that day.

I have several other possibilities on the back burner, particularly in the area of data analytics. I've been intrigued by the work on data-centric computing in education that Kathi Fisler and Shriram Krishnamurthi have been doing at Brown. I will also be reading a couple of their papers on program design and plan composition in the coming weeks as I prepare for my programming languages course this spring. Fisler and Krishnamurthi are coming at these topics from the side of CS education, but the topics are also related to my grad-school work in AI. Maybe these papers will ignite a spark.

Winter break is coming to an end soon. Like others, I'm thinking about 2024. Let's see what the coming weeks bring.

July 04, 2023 11:55 AM

Time Out

Any man can call time out, but no man can say how long the time out will be.

-- Books of Bokonon

I realized early last week that it had been a while since I blogged. June was a morass of administrative work, mostly summer orientation. Over the month, I had made notes for several potential posts, on my web dev course, on the latest book I was reading, but never found -- made -- time to write a full post. I figured this would be a light month, only a couple of short posts, if only I could squeeze another one in by Friday.

Then I saw that the date of my most recent post was May 26, with the request for ideas about the web course coming a week before.

I no longer trust my sense of time.

This blog has certainly become much quieter over the years, due in part to the kind and amount of work I do and in part to choices I make outside of work. I may even have gone a month between posts a few fallow times in the past. But June 2023 became my first calendar month with zero posts.

It's somewhat surprising that a summer month would be the first to shut me out. Summer is a time of no classes to teach, fewer student and faculty issues to deal with, and fewer distinct job duties. This occurrence is a testament to how much orientation occupies many of my summer days, and how at other times I just want to be AFK.

A real post or two are on their way, I promise -- a promise to myself, as well as to any of you who are missing my posts in your newsreader. In the meantime...

On the web dev course: thanks to everyone who sent thoughts! There were a few unanimous, or near unanimous, suggestions, such as to have students use VS Code. I am now learning it myself, and getting used to an IDE that autocompletes pairs such as "". My main prep activity up to this point has been watching David Humphrey's videos for WEB 222. I have been learning a little HTML and JavaScript and a lot of CSS and how these tools work together on the modern web. I'm also learning how to teach these topics, while thinking about the differences between my student audience and David's.

On the latest book: I'm currently reading Shop Class as Soulcraft, by Matthew Crawford. It came out in 2010 and, though several people recommended it to me then, I had never gotten around to it. This book is prompting so many ideas and thoughts that I'm constantly jotting down notes and thinking about how these ideas might affect my teaching and my practice as a programmer. I have a few short posts in mind based on the book, if only I commit time to flesh them out. Here are two passages, one short and one long, from my notes.

Fixing things may be a cure for narcissism.

Countless times since that day, a more experienced mechanic has pointed out to me something that was right in front of my face, but which I lacked the knowledge to see. It is an uncanny experience; the raw sensual data reaching my eye before and after are the same, but without the pertinent framework of meaning, the features in question are invisible. Once they have been pointed out, it seems impossible that I should not have seen them before.

Both strike a chord for me as I learn an area I know only the surface of. Learning changes us.

May 26, 2023 12:37 PM

It's usually counterproductive to be doctrinaire

A short passage from Innocence, by Penelope Fitzgerald:

In 1927, when they moved me from Ustica to Milan, I was allowed to plant a few seeds of chicory, and when they came up I had to decide whether to follow Rousseau and leave them to grow by the light of nature, or whether to interfere in the name of knowledge and authority. What I wanted was a decent head of chicory. It's useless to be doctrinaire in such circumstances.

Sometimes, you just want a good head of chicory -- or a working program. Don't let philosophical ruminations get in the way. There will be time for reflection and evaluation later.

A few years ago, I picked up Fitzgerald's short novel The Bookshop while browsing the stacks at the public library. I enjoyed it despite the fact that (or perhaps because) it ended in a way that didn't create a false sense of satisfaction. Since then I have had Fitzgerald on my list of authors to explore more. I've read the first fifty pages or so of Innocence and quite like it.

May 07, 2023 8:36 AM

"The Society for the Diffusion of Useful Knowledge"

I just started reading Joshua Kendall's The Man Who Made Lists, a story about Peter Mark Roget. Long before compiling his namesake thesaurus, Roget was a medical doctor with a local practice. After a family tragedy, though, he returned to teaching and became a science writer:

In the 1820s and 1830s, Roget would publish three hundred thousand words in the Encyclopaedia Britannica and also several lengthy review articles for the Society for the Diffusion of Useful Knowledge, the organization affiliated with the new University of London, which sought to enable the British working class to educate itself.

What a noble goal, enabling the working class to educate itself. And what a cool name: The Society for the Diffusion of Useful Knowledge!

For many years, my university has provided a series of talks for retirees, on topics from various departments on campus. This is a fine public service, though without the grand vision -- or the wonderful name -- of the Society for the Diffusion of Useful Knowledge. I suspect that most universities these days depend too much on tuition, and are too focused on cutting costs, to mount an ambitious effort to enable the working class to educate itself.

Mental illness ran in Roget's family. Kendall wonders if Roget's "lifelong desire to bring order to the world" -- through his lecturing, his writing, and ultimately his thesaurus, which attempted to classify every word and concept -- may have "insulated him from his turbulent emotions" and helped him stave off the depression that afflicted several of his family members.

Academics often have an obsessive connection with the disciplines they practice and study. Certainly that sort of focus can be bad for a person when taken too far. (Is it possible for an obsession not to go too far?) For me, though, the focus of studying something deeply, organizing its parts, and trying to communicate it to others through my courses and writing has always felt like a gift. The activity has healing properties all its own.

In any case, the name "The Society for the Diffusion of Useful Knowledge" made me smile. Reading has the power to heal, too.

May 01, 2023 4:21 PM

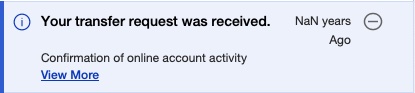

Disconcerted by a Bank Transaction

I'm not sure what to think of the fact that my bank says it received my money NaN years ago:

At least NaN hasn't shown up as my account balance yet! I suppose that if it were the result of an overflow, at least I'd know what it's like to be fabulously wealthy.

(For my non-technical readers, NaN stands for "Not a Number" and is used in computing to represent a value that is undefined or not representable. You may be able to imagine why seeing this in a bank transaction would be disconcerting to a programmer!)
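For readers who program, here is a minimal Python sketch of one way a NaN can leak into a display like this. It assumes, purely hypothetically, that a record stores dates as day counts and that a missing date is stored as a floating-point NaN; the helpers years_since and describe are made up for illustration and say nothing about how any real bank's software works.

```python
import math

def years_since(received_day: float, today_day: float) -> float:
    """Hypothetical helper: years between two day counts.
    Any NaN input silently propagates to a NaN result."""
    return (today_day - received_day) / 365.25

def describe(elapsed: float) -> str:
    """Hypothetical display helper that guards against NaN."""
    if math.isnan(elapsed):
        return "date unknown"
    return f"{elapsed:.0f} years ago"

# Hypothetical record: the date the money was received is missing,
# stored as NaN, while "today" is day 19843 of some epoch.
received = float("nan")
elapsed = years_since(received, 19843.0)

print(elapsed)             # nan
print(elapsed == elapsed)  # False: NaN is not equal even to itself
print(describe(elapsed))   # date unknown
print(describe(2.0))       # 2 years ago
```

The guard matters because NaN compares unequal to everything, including itself, so math.isnan is the reliable check. Without it, the f-string would happily format the NaN and produce "nan years ago".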

April 26, 2023 12:15 PM

Cultivating a Way of Seeing

Sometimes, I run across a sentence I wish I had written. Here are several paragraphs by Dan Bouk I would be proud to have written.

Museums offer a place to practice looking for and acknowledging beauty. This is, mostly, why I visit them.

As I wander from room to room, a pose diverts me, a glance attracts me, or a flash of color draws my eye. And then I look, and look, and look, and then move on.

Outside the museum, I find that this training sticks. I wander from subway car to platform, from park to city street, and a pose diverts me, a glance attracts me, or a flash of color draws my eye. People of no particular beauty reveal themselves to be beautiful. It feels as though I never left the museum, and now everything, all around me, is art.

This way of seeing persists, sometimes for days on end. It resonates with and reinforces my political commitment to the equal value of each of my neighbors. It vibrates with my belief in the divine spark, the image of God, that animates every person.

-- Dan Bouk, in On Walking to the Museum, Musing on Beauty and Safety

April 06, 2023 2:59 PM

The Two Meanings of Grace, in Software

In a recent blog post, Why Grace Matters (for Software Development), Avdi Grimm tells the story of how he came to name his training site "Graceful.Dev". Check it out. This passage resolves into the answer:

You know, the word "grace" is interesting, because it has two different meanings. On the one hand, it means beauty in lines or in motion. But if you were raised with a religious background anything like mine, you know that grace is also something that saves you.

And in that moment on the dance floor, I realized that these two meanings of grace are really one and the same thing. Because grace is something that makes space for you to screw up, and then turns it into something beautiful.

I don't think I was raised in the same religious tradition as Avdi, but I was raised in a tradition that valued deeply the notion of grace. Grace manifest in sacrament was a powerful notion to me, one of the religious ideas I found most compelling as I was growing up.

That's probably why Avdi's realization strikes close to home for me. I carry the idea of grace into other parts of my life as part of my cultural DNA. His connection of grace to software feels right. "Grace makes space for you to screw up, and then turns it into something beautiful." -- I imagine that many programmers know this feeling, in a non-religious way, if only vaguely.

March 12, 2023 9:00 AM

A Spectator to Phase Change

Robin Sloan speculates that large language models like ChatGPT have gone through a phase change in what they can accomplish.

AI at this moment feels like a mash-up of programming and biology. The programming part is obvious; the biology part becomes apparent when you see AI engineers probing their own creations the way scientists might probe a mouse in a lab.

Like so many people, I find my social media and blog feeds filled with ChatGPT and LLMs and DALL-E and ... speculation about what these tools mean for (1) the production of text and code, and (2) learning to write and program. A lot of that speculation is tinged with fear.

I admire Sloan's effort to be constructive in his approach to the uncertainty:

I've found it helpful, these past few years, to frame my anxieties and dissatisfactions as questions. For example, fed up with the state of social media, I asked: what do I want from the internet, anyway?

It turns out I had an answer to that question.

Where the GPT-alikes are concerned, a question that's emerging for me is:

What could I do with a universal function — a tool for turning just about any X into just about any Y with plain language instructions?

I admit that I am reacting to these developments slowly compared to many people. That's my style in most things: I am more likely to under-react to a change than to over-react, especially at the onset of the change. In this case, there is no chance of immediate peril, so waiting to see what happens as people use these tools seems like a reasonable approach. I haven't made any effort to use these tools actively (or even been moved to), so any speculating I do would be uninformed by personal experience.

Instead, I read as people whose work I respect experiment with these tools and try to make sense of them. Occasionally, I draw a small, tentative conclusion, such as that prompting these generators is a lot like prompting students. After a few months of reading and a little reflection, I still think the biggest risk we face is probably that we tend to change the world around us to accommodate our technologies. If we put these tools to work for us in ways that enhance what we do, then the accommodation will pay off. If not, then we may, as Daniel Steinberg wrote in one of his newsletters, stop asking the questions we want to ask and start asking only the questions these tools can answer.

Professionally, I think most about the effect that ChatGPT and its ilk will have on programming and CS education. In these regards, I've been paying special attention to reports from David Humphrey, such as this blog post on his university's attempt to grapple with the widespread availability of these tools. David has approached OpenAI with an open mind and written thoughtfully about the promise and the risk. For example, he has written a lot of code with an LLM assistant and found it improving his ability both to write code and to think about problems. Advanced CS students can benefit from this kind of assistance, too, but David wonders how such tools might interfere with students first learning to program.

What do we educators want from generative programming tools anyway? What do I as a programmer and educator want from them?

So, at this point, my personal interaction with the phase change that Sloan describes has been mostly passive: I read about what others are doing and think about the results of their exploration. Perhaps this post is a guilty conscience asserting that I should be doing more. Really, though, I think of it more as an active form of inaction: an effort to collect some of my thinking as I slowly respond to the changes that are coming. Perhaps some day soon I will feel moved to use these tools as I write code of my own. For now, though, I am content to watch from the sidelines. You can learn a lot just by watching.

Posted by Eugene Wallingford | Permalink | Categories: Personal, Software Development, Teaching and Learning

February 26, 2023 8:57 AM

"If I say no, are you going to quit?"

Poet Marvin Bell, in his contribution to the collection Writers on Writing:

The future belongs to the helpless. I am often presented that irresistible question asked by the beginning poet: "Do you think I am any good?" I have learned to reply with a question: "If I say no, are you going to quit?" Because life offers any of us many excuses to quit. If you are going to quit now, you are almost certainly going to quit later. But I have concluded that writers are people who you cannot stop from writing. They are helpless to stop it.

Reading that passage brought to mind Ted Gioia's recent essay on musicians who can't seem to retire. Even after accomplishing much, these artists never seem to want to stop doing their thing.

Just before starting Writers on Writing, I finished Kurt Vonnegut's Sucker's Portfolio, a slim 2013 volume of six stories and one essay not previously published. The book ends with an eighth piece: a short story unfinished at the time of Vonnegut's death. The story ends mid-sentence and, according to the book's editor, at the top of an unfinished typewritten page. In his mid-80s, Vonnegut was creating stories to the end.

I wouldn't mind if, when it's my time to go, folks find my laptop open to some fun little programming project I was working on for myself. Programming and writing are not everything there is to my life, but they bring me a measure of joy and satisfaction.

~~~~~

Reading the Bell, Gioia, and Vonnegut pieces around the same time made for a wonderful confluence this week. So many connections... not least of which is that Bell and Vonnegut both taught at the Iowa Writers' Workshop.

There's also an odd connection between Vonnegut and the Gioia essay. Gioia used a quip attributed to the Roman epigrammist Martial:

Fortune gives too much to many, but enough to none.

That reminded me of a story Vonnegut told occasionally in his public talks. He and fellow author Joseph Heller were at a party hosted by a billionaire. Vonnegut asked Heller, "How does it make you feel to know that guy made more money yesterday than Catch-22 has made in all the years since it was published?" Heller answered, "I have something he'll never have: the knowledge that I have enough."

There's one final connection here, involving me. Marvin Bell was the keynote speaker at Camouflage: Art, Science & Popular Culture, an international conference organized by graphic design prof Roy Behrens at my university and held in April 2006. Participants really did come from all around the world, mostly artists or designers of some sort. Bell read a new poem of his and then spoke of:

the ways in which poetry is like camouflage, how it uses a common vocabulary but requires a second look in order to see what is there.

I gave a talk at the conference called NUMB3RS Meets The DaVinci Code: Information Masquerading as Art. (That title was more timely in 2006 than in 2023...) I presented steganography as a computational form of camouflage: not quite traditional concealment, not quite dazzle, but a form of dispersion uniquely available in the digital world. I recall that audience reaction to the talk was better than I feared when I proposed it to Roy. The computer science topic meshed nicely with the rest of the conference lineup, and the artists and writers who saw the talk seemed to appreciate the analogy. Anyway, lots of connections this week.

February 20, 2023 10:18 AM

Commands I Use

I was catching up on articles in my newsreader and ran across Commands I Use by @gvwilson. That sounded like fun, and I was game:

$ history | awk '{print $2}' | sort | uniq -c | sort -nr > commands.txt

The first four items on my list are essentially the same as Wilson's, and there are a lot of other similarities, too. I don't think this is surprising, given how Unix works and how much sense it makes for software developers to use git.
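The one-liner works because `history` prints each entry as an entry number followed by the command line, so `awk '{print $2}'` pulls out just the command name; `sort | uniq -c` groups identical names and counts them, and `sort -nr` ranks the counts from highest to lowest. A minimal sketch that wraps those same stages in a function and feeds it a few made-up history lines (the function name `rank_commands` and the sample input are illustrative assumptions, not part of Wilson's post or my setup):

```shell
# rank_commands: read `history`-style lines on stdin and print each
# command name with its count, most frequent first. This is just the
# one-liner above wrapped in a function; the name is hypothetical.
rank_commands() {
  awk '{print $2}' | sort | uniq -c | sort -nr
}

# Hypothetical sample lines in the "number, then command" shape that
# `history` prints; real history output will differ.
printf '%s\n' \
  '  1  git status' \
  '  2  ll' \
  '  3  git commit -am "fix typo"' \
  '  4  cd ..' \
  '  5  git push' \
  '  6  ll' | rank_commands
```

On this sample, git comes out on top with a count of 3, followed by ll with 2 and cd with 1. Note that only the first word of each command line is counted, so every git subcommand collapses into a single git entry.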

- git - same caveat as Wilson. Next time, I may look at field and flesh this out.

- ll - my shorthand for ls -al

- emacs - less of a cheat for me. I mostly edit in emacs.

- cd

- mv

- rmbak - my shorthand for rm *~, a form of Wilson's clean

- cpsync - my shorthand for copying a file to a folder for syncing to my office machine

- popd

- pushd - I pushd and popd a lot...

- open

- dirs - ... which means occasionally checking the stack

- mvsync - similar to cpsync but also moves the file (often from the desktop to its permanent home)

- tgz - a 5-line script that bundles the sync folder used by cpsync and mvsync

- cp

- cls

- pwd

- close-journal.py - a substantial Python script; part of my homegrown family accounting system

- rm - aliased to ask for confirmation before deleting

- cat

- /bin/rm - the unaliased command nukes a file with no shame

- more

- xattr

- python3 - same caveat as Wilson

- gzt - an unbundling script

- mkdir

- gooffice - my shorthand for sshing into my office machine

It's interesting to see that I use rm and /bin/rm in roughly even measure. I would have guessed that I used the guarded command in higher proportion.
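For anyone who wants similar shorthands, here is a minimal sketch of how a few of them might be defined in a bash startup file such as `~/.bashrc`. These definitions are assumptions reconstructed from the descriptions in the list, not my actual configuration. The `rmbak` version uses `command rm` so that the guarded `rm` alias does not make it prompt for every backup file, and `--` so that a file name beginning with a dash is not read as an option.

```shell
# Hypothetical alias definitions reconstructed from the list above;
# not an actual startup file.
alias ll='ls -al'               # long listing, dotfiles included
alias rm='rm -i'                # ask for confirmation before each deletion
alias rmbak='command rm -- *~'  # delete Emacs backup files; `command` skips the rm alias
```

Typing the full path `/bin/rm` bypasses the `rm` alias because alias expansion applies only when the first word of a command matches the alias name exactly, which is why the unguarded form is always one path away.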

At the bottom of the tally are a few items I don't use often, or don't generally launch from the command line:

- touch

- racket

- npm

- idle3

- chmod

... and a bunch of typos, including:

- pops

- ce

- nv

- nl

- mdir

- emaxs, emavs, emacd

That was fun! Thanks to Greg for the prompt.

February 13, 2023 10:34 AM

The Exuberance of Bruce Springsteen in Concert

Bruce Springsteen, on why he puts on such an intense physical show:

So the display of exuberance is critical. "For an adult, the world is constantly trying to clamp down on itself," he says. "Routine, responsibility, decay of institutions, corruption: this is all the world closing in. Music, when it's really great, pries that shit back open and lets people back in, it lets light in, and air in, and energy in, and sends people home with that and sends me back to the hotel with it. People carry that with them sometimes for a very long period of time."

This passage is from a 2012 profile of the Boss, We Are Alive: Bruce Springsteen at Sixty-Two. A good read throughout.

Another comment from earlier in the piece has been rumbling around my head since I read it. Many older acts, especially those of Springsteen's vintage, have become essentially "their own cover bands", playing the oldies on repeat for nostalgic fans. The Boss, though, "refuses to be a mercenary curator of his past" and continually evolves as an artist. That's an inspiration I need right now.

January 15, 2023 12:07 PM

You Can Learn A Lot About People Just By Talking With Them

This morning on the exercise bike, I read a big chunk of Daniel Gross and Tyler Talk Talent, from the Conversations with Tyler series. The focus of this conversation is how to identify talent, as a prelude to the release of their book on that topic.

The bit I've read so far has been like most Conversations with Tyler: interesting ideas, with Cowen occasionally offering an offbeat one seemingly for the sake of being offbeat. For example, if the person he is interviewing has read Shakespeare, he might say,

"Well, my hypothesis is that in Romeo and Juliet, Romeo and Juliet don't actually love each other at all. Does the play still make sense?" Just see what they have to say. It's a way of testing their second-order understanding of situations, diversity of characters.

This is a bit much for my taste, but the motivating idea behind talking to people about drama or literature is sound:

It's not something they can prepare for. They can't really fake it. If they don't understand the topic, well, you can switch to something else. But if you can't find anything they can understand, you figure, well, maybe they don't have that much depth or understanding of other people's characters.

It seems to me that this style of interviewing runs a risk of not being equitable to all candidates, and at the very least places high demands on both the interviewee and the interviewer. That said, Gross summarizes the potential value of talking to people about movies, music, and other popular culture in interviews:

I think that works because you can learn a lot from what someone says -- they're not likely to make up a story -- but it's also fun, and it is a common thing many people share, even in this era of HBO and Netflix.

This exchange reminded me of perhaps my favorite interview of all time, one in which I occupied the hot seat.

I was a senior in high school, hoping to study architecture at Ball State University. (Actual architecture... the software thing would come later.) I was a pretty good student, so I applied for Ball State's Whitinger Scholarship, one of the university's top awards. My initial application resulted in me being invited to campus for a personal interview. First, I sat down to write an essay over the course of an hour, or perhaps half an hour. To be honest, I don't remember many details from that part of the day, only sitting in a room by myself for a while with a blue book and writing away. I wrote a lot of essays in those days.

Then I met with Dr. Warren Vander Hill, the director of the Honors College, for an interview. I'd had a few experiences on college campuses in the previous couple of years, but I still felt a little out of my element. Though I came from a home that valued reading and learning, my family background was not academic.

On a shelf behind Dr. Vander Hill, I noticed a picture of him in a Hope College basketball jersey, dribbling during a college game. I casually asked him about it and learned that he had played Division III varsity ball as an undergrad. I just now searched online in hopes of confirming my memory and learned that he is still #8 on the list of Hope's all-time career scoring leaders. I don't recall him slipping that fact into our chat... (Back then, he would have been #2!)

Anyway, we started talking basketball. Somehow, the conversation turned to Oscar Robertson, one of the NBA's all-time great players. He starred at Indianapolis's all-black Crispus Attucks High School and led the school to a state championship in 1955. From there, we talked about a lot of things -- the integration of college athletics, the civil rights movement, the state of the world in 1982 -- but it all seemed to revolve around basketball.

The time flew. Suddenly, the interview period was over, and I headed home. I enjoyed the conversation quite a bit, but on the hour-long drive, I wondered if I'd squandered my chances at the scholarship by using my interview time to talk sports. A few weeks later, though, I received a letter saying that I had been selected as one of the recipients.

That was the beginning of four very good years for me. Maybe I can trace some of that fortune to a conversation about sports. I certainly owe a debt to the skill of the person who interviewed me.

I got to know Dr. Vander Hill better over the next four years and slowly realized that he had probably known exactly what he was doing in that interview. He had found a common interest we shared and used it to start a conversation that opened up into bigger ideas. I couldn't have prepared answers for this conversation. He could see that I wasn't making up a story, that I was genuinely interested in the issues we were discussing and was, perhaps, genuinely interesting. The interview was a lot of fun, for both of us, I think, and he learned a lot about me from just talking.

I learned a lot from Dr. Vander Hill over the years, though what I learned from him that day took a few years to sink in.

Posted by Eugene Wallingford | Permalink | Categories: Managing and Leading, Personal, Teaching and Learning

January 09, 2023 12:19 PM

Thoughts on Software and Teaching from Last Week's Reading

I'm trying to get back into the habit of writing here more regularly. In the early days of my blog, I posted quick snippets every so often. Here's a set to start 2023.

• Falsework

From A Bridge Over a River Never Crossed:

Funnily enough, traditional arch bridges were built by first having a wood framing on which to lay all the stones in a solid arch (YouTube). That wood framing is called falsework, and is necessary until the arch is complete and can stand on its own. Only then is the falsework taken away. Without it, no such bridge would be left standing. That temporary structure, even if no trace is left of it at the end, is nevertheless critical to getting a functional bridge.

Programmers sometimes write a function or an object that helps them build something else that they couldn't easily have built otherwise, then delete the bridge code after they have written the code they really wanted. A big step in the development of a student programmer is when they do this for the first time, and feel in their bones why it was necessary and good.

• Repair as part of the history of an object

From The Art of Imperfection and its link back to a post on making repair visible, I learned about Kintsugi, a practice in Japanese art...

that treats breakage and repair as part of the history of an object, rather than something to disguise.

I follow this pattern around my home, at least on occasion. I often repair my backpack, satchel, or clothing and leave evidence of the repair visible. My family thinks it's odd, but they figure it's just me.

Do I do this in code? I don't think so. I tend to like clean code, with no distractions for future readers. The closest thing to Kintsugi I can think of now are comments that mention where some bit of code came from, especially if the current code is not intuitive to me at the time. Perhaps my memory is failing me, though. I'll be on the watch for this practice as I program.

• "It is good to watch the master."

I've been reading a rundown of the top 128 tennis players of the last hundred years, including this one about Pancho Gonzalez, one of the great players of the 1940s, '50s, and '60s. He was forty years old when the Open Era of tennis began in 1968, well past his prime. Even so, he could still compete with the best players in the game.

Even his opponents could appreciate the legend in their midst. Denmark's Torben Ulrich lost to him in five sets at the 1969 US Open. "Pancho gives great happiness," he said. "It is good to watch the master."

The old masters give me great happiness, too. With any luck, I can give a little happiness to my students now and then.

Posted by Eugene Wallingford | Permalink | Categories: Personal, Software Development, Teaching and Learning

November 23, 2022 1:27 PM

I Can't Imagine...

I've been catching up on some items in my newsreader that went unread last summer while I rode my bike outdoors rather than inside. This passage from a blog post by Fred Wilson at AVC touched on a personal habit I've been working on:

I can't imagine an effective exec team that isn't in person together at least once a month.

I sometimes fall into a habit of saying or thinking "I can't imagine...". I'm trying to break that habit.

I don't mean to pick on Wilson, whose short posts I enjoy for insight into the world of venture capital. "I can't imagine" is a common trope in both spoken and written English. Some writers use it as a rhetorical device, not as a literal expression. Maybe he meant it that way, too.

For a while now, though, I've been trying to catch myself whenever I say or think "I can't imagine...". Usually my mind is simply being lazy, or too quick to judge how other people think or act.

It turns out that I usually can imagine, if I try. Trying to imagine how that thinking or behavior makes sense helps me see what other people might be thinking, what their assumptions or first principles are. Even when I end up remaining firm in my own way of thinking, trying to imagine usually puts me in a better position to work with the other person, or explain my own reasoning to them more effectively.

Trying to imagine can also give me insight into the limits of my own thinking. What assumptions am I making that lead me to have confidence in my position? Are those assumptions true? If yes, when might they not be true? If no, how do I need to update my thinking to align with reality?

When I hear someone say, "I can't imagine..." I often think of Russell and Norvig's textbook Artificial Intelligence: A Modern Approach, which I used for many years in class [1]. At the end of one of the early chapters, I think, they mention critics of artificial intelligence who can't imagine the field of AI ever accomplishing a particular goal. They respond cheekily to the effect, This says less about AI than it says about the critics' lack of imagination. I don't think I'd ever seen a textbook dunk on anyone before, and as a young prof and open-minded AI researcher, I very much enjoyed that line [2].

Instead of saying "I can't imagine...", I am trying to imagine. I'm usually better off for the effort.

~~~~

[1] The Russell and Norvig text first came out in 1995. I wonder if the subtitle "A Modern Approach" is still accurate... Maybe theirs is now a classical approach!

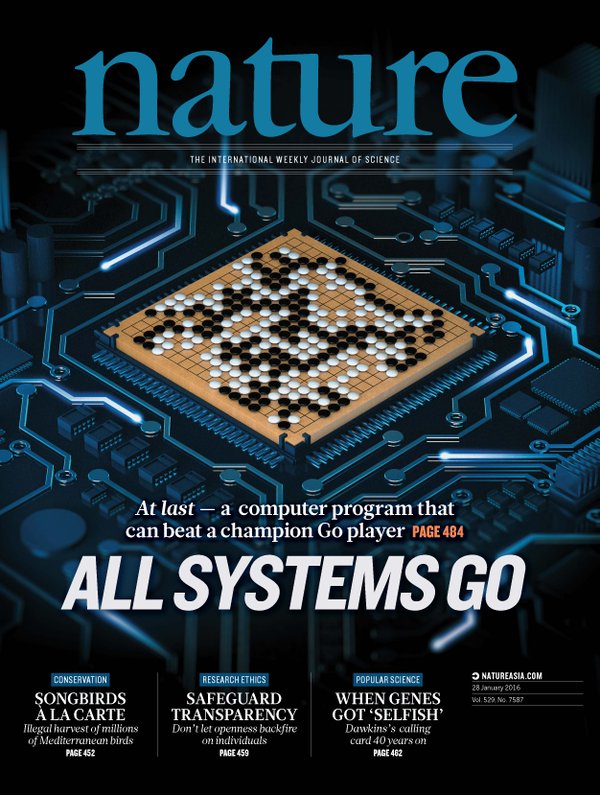

[2] I'll have to track that passage down when I am back in my regular office and have access to my books. (We are in temporary digs this fall due to construction.) I wonder if AI has accomplished the criticized goal in the time since Russell and Norvig published their book. AI has reached heights in recent years that many critics in 1995 could not imagine. I certainly didn't imagine a computer program defeating a human expert at Go in my lifetime, let alone learning to do so almost from scratch! (I wrote about AlphaGo and its intersection with my ideas about AI a few times over the years: [ 01/2016 | 03/2016 | 05/2017 | 05/2018 ].)

October 30, 2022 9:32 AM

Recognize

From Robin Sloan's newsletter:

There was a book I wanted very badly to write; a book I had been making notes toward for nearly ten years. (In my database, the earliest one is dated December 13, 2013.) I had not, however, set down a single word of prose. Of course I hadn't! Many of you will recognize this feeling: your "best" ideas are the ones you are most reluctant to realize, because the instant you begin, they will drop out of the smooth hyperspace of abstraction, apparate right into the asteroid field of real work.

I can't really say that there is a book I want very badly to write. In the early 2000s I worked with several colleagues on elementary patterns, and we brainstormed writing an intro CS textbook built atop a pattern language. Posts from the early days of this blog discuss some of this work from ChiliPLoP, I think. I'm not sure that such a textbook could ever have worked in practice, but I think writing it would have been a worthwhile experience anyway, for personal growth. But writing such a book takes a level of commitment that I wasn't able to make.

That experience is one of the reasons I have so much respect for people who do write books.

While I do not have a book for which I've been making notes in recent years, I do recognize the feeling Sloan describes. It applies to blog posts and other small-scale writing. It also applies to new courses one might create, or old courses one might reorganize and teach in a very different way.

I've been fortunate to be able to create and re-create many courses over my career. I also have some ideas that sit in the back of my mind because I'm a little concerned about the commitment they will require, the time and the energy, the political wrangling. I'm also aware that the minute I begin to work on them, they will no longer be perfect abstractions in my mind; they will collide with reality and require compromises and real work.

(Today I learned the word "apparate". I'm not sure how I feel about it yet.)

October 02, 2022 9:13 AM

Twitter Replies That No One Asked For

I've been pretty quiet on Twitter lately. One reason is that my daily schedule has been so different for the last six or eight weeks: I've been going for bike rides with my wife at the end of the work day, which means I'm most likely to be reading Twitter late in the day. By then, many of the threads I see have played themselves out. Maybe I should jump in anyway? Even after more than a decade, I'm not sure I know how to Twitter properly.

Here are a few Twitter replies that no one asked for and that I chose not to send at the time.

• When people say, "That's the wrong question to ask", what they often seem to mean -- and should almost always say -- is, "That's not the question I would have asked."

• No, I will not send you a Google Calendar invite. I don't use Google Calendar. I don't even put every event into the calendaring system I *do* use.

• Yes, I will send you a Zoom link.

• COVID did not break me for working from home. Before the pandemic, I almost never worked at home during the regular work day. As a result, doing so felt strange when the pandemic hit us all so quickly. But I came first to appreciate and then to enjoy it, for many of the same reasons others enjoy it. (And I don't even have a long or onerous commute to campus!) Now, I try to work from home one day a week when schedules allow.

• COVID also did not break me for appreciating a quiet and relatively empty campus. Summer is still a great time to work on campus, when the pace is relaxed and most of the students who are on campus are doing research. Then again, so is fall, when students return to the university, and spring, when the sun returns to the world. The takeaway: It's usually a great time to be on campus.

I realize that some of these replies in absentia are effectively subtweets at a distance. All the more reason to post them here, where everyone who reads them has chosen to visit my blog, rather than in a Twitter thread filled with folks who wouldn't know me from Adam. They didn't ask for my snark.

I do stand by the first bullet as a general observation. Most of us -- me included! -- would do better to read everyone else's tweets and blog posts as generously as possible.

September 18, 2022 9:37 AM

Dread and Hope

First, a relatively small-scale dread. From Jeff Jarvis in What Is Happening to TV?

I dread subscribing to Apple TV+, Disney+, Discovery+, ESPN+, and all the other pluses for fear of what it will take to cancel them.

I have not seen a lot of popular TV shows and movies in the last decade or two because I don't want to deal with the hassle of unsubscribing from some service. I have a list of movies to keep an eye out for in other places, should they ever appear, or to watch at their original homes, should my desire to see them ever outgrow my preference to avoid certain annoyances.

Next, a larger-scale source of hope, courtesy of Neel Krishnaswami in The Golden Age of PL Research:

One minor fact about separation logic. John C. Reynolds invented separation logic when he was 65. At the time that most people start thinking about retirement, he was making yet another giant contribution to the whole field!

I'm not thinking about retirement at all yet, but I am past my early days as a fresh, energetic, new assistant prof. It's good to be reminded every once in a while that the work we do at all stages of our careers can matter. I didn't make giant contributions when I was younger, and I'm not likely to make a giant contribution in the future. But I should strive to keep doing work that matters. Perhaps a small contribution remains to be made.

~~~~

This isn't much of a blog post, I know. I figure if I can get back into the habit of writing small thoughts down, perhaps I can get back to blogging more regularly. It's all about the habit. Wish me luck.

August 29, 2022 4:44 PM

Radio Silence


I did not intend for August to be radio silence on my blog and Twitter page. The summer just caught up with me, and my brain took care of itself, I guess, by turning off for a bit.

One bit of newness for the month was setting up a new Macbook Air. I finally placed my order on July 24. It was scheduled to arrive the week of August 10-17 but magically appeared on our doorstep on July 29. I've been meaning to write about the experience of setting up a new Mac laptop after working for seven years on a trusty Macbook Pro, but that post has been a victim of the August slowdown. I can say this: I pulled out the old Macbook Pro to watch Netflix on Saturday evening... and it felt *so* heavy. How quickly we adjust to new conditions and forget how lucky we were before.

Another pleasure in August was meeting up with Daniel Steinberg over Zoom. I remember back near the beginning of the pandemic Daniel said something on Twitter about getting together for a virtual coffee with friends and colleagues he could no longer visit. After far too long, I contacted him to set up a chat. We had a lot of catching up to do and ended up discussing teaching, writing, programming, and our families. It was one of my best hours for the month!

My wife and I took advantage of the last week before school started by going on a couple of hikes. We visited Backbone State Park for the first time and spent an entire day walking and enjoying scenery that most people don't associate with Iowa. The image at the top of this post comes from the park's namesake trail, which showcases some of the dolomite limestone cliffs left over from before the last glaciers. Here's another shot, of an entrance to a cave carved out by icy water that still flows beneath the surface:

Closer to home, we took a long morning to walk through Hartman Reserve, a county preserve. Walking for a couple of hours as the sun rises and watching the trees and wildlife come to light is a great way to shake some rust off the mind before school starts.

I had a tough time getting ready mentally for the idea of a new school year. This summer's work offered more burnout than refreshment. As the final week before classes wound down, I had to get serious about class prep -- and it freed me up a bit. Writing code, thinking about CS, and getting back into the classroom with students still energize me. This fall is my compilers course. I'm giving myself permission to make only a few targeted changes in the course plan this time around. I'm hoping that this lets me build some energy and momentum throughout the semester. I'll need that in order to be there for the students.

August 15, 2022 12:49 PM

No Comment


From the closing pages of The Orchid Thief, which I mentioned in my previous post:

"The thing about computers," Laroche said, "the thing that I like is that I'm immersed in it but it's not a living thing that's going to leave or die or something. I like having the minimum number of living things to worry about in my life."

Actually, I have two comments.

If Laroche had gotten into open source software, he might have found himself with the opposite problem: software that won't die. Programmers sometimes think, "I know, I'll design and implement my own programming language!" Veterans of the programming languages community always seem to advise: think twice. If you put something out there, other people will use it, and now you are stuck maintaining a package forever. The same can be said for open source software more generally. Oh, and did I mention it would be really great if you added this feature?

I like having plants in my home and office. They give me joy every day. They also tend to live a lot longer than some of my code. The hardy orchid featured above bloomed like clockwork twice a year for me for five and a half years. Eventually it needed more space than the pot in my office could give, so it's gone now. But I'm glad to have enjoyed it for all those years.

July 06, 2022 4:13 PM

When Dinosaurs Walked the Earth


Chad Orzel, getting ready to begin his 22nd year as a faculty member at Union College, muses:

It's really hard to see myself in the "grizzled veteran" class of faculty, though realistically, I'm very much one of the old folks these days. I am to a new faculty member starting this year as someone hired in 1980 would've been to me when I started, and just typing that out makes me want to crumble into dust.

I'm not the sort who likes to one-up another blogger, but... I can top this, and crumble into a bigger, or at least older, pile of dust.

In May, I finished my 30th year as a faculty member. I am as old to a 2022 hire as someone hired in 1962 would have been to me. Being in computer science, rather than physics or another of the disciplines older than CS, this is an even bigger gap culturally than it first appears. The first Computer Science department in the US was created in 1962. In 1992, my colleagues who started in the 1970s seemed pretty firmly in the old guard, and the one CS faculty member from the 1960s had just retired, opening the line into which I was hired.

Indeed, our Department of Computer Science only came into existence in 1992. Prior to that, the CS faculty had been offering CS degrees for a little over a decade as part of a combined department with Mathematics. (Our department even has a few distinguished alums who graduated pre-1981, with CS degrees that are actually Math degrees with a "computation emphasis".) A new department head and I were hired for the department's first year as a standalone entity, and then we hired two more faculty the next year to flesh out our offerings.

So, yeah. I know what Chad means when he says "just typing that out makes me want to crumble into dust", and then some.

On the other hand, it's kind of cool to see how far computer science has come as an academic discipline in the last thirty years. It's also cool that I am still excited to learn new things and to work with students as they learn them, too.

June 28, 2022 4:12 PM

You May Be Right


I first saw Billy Joel perform live in 1983, with a college roommate and our girlfriends. It was my first pop/rock concert, and I fancied myself the biggest Billy Joel fan in the world. The show was like magic to a kid who had been listening to Billy's music on vinyl, and the radio, for years.

Since then, I've seen him more times than I can remember, most recently in 2008. My teenaged daughters went with me to that one, so it was magic for more reasons than one. I've even seen a touring Broadway show built around his music. So, yeah, I'm still a fan.

On Saturday morning, I drove to Elkhart, Indiana, to meet up with three friends from college to go see Billy perform outdoors at Notre Dame Stadium. We bought our tickets in October 2019, pre-COVID, expecting to see the show in the summer of 2020. After two years of postponement, Billy, the venue, and the fans were ready to go. Six hours is a long way to drive to see a two- or three-hour show, especially knowing that I had to drive six hours back the next morning. I'm not a college student any more!

You may be right; I may be crazy. But I would drive six hours again to see Billy. Even at 73, he puts on a great show. I hope I have that kind of energy -- and the desire to still do my professional thing -- when I reach that age. (I don't expect that 50,000 students will pay to see me do it, let alone drive six hours.) For this show, I had the bonus of being able to visit with good friends, one of whom I've known since grade school, after too long a time.

I went all fanboy in my short post about the 2008 concert, so I won't bore you again with my hyperbole. I'll just say that Billy performed "She's Always A Woman" and "Don't Ask Me Why" again, along with a bunch of the old favorites and a few covers: I enjoyed his impromptu version of "Don't Let the Sun Go Down on Me", bobbles and all. He played piano for one of his band members, Mike DelGuidice, who sang "Nessun Dorma". And the biggest ovation of the night may have gone to Crystal Taliefero, a multi-talented member of Billy's group, for her version of "Dancing in the Street" during the extended pause in "The River of Dreams".

This concert crowd was the largest group of people I've been around in a long time... I figured a show in an outdoor stadium was safe enough, with precautions. (I was one of the few folks who wore a mask in the interior concourse and restrooms.) Maybe life is getting back to normal.

If this was my last time seeing Billy Joel perform live, it was a worthy final performance. Who knows, though. I thought the 2008 show might be my last.

June 07, 2022 12:25 PM

I Miss Repeats

This past weekend, it was supposed to rain Saturday evening into Sunday, so I woke up with uncertainty about my usual Sunday morning bike ride. My exercise bike broke down a few weeks back, so riding outdoors was my only option. I decided before I went to bed on Saturday night that, if it was dry when I woke up, I would ride a couple of miles to a small lake in town and ride laps in whatever time I could squeeze in between rain showers.

The rain in the forecast turned out to be a false alarm, so I had more time to ride than I had planned. I ended up riding the 2.3 miles to the lake, fifteen 1.2-mile laps, and 2.3 miles back home. Fifteen mile-plus laps may seem crazy to you, but it was the quickest and most predictable adjustment I could make in the face of the suddenly available time. It was like a speed workout on the track from my running days. Though shorter than my usual Sunday ride, it was an unexpected gift of exercise on what turned out to be a beautiful morning.

A couple of laps into the ride, the hill on the far end of the loop began to look foreboding. Thirteen laps to go... Thirteen more times up an extended incline (well, at least what passes for one in east central Iowa).

After a few more laps, my mindset had changed. Six down. This feels good. Let's do nine more!

I had found the rhythm of doing repeats.

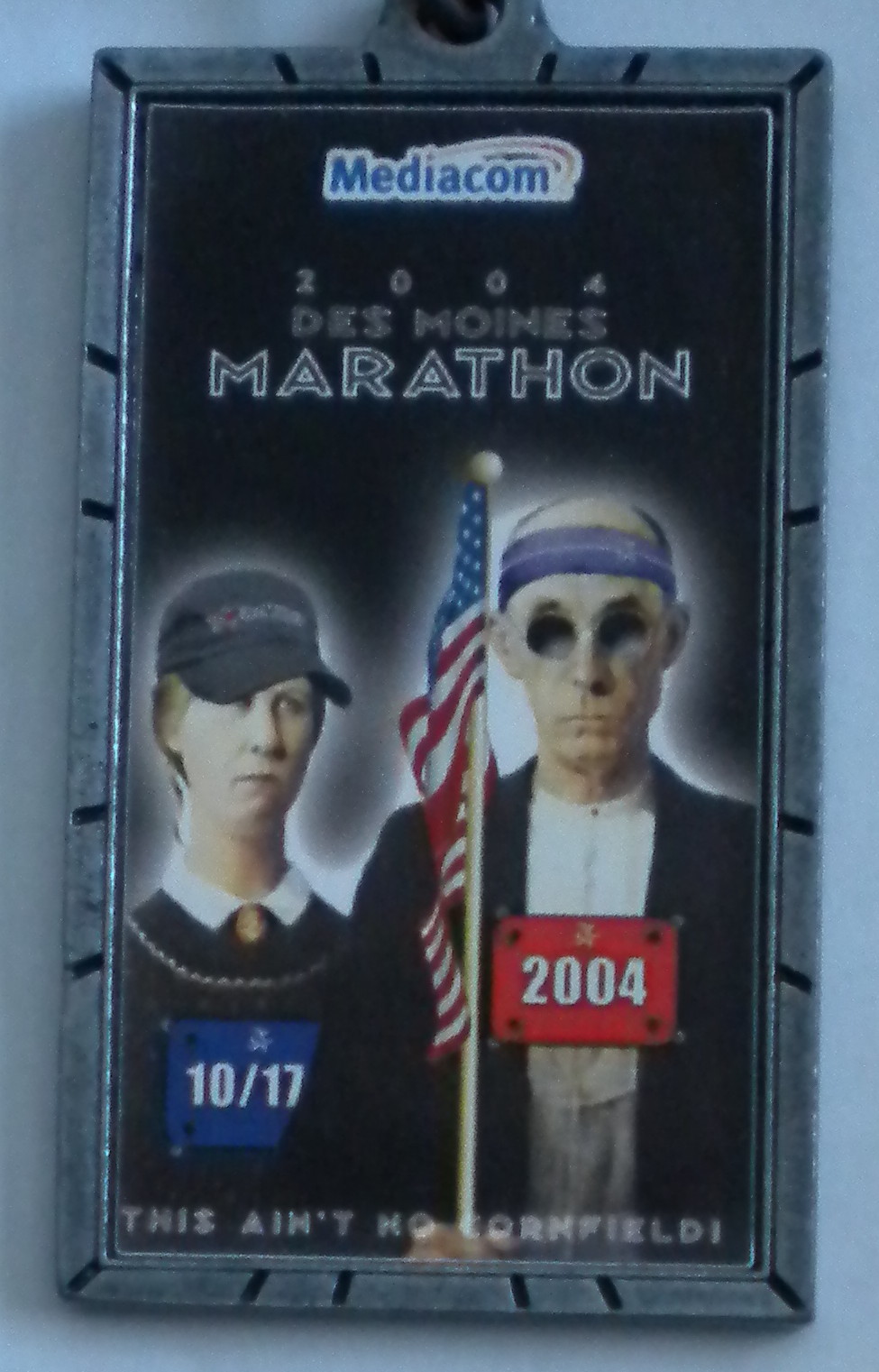

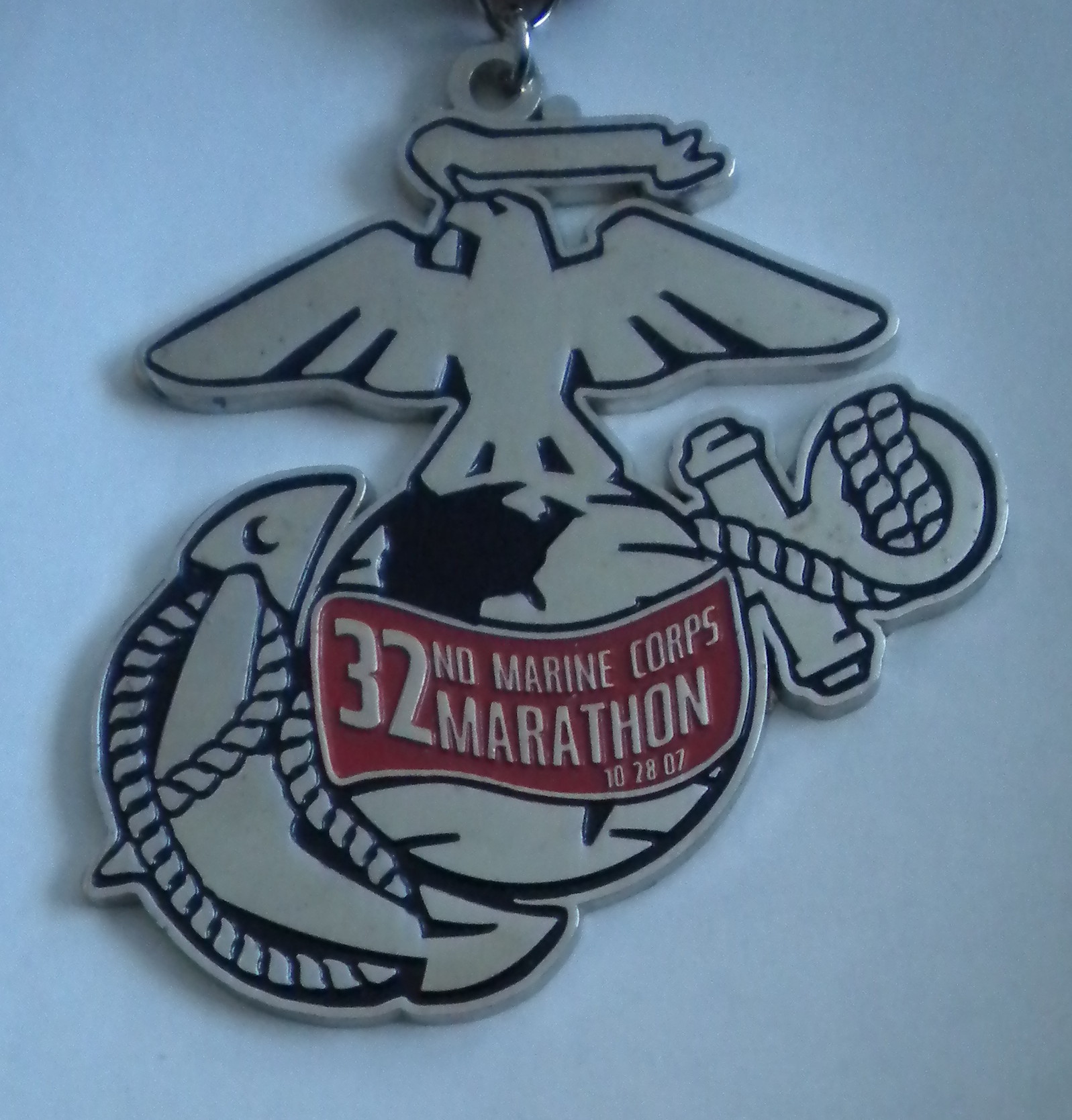

I used to do track repeats when training for marathons and always liked them. (One of my earliest blog entries sang the praises of short iterations and frequent feedback on the track.) I felt again the hit of endorphins every time I completed one loop around the lake. My body got into the rhythm. Another one, another one. My mind doesn't switch off under these conditions, but it does shift into a different mode. I'm thinking, but only in the moment of the current lap. Then there's one more to do.

I wonder if this is one of the reasons some programmers like programming with stories of a limited size, or under the constraints of test-driven design. Both provide opportunities for frequent feedback and frequent learning. They also provide a hit of endorphins every time you make a new test pass, or see the light go green after a small refactoring.

My willingness to do laps, at least in service of a higher goal, may border on the unfathomable. One Sunday many years ago, when I was still running, we had huge thunderstorms all morning and all afternoon. I was in the middle of marathon training and needed a 20-miler that day to stay on my program. So I went to the university gym -- the one mentioned in the blog post linked above, with 9.2 laps to a mile -- and ran 184 laps. "Are you nuts?" I loved it! The short iterations and frequent feedback dropped me into a fugue-like rhythm. It was easy to track my pace, never running too fast or too slow. It was easy to make adjustments when I noticed something off-plan. In between moments checking my time, I watched people, I breathed, I cleared my mind. I ran. All things considered, it was a good day.

Sunday morning's fifteen laps were workaday in comparison. At the end, I wished I had more time to ride. I felt strong enough. Another five laps would have been fun. That hill wasn't going to get me. And I liked the rhythm.

April 04, 2022 5:45 PM

Leftover Notes

Like many people, I carry a small notebook most everywhere I go. It is not a designer's sketchbook or engineer's notebook; it is intended primarily for capturing information and ideas, à la Getting Things Done, before I forget them. Most of the notes end up being transferred to one of my org-mode todo lists, to my calendar, or to a topical file for a specific class or project. Write an item in the notebook, transfer it to the appropriate bin, and cross it off in the notebook.

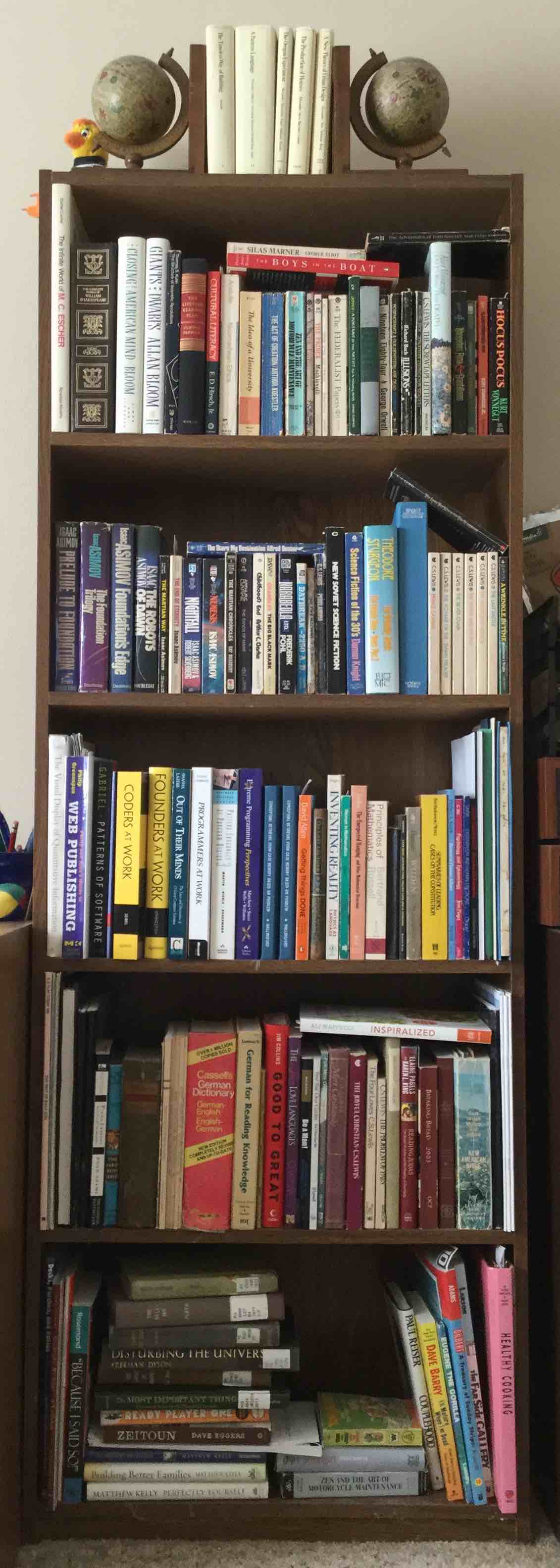

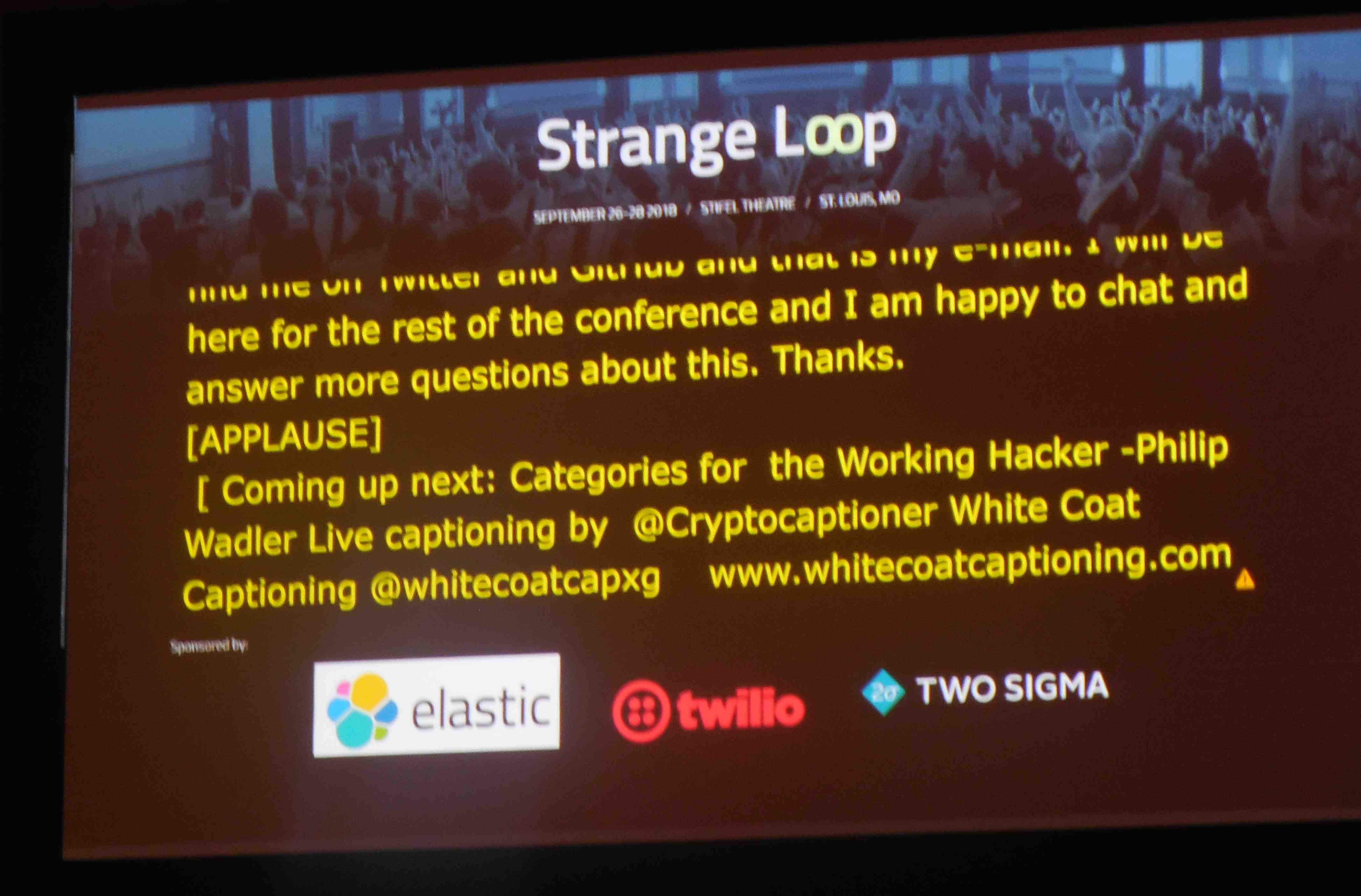

I just filled the last line of my most recent notebook, a Field Notes classic that I picked up as schwag at Strange Loop a few years ago. Most of the notebook is crossed out, a sign of successful capture and transfer. As I thumbed back through it, I saw an occasional phrase or line that never made it into a more permanent home. That is pretty normal for my notebooks. At this point, I usually recycle the used notebook and consign untracked items to lost memories.

For some reason, this time I decided to copy all of the untracked items down and savor the randomness of my mind. Who knows, maybe I'll use one of these notes some day.

The Fedsbasic soul math

I want to be #0

routine, ritual

gallery.stkate.edu

M. Dockery

www.wastetrac.org/spring-drop-off-event

Crimes of the Art

What the Puck

Massachusetts ombudsman

I hope it's still funny...

chessable.com

art gallery

ena @ tubi

In Da Club (50 Cent)

Gide 25; 28 May : 1

HFOSS project

April 4-5: Franklin documentary

Mary Chapin Carpenter

"Silent Parade" by Keigo Higashino

www.pbs.org -- search Storm Lake

"Hello, Transcriber" by Hannah Morrissey

Dear Crazy Future Eugene

I recognize most of these, though I don't remember the reason I wrote all of them down. For whatever reason, they never reached an actionable status. Some books and links sound interesting in the moment, but by the time I get around to transcribing them elsewhere, I'm no longer interested enough to commit to reading, watching, or thinking about them further. Sometimes, something pops into my mind, or I see something, and I write it down. Better safe than sorry...

That last one -- Dear Crazy Future Eugene -- ends up in a lot of my notebooks. It's a phrase that has irrational appeal to me. Maybe it is destined to be the title of my next blog.

There were also three multiple-line notes that were abandoned:

poem > reality

words > fact

a model is not identical

I vaguely recall writing this down, but I forget what prompted it. I vaguely agree with the sentiment even now, though I'd be hard-pressed to say exactly what it means.

Scribble pages that separate notes from full presentation

(solutions to exercises)

This note is from several months ago, but it is timely. Just this week, a student in my class asked me to post my class notes before the session rather than after. I don't do this currently in large part because my sessions are a tight interleaving of exercises that the students do in class, discussion of possible solutions, and use of those ideas to develop the next item for discussion. I think that Scribble, an authoring system that comes with Racket, offers a way for me to build pages I can publish in before-and-after form, or at least in an outline form that would help students take notes. I just never get around to trying the idea out. I think the real reason is that I like to tinker with my notes right up to class time... Even so, the idea is appealing. It is already in my planning notes for all of my classes, but I keep thinking about it and writing it down as a trigger.

generate scanner+parser? expand analysis,

codegen (2 stages w/ IR -- simple exps, RTS, full)

optimization! would allow bigger source language?

This is evidence that I'm often thinking about my compiler course and ways to revamp it. This idea is also already in the system. But I keep prompting myself to think about it again.

Anyway, that was a fun way to reflect on the vagaries of my mind. Now, on to my next notebook: a small pocket-sized spiral notebook I picked up for a quarter in the school supplies section of a big box store a while back. My friend Joe Bergin used to always have one of these in his shirt pocket. I haven't used a spiral-bound notebook for years but thought I'd channel Joe for a couple of months. Maybe he will inspire me to think some big thoughts.

February 13, 2022 12:32 PM

A Morning with Billy Collins

It's been a while since I read a non-technical article and made as many notes as I did this morning on this Paris Review interview with Billy Collins. Collins was poet laureate of the U.S. in the early 2000s. I recall reading his collection, Sailing Alone Around the Room, at PLoP in 2002 or 2003. Walking the grounds at Allerton with a poem in mind changes one's eyes and ears. Had I been blogging by then, I probably would have commented on the experience, and maybe one or two of the poems, in a post.

As I read this interview, I encountered a dozen or so passages that made me think about things I do, things I've thought, and even things I've never thought. Here are a few.

I'd like to get something straightened out at the beginning: I write with a Uni-Ball Onyx Micropoint on nine-by-seven bound notebooks made by a Canadian company called Blueline. After I do a few drafts, I type up the poem on a Macintosh G3 and then send it out the door.

Uni-Ball Micropoint pens are my preferred writing implement as well, though I don't write enough on paper any more to make buying a particular pen much worth the effort. Unfortunately, just yesterday my last Uni-Ball Micro wrote its last line. Will I order more? It's a race between preference and sloth.

I type up most of the things I write these days on a 2015-era MacBook Pro, often connected to a Magic Keyboard. With the advent of the M1 MacBook Pros, I'm tempted to buy a new laptop, but this one serves me so well... I am nothing if not loyal.

The pen is an instrument of discovery rather than just a recording implement. If you write a letter of resignation or something with an agenda, you're simply using a pen to record what you have thought out. In a poem, the pen is more like a flashlight, a Geiger counter, or one of those metal detectors that people walk around beaches with. You're trying to discover something that you don't know exists, maybe something of value.

Programming may be like writing in many ways, but the search for something to say isn't usually one of them. Most of us sit down to write a program to do something, not to discover some unexpected outcome. However, while I may know what my program will do when I get done, I don't always know what that program will look like, or how it will accomplish its task. This state of uncertainty probably accounts for my preference in programming languages over the years. Smalltalk, Ruby, and Racket have always felt more like flashlights or Geiger counters than tape recorders. They help me find the program I need more readily than Java or C or Python.

I love William Matthews's idea--he says that revision is not cleaning up after the party; revision is the party!

Refactoring is not cleaning up after the party; refactoring is the party! Yes.

... nothing precedes a poem but silence, and nothing follows a poem but silence. A poem is an interruption of silence, whereas prose is a continuation of noise.

I don't know why this passage grabbed me. Perhaps it's just the imagery of the phrases "interruption of silence" and "continuation of noise". I won't be surprised if my subconscious connects this to programming somehow, but I ought to be suspicious of the imposition. Our brains love to make connections.

She's this girl in high school who broke my heart, and I'm hoping that she'll read my poems one day and feel bad about what she did.

This is the sort of sentence I'm a sucker for, but it has no real connection to my life. Though high school was a weird and wonderful time for me, as it was for so many, I don't think anything I've ever done since has been motivated in this way. Collins actually goes on to say the same thing about his own work. Readers are people with no vested interest. We have to engage them.

Another example of that is my interest in bridge columns. I don't play bridge. I have no idea how to play bridge, but I always read Alan Truscott's bridge column in the Times. I advise students to do the same unless, of course, they play bridge. You find language like, South won with dummy's ace, cashed the club ace and ruffed a diamond. There's always drama to it: Her thirteen imps failed by a trick. There's obviously lots at stake, but I have no idea what he's talking about. It's pure language. It's a jargon I'm exterior to, and I love reading it because I don't know what the context is, and I'm just enjoying the language and the drama, almost like when you hear two people arguing through a wall, and the wall is thick enough so you can't make out what they're saying, though you can follow the tone.