September 30, 2005 5:16 PM

Marathon On-Deck

My third marathon is a little over 36 hours away. As I have come to expect from this big, complicated world, my last week before the race did not go as planned.

I did my last 10-mile run last Sunday. I did the middle four to six miles at marathon goal pace (8:00 miles) with some slower miles to start and finish.

That part was just as I had planned.

Somewhere along the route, though, I took a pit stop and came into contact with some poison ivy. You may recall that I came down with an extreme case of poison ivy last year, which cost me nine days of training. Well, after poison ivy exposure, the body remains highly sensitized for up to several years, and inadvertent contact this year led to another bad case -- one week to the day before my race.

This case hasn't been as bad as last year, but that's not much comfort. Almost no case could be that bad. This case is bad enough to have kept me from running all week long and has kept me in various stages of itchiness and pain throughout. It peaked on Wednesday and has been in recovery since.

Now, a marathon runner usually trims his mileage back severely in the week before a race, but 0 miles is extreme. With no running, I haven't had much appetite and so haven't carbo-loaded very well.

The good news is that I'm getting better, and at a rate fast enough that I hope to be able to run my race. At this point, I hope only to get better enough that I can run it, regardless of my fitness or ability to achieve my big goals. I realize now that I have been looking forward to the marathon for its own sake, as a pleasurable challenge. It's good to know that I have a purer motive than to PR or achieve a particular time.

If I get to run, I will be well-rested. But will I be rusty? A little out of condition? I don't know. I am prepared to adjust my goals so that I can enjoy the run, so if I find that I'm rusty, I'll just run as I can.

Then again, maybe the rest is just what I needed, and I'll be able to shake off the rust and pursue my goal of a 3:30 finish. A small part of me still hopes... It's good to know that I also have some drive to excel.

The forecast calls for an overnight low on Sunday morning of 63 degrees Fahrenheit, on its way to 81 degrees. That is warm for a marathon! Many folks will enjoy their runs less at those temps, but I don't mind running on warm days. This will be the opposite of last year, when the race started in the mid-30s and only reached 50 or so by race end.

Every year seems to bring new conditions. Whether it's weather or illness, that makes for different challenges.

I won't be the only interesting fellow in the race. This marathon doubles as the US national marathon championship, so several elite American runners, such as 2004 Olympian Dan Browne, will be on the course. And I just learned that the Governor of Minnesota and the First Lady will run, too. (Both have completed TCM in the past.)

If you find yourself on-line Sunday and feel the urge to know just where old Eugene finished, check out the race results on-line.

Wish me luck!

September 29, 2005 6:27 PM

What He Said

Every once in a while, someone says something that just makes me say, "Oh, yeah" -- even if I've already written a similar piece myself. From The Fishbowl:

Programming is...

Programming is looking at a feature request in the morning, thinking, "I can do that in one line of code", and then six hours later having refactored the rest of the codebase sufficiently that you can implement the feature...

...in one line of code.

That makes a nice complement to the quote from Jason Marshall and the epigram from Brian Foote quoted in my earlier post. It may have to go on to my office door.

September 29, 2005 1:49 PM

Mathematics Coincidence

An interesting coincidence... Soon after I posted my spiel on preparing to study computer science, especially the role played by mathematics courses, the folks on the SIGCSE mailing list started a busy thread on the place of required math courses in the CS curriculum. The thread began with a discussion of differential equations, which some schools apparently still require for a CS degree. The folks defending such math requirements have relied on two kinds of argument.

One is to assert that math courses teach discipline and problem-solving skills, which all CS students need. I discussed this idea in my previous article. I don't think there is much evidence that taking math courses teaches students problem-solving skills or discipline, at least not as most courses are taught. They do tend to select for problem-solving skills and discipline, though, which makes them handy as a filter -- if that's what you want. But they are not always helpful as learning experiences for students.

The other is to argue that students may find themselves working on scientific or engineering projects that require solving differential equations, so the course is valuable for its content. My favorite rebuttal to this argument came from a poster who listed a dozen or so projects that he had worked on in industry over the years. Each required specific skills from a domain outside computing. Should we then require one or more courses from each of those domains, on the chance that our students work on projects in them? Could we?

Of course we couldn't. Computing is a universal tool, so it can and usually will be applied everywhere. It is something of a chameleon, quickly adaptable to the information-processing needs of a new discipline. We cannot anticipate all the possible applications of computing that our students might encounter any more than we can anticipate all the possible applications of mathematics they might encounter.

The key is to return to the idea that underlies the first defense of math courses, that they teach skills for solving problems. Our students do need to develop such skills. But even if students could develop such skills in math courses, why shouldn't we teach them in computing courses? Our discipline requires a particular blend of analysis and synthesis and offers a particular medium for expressing and experimenting with ideas. Computer science is all about describing what can be systematically described and how to do so in the face of competing forces. The whole point of an education in computing should be to help people learn how to use the medium effectively.

Finally, Lynn Andrea Stein pointed out an important consideration in deciding what courses to require. Most of my discussion and the discussion on the SIGCSE mailing list has focused on the benefits of requiring, say, a differential equations course. But we need also to consider the cost of such a requirement. We have already encountered one: an opportunity cost in the form of people. Certain courses filter out students who are unable to succeed in them, and we need to be sure that we are not missing out on students who would make good computer science students. For example, I do not think that a student's inability to succeed in differential equations means that the student cannot succeed in computer science. A second opportunity cost comes in the form of instructional time. Our programs can require only so many courses, so many hours of instruction. Could we better spend a course's worth of time in computing on a topic other than differential equations? I think so.

I remember learning about opportunity cost in an economics course I took as an undergrad. Taking a broad set of courses outside of computing really can be useful.

Posted by Eugene Wallingford | Permalink | Categories: Computing, Software Development, Teaching and Learning

September 27, 2005 7:29 PM

Learning by Dint of Experience

While writing my last article, I encountered one of those strange cognitive moments. I was in the process of writing the trite phrase "through sheer dint of repetition" when I had a sudden urge to use dent in place of 'dint' -- even though I know deep inside that dint is correct.

What to do? I used what for many folks is now the standard Spell Checker for Tough Cases: Google. Googling dent of repetition found 4 matches; googling dint of repetition found 470. This is certainly not conclusive evidence; maybe everyone else is as clueless as I. But it was enough evidence to help me go with my instinct in the face of a temporary brain cramp.

Of course, our growing experience with the World Wide Web and other forms of collaborative technologies is that the group is often smarter than the individual. The wisdom of crowds and all that. It's probably no accident that I link "wisdom of crowds" to Amazon.com, either.

To further confirm my decision to stick with 'dint', I spent some time at Merriam-Webster On-Line, where I learned that 'dint' and 'dent' share a common etymology. It's funny what I can learn when I sit down to write.

September 27, 2005 7:10 PM

Preparing to Study Computer Science

Yesterday, our department hosted a "preview day" for high school seniors who are considering majoring in computer science here at UNI. During the question-and-answer portion of one session, a student asked, "What courses should we take in our senior year to best prepare to study CS?" That's a good question, and one that resulted in a discussion among the CS faculty present.

For most computer science faculty, the almost reflexive answer to this question is math and science. Mathematics courses encourage abstract thinking, attention to detail, and precision. Science courses help students think like an empiricist: formulating hypotheses, designing experiments, making and recording observations, and drawing inferences. A computer science student will use all of these skills throughout her career.

I began my answer with math and science, but the other faculty in the room reacted in a way that let me know they had something to say, too. So I let them take the reins. All three downplayed the popular notion that math, at least advanced math, is an essential element of the CS student's background.

One faculty member pointed out that students with backgrounds in music often do very well in CS. This follows closely with the commonly-held view that music helps children to develop skill at spatial and symbolic reasoning tasks. Much of computing deals not with arithmetic reasoning but with symbolic reasoning. As an old AI guy, I know this all too well. In much the same way that music might help CS students, studying language may help students to develop facility manipulating ideas and symbolic representations, skills that are invaluable to the software developer and the computing researcher alike.

We ended up closing our answer to the group by saying that studying whatever interests you deeply -- and really learning that discipline -- will help you prepare to study computer science more than following any blanket prescription to study a particular discipline.

(In retrospect, I wish I had thought to tack on one suggestion to our conclusion: There can be great value in choosing to study something that challenges you, that doesn't interest you as much as everything else, precisely because it forces you to grow. And besides, you may find that you come to understand the something well enough to appreciate it, maybe even like it!)

I certainly can't quibble with the direction our answer went. I have long enjoyed learning from writers, and I believe that my study of language and literature, however narrow, has made me a better computer scientist. I have had many CS students with strong backgrounds in art and music, including one I wrote about last year. Studying disciplines other than math and science can lay a suitable foundation for studying computer science.

I was surprised by the strength of the other faculty's reaction to the notion that studying math is among the best ways to prepare for CS. One of these folks was once a high school math teacher, and he has always expressed dissatisfaction with mathematics pedagogy in the US at both the K-12 and university levels. To him, math teaching is mostly memorize-and-drill, with little or no explicit effort put into developing higher-order thinking skills for doing math. Students develop these skills implicitly, if at all, through sheer dint of repetition. In his mind, the best that math courses can do for CS is to filter out folks who have not yet developed higher-order thinking skills; it won't help students develop them.

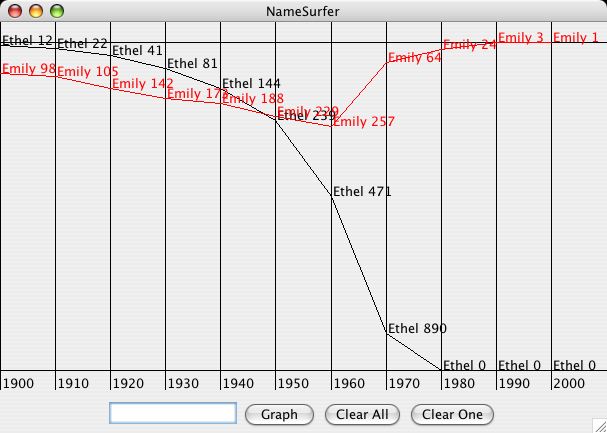

That may well be true, though I know that many math teachers and math education researchers are trying to do more. But, while students may not need advanced math courses to succeed in CS -- at least not in many areas of software development -- they do need to master some basic arithmetical skills. I keep thinking back to a relatively straightforward programming assignment I've given in my CS II course, to implement Nick Parlante's NameSurfer nifty assignment in Java. A typical NameSurfer display looks like this image, from Nick's web page:

As the user resizes the window, the program should grow or shrink its graph accordingly. To draw this image, the student must do some basic arithmetic to lay out the decade lines and to place the points on the lines and the names in the background. To scale the image, the student must do this arithmetic relative to window size, not with fixed values.
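To make that arithmetic concrete, here is a minimal sketch of the scaling, written in Scheme rather than the Java of the assignment. The procedure names, the eleven decade lines, and the rank range of 1 to 1000 are assumptions chosen for illustration; they are not taken from Nick's handout.

```scheme
;; Hypothetical layout helpers; names and constants are illustrative only.
(define decades 11)      ; assumed number of decade lines
(define max-rank 1000)   ; assumed worst rank shown on the graph

;; x-coordinate of decade line i (0-based), spread evenly across the width
(define (decade-x i width)
  (* i (/ width decades)))

;; y-coordinate for a rank: rank 1 sits at the top margin,
;; max-rank sits at the bottom margin
(define (rank-y rank height margin)
  (+ margin
     (* (- rank 1)
        (/ (- height (* 2 margin))
           (- max-rank 1)))))

(decade-x 3 880)        ; 240
(rank-y 1 600 20)       ; 20
(rank-y 1000 600 20)    ; 580
```

Because both procedures take the window dimensions as arguments, redrawing after a resize only means calling them again with the new width and height. With dimensions that do not divide evenly, Scheme returns exact fractions, so a real drawing routine would round the results to pixels.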

Easy, right? When I assigned this program, many students reacted as if I had cut off one of their fingers. Others seemed incapable of constructing the equations needed to do scaling correctly. (And you should have seen the reaction students had when once, many years ago, I asked them to write a graphical Java version of Mike Clancy's delicious Cat And Mouse nifty assignment. Horror of horrors -- polar coordinates!)

This isn't advanced math. This is algebra. All students in our program were required to pass second-year algebra before being admitted to our university. But passing a course does not require mastery, and students find themselves with a course on their transcript but not the skills that the course entails.

Clearly, mastery of basic arithmetic skills is essential to most of computer science, even if more advanced math, even calculus, is not essential. Especially when I think of algebraic reasoning more abstractly, I have a hard time imagining how students can go very far in CS without mastering algebraic reasoning. Whatever its other strengths or weaknesses, the How to Design Programs approach to teaching programming does one thing well, and that is to make an explicit connection between algebraic reasoning and programs. The result is something in the spirit of Polya's How to Solve It.

This brings us back to what is the weakest part of the "math and science" answer to our brave high school student's question. So much of computing is not theory or analysis but design -- the act of working out the form of a program, interface, or system. While we may talk about the "design" of a proof or scientific experiment, we mean something more complex when we talk about the design of software. As a result, math and science do relatively little to help students develop the design skills which will be so essential to succeeding in the software side of CS.

Studying other disciplines can help, though. Art, music, and writing all involve the students in creating things, making them think about how to make. And courses in those disciplines are more likely to talk explicitly about structure, form, and design than are math and science.

So, we have quite defensible reasons to tell students to study disciplines other than science and math. I would still temper my advice by suggesting that students study both math and science and literature, music, art, and other creative disciplines. While this may not be what our student was looking for, perhaps the best answer is all of the above.

Then again, maybe success in computing is controlled more by aptitude than by learning. If that is the case, then many of us, students and faculty alike, are wasting a lot of time. But I don't think that's true for most folks. Like Alan Kay, I think we just need to understand better this new medium that is computing so that we can find the right ways to empower as many people as possible to create new kinds of artifact.

Posted by Eugene Wallingford | Permalink | Categories: Computing, Software Development, Teaching and Learning

September 23, 2005 7:43 PM

Proof Becky Hirta isn't Doug Schmidt

... is right here.

I'm not saying that Doug doesn't have a social life; for all I know, he's a wild and crazy guy. But he has the well-earned reputation of answering e-mail at all hours of the day. I've sent him e-mail at midnight, only to have an answer hit my box within minutes. His grad students all tell me they've had the same experience, only at even more nocturnal hours. They are in awe. I'm a mix of envious and relieved.

I don't know why this was the first thought that popped into my head when I read Becky's post just now. Maybe it has something to do with the fact that I'm sitting in my office at 7:30 PM on a Friday evening. (Shh. Don't tell my students.)

September 23, 2005 7:26 PM

Ruby Friday

I have written about Scheme my last two times out, so I figured I should give some love to another of my favorite languages.

Like many folks these days, I am a big fan of Ruby. I took a Ruby tutorial at OOPSLA several years ago from Dave Thomas and Andy Hunt, authors of the already-classic Programming Ruby. At the time, the only Ruby interpreter I could find for Mac OS 9 was a port written by a Japanese programmer, almost all of whose documentation was written in, you guessed it, Japanese. But I made it run and learned how to be functional in Ruby within a few hours. That told me something about the language.

(Recalling this tutorial reminds me of two things. One, Dave and Andy give a great tutorial. If you get the chance, learn from them in person. The same can be said for many OOPSLA tutorials. Two, thank you, Apple, for OS X as a Unix -- and for shipping it with such a nice collection of programming tools.)

- If you want to augment the Pragmatic Programmers' guide to Ruby, check out Why's (Poignant) Guide to Ruby. You can learn Ruby there, plus quite a bit on programming more generally. You could have some fun, too.

- Unlike many dynamic language fans, I like Java just fine. I can enjoy programming in Java, but there is no question that it gets in my way more than a language like Scheme or Ruby.

Still, I feel compelled to share this opportunity to improve your geekware collection:

Thanks to the magic of CafePress.com, you can buy a variety of merchandise in the Java Rehab line. But why?

Java coding need not be a life-long debilitation. With the proper treatment, and a copy of Programming Ruby, you can return to a life of happy, productive software development.

So, give yourself over to a higher power! Learn Ruby...

Just imagine how much more fun Java would be if it gave itself over to the higher power of higher-order procedures...

- Finally, a little Ruby on Ruby. Check out Sam Ruby's talk, The Case for Dynamic Languages. Sam uses Ruby to illustrate his argument that the distinction between system languages and scripting languages is slowly shrinking, as the languages we use every day become more dynamic. Along the way, he shows the power of several ideas that have entered mainstream programming parlance only in the last decade, among them closures and higher-order procedures in the form of blocks.

But my favorite part of Sam's talk is his sub-title:

Reinventing Smalltalk, one decade at a time

Paul Graham says that we are reinventing Lisp, and he has a strong case. With either language as a target, the lives of programmers can only get better. The real question is whether objects as abstractions ultimately displace functions as the starting level of abstraction for the program. Another question, perhaps more important, is whether the language-as-environment can ever displace the minimalism of the scripting language as the programmer's preferred habitat. I have a hunch that the answer to both questions will be the same.

September 22, 2005 8:09 PM

4 to the Millionth Power

First I risk veering off into a political discussion in my review of a Thomas Friedman talk, and now I will write about a topic that arose during a contentious conversation among the science faculty here about Intelligent Design. Oh, my.

However, my post today isn't about ID, but rather numbers. And Scheme.

Here's the set-up: The local chapter of a scientific research society has invited a proponent of ID to give a talk here next week. (It has also invited a strong opponent of ID to speak next month.) Most of the biology professors here have argued quite strenuously that the society should not sponsor the talk, on the grounds that to do so lends legitimacy to ID. A vigorous discussion broke out on the science faculty's mailing list a couple of days ago about the value of fostering open debate on this issue.

That is all well and good, but I'm not much interested in commenting on the debate itself.

However, in the course of the discussion, one writer attempted to characterize the size of the search space inherent in evolving DNA. He used as his example a string of size one million, though I think he was going more for effect than 100% accuracy on the size. Now, each element in a strand of DNA is one of four bases: adenine (A), thymine (T), cytosine (C), and guanine (G). So, for a string one million bases long, there are 4^1,000,000 unique possible sequences. In order to show the size of this number, he went off and wrote a C program using the longest floating-point values available.

4 to the millionth power overflowed the available space. So he tried 4^100,000.

That overflowed the available space, too, so he tried 4^10,000. Same result.

Finally, 4^1,000 gave him an answer he could see in exponential notation, a number on the order of 10^602. That's big.
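One plausible reading of those results, assuming the "longest floating-point values" were 80-bit extended-precision long doubles (an assumption; the C program itself is not shown): that format tops out near 10^4932, so a number near 10^602 fits while one near 10^6020 does not. A rough sketch of the comparison, with illustrative names:

```scheme
;; Approximate base-10 exponent of 4^n.
(define (log10-of-4-to-the n)
  (* n (/ (log 4) (log 10))))

;; Assumed limit: the largest finite 80-bit extended value is roughly 10^4932.
(define long-double-max-exponent 4932)

(define (fits-in-long-double? n)
  (< (log10-of-4-to-the n) long-double-max-exponent))

(fits-in-long-double? 1000)     ; #t, since 4^1,000 is about 10^602
(fits-in-long-double? 10000)    ; #f, since 4^10,000 is about 10^6020
```

If the program used ordinary 64-bit doubles instead, even 4^1,000 would have overflowed, since that format stops near 10^308.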

The next day, my colleague and I were discussing the combinatorics when I began to wonder how Dr. Scheme might handle his problem. So I turned to my keyboard and entered a Scheme expression at the interpreter prompt:

> (expt 4 1000)

114813069527425452423283320117768198402231770208869520047764273682576626139237031385665948631650626991844596463898746277344711896086305533142593135616665318539129989145312280000688779148240044871428926990063486244781615463646388363947317026040466353970904996558162398808944629605623311649536164221970332681344168908984458505602379484807914058900934776500429002716706625830522008132236281291761267883317206598995396418127021779858404042159853183251540889433902091920554957783589672039160081957216630582755380425583726015528348786419432054508915275783882625175435528800822842770817965453762184851149029376

That answer popped out in no time flat.

Emboldened, I tried 4^10,000. Again, an answer arrived as fast as Dr. Scheme could print it.

My colleague, a C programmer who doesn't use Scheme, was impressed. "Try 4^100,000," he said. This one took a few seconds -- less than five -- before printing the answer. Almost all of the delay was for I/O; the computation time still registered 0 milliseconds.

The look on his face revealed admiration. But we still hadn't produced the number we really wanted, 4^1,000,000. So we tried.

Dr. Scheme sat quietly, chugging away. Within a few seconds it had its answer, but it took a while longer to produce its output -- a few minutes, in fact. But there it was, the 602,060-digit number that is 4^1,000,000.

Very nice indeed! I was again impressed with how well Scheme works with big numbers. You can imagine how impressed my colleague was. C compilers produce fast, tight code, but you need something beyond the base language to compute numbers this large. Scheme was more than happy to do the job out of the box.

Of course, if all my colleague wanted to know was the order of magnitude of 4^1,000,000, we could have had our answer much quicker, using a little piece we all learned in high school:

> (* 1000000 (/ (log 4) (log 10)))

602059.9913279623

602,060 digits. That sounds about right!
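The step from the logarithm to the digit count is that a positive integer N has floor(log10 N) + 1 decimal digits. A small sketch that checks the shortcut against the printed number; the procedure names are made up for this example:

```scheme
;; Digits in 4^n via logarithms: floor(n * log10(4)) + 1.
(define (digits-by-log n)
  (+ 1 (inexact->exact (floor (* n (/ (log 4) (log 10)))))))

;; Digits in 4^n by computing the exact integer and measuring its printed form.
(define (digits-by-printing n)
  (string-length (number->string (expt 4 n))))

(digits-by-log 1000)         ; 603
(digits-by-printing 1000)    ; 603
(digits-by-log 1000000)      ; 602060
```

The logarithm version relies on floating-point arithmetic, so it could in principle be off by one when the product lands extremely close to a whole number. That does not happen for these two exponents.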

September 21, 2005 8:22 PM

Two Snippets, Unrelated?

First... A student related this to me today:

But after your lecture on mutual recursion yesterday, [another student] commented to me, "Is it wrong to think code is beautiful? Because that's beautiful."

It certainly isn't wrong to think code is beautiful. Code can be beautiful. Read McCarthy's original Lisp interpreter, written in Lisp itself. Study Knuth's TeX program, or Wirth's Pascal compiler. Live inside a Smalltalk image for a while.

I love to discover beautiful code. It can be professional code or amateur, open source or closed. I've even seen many beautiful programs written by students, including my own. Sometimes a strong student delivers something beautiful as expected. Sometimes, a student surprises me by writing a beautiful program seemingly beyond his or her means.

The best programmers strive to write beautiful code. Don't settle for less.

(What is mutual recursion, you ask? It is a technique used to process mutually-inductive data types. See my paper Roundabout if you'd like to read more.)
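A minimal sketch of the idea, using an illustrative pair of data types that is not drawn from the paper: a list of symbol expressions, where each symbol expression is either a symbol or another such list. Each type is defined in terms of the other, so the two procedures that process them call each other.

```scheme
;; <s-list>  ::= ()  |  (<sym-exp> . <s-list>)
;; <sym-exp> ::= <symbol>  |  <s-list>

;; Count occurrences of symbol s in an s-list.
(define (count-in-s-list s slist)
  (if (null? slist)
      0
      (+ (count-in-sym-exp s (car slist))
         (count-in-s-list s (cdr slist)))))

;; Count occurrences of symbol s in a single symbol expression.
(define (count-in-sym-exp s sexp)
  (if (symbol? sexp)
      (if (eq? s sexp) 1 0)
      (count-in-s-list s sexp)))

(count-in-s-list 'a '(a (b a) ((a) c)))   ; 3
```

The shape of the code mirrors the shape of the data: one procedure per type, one case per alternative in that type's definition.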

The student who told me the quote above followed with:

That says something about the kind of students I'm associating with.

... and about the kind of students I have in class. Working as an academic has its advantages.

Second... While catching up on some blog reading this afternoon, I spent some time at Pragmatic Andy's blog. One of his essays was called What happens when t approaches 0?, where t is the time it takes to write a new application. Andy claims that this is the inevitable trend of our discipline and wonders how it will change the craft of writing software.

I immediately thought of one answer, one of those unforgettable Kent Beck one-liners. On a panel at OOPSLA 1997 in Atlanta, Kent said:

As speed of development approaches infinity, reusability becomes irrelevant.

If you can create a new application in no time flat, you would never worry about reusing yesterday's code!

----

Is there a connection between these two snippets? Because I am teaching a course in programming languages this semester, and particularly a unit on functional programming right now, these snippets both call to mind the beauty in Scheme.

You may not be able to write networking software or graphical user interfaces using standard Scheme "out of the box", but you can capture some elegant patterns in only a few lines of Scheme code. And, because you can express rather complex computations in only a few lines of code, the speed of development in Scheme or any similarly powerful language approaches infinity much faster than does development in Java or C or Ada.

I do enjoy being able to surround myself with the possibility of beauty and infinity each day.

Posted by Eugene Wallingford | Permalink | Categories: Computing, Patterns, Software Development, Teaching and Learning

September 15, 2005 8:10 PM

Technology and People in a Flat World

Technology based on the digital computer and networking has radically changed the world. In fact, it has changed what is possible in such a way that how we do business and entertain ourselves in the future may bear little resemblance to what we do today.

This will surely come as no surprise to those of you reading this blog. Blogging itself is one manifestation of this radical change, and many bloggers devote much of their blogging to discussing how blogging has changed the world (ad nauseam, it sometimes seems). But even without blogs, we all know that computing has redefined the parameters within which information is created and shared, and defined a new medium of expression that we and the computer-using world have only begun to understand.

Last night, I had the opportunity to hear Thomas Friedman, Pulitzer Prize-winning international affairs columnist for the New York Times, speak on the material in his bestseller, The World Is Flat: A Brief History of the Twenty-First Century. Friedman's book tells a popular tale of how computers and networks have made physical distance increasingly irrelevant in today's world.

Two caveats up front. The first is simple enough: I have not read the book The World Is Flat yet, so my comments here will refer only to the talk Friedman delivered here last night. I am excited by the ideas and would like to think and write about them while they are fresh in my mind.

The second caveat is a bit touchier. I know that Friedman is a political writer and, as such, carries with him the baggage that comes from at least occasionally advocating a political position in his writing. I have friends who are big fans of his work, and I have friends who are not fans at all. To be honest, I don't know much about his political stance beyond my friends' gross characterizations of him. I do know that he has engendered strong feelings on both sides of the political spectrum. (At least one of his detractors has taken the time to create the Anti-Thomas Friedman Page -- more on that later.) I have steadfastly avoided discussing political issues in this blog, preferring to focus on technical issues, with occasional drift into the cultural effects of technology. This entry will not be an exception. Here, I will limit my comments to the story behind the writing of the book and to the technological arguments made by Friedman.

On a personal note, I learned that, like me, Friedman is from the American Midwest. He was born in Minneapolis, married a girl from Marshalltown, Iowa, and wrote his first op-ed piece for the Des Moines Register.

The idea to write The World Is Flat arose as a side effect of research Friedman was doing on another project, a documentary on off-shoring. He was interviewing Narayana Murthy, chairman of the board at Infosys, "the Microsoft of India", when Murthy said, "The global economic playing field is being leveled -- and you Americans are not ready for it." Friedman felt as if he had been sideswiped, because he considers himself well-studied in modern economics and politics, and he didn't know what Murthy meant by "the global economic playing field is being leveled" or how we Americans were so glaringly unprepared.

As writers often do, Friedman set out to write a book on the topic in order to learn it. He studied Bangalore, the renowned center of the off-shored American computing industry. Then he studied Dalian, China, the Bangalore of Japan. Until last night, I didn't even know such a place existed. Dalian plays the familiar role. It is a city of over a million people, many of whom speak Japanese and whose children are now required to learn Japanese in school. They operate call centers, manage supply chains, and write software for Japanese companies -- all jobs that used to be done in Japan by Japanese.

Clearly the phenomenon of off-shoring is not US-centric. Other economies are vulnerable. What is the dynamic at play?

Friedman argues that we are in a third era of globalization. The first, which he kitschily calls Globalization 1.0, ran from roughly 1492, when Europe began its imperial expansion across the globe, to the early 1800s. In this era, the agent of globalization was the country. Countries expanded their land holdings and grew their economies by reaching overseas. The second era ran from the early 1800s until roughly 2000. (Friedman chose this number as a literary device, I think... 1989 or 1995 would have made better symbolic endpoints.) In this era, the corporation was the primary agent of globalization. Companies such as the British East India Company reached around the globe to do commerce, carrying with them culture and politics and customs.

We are now in Friedman's third era, Globalization 3.0. Now, the agent of change is the individual. Technology has empowered individual persons to reach across national and continental boundaries, to interact with people of other nationalities, cultures, and faiths, and to perform commercial, cultural, and creative transactions independent of their employers or nations.

Blogging is, again, a great example of this phenomenon. My blog offers me a way to create a "brand identity" independent of any organization. (Hosting it at www.eugenewallingford.com would sharpen the distinction!) I am able to engage in intellectual and even commercial discourse with folks around the world in much the same way I do with my colleagues here at the university. In the last hour, my blog has been accessed by readers in Europe, Australia, South America, Canada, and in all corners of the United States. Writers have always had this opportunity, but at glacial rates of exchange. Now, anyone with a public library card can blog to the world.

Technology -- specifically networking and the digital computer -- has made Globalization 3.0 possible. Friedman breaks our current era into a sequence of phases characterized by particular advances or realizations. The specifics of his history of technology are sometimes arbitrary, but at the coarsest level he is mostly on the mark:

- 11/09/89 - The Berlin Wall fell, symbolically opening the door for the East and West to communicate. Within a few months, Windows 3.0 shipped, and the new accessibility of the personal computer made it possible for all of us to be "digital authors".

- 08/09/95 - Netscape went public. The investment craze of its IPO presaged the dot-com boom, and the resultant investment in network technology companies supplied the capital that wired the world, connecting everyone to everyone else.

- mid 1990s - The technology world began to move toward standards for data interchange and software connectivity. This standards movement resulted in what Friedman calls a "collaboration platform", on which new ways of working together can be built.

These three phases have been followed in rapid succession by a number of faster-moving realizations on top of the collaboration platform:

- outsourcing tasks from one company to another

- offshoring tasks from one country to another

- uploading of digital content by individuals

- supply chaining to maximize the value of offshoring and outsourcing by carefully managing the flow of goods and services at the unit level

- insourcing of other companies into the public interface of a company's commercial transactions

- informing oneself via search of global networks

- mobility (my term) of data and means of communication

Uploading is the phase where blogs entered the picture. But there is so much more. Before blogs came open source software, in which individual programmers can change their software platform -- and share their advances with others by uploading code into a common repository. And before open source became popular we had the web itself. If Mark Rupert objects to what he considers Thomas Friedman's "repeated ridicule" of those opposed to globalization, then he can create a web page to make his case. Early on, academics had an edge in creating web content, but the advance of computing hardware and now software has made it possible for anyone to publish content. The blogging culture has even increased the opportunity to participate in wider debate more easily (though, as discussions of the "long tail" have shown, that effect may be dying off as the space of blogs grows beyond what is manageable by a single person).

Friedman's description of insourcing sounded a lot like outsourcing to me, so I may need to read his book to fully get it. He used UPS and FedEx as examples of companies that do outsourced work for other corporations, but whose reach extends deeper into the core functions of the outsourcing company, intermingling in a way that sometimes makes the two companies' identities indistinguishable to the outside viewer.

The quintessential example of informing is, of course, Google, which has made more information more immediately accessible to more people than any entity in history. It seems inevitable that, with time, more and more content will become available on-line. The interesting technical question is how to search effectively in databases that are so large and heterogeneous. Friedman explains well to his mostly non-technical audience that we are at just the beginning of our understanding of search. Google isn't the only player in this field, obviously, as Yahoo!, Microsoft, and a host of other research groups are exploring this problem space. I hold out belief that techniques from artificial intelligence will play an increasing role in this domain. If you are interested in Internet search, I suggest that you read Jeremy Zawodny's blog.

Friedman did not have a good name for the most recent realization atop his collaboration platform, referring to it as all of the above "on steroids". To me, we are in the stage of realizing the mobility and pervasiveness of digital data and devices. Cell phones are everywhere, and usually in use by the holder. Do university students ever hang up? (What a quaint anachronism that is...) Add to this numerous other technologies such as wireless networks, voice over internet, bluetooth devices, ... and you have a time in which people are never without access to their data or their collaborators. Cyberspace isn't "out there" any more. It is wherever you are.

These seven stages of collaboration have, in Friedman's view, engendered a global communication convergence, at the nexus of which commerce, economics, education, and governance have been revolutionized. This convergence is really an ongoing conversion of an old paradigm into a new one. Equally important are two other convergences in process. One he calls "horizontaling ourselves", in which individuals stop thinking in terms of what they create and start thinking in terms of who they collaborate with, of what ideas they connect to. The other is the one that ought to scare us Westerners who have grown comfortable in our economic hegemony: the opening of India, China, and the former Soviet Union, and 3 billion new players walking onto a level economic playing field.

Even if we adapt to all of the changes wrought by our own technologies and become well-suited to compete in the new marketplace, the sheer numbers of our competitors will increase so significantly that the market will be a much starker place.

Friedman told a little story later in the evening that illustrates this point quite nicely. I think he attributed the anecdote to Bill Gates. Thirty years ago, would you prefer to have been born a B student in Poughkeepsie, or a genius in Beijing or Bangalore? Easy: a B student in Poughkeepsie. Your opportunities were immensely wider and more promising. Today? Forget it. The soft B student from Poughkeepsie will be eaten alive by a bright and industrious Indian or Chinese entrepreneur.

Or, in other words from Friedman, remember: In China, if you are "1 in a million", then there are 1300 people just like you.

All of these changes will take time, as we build the physical and human infrastructure we need to capitalize fully on new opportunities. The same thing happened when we discovered electricity. The same thing happened when Gutenberg invented the printing press. But change will happen faster now, in large part due to the power of the very technology we are harnessing, computing.

Gutenberg and the printing press. Compared to the computing revolution. Where have we heard this before? Alan Kay has been saying much the same thing, though mostly to a technical audience, for over 30 years! I was saddened to think that nearly everyone in the audience last night thinks that Friedman is the first person to tell this story, but gladdened that maybe now more people will understand the momentous weight of the change that the world is undergoing as we live. Intellectual middlemen such as Friedman still have a valuable role to play in this world.

As Carly Fiorina (who was recently Alan's boss at Hewlett-Packard before both were let go in a mind-numbing purge) said, "The 'IT revolution' was only a warm-up act." Who was it that said, "The computer revolution hasn't happened yet."?

The question-and-answer session that followed Friedman's talk produced a couple of good stories, most of which strayed into policy and politics. One dealt with a topic close to this blog's purpose, teaching and learning. As you might imagine, Friedman strongly suggests education as an essential part of preparing to compete in a flat world, in particular the ability to "learn how to learn". He told us of a recent speaking engagement at which an ambitious 9th grader asked him, "Okay, great. What class do I take to learn how to learn?" His answer may be incomplete, but it was very good advice indeed: Ask all your friends who the best teachers are, and then take their courses -- whatever they teach. It really doesn't matter the content of the course; what matters is to work with teachers who love their material, who love to teach, who themselves love to learn.

As a teacher, I think one of the highest forms of praise I can get from a student is to be told that they want to take whatever course I am teaching the next semester. It may not be in their area of concentration, or in the hot topic du jour, but they want to learn with me. When a student tells me this -- however rare that may be -- I know that I have communicated something of my love for knowledge and learning and mastery to at least one student. And I know that the student will gain just as much in my course as they would have in Buzzword 401.

We in science, engineering, and technology may benefit from Friedman's book reaching such a wide audience. He encourages a focus not merely on education but specifically on education in engineering and the sciences. Any American who has done a Ph.D. in computer science knows that CS graduate students in this country are largely from India and the Far East. These folks are bright, industrious, interesting people, many of whom are now choosing to return to their home countries upon completion of their degrees. They become part of the technical cadre that helps to develop competitors in the flat world.

As I listened last night, Chad Fowler's new book My Job Went to India came to mind. This is another book I haven't read yet, but I've read a lot about it on the web. My impression is that Chad looks at off-shoring not as a reason to whine about bad fortune but as an opportunity to recognize our need to improve our skills for participating in today's marketplace. We need to sharpen our technical skills but also develop our communication skills, the soft skills that enable and facilitate collaboration at a level higher than uploading a patch to our favorite open source project. Friedman, too, looks at the other side of off-shoring, to the folks in Bangalore who are working hard to become valuable contributors in a world redefined by technology. It may be easy to blame American CEOs for greed, but that ignores the fact that the world is changing right before us. It also does nothing to solve the problem.

All in all, I found Friedman to be an engaging speaker who gave a well-crafted talk full of entertaining stories but with substance throughout. I can't recommend his book yet, but I can recommend that you go to hear him speak if you have the opportunity.

Posted by Eugene Wallingford | Permalink | Categories: Computing, Software Development, Teaching and Learning

September 13, 2005 6:08 PM

Thinking About Planning, of the Organizational Variety

In my dual roles of head of my department and chair of my university's graduate faculty, I find myself thinking about planning a lot. The dean of the Graduate College is initiating a year-long strategic planning cycle for graduate education university-wide. The dean of the College of Natural Sciences is initiating a year-long strategic planning cycle for our college. As a new head, I am drawn to developing a long-term plan to guide my department as we make decisions in times that challenge us with threats and opportunities alike.

Administrators think a lot about planning, I think -- at least the ones who want to accomplish something do. The cynical take is that strategic planning is what you do when you want to fill up the time in which you should be doing real work. But I think that unfairly characterizes what many good leaders are trying to do.

Now that I am a department head, I see that I have a lot of choices to make every day. Do I approve this course substitution? Do I spend several hundred dollars on a piece of equipment requested by one of the faculty? Do I schedule this experimental course or that? As important, or more so, are the decisions I make about how to spend my time. Do I make plans to recruit students at a local high school? Do I meet with someone in student services about mock interviews for graduating seniors? Do I write an article, study the budget, network with other administrators on campus, work on my own research?

My job is to serve the faculty and students in my department. I serve them by spending my time and intellectual energy to their benefit. Without a shared vision of where we want to go, how do I know how to do that as well as possible? When it comes to making the sort of decisions that the faculty want me to handle precisely because they are routine or should be -- without a shared vision, how do I do this as well as possible?

The same, of course, applies to faculty. As faculty, we want to make choices with our time and energy that both develop our scholarly interests and help the department improve. Without a plan, we are left to divine good choices from among what is usually a large number of compatible choices.

So we as a department need a plan. But not the sort of strategic plan that one often sees in organizations. The bad plans I've seen usually come in two forms. In the first, the plan is essentially defined from above and pushed down through the organization. Without "ownership" and commitment, such a plan is just paper to file away, to haul out for show when someone wants to see The Plan. In the second, the people build a plan bottom-up, but it is full of vague generalities, platitudes with which no one is likely to disagree -- because it says nothing. Often, such plans are long on vision and values but short on objectives and actions that will achieve them.

I do not have much experience with strategic planning, and certainly none of the sort I want to have before doing it with my department. But I am thinking about strategic planning a lot, about how I can help my department do it in a way that is worth our effort and that helps us move in the right direction.

I've begun to observe a skilled facilitator lead the strategic planning cycle for graduate education here, and I've already learned a bit. She is leading a large group of stakeholders in something that operates like a distributed writers workshop from the PLoP conferences: we will work in groups to identify strengths in the current system, weaknesses in the current system, opportunities to improve the system, and threats to the system. Then we will combine our suggestions toward identifying commonly-held views upon which to build. I hope to learn more from this process as we proceed.

As for my department, I am hoping to proceed in a more agile way, as in the planning game. Our mission will play the role of system metaphor in XP. I want to do just enough thinking about the future that we can identify goals whose success can be measured concretely and the metric for which we identify before taking the actions. As much as we can we will work and make decisions as a whole team, with frequent evaluation of our progress and adjustment of our course as necessary.

I know that others have explicitly tried this sort of approach in a non-programming context, but there is nothing quite like doing something yourself to really learn it. The sooner we get to "code", the sooner we can collect feedback and improve.

September 12, 2005 7:39 PM

The Taper Begins

I'm feeling deja vu all over again, only two weeks ahead of schedule. Last September 27, I blogged about the end of my major training phase for the Des Moines Marathon, my second marathon. I ran Des Moines in 3:45, which was an 18-minute improvement over my inaugural marathon in Chicago.

Today I can write a corresponding entry for my third, the Twin Cities Marathon, which takes place on October 2. If you've been following the story up to now, you know that I've run a 24.8-miler, two 22-milers, and a 20-miler. I've mostly been very happy with my times and how I've felt after my long training runs. I've also had some good speed work-outs, though they haven't always ended as I had hoped.

Yesterday, I did my second 20-miler of the season. Unfortunately, I have been sick for several days, which made this my least satisfying long run in terms of absolute performance all year. To be honest, I probably shouldn't have run at all, should have just rested and found a way to get a few extra miles in over this week. But I have high hopes for a healthy and effective taper over the next three weeks, and I did not want to start out my taper in the hole.

I suppose that, in context, I should be happy with my performance yesterday. I was weak, depleted, tired; but I gutted it out for 2:57:00 and even threw in a 7:48 mile near the end. But that left me even weaker, more depleted, and more tired. All I could do the rest of the day was lie back and watch 35-year-old Andre Agassi gut out three hard sets against world No. 1 Roger Federer in the U.S. Open tennis championship, before falling to time and the relentless, all-purpose game of a great champion. Watching Agassi's concentration and shot-making after having played 3 consecutive 5-set matches, at an age when most professional tennis players have long since hung up their rackets, was inspiring. I hope that I can summon a resolve like his in those last miles of my marathon, as I approach downtown St. Paul.

I feel a little better today and hope to do an easy 5 miles tomorrow morning. If all goes well, I'll do one last long speed work-out this week and a shorter speed work-out next week. But, as I said last year, my main goal at this point in training is to enjoy my runs, let my body recover from the pounding it's taken the last few weeks (225+ miles over four weeks), and break in a new pair of shoes for the race. The week before the race will consist of just a few short, easy runs, with a couple of miles at marathon goal pace thrown in to preserve muscle memory.

Wish me luck.

September 09, 2005 12:44 PM

Missing PLoP

This being Friday, September 9, I have to come to grips with the fact that I won't be participating in PLoP 2005. This is the 12th PLoP, and it would have been my 10th, all consecutively. PLoP has long been one of my favorite conferences, both for how much it helps me to improve my papers and my writing and for how many neat ideas and people I encounter there. Last year's PLoP led directly to four blog entries, on patterns as autopsy, patterns and change, patterns and myth, and the wiki of the future, not to mention a few others indirectly later. Of course, I also wrote about the wonderful running in and around Allerton Park, the pastoral setting of PLoP. I will dearly miss doing my 20-miler there this weekend...

Sadly, events conspired against me going to PLoP this year. The deadline for submissions fell at about the same time as the deadline for applications for the chair of my department, both of which fell around when I was in San Diego for the spring planning meeting for OOPSLA 2005. Even had I submitted, I would have had a hard time doing justice to paper revisions through the summer, as I learned the ropes of my new job. And now, new job duties make this a rather bad time to hop into the car and drive to Illinois for a few days. (Not that I wasn't tempted early in the week to drive down this morning to join in for a day!)

I am not certain if other academics feel about some of the conferences they attend the way I feel about PLoP, OOPSLA, and ChiliPLoP, but I do know that I don't feel the same way about other conferences that I attend, even ones I enjoy. The PLoPs offer something that no other conference does: a profound concern for the quality of technical writing and communication more generally. PLoP Classic, in particular, has a right-brain feel unlike any conference I've attended. But don't think that this is a result of being overrun by a bunch of non-techies; the conference roster is usually dominated by very good minds from some of the best technical outfits in the world. But these folks are more than just techies.

Unfortunately I'm not the only regular who is missing PLoP this year. The attendee list for 2005 is smaller than in recent years, as was the number of submissions and accepted papers. The Hillside Group is considering the future of PLoP and ChiliPLoP in light of the more mainstream role of patterns in the software world and the loss of cachet that comes when one software buzzword is replaced with another. Patterns are no longer the hot buzzword -- they are a couple of generations of buzzwords beyond that -- which changes how conferences in the area need to be run. I think, though, that it is essential for us to maintain a way for new pattern writers to enter the community and be nurtured by pattern experts. It is also essential that we continue to provide a place where we care not only about the quality of technical content but also about the quality of technical writing.

On the XP mailing list this morning, Ron Jeffries mentioned the "patterns movement":

In the patterns movement, there was the notion of "forces". The idea of applying a pattern or pattern language was to "balance" the forces.

I hope Ron's use of past tense was historical, in the sense that the patterns movement first emphasized the notion of forces "back then", rather than a comment that the patterns movement is over. The heyday is over, but I think we software folks still have a lot to learn about design from patterns.

I am missing PLoP 2005. Sigh. I guess there's always ChiliPLoP 2006. Maybe I can even swing EuroPLoP next year.

September 01, 2005 10:44 PM

Back to Scheme in the Classroom

In addition to my department head duties, I am also teaching one of my favorite courses this semester, Programming Languages and Paradigms. The first part of the course introduces students to functional programming in Scheme.

I've noticed an interesting shift in student mentality about programming languages since I first taught this course eight or ten years ago. There are always students whose interest is in learning only those languages that will be of immediate use to them in their professional careers. For them, Scheme seems at best a distraction and at worst a waste of time. I feel sorry for such folks, because they miss out on a lot of beauty with their eyes so narrowly focused on careers. Even worse, they miss out on a chance to learn ideas that may well show up in the languages they will find themselves using ... such as Python and Ruby.

But increasingly I encounter students who are much more receptive to what Scheme can teach them. It seems that this shift has paralleled the rise of scripting languages. As students have come to use Perl, Python, and PHP for web pages and for hacking around with Linux, they have come to see the power of what they call "sloppy" languages -- languages that don't have a lot of overhead, especially as regards static typing, that let them make crazy mistakes, but that also empower them to say and do a lot in only a few lines of code. After their experiences in our introductory courses, where students learn Java and Ada, students feel freed when using Perl, Python, and PHP -- and, yes, sometimes even Scheme. Now, Scheme is hardly a "sloppy" language in the true sense of the word, and its strange parenthetical syntax is unforgiving. But it gives them power to say things that were inconvenient or darn near impossible in Java or Ada, in very little code. Students also come to appreciate Mumps, which they learn in our Information Storage and Retrieval course, for much the same reason.

I'm looking forward to the rest of this course, both for its Scheme and for its programming languages content. What great material for a computer science student to learn. With any luck, they come to know that languages and compilers aren't magic, though sometimes they seem to do magic. But we computer scientists know the incantations that make them dance.

Speaking of parentheses... Teaching Scheme again reminds me of just how compact Scheme's list notation can be. In class today, we discussed lists, quotation, and the common form of programs and data. Quoted lists of symbols carry all of the structural information we need to reason about many kinds of data. Contrast that with the verbosity of, oh, say, XML. (Do you remember this post from the past?) Just last month Brian Marick spoke a Lisp lover's lament on an agile testing mailing list. Someone had said:

Note to Brian: Explain that XML is not the be-all and end-all of manual data formats. Then explain it's the easiest place to start, modulo your tool quality.

To which Brian replied:

I'll use XML instead of YAML as my concession to reality and penance for not talking about Perl. It will be hard not to launch into my old Lisp programmer's rant about how all the people who thought writing programs in tree structures with parentheses was unreadable now think writing data in tree structures with angle brackets and keyword arguments and quotes is somehow just the cat's pajamas.

(YAML is a lightweight, easy to read mark-up language for use with scripting languages. Brian might also bow to reality and not use LAML, a Lispish mark-up language for the web implemented in Scheme.)
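For anyone who wants to see the contrast between parenthesized lists and angle brackets on the page, here is a rough sketch in Python rather than Scheme. The `course` record and the helper names `to_sexpr` and `to_xml` are my own inventions for illustration; they are not from Brian's post or from any library. The sketch renders one nested structure both ways and compares the lengths.

```python
from xml.etree.ElementTree import Element, tostring

# Hypothetical sample data: each tuple is (tag, child, child, ...).
course = ("course", ("name", "plp"), ("language", "scheme"))

def to_sexpr(node):
    """Render a nested tuple as a parenthesized list, Lisp style."""
    if isinstance(node, tuple):
        return "(" + " ".join(to_sexpr(child) for child in node) + ")"
    return str(node)

def to_xml(node):
    """Render the same nested tuple as an XML element."""
    tag, *children = node
    element = Element(tag)
    for child in children:
        if isinstance(child, tuple):
            element.append(to_xml(child))
        else:
            # A bare string becomes the element's text content.
            element.text = str(child)
    return element

sexpr = to_sexpr(course)
xml = tostring(to_xml(course), encoding="unicode")

print(sexpr)  # (course (name plp) (language scheme))
print(xml)    # <course><name>plp</name><language>scheme</language></course>
print(len(sexpr), len(xml))
```

Both strings carry the same tree. The XML version just says every tag twice, which is more or less Brian's point.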

Speaking of XML ... Here is my favorite bit of Angle Bracket Speak from the last month or so, courtesy of James Tauber:

<pipe>Ceci n'est pas une pipe</pipe>

So that's how I'm feeling about my course right now. Any more, when someone asks me how my class went today, I feel like the guy answering in this cartoon: