January 30, 2006 5:51 PM

Making Things Worse in the Introductory Course

Reading Physics Face over at Uncertain Principles reminded me of a short essay I wrote a few months ago. Computer scientists get "the face", but for different reasons. Fewer people take computer science courses, in high school or college, so we don't usually get the face because of bad experiences in one of our courses. We get the face because people have had bad experiences using technology.

(On the flip side, at least physicists don't have to listen to complaints like "Gravity didn't work for me yesterday, but it seems to be working okay right now. Why can't you guys make things work all the time?" or "Why are there so many different ways I can exert force on an object? That's so confusing!")

But the main thrust of Chad's article struck a chord with me. A physics education group at the University of Maryland, College Park found that introductory physics courses cause student expectations about physics -- about the nature of physics as an intellectual activity -- to deteriorate rather than improve! In every group, students left their intro physics course thinking less like a physicist, not more.

I know of no such study of introductory CS courses (if you do, please let me know), but I suspect that many of our courses do the same thing. For students who leave CS 1 or CS 2 unhappy or with an inaccurate view of the discipline, their primary image of computing is an overemphasis on programming drudgery. I've written several times here in the last year or so about how we might make our intro courses more engaging -- make them about something, more than "just programming" -- via the use of engaging problems from the "real world", maybe even with a domain-specific set of applications. I notice that Owen Astrachan and his colleagues at Duke are presenting a paper at SIGCSE in early March on using the science of networks as a motivating centerpiece for CS 1. Whatever the focus, we need to help students see that computing is about concepts bigger than a for-loop. In the 1980s, we saw a "breadth-first" curriculum movement that aimed to give students a more accurate view of the discipline in their first year or two, but it mostly died out from lack of interest -- and the logistical problem that students do need to master programming before they can go very far in most CS programs.

I don't have any new answers, but seeing that physics has documented this problem with their introductory courses makes me wonder even more about the state of science education at the university.

January 28, 2006 2:12 PM

Reading Skills and Practice

Few people would try to run a marathon without training for lots and lots of miles. Few would imagine that they could make their high school basketball team without playing lots of ball and working on their skills. And I doubt any of us think that we could earn a spot in the local symphony by noodling on an instrument for five or ten minutes a day.

Yet it seems that many grade school students expect to succeed in school despite the habit of reading 10 minutes a day or less.

A UNI colleague from the College of Education recently shared this nugget from research into children's literacy. The article he cited (Keith Stanovich, "Matthew Effects in Reading", Reading Research Quarterly, 21:360-406, 1996) reported that fewer than 10% of sixth graders read as much as 40 minutes per day on average, and 50% of them read only 10 minutes per day.

So what? That paper is over nine years old now, right? Well, first of all, I doubt that the trend in the last decade has been for students to read significantly more. The trends in television viewing (four hours per day?!) and computer usage have almost certainly prevented students from reading more, and today's students may well read less.

Second, and most immediate to a university professor, those sixth graders are now college students.

How can anyone become sufficiently skilled at reading with so little practice?

I spent more time daily as a sixth grader shooting baskets than that. No athletic coach would let her players do so little drill work on the fundamental skills of the sport. My piano teacher tells me that I should practice at least 30 minutes a day if I want to make more than glacial progress.

And I certainly want my students to get more practice programming than that. I know that it doesn't always happen, but in some of my courses -- for example, programming languages, with weekly assignments, and compilers, with the large team project that keeps them steadily busy throughout the semester -- students have an opportunity to practice daily, or nearly so. My advice to students sounds a lot like what piano teachers tell their students: program a little bit daily, and develop habits that will serve you well the rest of your career.

But that's programming. Many writers and writing teachers tell folks the same thing about writing... Write daily. Create habits. But do we tell our students the same thing about reading?

One consequence of the difference in reading habits and skills among sixth graders is that, over time, the gap in reading skills and academic performance between the better readers and the poorer readers grows larger. This is sometimes called the Matthew effect, as in the title of Stanovich's paper. This name alludes to a verse in the Christian Bible, Matthew 25:29, which says, "For to everyone who has, more will be given and he will grow rich; but from the one who has not, even what he has will be taken away." Sadly, bad reading habits as a youngster tend to compound over time, and the usurious interest rate that our kids pay is a huge handicap in the classroom and workplace.

How can anyone expect to succeed in college without being able to read really well?

I doubt some students ever think about it much. When some do, I'm sure they figure they can just "get by" as they always have. Later, when school suddenly seems to be more difficult, they decide they don't like school and aren't cut out for the nerdy stuff.

On the flip side, I think that many students think that they don't have to be able to read well to do computer science anyway. How wrong they are. College-level computing texts contain complex ideas, written in a technical jargon that can feel like a foreign language to newcomers. If you can't read the textbook I assign, or the lecture notes I write, then you are left to understand ideas and implement them in code with little support.

The guy who cited the statistics I mentioned above went on to say, in an e-mail to an open discussion list,

I'm afraid that many of our students have read so little that they simply do not have the experience to cope with the textbooks used in our courses. Many of them do not choose to read except for what informational reading is necessary for daily survival.

The implication for us as faculty is that we cannot expect students to succeed in our courses based on the reading skills they bring with them. Some faculty suggest that we should simply fail the ones who can't make the grade -- and the numbers say that that could be half or more of them. An alternative is that we have to teach them to read, at least how to read, understand, and write the material in our discipline. We will have to define words for them, both technical jargon and words that are likely outside their working vocabularies. Really, though, that's just an extension of what we already do, which is to explain the ways computer scientists and software developers see the world and think about problems.

Most CS professors know that we have to show students examples of how to write -- programs they can read and then emulate in their own code. These programs grow in size and complexity throughout their careers as students and developers. My education colleague suggests that we might do the same sort of thing for reading, say, by talking aloud while 'reading' -- "stating what you are looking for, reading passages aloud and voicing important points, then stating questions these points raise in your mind; and voicing your expectations of what might come next".

I do things like this with programs in class. In my compiler course, I did a low-grade version of this in our second week, working through a simple compiler that I wrote a couple of years ago. Some of my questions of the code questioned my own design style, which could well have been more object-oriented than it was. I've not often considered doing this with the textbook, though I seem to run across suggestions to do so every few years.

(I have done this in my AI course with a journal paper -- Alan Turing's Computing Machinery and Intelligence. It is usually a great success, because students really get into Turing's claims and counterarguments.)

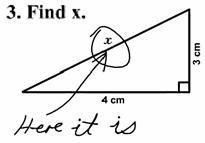

A friend sent me this cartoon yesterday, and I laughed. But it's less funny if we think that our students may be unable to read deep enough to understand the problems we set before them, or to comprehend the textbook that augments our lectures in teaching students how to solve problems. Rather than dumbing down our courses or our textbooks, we may have to take new interest in teaching our students to read -- and not just code. The alternative is to "maintain high standards" and depopulate our majors at a time when our country and world desperately need more citizens educated in computing or at least prepared to live, vote, and make decisions in an increasingly technological world.

"Find x." Okay, it's still funny.

January 25, 2006 6:07 PM

Camouflage in Computer Science

A couple of months ago, I mentioned that I was submitting a proposal to speak at a local conference on camouflage. The conference is called "CAMOUFLAGE: Art, Science and Popular Culture" and will be held at my university on April 22. It is being organized by Roy Behrens, a graphic design professor here about whom I wrote a bit in that old entry. My proposal was accepted. You can see a list of many of the conference speakers on the promotional poster shown here.

Behrens has attracted speakers from all over the world, despite no financial support for anyone. He recently announced that Marvin Bell, the first Poet Laureate of Iowa, will open the conference by reading a new poem about camouflage, especially written for the event, named "Dead Man". I've enjoyed hearing poets at CS conferences before, most recently Robert Hass at OOPSLA, but usually they've been invited to speak on creativity or some other "right-brain" topic. I've never been at a conference with a world-premiere poetry reading... It should be interesting!

A conference on camouflage run out of a graphics arts program might seem an odd place for a computer science professor to speak, but I thought of proposing a talk almost as soon as I heard about the conference. Computer scientists use camouflage, too, but with a twist -- as a way to transmit a message without anyone but the intended recipient being aware that a message exists. This stands in contrast to encryption, a technique for concealing the meaning of a message even as the message may be publicly known. I've studied encryption a bit in the last couple of years while preparing for and teaching an undergraduate course in algorithms, but I've not read as much on this sort of "computational camouflage", known more formally as steganography.
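To make the contrast with encryption concrete, here is a minimal sketch of one common form of steganography, least-significant-bit hiding. It is only an illustration, not material from the talk: the function names `hide` and `reveal`, the two-byte length prefix, and the use of a plain byte string as a stand-in for image pixel data are all assumptions made for the example.

```python
def hide(cover: bytes, message: bytes) -> bytes:
    """Hide message in the least significant bit of each cover byte."""
    # Prefix the message with a 2-byte length so the reader knows when to stop.
    payload = len(message).to_bytes(2, "big") + message
    bits = [(byte >> shift) & 1 for byte in payload for shift in range(7, -1, -1)]
    if len(bits) > len(cover):
        raise ValueError("cover is too small for this message")
    stego = bytearray(cover)
    for i, bit in enumerate(bits):
        stego[i] = (stego[i] & 0xFE) | bit  # overwrite only the lowest bit
    return bytes(stego)


def reveal(stego: bytes) -> bytes:
    """Recover a message written by hide()."""
    def read_bytes(start_bit: int, count: int) -> bytes:
        out = bytearray()
        for b in range(count):
            value = 0
            for i in range(8):
                value = (value << 1) | (stego[start_bit + b * 8 + i] & 1)
            out.append(value)
        return bytes(out)

    length = int.from_bytes(read_bytes(0, 2), "big")
    return read_bytes(16, length)


cover = bytes(range(256)) * 2   # hypothetical stand-in for 512 bytes of pixel data
stego = hide(cover, b"meet at noon")
print(reveal(stego))
# No cover byte changes by more than 1, which is why the result looks unchanged.
print(max(abs(a - b) for a, b in zip(cover, stego)))
```

The message here is not encrypted at all; anyone who knows to look at the low bits can read it. The protection comes entirely from the fact that the altered data is nearly indistinguishable from the original.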

This is not an area of research for me, at least not yet, but it has long been an idea that intrigues me. This audience isn't looking for cutting-edge research in computer science anyway; they are more interested in the idea of hiding things via patterns in their surroundings. This conference affords me a great opportunity to learn more about steganography and other forms of data hiding -- and teach a non-technical audience about it at the same time. If you have ever taught something to beginners, you know that committing to teach a topic forces you to understand it at a deeper level than you might otherwise be able to get away with. For me, this project will be one part studying computer science, one part educating the public, and one part learning about an idea bigger than computer science -- and where CS fits into the picture.

I have titled my talk NUMB3RS Meets The DaVinci Code: Information Masquerading as Art. (I'm proud of that title; I hope it's not too kitschy...) I figure I'll show plenty of examples, in text and images and maybe even music, and then relate steganography to the idea of camouflage more generally.

I also figure that I will have a lot of fun writing code!

January 22, 2006 11:44 AM

Offering Grace and Hope

Two quotes have been running through my mind the last few days, as I have worked on letters of evaluation for faculty on the tenure track or up for promotion:

[Leaders] provide a measure of grace as the antidote to the behavior of people who poison our society with disgrace.

And:

Steeling oneself to become what one is not requires hope. Leaders can give it.

Anyone who thinks that being a manager or a department chair doesn't come with a lot of moral responsibility misunderstands the task.

(Both quotes are from Leadership Jazz, by Max De Pree, a book I'll write more about later.)

January 21, 2006 2:50 PM

Golden Rules, and a Favorite Textbook

What of Tanenbaum's talk? Reading through his slides reminded me a bit of his talk. He titled his address "Ten Golden Rules for Teaching Computer Science", and they all deserve attention:

1. Think long-term.

2. Emphasize principles, not facts.

3. Expect paradigm shifts.

4. Explain how things work inside.

5. Show students how to master complexity.

6. Computer science is not science.

7. Think in terms of systems.

8. Keep theory under control.

9. Ignore hype.

10. Don't forget the past.

Items 1-3 and 9-10 aim to keep us professors focused on ideas, not on the accidents and implementations of the day. Those accidents and implementations are the examples we can use to illustrate ideas at work, but they will pass. Good advice.

Items 4-5 and 7 remind us that we and our students need to understand systems inside and out, and that complexity is an essential feature of the problems we solve. Interfaces, implementations, and interactions are the "fundamentals".

Items 6 and 8 reflect a particular view of computing that Tanenbaum and many others espouse: computer science is about building things. I agree that CS is about building things, but I don't want us to discount the fact that, as we build things and study the results, we are in fact laying a scientific foundation for an engineering discipline. We are doing both. (If you have not yet read Herb Simon's The Sciences of the Artificial, hie thee to the library!) That said, I concur with Tanenbaum's reminder to make sure that we apply theory in a way that helps us build systems better.

I especially liked one slide, which related a story from his own research. One of his students implemented the mkfs program for MINIX using a complex block caching mechanism. The mechanism was so complex that they spent six months making the implementation work correctly. But Tanenbaum estimates that this program "normally runs for about 30 sec[onds] a year". How's that for unnecessary optimization!
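A back-of-the-envelope calculation shows just how lopsided that trade was. The sketch below rests on two assumptions that are not from the talk: that six months of work amounts to roughly 1,000 working hours, and that the caching mechanism eliminated the entire 30 seconds of run time, which is the most generous case possible.

```python
# Assumptions (not from the talk): six months of work is roughly 1,000
# working hours, and the caching scheme saves the full 30 seconds per year.
HOURS_INVESTED = 1_000
SECONDS_SAVED_PER_YEAR = 30

seconds_invested = HOURS_INVESTED * 3600
years_to_break_even = seconds_invested / SECONDS_SAVED_PER_YEAR
print(f"{years_to_break_even:,.0f} years to break even")
```

Under those assumptions, a single installation would need on the order of a hundred thousand years to earn back the effort. The figure shrinks if many people run the program, but it stays far out of proportion to the benefit.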

The other part of the talk I liked most was his pairwise comparison of a few old textbooks, to show that some authors had captured and taught timeless principles, while others had mostly taught the implementations of the day. He held up as positive examples Per Brinch Hansen's operating systems text and John Hayes's architecture text. I immediately thought of my favorite data structures book ever, Thomas Standish's Data Structure Techniques.

I am perhaps biased, as this was the textbook from which I learned data structures as a sophomore in college. In the years that have followed, many, many people have written data structures books, including Standish himself. The trend has been to make these books language-specific ("... in C", "... using Java") or to have them teach other content at the same time, such as object-oriented programming or software engineering principles. Some of these books are fine, but none seem to get to the heart of data structures as well as Standish did in 1980. And this book made me feel like I was studying a serious discipline. Its dark blue cover with spare lettering; its tight, concise text; its small, dense font; its mathematical notation; its unadorned figures... all communicated that I was studying something real, a topic that mattered. I loved writing programs to make its trees bloom and its hash tables avoid collisions.

A valuable result of the textbook expressing all algorithms using pseudocode is that we had to learn how to write code for ourselves. We thought about the algorithms as algorithms, and then we figured out how to make PL/I programs implement them. (Yes, PL/I. Am I old yet?) We finished the course with both a solid understanding of data structures and a fair amount of experience turning ideas into code.

Reading through someone's presentation slides can be worth the time even if they can't recreate the talk they shadow.

Postscript: Who, to my surprise, has a CS2-related paper in the most recent issue of inroads: The SIGCSE Bulletin? Thomas Standish. It discusses a fast sorting algorithm that works in O(n) for certain kinds of data sets. I haven't studied the paper yet, but I do notice that the algorithm is given in... Java. Oh, well.
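For readers wondering how a sort can run in linear time at all, counting sort is a classic textbook example. To be clear, this is not Standish's algorithm, which I have not studied; it is only a sketch of how restricting the input, here to small non-negative integers with a known maximum, lets a sort avoid comparisons entirely. The function name and the `max_value` parameter are my own choices for illustration.

```python
def counting_sort(values: list[int], max_value: int) -> list[int]:
    """Sort non-negative integers no larger than max_value in O(n + max_value)."""
    counts = [0] * (max_value + 1)
    for v in values:
        if not 0 <= v <= max_value:
            raise ValueError(f"{v} is outside the range 0..{max_value}")
        counts[v] += 1          # tally how many times each key occurs

    result: list[int] = []
    for value, count in enumerate(counts):
        result.extend([value] * count)   # emit each key as often as it was seen
    return result


print(counting_sort([3, 1, 4, 1, 5, 9, 2, 6], max_value=9))
```

The running time is proportional to the number of items plus the size of the key range, so the approach only pays off when that range is modest compared with the data set.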

January 21, 2006 2:38 PM

On Presentations, Slides, and Talks

Someone on the SIGCSE mailing list recently requested a reference for a presentation at a past conference that had suggested we teach concepts that had "staying power". He had looked through past proceedings for the paper with no success.

It turns out there wasn't a paper, because the presentation had been the 1997 keynote talk by Andrew Tanenbaum, who that year received the SIGCSE award for Outstanding Contributions to Computer Science Education. Fortunately, Prof. Tanenbaum has posted the slides of his talk on his web site.

Of course, reading Tanenbaum's presentation slides is not the same experience at all as hearing his talk as a live performance. Whenever I come across a conference proceedings, I run through the table of contents to see what all happened at the conference. The titles of the keynote addresses and invited talks always look so inviting, and the speakers are usually distinguished, so I turn to the listed page for a paper on the topic of the presentation... only to find at most a one-page abstract of the talk. Sometimes there is no page number at all, because the proceedings carry no other record of the talk.

This has made me appreciate very much those invited speakers who write a paper to accompany their talks. Of course, reading a paper is not the same experience at all as hearing a talk live, either. But written text can say so much more than the cute graphics and bullet points that constitute most speakers' presentation slides. And for a talk that is done right -- such as Alan Kay's lectures at OOPSLA 2004 -- the presentation materials are so dynamic that the slides convey even less of the talk's real value. (The best way Alan could share his talk materials would be to make the Squeak image he used available for download!)

I think that this is why I like to write such complete notes for the talks I attend, to capture as best I can the experience and thoughts I have in real-time. Having a blog motivates me, too, as it becomes a distribution outlet that justifies even more a job done better.

This is also why I like to write detailed lecture notes, à la book chapters, for my courses. I write them as much for me as for my students, though the students give me an immediate reason to write and receive what I hope is a substantial benefit.

January 20, 2006 12:25 PM

Marketing a New Academic Program

My department is doing something new, at least for departments here at UNI: marketing our new programs. The modern university is tricked out with a full-featured marketing and public relations department, much like a commercial firm's own marketing division. I am guessing that universities have long had people who do this sort of thing, if only informally, but over time these offices have evolved into something more. In a cultural and political environment where dollars and (good) students are increasingly scarce resources, universities have begun to market themselves actively. This has typically happened at the institution level, "selling the university" to potential students, to potential donors, and to legislative bodies. Sometimes, this marketing extended to major new initiatives, such as an interdisciplinary graduate program or a new push to offer programs by distance education. But individual departments have, as near as I can tell, mostly ridden the coattails of university-wide promotion.

But my department has two new majors, bioinformatics and "networking and system administration", that we would like to promote. One of the goals faculty expressed to me when I became department head was to promote these programs and build up their enrollments and financial support. Department-wide enrollment is down quite a bit over the last few years, as it is at most schools, and these programs are seen as opportunities to reverse the trend by reaching new audiences. By promoting the new majors, we would also increase the visibility of our department -- and the visibility of computer science more generally as an academic discipline. In times of tight resources, this seems almost essential. And, because we are not a "research-intensive" university, or a private college with an elite reputation, we sometimes have to try harder to let people know about us.

(How different that is from when I was an undergraduate student of computer science during the first half of the '80s, or even from the mid-'90s, when almost any school with a CS program or something like it was bursting at the seams with students!)

I suppose that we could do the same old things that departments always do when they create new programs, but I think it will be difficult or impossible to broaden our base of potential students with just word of mouth, a new brochure, and a good web site. And considering that the CS faculty and I are not experts at marketing or public relations, we probably would not get fair value for our efforts doing amateurish things on our own.

So, our new bioinformatics professor and I met with one of the principals in University Marketing and Public Relations to talk about how we could do better. In response to our conversation, he and his colleagues developed a full-blown marketing plan, like they would for rolling out a new car or brand of toothpaste. They helped us distinguish between features, which tend to be things that we like about our program, and benefits, which are the things that people in the target market -- whether students, parents, alumni, or industry -- care about. They've designed some new promotional materials and helped us to identify ways to reach our target markets, via e-mail, press releases, outreach events, media liaisons, and so on. Some of the things that they propose are things that we can do for ourselves; others are things that they can do. Some are things that they do for free, like write and issue press releases, and others they do for a fee, like design and print materials. As a department, we are left to decide where best to spend our efforts and our scarce discretionary dollars. For a new department head, it's a challenge, but one that I hope the faculty can help me meet.

Marketing? PR? That sounds awfully unacademic... Some faculty ask, "Are we selling something?" The reality is, yes, we are selling something. Students choose to come to UNI, or not. They choose to major in our department, or not.

I am approaching this endeavor in the most idealistic sense of marketing. Students cannot come here to major in bioinformatics if they don't know that the program exists, or what bioinformatics is, or why this would be a good career or academic choice. Their parents, high school counselors, and science teachers cannot suggest our program, or recommend that students consider careers in computational science, if they don't know about them. Iowa's biotechnology companies won't come to recruit our students if they don't know we exist; nor can they help us educate students with their intellectual and financial resources.

Public information is an essential good in a free market, even an intellectual marketplace. This is especially true for computer science in an era when the popular press feeds folks a steady diet of stories about off-shoring, outsourcing, lay-offs, and the dot-com bust. How can students know that this is the best time ever to be a CS student if we don't share the thrill?

These days, the idea of marketing higher education brings to many people's minds the tactics of for-profit institutions. The worst of these schools mislead potential students, but most just play on the fears and insecurities of unhappy people. That is not the sort of marketing a school like UNI does, and not the sort of thing we want to do.

We will not distort information to attract students or focus on style over substance. Computer science is an academic discipline. We are about ideas -- marvelous, exciting, dynamic ideas! We can't tell students and parents that a major in bioinformatics is easy, a soft road to a risk-free career. It won't be "exciting" every moment. Misleading claims of this sort don't do the students, our department, or our university any good. They also don't advance the state of our discipline, or improve the world. We can be honest about the challenges of majoring in computer science or bioinformatics while being honest about the good features (er, benefits), too. The key is to get the ideas out there so people can make well-informed decisions.

I don't mind students not choosing to major in CS because it doesn't interest them or fit their goals. But I hate the idea of missing out on students who would enjoy computer science and be good at it because of ignorance or bad information.

If you ever see me hawking our programs on a late-night infomercial, you'll know that I've gone over to the dark side. But I think we can do this right and do something of value for everyone involved. At least we are trying to make something happen, rather than stand at the whim of the prevailing trends. I'm hopeful that we can do something really good.

The university's PR folks and administration are excited, too. They haven't done this sort of thing for a new program before, so we are a test case. They like to see departments taking charge of their fates, and in an era of budget cuts and stiff competition with other schools for the best students they see this as a potential avenue to broaden the reach of the university.

Oh, on our department's web site... I know that it is not very good. It has always been designed, implemented, and maintained in house by CS professors, and the folks who have volunteered their time to do it have generally been focused more on content than presentation. We are in the process of designing a brand new site from scratch. I've asked one of our grad students with considerable design experience to help me create the new site. My goal for now is simple and effective. I think we are well on our way, which the rest of the world will get to see soon.

January 16, 2006 12:46 PM

Chairing Tutorials for OOPSLA 2006

After chairing the OOPSLA Educators' Symposium in 2004 and 2005, I've been entrusted with chairing the tutorials track at OOPSLA 2006. While this may not seem to have the intellectual cachet of the Educators' Symposium, it carries the responsibility of a major financial effect on the conference. If I had screwed up an educators' event, I would have made a few dozen people unhappy. If I screw up the tutorials track, I could cost the conference tens of thousands of dollars!

The call for tutorial proposals is out, with a deadline of March 18. My committee and I will also be soliciting a few tutorials on topics we really want to see covered and from folks we especially want to present. We'd like to put together a tutorial track that does a great job of helping software practitioners and academics get a handle on the most important topics in software development these days, with an emphasis on OO and related technologies. In marketing terms, I think of it as exciting the folks who already know that OOPSLA is a must-attend conference and attracting new folks who should be attending.

I'd love to hear what you think we should be thinking about. What are the hottest topics out there, the ones we should all be learning about? Is there something on the horizon that everyone will be talking about in October? I'm thinking not only of the buzzwords that define the industry these days, but also of topics that developers really need to take their work to another level.

Who are the best presenters out there, the ones we should be inviting to present? I'm thinking not only of the Big Names but also of those folks who simply do an outstanding job teaching technical content to professionals. We've all probably attended tutorials where we left the room thinking, "Wow, that was good. Who was that guy?"

One idea we are considering this year is to offer tutorials that help people prepare for certifications in areas such as Java and MCSD. Do you think that folks could benefit from tutorials of this sort, or is it an example of trying to do too much?

Trying to keep a great conference fresh and exciting requires a mix of old ideas and new. It's a lot like revising a good course... only in front of many more eyes!

Posted by Eugene Wallingford | Permalink | Categories: Computing, Software Development, Teaching and Learning

January 15, 2006 11:15 AM

2005 Running in Review

Our weather this winter has been a collection of extremes. The first couple of weeks of December dumped nearly 20" of snow on us. And it was cold. The next three weeks were 100% cloudy -- as gray and downhearted as it could be. Now, the last couple of weeks have been unseasonably warm, and we've seen the sun almost every day for the last week or so.

The result was that I spent a lot of time inside on the track during December but lately have been back outdoors enjoying an un-January on the roads. This morning, I took in a nice 12-miler, my second Sunday 12-miler in a row after five or six weeks of shorter long runs.

After one hour, my mind said, ah, this is a great January run. After one hour and five minutes, my legs said, yeah, but didn't you run eight fast miles on Friday? I finished off the run with no thought of speed, just basking in a bright sun.

Last year, I summarized my 2004 running when I broke 1900 miles on the second to last outing of the year. I finished the year at 1907 miles, up from 1281.8 miles in 2003. That blog entry suggested that I would probably not be able to increase my mileage by even 300 miles in 2005.

My running log backs me up, but... In 2005, I ran 2137.7 miles. Almost 100 miles of this increase came over the first three months of the year, when I ran consistently in the mid- to upper-30 mile per week range. After that, the increase in mileage seems to have been spread pretty evenly.

My shoe locker bears the evidence of all these miles, too. I went through four pairs of shoes, and have two active pairs in use right now. My wife is beginning to wonder what I plan to do with all these shoes...

The year was good for my times, too. I PRed in the 5K twice, first in June (20:50), and again in December (20:44). I also lowered my best time in the half-marathon to 1:34. My 2005 marathon didn't go as well as I had hoped, but I did improve on my debut marathon time.

More miles. Faster times. Plenty of enjoyment. A good year.

I do not yet feel really strong and powerful on the road, and my weekly track times are still a bit slower than I've grown accustomed to. But I am getting the itch to run longer miles again, and the prospect of beginning to train for a marathon again sounds palatable. Where shall I run this fall? Twin Cities, for personal vindication? New York, for a "big event"? Another Chicago, still my gold standard for marathons? Perhaps Des Moines again, for a flat fast course that might buoy my spirits?

January 14, 2006 3:29 PM

Just a Course in Compilers

Soon after I arrived at UNI, my colleague Mahmoud Pegah and I were given the lead in redesigning the department's CS curriculum. We were freshly-minted Ph.D.s with big dreams. We designed a curriculum from scratch based on the ACM's Computing Curricula 1991 guidelines. In a move of perhaps subconscious rebellion, we called one of the upper-level electives "Translation of Programming Languages". This course sat in the position usually occupied by the traditional course in compilers, but at the time we thought that name too limiting. After all, we were Smalltalkers, and our programming environment used a blend of compilation and interpretation, a powerful VM, and all sorts of language processing elements. We were also Unix guys, and the Unix world encourages stepwise transformation of data through pipes made up of simple processors.

Ever since, we have had to explain the name "Translation of Programming Languages", because no one knows what it means. When we say, "Oh, that's like a course in compilers", everyone nods in satisfaction. Most then say, "Does anyone still write compilers any more?"

But I still think that our name choice better reflects what that course should be about, and why it is still important as more than just a great programming experience. The rise of refactoring as a standard programming practice over the last 5-7 years has caused a corresponding need for refactoring tools, and these tools rely on well-known techniques from programming languages and compilers. I'm certainly glad that the folks behind IntelliJ IDEA and Eclipse learned how to write language-processing tools somewhere.

This semester, I am teaching the "compiler course", Translation of Programming Languages. We finally have this course back in the regular rotation of courses we offer, so I get to teach it every so often. (We last offered it in Fall 2003, but we plan to offer it every third semester for the foreseeable future.) I am probably more excited than my students!

I thought long and hard choosing a textbook. To be honest, I would really have liked to use Niklaus Wirth's Compiler Construction. I love small books with big lessons, and Wirth didn't waste any words or space writing this book. In 173 pages, he teaches us how to build a compiler from beginning to end -- and gives us the full source of his compiler, describes a RISC architecture of his own design for which his compiler generates code, and gives us full source code for a simulator of the architecture. Boom, boom, boom. Of course, he doesn't have a lot of time for theory, so he covers many ideas only at a high level and moves quickly to practical issues.

Why not choose this book? Well, I had two misgivings. First, I would have to supplement his book with a lot of outside material, both my own lecture notes and other papers. That's not a major problem, as I tend to write up extensive lecture notes, and I rarely follow big textbooks all that closely anyway. But the real killer was when I went to Amazon to check out the book's availability and saw:

4 used & new available from $274.70

We may be able to get by on four copies, but... $274.70?

The standard text is, of course, the Dragon book. There are plenty of copies available at Amazon, and they run a relatively svelte $95.18. (The price of textbooks these days is a subject for another blog entry, another day.) But I have always felt that the Dragon book is a bit too much for juniors and many seniors, who are my primary audience in this course. I do not think that I am contributing to the dumbing down of our curriculum by not using this classic text; indeed, I will draw much of my lecture material from my experiences with this book. But a 15-week course requires some focus, and I don't think that most of our undergraduates will get as much from the course as they might if they get lost in the deep swirls of some of Aho, Sethi, and Ullman's chapters.

I finally settled on Louden's Compiler Construction: Principles and Practice, with which I have had no prior experience. It seems a better choice for my students, one they might be able to read and learn something from. We'll see.

I learned a few lessons teaching this course in Fall 2003. One is: less content. If I try to cover even a significant fraction of what we know about scanning, parsing, static analysis, code generation, and optimization, my students won't get a chance to experience building a compiler from beginning to end. This robs them not only of understanding the compiler material at a deeper level but also of the occasional pain and ultimate triumph of building a non-trivial program that works. A 15-week course requires focus.

A 15-week course in translating programming languages also requires a relatively small source language. In my previous offering, the language the students compiled was simply too big, but in the wrong ways. You don't learn much new from making your scanner and parser recognize a second or third repetition construct; you mostly find yourself just doing more grunt work. I'd rather save that time to get deeper into a later stage of the compiler, or to discuss the notion of syntactic abstractions and how to preprocess a second repetition construct away.
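To make "preprocessing a second repetition construct away" concrete, here is a minimal sketch in Python. It is not code from the course. The tiny AST classes (Assign, While, Repeat) and the desugar function are hypothetical names invented for illustration, conditions are kept as plain strings, and ~ is used as Oberon-style negation. The idea is that REPEAT body UNTIL cond can be rewritten as the body followed by WHILE ~(cond) DO body END, so the later stages of the compiler only ever see one kind of loop.

```python
from dataclasses import dataclass
from typing import List, Union


# Hypothetical mini-AST, invented for this sketch.
@dataclass
class Assign:
    target: str
    expr: str  # expressions are plain strings to keep the sketch short


@dataclass
class While:
    cond: str
    body: List["Stmt"]


@dataclass
class Repeat:
    body: List["Stmt"]
    until: str


Stmt = Union[Assign, While, Repeat]


def desugar(stmts: List[Stmt]) -> List[Stmt]:
    """Rewrite every Repeat into its body followed by an equivalent While."""
    out: List[Stmt] = []
    for s in stmts:
        if isinstance(s, Repeat):
            # The body always runs once, then repeats while the
            # UNTIL condition is still false.
            out.extend(desugar(s.body))
            out.append(While(f"~({s.until})", desugar(s.body)))
        elif isinstance(s, While):
            # Recurse so that nested Repeat statements are rewritten too.
            out.append(While(s.cond, desugar(s.body)))
        else:
            out.append(s)
    return out


program = [Repeat([Assign("i", "i + 1")], "i >= 10")]
core = desugar(program)
```

After this pass, core holds an Assign followed by a While and no Repeat nodes. The cost is a duplicated loop body; that trade seems reasonable in a teaching compiler, where keeping the back end small matters more than code size.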

That said, I do want students to do some of the grunt work. I want them to build their own scanners and parsers. Sure, we use parser generators to write these components of most compilers these days, but I want my students to really understand how some of these techniques work and to see that they can implement them and make them fly. I remember the satisfaction I felt when I wrote my first LL parser and watched it recognize a simple sorting program's worth of tokens.
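For readers who have not written one by hand, here is roughly what a small scanner and LL(1) recursive-descent recognizer look like, sketched in Python. The grammar is an assumption chosen for brevity (arithmetic expressions only), not the Oberon subset used in the course, and the names scan, Parser, and recognizes are made up for this example.

```python
import re

# Assumed toy grammar for this sketch:
#   expr   -> term   { ("+" | "-") term }
#   term   -> factor { ("*" | "/") factor }
#   factor -> NUMBER | IDENT | "(" expr ")"

TOKEN_RE = re.compile(r"\s*(?:(\d+)|([A-Za-z]\w*)|(.))")


def scan(text):
    """Turn the source text into a list of (kind, value) tokens."""
    text = text.rstrip()
    tokens, pos = [], 0
    while pos < len(text):
        m = TOKEN_RE.match(text, pos)
        number, ident, other = m.groups()
        if number is not None:
            tokens.append(("NUMBER", number))
        elif ident is not None:
            tokens.append(("IDENT", ident))
        else:
            tokens.append((other, other))  # single-character symbol
        pos = m.end()
    tokens.append(("EOF", ""))
    return tokens


class Parser:
    """One method per nonterminal, choosing by one token of lookahead."""

    def __init__(self, tokens):
        self.tokens = tokens
        self.pos = 0

    def peek(self):
        return self.tokens[self.pos][0]

    def expect(self, kind):
        if self.peek() != kind:
            raise SyntaxError(f"expected {kind}, found {self.peek()}")
        self.pos += 1

    def expr(self):
        self.term()
        while self.peek() in ("+", "-"):
            self.pos += 1
            self.term()

    def term(self):
        self.factor()
        while self.peek() in ("*", "/"):
            self.pos += 1
            self.factor()

    def factor(self):
        if self.peek() in ("NUMBER", "IDENT"):
            self.pos += 1
        elif self.peek() == "(":
            self.pos += 1
            self.expr()
            self.expect(")")
        else:
            raise SyntaxError(f"unexpected {self.peek()}")


def recognizes(text):
    """Return True if the whole input is a sentence of the toy grammar."""
    parser = Parser(scan(text))
    try:
        parser.expr()
        parser.expect("EOF")
        return True
    except SyntaxError:
        return False
```

Calling recognizes("(a + 1) * 2") accepts the input, while recognizes("a + * 2") rejects it. This version only recognizes; building a syntax tree means having each method return a node instead of nothing.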

Last time I used a source language I home-brewed from a colleague's course. This time, I am going to have my students process a subset of Oberon, based in part on Wirth's now out-of-print book. It strikes a nice balance between small enough and large enough, and has a nice enough syntax to work with for a semester.

Now that I have administrative duties, I teach only one course a semester. The result is that I get even more psyched about the course, because it is my best chance to get my hands dirty in real computer science during the term, to think deeply in the discipline. It also gives me a chance to write code. The thing I missed most last semester in my first semester as head was having more time to program. This course offers even more: a chance to get back to a recently-dormant project, a refactoring tool for Scheme. So, I'm psyched.

January 06, 2006 4:02 PM

... But You Doesn't Have to Call Me Lefschetz

In the last two days, I have run across references to John von Neumann twice.

First, I was reading The Geomblog yesterday and found this:

It reminds me of a quote attributed to John von Neumann:

In mathematics you don't understand things. You just get used to them.

I've had that feeling in computer science... A few months ago I described something similar, but in that case I did come to understand the course material; it only seemed as if I never would. My "just get used to it" experiences came in an area right up Suresh's alley: Computational Complexity. I loved that class, but I always felt like I was swimming in the dark -- even as I did well enough in the course.

Then today I was cleaning out a folder of miscellaneous notes and found a clipping from some long-forgotten article.

In Princeton's Fine Hall, someone once posted a "Scale of Obviousness":

- If Wedderburn says it's obvious, everybody in the room has seen it ten minutes ago.

- If Bohnenblust says it's obvious, it's obvious.

- If Bochner says it's obvious, you can figure it out in half an hour.

- If von Neumann says it's obvious, you can prove it in three months if you are a genius.

- If Lefschetz says it's obvious, it's wrong.

I'll venture to say that students at every institution occasionally make such lists and discuss them with their friends, even if they are too polite to post them in public. That's good for the egos of us faculty members. In our fantasies, we are all von Neumanns. In reality, most of us are Bohnenblusts at best and more likely Wedderburns. And we all have our Lefschetz days.