November 30, 2008 10:04 PM

Disconnected Thoughts to End the Month

... and a week of time away from work and worries.

There is still something special about an early morning run on fresh snow. The world seems new.

November has been a bad month for running, with my health at its lowest ebb since June, but even one three-mile jog brings back a good feeling.

I can build a set of books for our home finances based on the data I have at hand. I do not have to limit myself to the way accountants define transactions. Luca Pacioli was a smart guy who did a good thing, but our tools are better today than they were in 1494. Programs change things.

S-expressions really are a dandy data format. They make so many things straightforward. Some programmers may not like the parens, but simple list delimiters are all I need. Besides, Scheme's (read) does all the dirty work parsing my input.
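For readers working outside Scheme, here is a minimal sketch in Python of what a built-in reader like (read) saves you from writing, plus the kind of one-pass computation that falls out once the data is a nested list. The ledger layout, account names, and amounts are hypothetical, invented only for illustration; this is not the format of the finance program described here.

```python
def tokenize(text):
    """Split S-expression source into parens and atoms."""
    return text.replace("(", " ( ").replace(")", " ) ").split()


def parse(tokens):
    """Consume tokens from the front and return one nested list or atom."""
    token = tokens.pop(0)
    if token == "(":
        items = []
        while tokens[0] != ")":
            items.append(parse(tokens))
        tokens.pop(0)  # discard the closing paren
        return items
    try:
        return float(token)  # numeric atoms become floats
    except ValueError:
        return token  # everything else stays a symbol-like string


def read(text):
    """Parse a single S-expression from a string."""
    return parse(tokenize(text))


# Hypothetical ledger: each entry is (kind name amount).
LEDGER = """
(ledger
  (asset checking 1200.00)
  (asset savings 5000.00)
  (liability car-loan 3500.00))
"""


def net_worth(ledger):
    """Sum assets and subtract liabilities, skipping the leading tag."""
    total = 0.0
    for kind, _name, amount in ledger[1:]:
        total += amount if kind == "asset" else -amount
    return total


print(net_worth(read(LEDGER)))  # 2700.0
```

The sketch does no error handling: unbalanced parens simply raise an IndexError. That is part of the point. With simple list delimiters, the whole reader fits in about twenty lines.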

After a week's rest, I imagine something like one of those signs from God:

That "sustainable pace" thing...

I meant that.

-- The Agile Alliance

I'd put Kent or Ward's name in there, but that's a lot of pressure for any man. And they might not appreciate my sense of humor.

The Biblical story of creation in six days (small steps) with feedback ("And he saw that it was good.") and a day of rest convinces me that God is an agile developer.

November 22, 2008 7:19 AM

Code, and Lots Of It

Today, I was asked the best question ever by a high-school student.

During the fall, we host weekly campus visits by prospective students who are interested in majoring in CS. Most are accompanied by their parents, and most of the dialogue in the sessions is driven by the parents. Today's visitors were buddies from school who attended sans parents. As a part of our talking about careers open to CS grads, I mentioned that some grads like to move into positions where they don't deal much with code. I told them that two of the things I don't like about my current position are that I only get to teach one course each semester and that I don't have much time to cut code. Off-handedly, I said, "I'm a programmer."

Without missing a beat, one of the students asked me, "What hobby projects are you working on?"

Score! I talked about a couple of things I work on whenever I can, little utilities I'm growing for myself in Ruby and Scheme, and some refactoring support for myself in Scheme. But the question was much more important than the answer.

Some people like to program. Sometimes we discover the passion in unexpected ways, as we saw in the article I referred to in my recent entry:

[Leah] Culver started out as an art major at the University of Minnesota, but found her calling in a required programming class. "Before that I didn't even know what programming was," she admits. ... She built Pownce from scratch using a programming language called Python.

Programmers find a way to program, just as runners find a way to run. I must admit, though, that I am in awe of the numbers Steve Yegge uses when talking about all the code he has written when you take into account his professional and personal projects:

I've now written at least 30,000 lines of serious code in both Emacs Lisp and JavaScript, which pales next to the 750,000 or so lines of Java I've [spit] out, and doesn't even compare to the amount of C, Python, assembly language or other stuff I've written.

Wow. I'll have to do a back-of-the-envelope estimate of my total output sometime... In any case, I am willing to stipulate to his claim that:

... 30,000 lines is a pretty good hunk of code for getting to know a language. Especially if you're writing an interpreter for one language in another language: you wind up knowing both better than you ever wanted to know them.

The students in our compiler course will get a small taste of this next semester, though even I -- with the reputation of a slave driver -- can't expect them to produce 30 KLOC in a single project! I can assure them that they will make a non-trivial dent in the 10,000 hours of practice they need to master their discipline. And most will be glad for it.

November 20, 2008 8:22 PM

Agile Thoughts: Humans Plus Code

Courtesy of Brian Marick and his Agile Development Practices keynote:

Humans plus running code are smarter than humans plus time.

We can sit around all day thinking, and we may come up with something good. But if we turn our thoughts into code and execute it, we will probably get there faster. Running a piece of code gives us information, and we can use that feedback to work smarter. Besides, the act of writing the code itself tends to make us smarter, because writing code forces us to be honest with ourselves in places where abstract thought can get away with being sloppy.

Brian offers this assertion as an assumption that underlies the agile software value of working software, and specifically as an assumption that underlies a guideline he learned from Kent Beck:

No design discussion should last more than 15 minutes without someone turning to a computer to do an experiment.

An experiment gives us facts, and facts have a way of shutting down the paths to fruitless (and often strenuous) argument we all love to follow whenever we don't have facts.

I love Kent Beck. He has a way of capturing great ideas in simple aphorisms that focus my mind. Don't make the mistake that some people make, trying to turn one of his aphorisms into more than it is. This isn't a hard and fast rule, and it probably does not apply in every context. But it does capture an idea that many of us in software development share: executing a real program usually gives us answers faster and more reliably than a bunch of software developers sitting around pontificating about a theoretical program.

As Brian says:

Rather than spending too much time predicting the future, you take a stab at it and react to what you learn from writing and running code...

This makes for a nice play on Alan Kay's most famous bon mot, "The best way to predict the future is to invent it." The best way to predict the future of a program is to invent it: to write the program, and to see how it works. Agile software development depends on the fact that software is -- or should be -- soft, malleable, workable in our hands. Invent the future of your program, knowing full well that you will get some things wrong, and use what you learn from writing and running the program to make it better. Pretty soon, you will know what the program should look like, because you will have it in hand.

To me, this is one of the best lessons from Brian's keynote, and well worth an Agile Thought.

November 19, 2008 4:36 PM

Where Influential Women in Computing Come From

A recent article at Fast Company highlights some of the Most Influential Women in Web 2.0. This list "wasn't chosen by star power, nor by career altitude" but for the biggest innovations in the nebulous sphere of Web 2.0. This list is also not a random sample, which makes drawing conclusions from it dicey. Yet I could not help noticing how few of them have a CS background:

- Sinha: cognitive neuroscience

- Kaplan: economics, government, philosophy, and history

- Page/Des Jardins/Stone: theatre/English lit/political science

- Huffington: economics

- Banister: HS drop-out

- Bianchini: political science, then MBA

- Fake: English

- Trott: English

- Hamlin: political economy and human rights

- Mayer: systems science (after starting in biology and chemistry), then M.S. in CS

- Culver: computer science (after starting in art)

Perhaps this is a non-representative sample of women in computing or Web 2.0. Or perhaps the range of backgrounds we see here, especially its tilt toward the humanities, says more about the social and interactive nature of Web 2.0 than about computer science. Perhaps it says something about women and what they want out of their academic majors.

Somehow, though, I think this list is a great example of why we need to broaden the public's conception of computing, in hopes that more might choose to major in CS. I also think it is a great example of why we as a discipline need to engage students all across the campus. We need to expose more (all?) students to the power of the ideas of computing and give them some of the skills they will need to apply it to whatever they decide to do.

I'm glad these innovators and women found computing along the way to turning their ideas into reality, but I'd like for us to eliminate some of the barriers that we erect between computer science and tomorrow's innovators.

November 17, 2008 8:58 PM

Doing It Wrong Fast

Just this weekend I learned about Ashleigh Brilliant, a cartoonist and epigrammist. From the little I've seen in a couple of days, his cartoons remind me of Hugh MacLeod's business-card doodles at Gaping Void -- only with a 1930s graphic style and language that is more likely SFW.

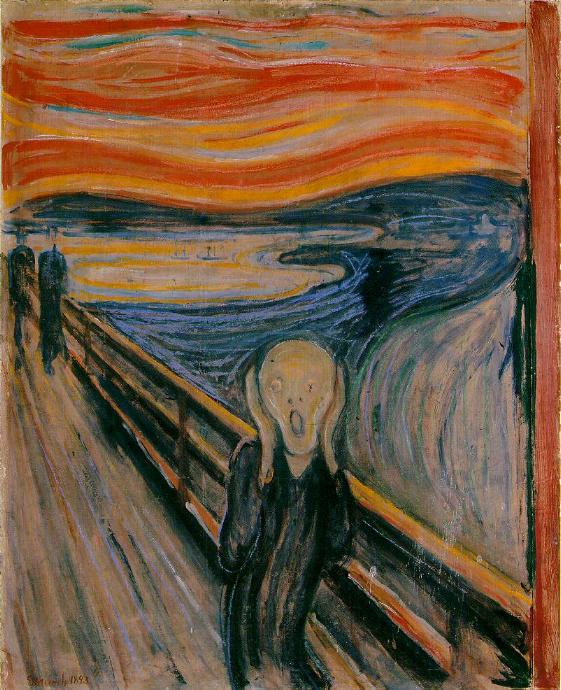

This Brilliant cartoon, #3053, made it into my Agile Development Hall of Fame on first sight:

Doing it wrong fast means that we have a chance to learn sooner, and so have a chance to be less wrong than yesterday.

This lesson was fresh in my mind over the weekend from a small dose of programming. I was working on the financial software I've decided to write for myself, which has me exploring a few corners of PLT Scheme that I don't use daily. As a result, I've been failing more frequently than usual. The best failures come rat-a-tat-tat, because they are often followed closely by an a-ha! Sunday, just before I learned of Brilliant's work, I felt one of those great releases when, for the first time, my code gave me an answer I did not already know and had not wanted to compute by hand: our family net worth. At that moment I was excited to verify the result manually (I'll need that test later!) and enjoy all the wrong work that had brought me to this point. Brilliant has something.

November 13, 2008 6:35 PM

Lest We Forget the Mathematicians

Just before I left for the SECANT workshop, I wrote an entry on programming based on a conversation with a colleague in the math department. Then I went off to SECANT, which gave me a chance to think about the intersection of CS and science, which took my mind off of the role CS plays in mathematics. But the gods are reminding us: Computer science is changing how some mathematicians work, and not just via programming.

The December 2008 issue of the Notices of the American Mathematical Society features four papers on the use of computers in mathematical proof, both to create new results and to ask and explore "new mathematical questions about the nature and technique of such proofs". This idea is known as formal proof. All four papers of the special issue are available as PDF files on the web site. (This is an excellent feature of Notices: the current issue is openly available to the community!)

The lead article of the issue, by Thomas Hales, lays out the problem for which Formal Proof is the solution. In a nutshell: mathematicians verify their results by a social process, which means those results are fallible.

Traditional mathematical proofs are written in a way to make them easily understood by mathematicians. Routine logical steps are omitted. An enormous amount of context is assumed on the part of the reader.

Many of you probably already know that this is true, from your own negative experience. I know that I often felt as if I was missing a lot of context when I was reading proofs in the abstract algebra book we used in grad school!

How are these proofs validated? By other mathematicians reading them and accepting them as valid. The best known and most important proofs have been read and understood by many mathematicians, so we can trust that they are probably correct. If there were something wrong with the result, someone would have found out by now -- either by finding an error in the proof or by discovering that something else breaks when we rely on it. Programmers know that this is how most programs are "proven" correct: by using them reliably for a long time under many different conditions.

One problem with this process is that some proofs are so long and so complicated that the number of people who can read and understand them is quite small. For the results that break the most new ground, even the best mathematicians have to learn a lot to understand the proof. These "proof assistants" may not have the time, energy, or attention span to validate a result well enough that we "know" it is correct.

A formal proof is an attempt to bypass the social process of mathematicians reading a result, providing the necessary context, filling in the details, and approving the result by automating those steps:

A formal proof is a proof in which every logical inference has been checked all the way back to the fundamental axioms of mathematics. All the intermediate logical steps are supplied, without exception. No appeal is made to intuition, even if the translation from intuition to logic is routine.

A formal proof will be less intuitive to most human readers, especially the advanced ones, but it should be less susceptible to errors by relying on formal specification of the context and of all the intermediate steps. "Show your work!"

Specifying all of the extra detail completely is something humans are not so good at. Many great mathematicians -- and programmers -- are great in some part because they are able to take improbable intuitive leaps onto new ground. But computers thrive on such tedious detail, so work on formal proof comes naturally to rest in the realm of programs that aid the process.

Computerized "proof assistants" are nothing new. Hales cites examples going back to 1954 and the JOHNNIAC computer. I can't go that far back (my parents were still in grade school!), but I remember working with programs of this sort back in the mid- to late-1980s as a grad student in AI. Prof. Rich Hall, a philosophy professor specializing in epistemology and philosophy of mind, was a treasured member of my Ph.D. committee. For the philosophy component of my comprehensive, Hall asked me to study two such programs, Tarski's World and Computer Assisted Logic Lessons (CALL), intended as logic tutors and proof assistants for beginners. (Tarski's World is discussed here. CALL was a program home-brewed at Michigan State. I found one reference to it via Google, in an MSU prof's syllabus from 1998.) My task was to identify the fundamental distinctions between the two programs, especially with regard to their respective assumptions, and to evaluate their instructional utility. Remarkably, I still have the paper I wrote for my exam -- formatted in nroff!

Formal proof sounds perfect: Let a program do the grunt work to validate even our most complex proofs. But formal proof offers up two problems... The first is that the human has to specify some of the initial detail for the program in some logical language. One thing that many years in AI taught me is that logic languages are just like programming languages, and writing proofs in them encounters all the same perils as writing a program. Writing proofs is the cross borne by mathematicians, though, and this problem is exactly the one formal proof seeks to solve (a recursive problem!): making sure that our proofs contain all the necessary detail, written and used correctly.

The second problem is that now we have to consider whether the computer program itself is correct. To use the program to validate a proof, we need first to validate the program. This is a different recursion. Fortunately, we have some things on our side. One is scale, both relative to large programs and to the proofs we hope to construct. As Hales points out:

The computer code that implements the axioms and rules of inference is referred to as the kernel of the system. It takes fewer than 500 lines of computer code to implement the kernel of HOL Light [a particular computer proof assistant]. (By contrast, a Linux distribution contains approximately 283 million lines of computer code.)

The "kernel" of the system is small enough to be amenable to validation in several ways. One is the social process used for other programs: make the code open source and let everyone and his brother study it and use it. I love the way Hales embraces this approach:

I wish to see a poster of the lines of the kernel, to be taught in undergraduate courses, and published throughout the world, as the bedrock of mathematics. It is math commenced afresh as executable code.

(The emphasis is mine. More on that later.)

This replaces the social process for validating proofs, up one level. Even if we validate the proof assistant only informally, it will be used repeatedly to validate proofs, and every use is an opportunity to find errors in the program. We can even use the program to validate proofs we already understand well just for the purpose of validating the program. (The world of mathematics is full of test data!) We use a social process to build a tool which then serves us over and over. Building tools is an essence of computer science.
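To make the idea of a small trusted kernel concrete, here is a toy sketch in Python. It is emphatically not HOL Light's kernel or logic. It assumes a tiny Hilbert-style system with implication as the only connective, two axiom schemas (conventionally called K and S), and modus ponens as the only rule of inference. The representation of formulas and the proof format are hypothetical choices made for this example.

```python
def imp(a, b):
    """Build the formula a -> b. Atoms are plain strings."""
    return ("->", a, b)


def is_imp(f):
    return isinstance(f, tuple) and len(f) == 3 and f[0] == "->"


def is_axiom_k(f):
    """Schema K: A -> (B -> A)."""
    return is_imp(f) and is_imp(f[2]) and f[1] == f[2][2]


def is_axiom_s(f):
    """Schema S: (A -> (B -> C)) -> ((A -> B) -> (A -> C))."""
    if not (is_imp(f) and is_imp(f[1]) and is_imp(f[1][2]) and is_imp(f[2])):
        return False
    a, b, c = f[1][1], f[1][2][1], f[1][2][2]
    return f[2] == imp(imp(a, b), imp(a, c))


def check(proof):
    """Accept a proof only if every step is an axiom instance or follows
    by modus ponens from earlier steps.

    Each step is (formula, why), where why is "K", "S", or
    ("MP", i, j): step i proves X and step j proves X -> formula.
    """
    proved = []
    for formula, why in proof:
        if why == "K":
            ok = is_axiom_k(formula)
        elif why == "S":
            ok = is_axiom_s(formula)
        elif isinstance(why, tuple) and len(why) == 3 and why[0] == "MP":
            _, i, j = why
            ok = (
                isinstance(i, int) and isinstance(j, int)
                and 0 <= i < len(proved) and 0 <= j < len(proved)
                and proved[j] == imp(proved[i], formula)
            )
        else:
            ok = False
        if not ok:
            return False
        proved.append(formula)
    return True


# The classic five-step proof of p -> p, with no step left to intuition.
p = "p"
pp = imp(p, p)
PROOF_P_IMPLIES_P = [
    (imp(imp(p, imp(pp, p)), imp(imp(p, pp), pp)), "S"),
    (imp(p, imp(pp, p)), "K"),
    (imp(imp(p, pp), pp), ("MP", 1, 0)),
    (imp(p, pp), "K"),
    (pp, ("MP", 3, 2)),
]

print(check(PROOF_P_IMPLIES_P))  # True
```

Even a result as obvious as p -> p takes five fully explicit steps, which gives a feel for why formal proofs are tedious for humans and natural for programs. The checker itself is short enough to read in one sitting, and well-understood proofs like this one double as test data for it.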

Given the small size of the program, we could also use formal methods to prove its correctness or at least offer evidence that it is correct. John Harrison used HOL Light to do something akin to a formal proof of its own soundness. This is the first time in a long while that I have read about Gödel's incompleteness theorem coming into play with a real program... This approach made me think of the compiler-writing technique known as bootstrapping, though that's not quite what Harrison did.

Finally, we might try to validate the program using another proof assistant. Hales calls this "exporting" a proof. The idea is this. Translate a proof written for one assistant into the language of another.

If a proof in one system is incorrect because of an underlying flaw in the theorem-proving program itself, then the export to a different system fails, and the underlying flaw is exposed.

For some reason, this reminded me of cross-compilation, where we use a compiler on one platform to generate code for another platform. The purpose of cross compiling is to propagate programs onto systems where they do not exist yet. The purpose of exporting is different, to increase our confidence in one or both proof assistants. When we combine the confidence we have in the program via social acceptance with the confidence we gain from validating exported proofs, we have even more reason to trust the program, and thus the proofs it validates. Our confidence grows.

This process reminds us all that math, while not a natural science, is imbued with the spirit of science:

With a computer -- indeed with any physical artifact, whether a codex, transistor, or a flash drive made of proteins from salt-marsh bacteria -- it is never a matter of achieving philosophical certainty. It is a scientific knowledge of the regularity of nature and human technology, akin to the scientific evidence that Planck's constant lies reliably within its experimental range. Technology can push the probability of a false certification ever closer to zero: 10^-6, 10^-9, 10^-12, ....

We never know our proofs are correct. We only have good reason to believe so. The same is true of programs. That's another reason for a computer scientist like me to be fascinated by this direction in mathematics.

The connection to the topic of the SECANT workshop is strong. Computing is helping to revolutionize how mathematicians work, just as it is revolutionizing how scientists work. Part of the math revolution will resemble the one in science, because some math research is itself inherently computational. The changes we talk about with formal proofs are a bit different, in that they are about how we validate results, not how we create them.

Both traditional mathematics and computational mathematics depend ultimately on validation. Formal proof is aimed at addressing the former, but what of the latter? Certainly some scientists have recognized the problem and embarked on efforts to solve it. I wrote about one such effort early this year, a simulation-and-documentation system that interleaves programs, their execution, and the papers written to publish the results. Not surprisingly, such thinking and the systems that implement it require a change in mindset, one that will likely come only after a long... social process.

Hales recognizes what the use of computing to generate and check proofs means for his discipline: It is math commenced afresh as executable code. I think many disciplines will find themselves redefined in just this way.

November 12, 2008 6:57 AM

That's a Wrap

I have posted all of my reports from the 2008 SECANT workshop. In sum, SECANT is a worthwhile community-building effort. It brings together such a mix: academia and industry, different disciplines, and different kinds of schools, from large Big Ten and other R-I universities down to small liberal arts colleges. This is one of the reasons why I love OOPSLA, but this venue provides a smaller, more intimate setting. (Of course, while SECANT lies at the intersection of science and computing -- and especially programming -- OOPSLA's domain is really Everything Programming, which is even better.)

The workshop was again a great source of ideas and inspiration for me. This seems like a good use of a relatively small amount of money by NSF. The onus is now on us participants... Will we do the work to grow the community? To develop courses and materials? To transform our institutions and disciplines? A tall order.

As for being done with my reports, I feel a small measure of pride. Sure, last year, I posted my final workshop report only five days after the workshop ended, and this year I'm at eleven days. But my report on SIGCSE -- from March -- is still incomplete, with two entries on top: a general description of a panel on bringing the joy and wonder back to CS, and a more detailed report on one of the presentations from that panel, by Eric Roberts.

Is there a statute of limitations on blog entries? Has my coupon to post on that panel session expired? If I were Kent Beck, I'd probably call this long delay a "blog smell" and write a pattern!

For me, blogging suffers from a stack-of-ideas phenomenon. I have ideas, and they get pushed onto the to-blog list. Sometimes, I have more ideas than time to write, and some ideas get pushed deep in the stack before I get a chance to write them up. Time passes... And then I look back at the list of ideas, and most feel stale, or at least no longer have their original hold on my mind. I currently have three levels of "blog ideas" folders, and each one contains a bunch of ideas that I remember wanting to write now -- but which now I feel no desire to write. Sounds like it's time for a little rm -r *.*

Going to a conference only makes the stack-of-ideas problem worse. The sessions follow one upon another, and each one tends to stir me up so much that I push even the previous session way back in my mind. That's one advantage of a 1.5-day workshop over a several-day conference like SIGCSE or OOPSLA: the scale does not overflow my small brain.

Do readers care about any of this? Is SIGCSE stale for them? Perhaps, and I figure anyone who was wondering what went on in Portland has likely found the information elsewhere, and in any case moved on. But the topic of the unwritten entry may not be stale yet, so hope remains.

To return to the beginning of this blog, on the end of my SECANT reports: I hope you get as much from reading them as I did writing them.

November 10, 2008 7:31 PM

Workshop 6: The Next Generation of Scientists in the Workforce

[A transcript of the SECANT 2008 workshop: Table of Contents]

The last session of an eventful workshop consisted of two people. One was a last minute sub for a science speaker who had to pull out. The sub, from Microsoft Research, didn't add much science content, but did say something I wish undergrads would pick up on. What do all companies look for these days? Short ramp-up time, self-starters. These boil down to curiosity and initiative.

The second speaker gave the sort of industry report I so enjoyed last year. David Spellmeyer, a Purdue computer science and chemistry grad, is CTO and CIO at Nodality. He titled his talk, "Computational Thinking as a Competitive Advantage in Industry". I love that title, because I love the ways computing confers a competitive advantage over companies that don't get it yet. The downside of Spellmeyer talking about his own company's competitive advantage: he can't post his slides.

Spellmeyer did tell us a bit about his company's science at various points in his story. Nodality works on patient-specific classification of disease and response to therapies. At least part of that involves evaluating phosphoprotein-signaling networks. (I hope that doesn't give too much away.)

He looks for computational thinking skills in all of the scientists Nodality hires. His CT wish list included items familiar and surprising:

- familiarity with the complexity of computing

- exposure to programming languages

- analytical methods for experimental studies

- familiarity with the technology and inner workings of the computer, especially databases

These skills give competitive advantage to his company -- and also to the individual! The company is able to do more better and faster. The individual has better judgment across a wider range of problems. These advantages intersect at a point where computational thinking demystifies the computer, computer systems, and programming. Understanding even a little about computers and programs helps to dispel the myth of the perfect computer and the perfect computer system. Those myths create frustrations that grow into more. (Spellmeyer used another image to drive this point home: Hitchcock's North by Northwest.)

How does computational thinking help the company do more better and faster? By...

- ... letting scientists spend more time doing what they love.

- ... eliminating low-value-add transactional activities in the business process.

- ... boosting the speed and scalability of their systems.

Notice that these advantages range from the scientific to business process to the technical. It's not only about techies sitting in front of monitors.

On the scientific side of the equation, Nodality has a data problem. A robust assay produces a flood of data:

→ 10^10 data points per experiment

Thereafter followed a lot of detail that I couldn't follow in real time, which is probably just as well. There is a reason that Spellmeyer can't post his slides...

How do they eliminate low-value-added transactional activities?

- Talk to customers.

- Find patterns of practice.

- Propose computational tools to improve practice.

- Use an agile approach to gather requirements, design a system, field, get feedback, and iterate in short cycles.

Computational thinking enables scientists and techies to think of their experiments, and how to set them up, in different ways. For example, they might conceive of a way to set up a cytometer differently. They also think differently about experiment analysis and inventory management.

As Spellmeyer wrapped up, he included a few snippets to motivate his ideas and the scale of the problems that he and his company face. He quoted Margaret Wheatley as saying that all science is a metaphor, a description of a reality we can never fully know. As a pragmatist, this is something I believe almost from the outset. He also said that in business, learning occurs naturally through normal interactions in work practices. Not in classes. "Context, community, and content" are the triumvirate that drives all they do. For this reason, his company puts a lot of effort into its community software tools.

The problem ultimately comes down to an issue at the intersection of combinatorics, pragmatics, and even ethics. We can make billions of unique molecules. Which ones should we make? We need to consider molecules similar enough to ones we understand but dissimilar enough to offer hope of a new result. This leads to a question of similarity and dissimilarity, one of those AI-complete tasks. There is room for a lot of great algorithm exploration here.
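One way to picture the "similar enough, but not too similar" filter is a small sketch like the one below. It makes several assumptions that were not part of the talk: molecules are reduced to sets of structural features, similarity is measured with the Tanimoto (Jaccard) coefficient, and the band thresholds are arbitrary. The feature names are hypothetical and this is not a description of Nodality's methods.

```python
def tanimoto(a, b):
    """Tanimoto (Jaccard) similarity of two feature sets: |a & b| / |a | b|."""
    a, b = set(a), set(b)
    if not a and not b:
        return 1.0  # two empty descriptions are treated as identical
    return len(a & b) / len(a | b)


def worth_making(candidate, known, low=0.4, high=0.8):
    """Keep a candidate whose closest known molecule is neither a
    near-duplicate (above `high`) nor a stranger (below `low`).

    `known` must be non-empty; the thresholds are illustrative guesses.
    """
    best = max(tanimoto(candidate, k) for k in known)
    return low <= best <= high


# Hypothetical feature sets standing in for real molecular fingerprints.
KNOWN = [{"ring", "amine", "hydroxyl", "methyl"}]

print(worth_making({"ring", "amine", "hydroxyl", "chloro"}, KNOWN))  # True
print(worth_making({"ring", "amine", "hydroxyl", "methyl"}, KNOWN))  # False
print(worth_making({"sulfate"}, KNOWN))                              # False
```

The hard part, and the reason this is AI-complete territory, is everything the sketch takes for granted: which features to extract, which measure to use, and where to set the band.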

Finally, Spellmeyer weighed in on a hot topic from the previous session: Excel is a basic tool in his company. The business guys have developed an extremely complex business model, and all of their work is in Excel. But it's not just a workhorse on the business side; scientists use Excel to transform data. He is happy to find scientists and techies alike who know how to use Excel at full strength.

November 07, 2008 8:59 AM

Workshop 5: Curriculum Development

[A transcript of the SECANT 2008 workshop: Table of Contents]

This session was labeled "birds-of-a-feather", likely a track for short talks that didn't fit very well elsewhere. The most common feather was curriculum, efforts to develop it and determine its effect.

First up was Ruth Chabay, on looking in detail at students' computational thinking skills. She is involved in a pilot study that aims to answer the question, "Do students think differently about physics after programming?" This is the sort of outcomes assessment that people who develop curriculum rarely do. Even CS faculty -- despite the fact that we would never think of writing programs and not checking to see whether they ran correctly. This study is mostly question and method at this point, with only hints at answers.

The research methodology is a talk-aloud protocol with videotaping of the participants' behavior. Chabay showed an illustrative video of a student reasoning through a very simple program, talking about the problem. I'd love to be able to observe students from some of my courses in this way. It would be hard to gather useful quantitative data, but the qualitative results would surely give some insight into what some students are thinking when they are going their own way.

Next up was Rubin Landau, who developed a Computational Physics program at Oregon State. He started with a survey from the American Physical Society that reported what physics grads do five years after they leave school. A large percentage are involved in developing software, but alumni said that the number one skill they use is "scientific problem solving". Even for those working in scientific positions, the principles of physics are far from the most important skill. Landau stressed that this does not mean that physics isn't important; it's just that students don't graduate to repeat what they learned as an undergrad. In Landau's opinion, much of physics education is driven by the needs of researchers and graduate students. Undergraduate curriculum is often designed as a compromise between those forces and the demands of the university and its undergraduates.

Landau described the Computational Physics curriculum they created at Oregon State with the needs of undergrad education as the driving force. I don't know enough physics to follow his description in real time, but I noticed a few features. Students should learn two "compiled languages"; it doesn't really matter which, though he still likes it if one is Fortran. The intro courses introduce many numerical analysis concepts involving computation (underflow, rounding). This course is now so well settled that they offer it on-line with candid video mini-lectures. Upper-division courses include content that students may well work with in industry but which have disappeared from other schools' curricula, such as fluid dynamics.

Landau is fun to listen to, because he has an arsenal of one-liners at the ready. He reported one of his favorite computational physics student comments:

Now I know what's "dynamic" in thermodynamics!

Bruce Sherwood reported a physics student comment of his own: "I don't like computers." Sherwood responded, "That's okay. You're a physicist. I don't like them either." But physics students and professors need to realize that saying they don't like computers is like saying, "I don't like voltmeters." If you can't work with a voltmeter or a computer, you are in the wrong business. That's just the way the world is.

My favorite line of Landau's is one that applies as well to computer science as to physics:

We need a curriculum for doers, not monks.

The next two speakers were computer scientists. James Early described a project in which students are developing learner-centered content for their introductory computer science course. This project was motivated by last year's SECANT workshop. Most of the students in their intro course are not CS majors. The goal of the project is to excite these students about computation, so they'll take it back to their majors and put it to good use. I immediately thought, "I'd like to have CS majors get excited about computation and take it back to their major, too!" Too few CS students take full advantage of their programming skills to improve their own academic lives...

Resource link to explore: the Solomon-Felder Index of Learning Styles, which has gained some market share in the engineering world. Besides, it's on-line and free!

Mark Urban-Lurain closed the session by describing the CPATH project at Michigan State, my old graduate school stomping grounds. This project is aimed at creating a process for redesigning engineering curriculum. But much of the interesting discussion revolved around the fact that most engineering firms request that students have computational skills in... Excel! Several of the CS faculty in the room nodded their heads, because they have pointed this out to their colleagues and run into a stone wall. CS departments balk at such "tools". Now, Excel is not my tool of choice, but macros really are a form of programming. I've been following with interest some work in the Haskell community on programming in spreadsheets (see some of the papers here). We in CS have more powerful tools to use and teach, but we also need to meet users of computation where they live. And in many domains, that is the spreadsheet.

I ended the workshop by chatting with Urban-Lurain, with whom I came into contact as a teaching assistant. His colleague on this CPATH project is my doctoral advisor. It is a small world.

November 05, 2008 8:04 PM

What Motivates Kids These Days

Who am I being when I am not seeing

a connection in the eyes of others?

-- Benjamin Zander

I was all set to write an entry about "students these days", but I see that Mark Guzdial beat me to the punch. Two earlier entries chronicled my experience in class this semester. This is a course I have taught every third semester in recent years, and before that I taught it every year or even every semester for a few years stretching back to the mid-1990s. This has given me a longitudinal view of our student population as it has performed on common content, with common materials and a common approach in the classroom. Certainly the course has evolved a bit in that time, as I try to keep the course forward-looking as well as grounded in basic content. But with all those changes, I don't think the course's fundamental character has changed. If anything, I'd be inclined to say that I do a better job now than way back when, because I've learned how to do a better job. (That may be wishful thinking, of course.)

Yet this semester has felt more challenging than I remember. If I look back at this course over the years, though, I can see that there have been signs of change. The last time we offered this course, I noted that students seemed less obviously engaged in the material than in recent times. That group turned out to be well-prepared and thoughtful, but with a quiet personality and a need to see how the course fit into their goals before they made an observable commitment. Maybe in the three years since the last offering before that we have begun to see a different kind of student in CS.

Guzdial describes one of the changes that may be responsible: the broadening of the population that attends college and, indeed, is expected to. With the widening of the pool, we are likely to see more students with varying commitments to the academic enterprise. We might also see students who are less well-prepared. A common hypothesis among faculty I know is that the CS student body we have built up since the dot.com bust has been different from the group we encountered before.

Maybe that's just another example of old fogies wishing for the good old days, but I don't think so. I think we have seen a much wider range of ability, preparation, and motivation in the newer student body than we had back in the "good old days" of the 1990s. With a larger, more diverse set of students attending college now than then, this is a natural outcome.

I don't think today's students learn differently, or have diminished capacity to learn, from exposure to the internet, iPods, and Wiis. And these students are, for the most part, as well-prepared as students before, at least when we account for the increased diversity of the pool. I do think that students have different motivations and different levels of motivation than previous classes.

One of our undergrads tells a story consistent with my observation. He runs free tutoring sessions as a public service to students in our intro programming courses. He wrote recently that he doesn't get as much traffic from CS majors (his primary audience) as he had hoped. On a particular night:

Interestingly enough, all three students were non-CS majors. I'm not entirely sure what that means overall. They are taking a class they don't need -AND- they are seeking help outside of class hours. That alone is unusual. We also discussed all the concepts on paper and each student hand wrote their own notes, which was surprising to me as well.

This tutor is one of our most talented and self-motivated students, so I'm not surprised that he would notice an apparent lack of motivation among his peers. Asking for help is an odd one. In my class, I have several strong students who are scoring lower than they do in other courses, yet only a very few have asked any questions. A couple have, but not until after a quiz that deflates their spirits. I've asked them why they haven't asked questions about the puzzling material earlier. The answers are a mix of optimism ("I just assume that I'll be able to figure this out"), pride ("I don't want to give up and ask for help"), and poor time management ("well, I didn't start the assignment until...").

I was a pretty good student, but I have vivid memories of getting up early one day my sophomore year, picking up a box of punch cards, and heading over to see my Assembler II prof promptly at the start of his 8 AM office hour because I was struggling with a now-forgotten JCL issue. (8 AM?! Many of my students say that 10 AM office hours are too early!) I'm optimistic and proud, but I was also motivated to succeed -- grade-wise, if nothing else. But I suspect that I probably wanted to learn more than I wanted to save face.

As Guzdial says, it may be that today's students are motivated by different things.

... the case for why something is worth learning is increasingly borne by the teacher, ... and the sense of value for what's to be learned is increasingly based in vocational terms.

This has always been an issue for me when teaching functional programming and Scheme, where the language, style, and ideas are foreign to what students tend to experience in the intro course language du jour and current professional practice. But I would think it'd be easier to motivate many functional programming concepts in a day when Python, Ruby, and even serious languages like C# and Java are bringing them to the masses. (Maybe that says something about my skills as a motivator...)

In any case, rather than leave the burden for what's different now at the feet of our students, we CS instructors face the challenge of figuring out how to teach differently. Add this to changes in the discipline and the need for more non-CS students to incorporate computing into their professions and lives, and the challenge becomes even more "interesting".

November 03, 2008 7:19 PM

Workshop 4: Computer Scientists on CS Education Issues

[A transcript of the SECANT 2008 workshop: Table of Contents]

The first day of the workshop ended with two panels of two computer scientists each. The first described two current projects on introductory CS courses, and the second presented two CPATH projects related to the goals of SECANT. I either knew about these projects already or was familiar with their lessons from my department's experiences, so I didn't take quite as detailed notes. Then again, maybe I was just tiring after a long day of good stuff.

On intro CS, Deepak Kumar talked about Learning Computing with Robots, which has developed a course that serves primarily non-majors, with a goal of broadening interest in computing, even as a general education course. This course teaches computing, not robotics. Kumar mentioned that the cost of materials is no longer the issue it once was. They have built the course around a robot kit that costs in the neighborhood of $110 -- about the same price as a textbook these days!

Next, Tom Cortina talked about Teaching Key Principles of Computer Science Without Programming. In many ways, Cortina was swimming against the tide of this workshop, as he argued that non-majors could (should?) learn CS minus the programming. There certainly is a lot of cool stuff that students can learn using canned tools, talking about history, and doing some light math and logic. Cortina's course in particular covers a lot of neat material about algorithms. But still I think students miss out on something useful -- even central to computing -- when they bypass programming altogether. However, if the choice is between this course and a majors-style course that leaves non-majors confused, frustrated, or hating CS, well, then, I'll take this!

The second "panel" presented two related CPATH projects. Valerie Barr of Union College described efforts to create a course in computational science across the curriculum at Union and Lafayette College. The key experience she reported was on how to build an initial audience for the course, so that later word of mouth can spread. Barr's experience sounds familiar: blanket e-mail to faculty tends not to work well, but one-on-one conversations with faculty do -- especially ongoing contact and continued conversation. This sort of human contact is time-intensive, which makes it hard to scale as you move to schools much larger than Union or Lafayette. Barr said that they had had good luck dealing with people in their Career Center, who could tell students how useful computational skills are across all the majors on campus. At my school, we have had similar good results working with people in Academic Advising and Career Services. They seem to get the value of computational skills as well as or better than faculty across campus, and they have different channels than we do for reaching students over the long term.

Finally, Lenny Pitt described the iCUBED project at the University of Illinois. The one content fact I remember from Pitt's talk is that they are working to develop applied CS programs and "CS + <X>" programs within other departments. The most memorable part of his talk for me, though, was how he had reconfigured the project's acronym (which they inherited from enabling policy or legislation) based on the workshop's theme and 2008 mantra: "Infiltration: Computing Used By Every Discipline." Creative!