March 30, 2012 5:22 PM

A Reflection on Alan Turing, the Turing Test, and Machine Intelligence

In 1950, Alan Turing published a paper that launched the discipline of artificial intelligence, Computing Machinery and Intelligence. If you have not read this paper, go and do so. Now. 2012 is the centennial of Turing's birth, and you owe yourself a read of this seminal paper as part of the celebration. It is a wonderful work from a wonderful mind.

This paper gave us the Imitation Game, an attempt to replace the question of whether a computer could be intelligent with something more concrete: a probing dialogue. The Imitation Game became the Turing Test, now a staple of modern culture and the inspiration for contests and analogies and speculation. After reading the paper, you will understand something that many people do not: Turing is not describing a way for us to tell the difference between human intelligence and machine intelligence. He is telling us that the distinction is not as important as we seem to think. Indeed, I think he is telling us that there is no distinction at all.

I mentioned in an entry a few years ago that I always have my undergrad AI students read Turing's paper and discuss the implications of what we now call the Turing Test. Students would often get hung up on religious objections or, as noted in that entry, a deep and a-rational belief in "gut instinct". A few ended up putting their heads in the sand, as Turing knew they might, because they simply didn't want to confront the implication of intelligences other than our own. And yet they were in an AI course, learning techniques that enable us to write "intelligent" programs. Even students with the most diehard objections wanted to write programs that could learn from experience.

Douglas Hofstadter, who visited campus this month, has encountered another response to the Turing Test that surprised him. On his second day here, in honor of the Turing centenary, Hofstadter offered a seminar on some ideas related to the Turing Test. He quoted two snippets of hypothetical man-machine dialogue from Turing's seminal paper in his classic Gödel, Escher, Bach. Over the years, he has occasionally run into philosophers who think the Turing Test is shallow, trivial to pass with trickery and "mere syntax". Some are concerned that it explores "only behavior". Is behavior all there is? they ask.

As a computer programmer, the idea that the Turing test explores only behavior never bothered me. Certainly, a computer program is a static construct and, however complex it is, we can read and understand it. (Students who take my programming languages course learn that even another program can read and process programs in a helpful way.) This was not a problem for Hofstadter either, growing up as he did in a physicist's household. Indeed, he found Turing's formulation of the Imitation Game to be deep and brilliant. Many of us who are drawn to AI feel the same. "If I could write a program capable of playing the Imitation Game," we think, "I would have done something remarkable."

One of Hofstadter's primary goals in writing GEB was to make a compelling case for Turing's vision.

Those of us who attended the Turing seminar read a section from Chapter 13 of Le Ton beau de Marot, a more recent book by Hofstadter in which he explores many of the same ideas about words, concepts, meaning, and machine intelligence as GEB, in the context of translating text from one language to another. Hofstadter said the focus in this book is on the subtlety of words and the ideas they embody, and what that means for translation. Of course, these are some of the issues that underlie Turing's use of dialogue as sufficient for us to understand what it means to be intelligent.

In the seminar, he shared with us some of his efforts to translate a modern French poem into faithful English. His source poem had itself been translated from older French into modern French by a French poet friend of his. I enjoyed hearing him talk about "the forces" that pushed him toward and away from particular words and phrases. Le Ton beau de Marot uses creative dialogues of the sort seen in GEB, this time between the Ace Mechanical Translator (his fictional computer program) and a Dull Rigid Human. Notice the initials of his raconteurs! They are an homage to Turing. The human translator, Douglas R. Hofstadter himself, is cast in the role of AMT, which shares its initials with Alan M. Turing, the man who started this conversation over sixty years ago.

Like Hofstadter, I have often encountered people who object to the Turing test. Many of my AI colleagues are comfortable with a behavioral test for intelligence but dislike that Turing considers only linguistic behavior. I am comfortable with linguistic behavior because it captures what is for me the most important feature of intelligence: the ability to express and discuss ideas.

Others object that it sets too low a bar for AI, because it is agnostic on method. What if a program "passes the test", and when we look inside the box we don't understand what we see? Or worse, we do understand what we see and are unimpressed? I think that this is beside the point. Not to say that we shouldn't want to understand. If we found such a program, I think that we would make it an overriding goal to figure out how it works. But how an entity manages to be "intelligent" is a different question from whether it is intelligent. That is precisely Turing's point!

I agree with Brian Christian, who won the prize for being "The Most Human Human" in a competition based on Turing's now-famous test. In an interview with The Paris Review, he said,

Some see the history of AI as a dehumanizing narrative; I see it as much the reverse.

Turing does not diminish what it is to be human when he suggests that a computer might be able to carry on a rich conversation about something meaningful. Neither do AI researchers or teenagers like me, who dreamed of figuring out just what it is that makes it possible for humans to do what we do. We ask the question precisely because we are amazed. Christian again:

We build these things in our own image, leveraging all the understanding of ourselves we have, and then we get to see where they fall short. That gap always has something new to teach us about who we are.

As in science itself, every time we push back the curtain, we find another layer of amazement -- and more questions.

I agree with Hofstadter. If a computer could do what it does in Turing's dialogues, then no one could rightly say that it wasn't "intelligent", whatever that might mean. Turing was right.

~~~~

PHOTOGRAPH 1: the Alan Turing centenary celebration. Source: 2012 The Alan Turing Year.

PHOTOGRAPH 2: Douglas Hofstadter in Bologna, Italy, 2002. Source: Wikimedia Commons.

March 27, 2012 4:53 PM

Faculty Workload and the Cost of Universities

This morning, @tonybibbs tweeted me a link to a Washington Post piece called Do college professors work hard enough?, wondering what I might think.

Author David Levy calls for "reforms for outmoded employment policies that overcompensate faculty for inefficient teaching schedules". Once, he says, faculty were generally underpaid relative to comparably educated professionals; now senior faculty at most state universities earn salaries roughly in line with comparable professionals.

Not changed, however, are the accommodations designed to compensate for low pay in earlier times. Though faculty salaries now mirror those of most upper-middle-class Americans working 40 hours for 50 weeks, they continue to pay for teaching time of nine to 15 hours per week for 30 weeks, making possible a month-long winter break, a week off in the spring and a summer vacation from mid-May until September.

My initial impressions after a quick read this morning were

1. Yes, some faculty work too little.

2. Most faculty work more than he seems to think.

3. Changing #1 is hard.

After a second read, that is still my impression. Let me expand.

Before beginning, let me note that Levy mentions three kinds of institutions: research universities, teaching universities, and community colleges. I myself can't offer informed comment on community college faculty. I have spent my professional career as a faculty member and department head at a teaching university. I also spent six years in grad school at an R-1 institution and have many colleagues and friends who work at research schools. Finally, I am in Computer Science, not a more stable discipline. These are the experiences on which I draw.

First, #2. Levy seems willing to grant that faculty at research institutions work longer hours or, if not, at least that the work they do is so valuable as to earn high pay. I agree. Levy seems unwilling to grant similar effort or importance to what faculty at teaching universities do. He thinks himself generous in allowing that the latter might spend as much time in prep as in class and concludes that "the notion that faculty in teaching institutions work a 40-hour week is a myth".

At my school, data on faculty workload have routinely shown that on average faculty work more than fifty hours per week. When I was full-time faculty, my numbers were generally closer to sixty. (As a department head for the last few years, I have generally worked more.) These numbers are self-reported, but I have good reason to trust them, having observed what faculty in my department do.

If we aren't meeting an R-1 school's stringent requirements for research, publication, and grant writing, what are we doing? We actually do spend more hours per week working outside the classroom than inside it. We are preparing new course materials, meeting with students in office hours and the lab, and experimenting with new programming languages and technologies that can improve our courses (or make some of our course content obsolete). We advise undergrads and supervise their research projects. Many departments have small grad programs, which bring with them some of the duties that R-1 profs face.

We also do scholarship. Most teaching schools do expect some research and publication, though clearly not at the level expected by the R-1s. Teaching schools are also somewhat broader in the venues for publication that they accept, allowing teaching conferences such as SIGCSE or formal workshops like the SPLASH (née OOPSLA) Educators' Symposium. Given these caveats, publishing a paper or more per year is not an unusual scholarship expectation at schools like mine.

During the summer, faculty at teaching universities are often doing research, writing, or preparing and teaching workshops for which they are paid little, if anything. Such faculty may have time for more vacation than other professionals, but I don't think many of them are sailing the Caribbean for the 20+ weeks that Levy presumes they have free.

Levy does mention service to the institution in the form of committee work. Large organizations do not run themselves. From what I remember of my time in grad school, most of my professors devoted relatively little time to committees. They were busy doing research and leading their teams. The university must have had paid staff doing a lot of the grunt work to keep the institution moving. At a school like mine, many faculty carry heavy service loads. Perhaps we could streamline the bureaucracy to eliminate some of this work, or hire staff to do it, but it really does consume a non-trivial amount of some faculty members' time and energy.

After offering these counterpoints -- which I understand may be seen as self-serving, given where I work -- what of #1? It is certainly the case that some university faculty work too little. Expectations for productivity in research and other scholarship have often been soft in the past, and only now are many schools coming to grips with the full cost of faculty productivity.

Recently, my school has begun to confront a long-term decline in real funding from the state, realizing that it cannot continue to raise tuition to make up for the gap. One administrative initiative asked department heads and faculty to examine scholarly productivity of faculty and assign professors who have not produced enough papers, grant proposals, or other scholarly results over a five-year period to teach an extra course. There were some problems in how administrators launched and communicated this initiative, but the idea is a reasonable one. If faculty are allocated time for scholarship but aren't doing much, then they can use that time to teach a course.

The reaction by most faculty was skeptical and concerned. (This was true of department heads as well, because most of us think of ourselves as faculty temporarily playing an administrator's role.)

That brings us to #3. Changing a culture is hard. It creates uncertainty. When expectations have been implicit, it is hard to make them explicit in a way that allows enforcement while at the same time recognizing the value in what most faculty have been doing. The very word "enforcement" runs counter to the academic culture, in which faculty are left free to study and create in ways that improve their students' education and in which it is presumed faculty are behaving honorably.

In this sense, Levy's article hits on an issue that faces universities and the people who pay for them: taxpayers, students and parents who pay tuition, and granting agencies. I agree with Levy that addressing this issue is essential as universities come to live in a world with different cost structures and different social contracts. He seems to understand that change will be hard. However, I'm not sure he has an accurate view of what faculty at teaching universities are already doing.

March 25, 2012 11:18 AM

The Relationship at the Center of the Classroom

Sometimes, people think that the most important relationship in a classroom is the one between the student and the teacher. But it's not. The most important relationship in a classroom is the one between the student and the ideas that make up the course.

The teacher's job isn't to tell the student what to think. It is more the role of a matchmaker: to introduce the student to the ideas and to stir up interest. To stoke the fire and keep it burning when interest wanes. And to throw a log on the fire occasionally so that the young love can grow bigger and stronger.

The center of it all is the relationship between the student and the ideas, the discipline. The teacher's most important lasting effect is in the strength of that relationship.

Students who don't understand this never seem to realize that it is their work and their interest that make learning possible. Ultimately, students make a course successful, or not.

Teachers who don't understand this, or who forget over the course of a career, are easily disillusioned. Sometimes they think their most important job is to relay more knowledge to their students. It's usually more important to provoke the students to engage a few powerful ideas and let them seek out what they need when they need it.

Other times, teachers come almost to depend on their relationship with the students. They feel empty when the connection seems lacking. In most such cases, the best way to solve that problem isn't to work on the teacher-student connection, but to work on the connection between students and ideas. Ironically, the best way to improve that connection is often for teachers to reinvigorate their interest and passion in the ideas they think about and teach.

Occasionally something more happens. Teachers and students become fellow travelers on a journey, and that fellowship can outlast a single course or a four-year program of study. Sometimes, lifelong friendships develop. But that relationship is distinct from what happens between student, teacher, and ideas.

March 18, 2012 5:20 PM

Thinking Out Loud about the Compiler in a Pure OO World

John Cook pokes fun at OO practice in his blog post today. The "Obviously a vast improvement." comment deftly ignores the potential source of OOP's benefits, but then that's the key to the joke.

A commenter points to a blog entry by Smalltalk veteran Travis Griggs. I agree with Griggs's recommendation to avoid using verbs-turned-into-nouns as objects, especially lame placeholder words such as "manager" and "loader". As he says, they usually denote objects that fiddle with the private parts of other objects. Those other objects should be providing the services we need.

Griggs allows reasonable linguistic exceptions to the advice. But he also acknowledges the pull of pop culture which, given my teaching assignment this semester, jumped out at me:

There are many 'er' words that despite their focus on what they do, have become so commonplace, that we're best to just stick with them, at least in part. Parser. Compiler. Browser.

I've thought about this break in my own OO discipline before, and now I'm thinking about it again. What would it be like to write compilers without creating parsers and code generators -- and compilers themselves -- as objects?

We could ask a program to compile itself:

program.compileTo( targetMachine )

But is the program a program, or does it start life as a text file? If the program starts as a text file, perhaps we say

program.parse()

to create an abstract syntax tree, which we then could ask

ast.compileTo( targetMachine )

(Instead of sending a parse() message, we might send an asAbstractSyntax() message. There may be no functional difference, but I think the two names indicate subtle differences in mindset.)

When my students write their compilers in Java or another OO language, we discuss in class whether abstract syntax trees ought to be able to generate target code for themselves. The danger lies in binding the AST class to the details of a specific target machine. We can separate the details of the target machine from the AST by passing an argument with the compileTo() message, but what?

Given all the other things we have to learn in the course, my students usually end up following Griggs's advice and doing the traditional thing: pass the AST as an argument to a CodeGenerator object. If we had more time, or a more intensive OO design course prior to the compilers course, we could look at techniques that enable a more OO approach without making things worse in the process.
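One possible answer to the "but what?" question, sketched in Java. Everything named here is hypothetical and only for illustration: the `TargetMachine` interface, its `loadConstant()` and `add()` methods, and the toy `StackMachine` are assumptions, not code from the course or from any real compiler. The idea is that AST nodes generate code for themselves while knowing only an abstract target.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical abstraction: the only thing an AST node knows about a target.
interface TargetMachine {
    void loadConstant(int value);
    void add();
    List<String> code();
}

// One illustrative target: a toy stack machine (an assumption for this sketch).
class StackMachine implements TargetMachine {
    private final List<String> instructions = new ArrayList<>();

    public void loadConstant(int value) { instructions.add("PUSH " + value); }
    public void add() { instructions.add("ADD"); }
    public List<String> code() { return instructions; }
}

// AST nodes compile themselves by sending messages to the abstract target.
interface Ast {
    void compileTo(TargetMachine target);
}

class Num implements Ast {
    private final int value;

    Num(int value) { this.value = value; }

    public void compileTo(TargetMachine target) {
        target.loadConstant(value);
    }
}

class Add implements Ast {
    private final Ast left;
    private final Ast right;

    Add(Ast left, Ast right) {
        this.left = left;
        this.right = right;
    }

    public void compileTo(TargetMachine target) {
        // Operands first, then the operator: postorder suits a stack machine.
        left.compileTo(target);
        right.compileTo(target);
        target.add();
    }
}
```

Sending `compileTo(new StackMachine())` to the tree `new Add(new Num(1), new Add(new Num(2), new Num(3)))` leaves the instructions PUSH 1, PUSH 2, PUSH 3, ADD, ADD in the target. Whether an interface this small survives contact with a real instruction set is exactly the open design question.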

Looking back farther to the parse behavior, would it ever make sense to send an argument with the parse() message? Perhaps a parse table for an LL(1) or LR(1) algorithm? Or the parsing algorithm itself, as a strategy object? We quickly run the risk of taking steps in the direction that Cook joshes about in his post.

Or perhaps parsing is a natural result of creating a Program object from a piece of text. In that approach, when we say

Program source = new Program( textfile );

the internal state of source is an abstract syntax tree. This may sound strange at first, but a program isn't really a piece of text. It's just that we are conditioned to think that way by the languages and tools most of us learn first and use most heavily. Smalltalk taught us long ago that this viewpoint is not necessary. (Lisp, too, though in a different way.)

These are just some disconnected thoughts on a Sunday afternoon. There is plenty more to say, and plenty of prior art. I think I'll fire up a Squeak image sometime soon and spend some time reacquainting myself with its take on parsing and code generation, in particular the way it compiles its core out to C.

I like doing this kind of "what if?" thinking. It's fun to explore along the boundary between the land where OOP works naturally and the murky territory where it doesn't seem to fit so well. That's a great place to learn new stuff.

Posted by Eugene Wallingford | Permalink | Categories: Patterns, Software Development, Teaching and Learning

March 13, 2012 8:00 PM

The Writer's Mindset for Programmers

Several people have pointed out that these tips on writing from John Steinbeck are useful for programmers; Chris Freeman even mapped them to writing code. I like to make such connections, most recently to the work of Roger Rosenblatt (in several entries, including The Summer Smalltalk Taught Me OOP) and John McPhee, a master of non-fiction (in an entry on writing, teaching, and programming). Lately I have been reading about and from David Foster Wallace, as I wrote a few weeks ago. Several quotes from interviews he gave in the 1990s and 2000s reminded me of programming, both doing it and learning to do it.

The first ties directly into the theme from the entry on my summer of Smalltalk. As Wallace became more adept at making the extensive cuts to his wide-ranging stories suggested by his editor, he adopted a familiar habit:

Eventually, he learned to erase passages that he liked from his hard drive, in order to keep himself from putting them back in.

It's one thing to kill your darlings. It's another altogether to keep them from sneaking back in. In writing as in programming, sometimes rm -r *.* is your friend.

A major theme in Wallace's work -- and life -- was the struggle not to fall into the comfortable patterns of thought engendered by the culture around us. The danger is, those comfortable ruts separate us from what is real:

Most 'familiarity' is mediated and delusive.

Programmers need to keep this in mind when they set out to learn a new programming language or a new style of programming. We tend to prefer the familiar, whether it is syntax or programming model. Yet familiarity is conditioned by so many things, most prominently recent experience. It deludes us into thinking some things are easier or better than others, often for no other reason than the accident of history that brought us to a particular language or style first. When we look past the experience that gets in the way of seeing the new thing as it is, we enable ourselves to appreciate the new thing as it is, and not as the lens of our experience distorts it.

Of course, that's easier said than done. This struggle consumed Wallace the writer his entire life.

Even so, we don't want to make the mistake of floating along the surface of language and style. Sometimes, we think that makes us free to explore all ideas unencumbered by commitment to any particular syntax, programming model, or development methodology. But it is in focusing our energies and thinking to use specific tools, to write in a specific cadre of languages, and to use particular styles that we enable ourselves to create, to do something useful:

If I wanted to matter -- even just to myself -- I would have to be less free, by deciding to choose in some kind of definite way.

This line is a climactic revelation of the protagonist in Wallace's posthumously published unfinished novel, The Pale King. It reminds us that freedom is not always so free.

It is much more important for a programmer to be a serial monogamist than a confirmed bachelor. Digging deep into language and style is what makes us stronger, whatever language or style we happen to work in at any point in time. Letting comfortable familiarity mediate our future experiences is simply a way of enslaving ourselves to the past.

In the end, reading Wallace's work and the interviews he gave shows us again that writers and programmers have a lot in common. Even after we throw away all the analogies between our practices, processes, and goals, we are left with an essential identity that we programmers share with our fellow writers:

Writing fiction takes me out of time. That's probably as close to immortal as we'll ever get.

Wallace said this in the first interview he gave after the publication of his first novel. It is a feeling I know well, and one I never want to live without.

March 09, 2012 3:33 PM

This and That from Douglas Hofstadter's Visit

Update: In the original, I conflated two quotes in "Food and Hygiene". I have un-conflated them.

In addition to his lecture on Gödel's incompleteness theorem, Douglas Hofstadter spent a second day on campus, leading a seminar and giving another public talk. I'll blog on those soon. In the meantime, here are a few random stories I heard and impressions I formed over the two days.

The Value of Good Names. Hofstadter told a story about his "favorite chapter on Galois theory" (don't we all have one?), from a classic book that all the mathematicians in the room recognized. The only thing Hofstadter didn't like about this chapter was that it referred to theorems by number, and he could never remember which theorem was which. That made an otherwise good text harder to follow than it needed to be.

In contrast, he said, was a book by Galyan that gave each theorem a name, a short phrase evocative of what the theorem meant. So much better for the reader! So he gave his students one semester an exercise to make his favorite chapter better: they were to give each of the numbered theorems in the chapter an evocative name.

This story made me think of my favorite AI textbook, Patrick Henry Winston's Artificial Intelligence. Winston's book stands out from the other AI books as quirky. He uses his own vocabulary and teaches topics very much in the MIT AI fashion. But he also gives evocative names to many of the big ideas he wants us to learn, among them the representation principle, the principle of least commitment, the diversity principle, and the eponymous "Winston's principle of parallel evolution". My favorite of all is the convergent intelligence principle:

The world manifests constraints and regularities. If a computer is to exhibit intelligence, it must exploit those constraints and regularities, no matter of what the computer happens to be made.

To me, that is AI.

Food and Hygiene. The propensity of mathematicians to make their work harder for other people to understand, even other mathematicians, reminded Doug Shaw of two passages, from famed mathematicians Gian-Carlo Rota and André Weil. Rota said that "we must guard ... against confusing the presentation of mathematics with the content of mathematics." More colorfully, Weil cautioned, "[If] logic is the hygiene of the mathematician, it is not his source of food." Theorems, proofs, and Greek symbols are mathematical hygiene. Pictures, problems, and understanding are food.

A Good Gig, If You Can Get It. Hofstadter holds a university-level appointment at Indiana, and his research on human thought and the fluidity of concepts is wide enough to include everything under the sun. Last semester, he taught a course on The Catcher in the Rye. He and his students read the book aloud and discussed what makes it great. Very cool.

If You Need a Research Project... At some time in the past, Hofstadter read, in a book or article about translating natural language into formal logic, that 'but' is simply a trivial alternative to 'and' and so can be represented as such. "Nonsense!" he said. 'But' embodies all the complexity of human thought. "If we could write a program that could use 'but' correctly, we would have accomplished something impressive."

Dissatisfied. Hofstadter uses that word a lot in conversation, or words like it, such as 'unsatisfying'. He does not express the sentiment in a whiny way. He says it in a curious way. His tone always indicates a desire to understand something better, to go deeper to the core of the question. That's a sign of a good researcher and a deep thinker.

~~~~

Let's just say that this was a great treat. Thanks to Dr. Hofstadter for sharing so much time with us here.

March 07, 2012 5:35 PM

Douglas Hofstadter on Questions, Proofs, and Passion

In the spring of my sophomore year in college, I was chatting with the head of the Honors College at my alma mater. His son, a fellow CS major, had recently read what he considered a must-read book for every thinking computer scientist. I went over to the library and checked it out in hardcopy. I thumbed through it, and it was love at first sight. So I bought the paperback and spent my summer studying it, line by line.

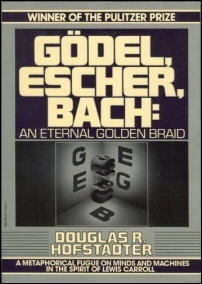

Gödel, Escher, Bach seemed to embody everything that excited me about computer science and artificial intelligence. It made, used, and deconstructed analogies. It talked about programming languages, and computer programs as models. Though I allowed myself to be seduced in grad school by other kinds of AI, I never felt completely satisfied. My mind and heart have never really let go of the feeling I had that summer.

Last night, I had the pleasure of seeing Douglas Hofstadter give the annual Hari Shankar Memorial Lecture here. This lecture series celebrates the beauty of mathematics and its accessibility to everyone. Hofstadter said that he was happy and honored to be asked to give such a public lecture, speaking primarily to non-mathematicians. Math is real; it is in the world. It's important, he said, to talk about it in ways that are accessible to all. His lecture would share the beauty of Gödel's Incompleteness Theorem. Rather than give a dry lecture, he told the story as his story, putting the important issues and questions into the context of his own life in math.

As a 14-year-old, he discovered a paperback copy of Gödel's Proof in a used bookstore. His father mentioned that one of the authors, Ernest Nagel, was one of his teachers and friends. Douglas was immediately fascinated. Gödel used a tool (mathematics) to study itself. It was "a strange loop".

As a child, he figured out that two twos is four. The natural next question is, "What is three threes?" But this left him dissatisfied, because two was still lurking in the question. What is "three three threes"? It wasn't even clear to him what that might mean.

But he was asking questions about patterns and seeking answers. He was on his way to being a mathematician. How did he find answers? He understood science to be about experiments, so he looked for answers by examining a whole bunch of cases, until he had seen enough to convince himself that a claim was true.

He did not know yet what a proof was. There are, of course, many different senses of proof, including informal arguments and geometric demonstrations. Mathematicians use these, but they are not what they mean by 'proof'.

So he explored problems and tried to find answers, and eventually he tried to prove his answers right. He became passionate about math. He was excited by every new discovery. (Pi!) In retrospect, his excitement does not surprise him. It took mathematicians hundreds of years to create and discover these new ideas. When he learned about them after the fact, they looked like magic.

Hofstadter played with numbers. Squares. Triangular numbers. Primes. He noticed that 2^3 and 3^2 are adjacent to one another and wondered if any other powers were adjacent.

Mathematicians have faith that there is an answer to questions like that. It may be 'yes', it may be 'no', but there's an answer. He said this belief is so integral to the mindset that he calls this the Mathematician's Credo:

If something is true, it has a proof, and if something has a proof, then it is true.

As an example, he wrote the beginning of the Fibonacci series on the chalk board: 1, 1, 2, 3, 5, 8, 13, 21, 34, 55, 89, 144, 233. The list contains some powers of integers: 1, 8, and 144. Are there more squares? Are there more powers? Are there infinitely many? How often do they appear? It turns out that someone recently discovered that there are no more integer powers in the list. A mathematician may be surprised that this is true, but she would not be surprised that, if it is true, there is a proof of it.
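The young Hofstadter's experimental method is easy to replay on this question. Here is a small Java sketch that scans the Fibonacci numbers that fit in a `long` and reports the perfect powers among them. The class and method names (`FibonacciPowers`, `isPerfectPower`, `perfectPowersAmongFirst`) are my own inventions for illustration, and a finite scan like this is evidence, not a proof.

```java
import java.util.ArrayList;
import java.util.List;

class FibonacciPowers {
    // Returns base^exp for base >= 1, or -1 if the result would not fit in a long.
    static long powOrOverflow(long base, int exp) {
        long result = 1;
        for (int i = 0; i < exp; i++) {
            if (result > Long.MAX_VALUE / base) {
                return -1;
            }
            result *= base;
        }
        return result;
    }

    // True if n == b^k for some integers b >= 1 and k >= 2.
    static boolean isPerfectPower(long n) {
        if (n < 1) {
            return false;
        }
        if (n == 1) {
            return true;
        }
        for (int k = 2; k <= 62; k++) {
            // Floating-point root is only a guess; confirm with exact arithmetic.
            long guess = Math.round(Math.pow((double) n, 1.0 / k));
            for (long b = Math.max(2, guess - 1); b <= guess + 1; b++) {
                if (powOrOverflow(b, k) == n) {
                    return true;
                }
            }
        }
        return false;
    }

    // Perfect powers among the first `count` Fibonacci numbers 1, 1, 2, 3, 5, ...
    // The limit of 90 keeps every intermediate sum inside the range of a long.
    static List<Long> perfectPowersAmongFirst(int count) {
        if (count < 0 || count > 90) {
            throw new IllegalArgumentException("count must be between 0 and 90");
        }
        List<Long> found = new ArrayList<>();
        long a = 1;
        long b = 1;
        for (int i = 0; i < count; i++) {
            if (isPerfectPower(a)) {
                found.add(a);
            }
            long next = a + b;
            a = b;
            b = next;
        }
        return found;
    }
}
```

Calling `FibonacciPowers.perfectPowersAmongFirst(13)` on the thirteen numbers from the chalkboard returns 1, 1, 8, and 144, and raising the count to 90 adds nothing new.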

Then he gave an open question as an example. Consider this variation of a familiar problem:

- Rule 1: n → 2n

- Rule 2: 3n+1 → n

- Start with 1.

Rule 1 takes us from 1 to 2. Rule 1 takes us to 4. Rule 2 takes us to 1. We've already been there, so that's not very interesting. But Rule 1 takes us to 8.... And so on.

Hofstadter called the numbers we visited C-numbers. He then asked a question: Where can we go using these rules? Can we visit every integer? Which numbers are C-numbers? Are all integers C-numbers?

The answer is, we don't know. People have used computers to test all the integers up to a very large number (20 x 2^58) and found that we can reach every one of them from 1. So many people conjecture strongly that all integers are C-numbers. But we don't have a proof, so the purist mathematician will say only, "We don't know".

At this point in the talk, my mind wanders.... (Wonders?) It would be fun to write a program to answer, "Is n a C-number?" in Flair, the language for which my students this semester are writing a compiler. That would make a nice test program. Flair is a subset of Pascal without any data structures, so there is an added challenge... A danger of teaching a compilers course -- any course, really -- is that I would rather write programs than do almost anything else in the world.

One could ask the same question of the Fibonacci series: Is every number a Fibonacci number? It is relatively easy to answer this question with 'no'. The sequence grows larger in each new entry, so once you skip any number, you know it's not in the list. C-numbers are tougher. They grow and shrink. For any given number n, we can search the tree of values until we find it. But there is no proof for all n.
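Here is a minimal Python sketch of that search, not the Flair program I daydreamed about. It makes two assumptions that the talk did not spell out: we only visit positive integers, and we refuse to climb above a cap, `max_value`, so that the search always stops. The name `is_c_number` and both parameters are mine.

```python
from collections import deque


def is_c_number(target, max_value=100_000):
    """Breadth-first search from 1 using Rule 1 (n -> 2n) and Rule 2 (3n+1 -> n).

    Returns True if `target` is reached without ever exceeding `max_value`.
    Returns None if the capped search runs out of numbers to try, which
    means "not found under this cap", not "not a C-number".
    """
    if target < 1:
        raise ValueError("this sketch assumes positive integers only")
    seen = {1}
    queue = deque([1])
    while queue:
        m = queue.popleft()
        if m == target:
            return True
        successors = [2 * m]                  # Rule 1: n -> 2n
        if m % 3 == 1:
            successors.append((m - 1) // 3)   # Rule 2: 3n+1 -> n
        for s in successors:
            # Stay within the positive integers and under the cap.
            if 1 <= s <= max_value and s not in seen:
                seen.add(s)
                queue.append(s)
    return None


print(is_c_number(3))               # reached via 1, 2, 4, 8, 16, 5, 10, 3
print(is_c_number(3, max_value=8))  # cap too low to pass through 16
```

The asymmetry in the return values mirrors the point above. A successful search settles the question for one n. A failed search under a cap settles nothing, and no amount of searching settles it for all n.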

As one last bit of preparation, Hofstadter gave an informal proof of the statement, "There are an infinite number of prime numbers." The key is that his argument used one assumption (there are a finite number of primes) to destroy a necessary consequence of the same (p1*p2*...*pk+1 is prime).
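The construction at the heart of that argument is easy to try out. The sketch below follows the standard form of Euclid's argument, which may differ in detail from the version Hofstadter gave. It takes a finite list of primes, forms their product plus one, and finds the smallest prime factor of the result. That number leaves remainder 1 when divided by every prime on the list, so its smallest prime factor is always a prime the list missed. The helper names are hypothetical.

```python
from math import prod


def smallest_prime_factor(n):
    """Return the smallest prime factor of n (n >= 2) by trial division."""
    d = 2
    while d * d <= n:
        if n % d == 0:
            return d
        d += 1
    return n  # no divisor up to sqrt(n), so n itself is prime


def euclid_witness(primes):
    """Given a finite list of primes, return (N, p), where N is the product
    of the list plus one and p is the smallest prime factor of N."""
    n = prod(primes) + 1
    return n, smallest_prime_factor(n)


print(euclid_witness([2, 3, 5]))              # (31, 31): N is itself prime
print(euclid_witness([2, 3, 5, 7, 11, 13]))   # (30031, 59): 30031 = 59 * 509
```

In both cases the prime that comes back is missing from the input list, so no finite list can hold every prime.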

From there, Hofstadter told a compact, relatively simple version of Tarski's undefinability theorem and, at the end, made the bridge to Gödel's theorem. I won't tell that story here, for a couple of reasons. First, this entry is already quite long. Second, Hofstadter himself has already told this story better than I ever could, in Gödel, Escher, Bach. You really should read it there.

This story gave him a way to tell us about the importance of the proof: it drives a wedge between truth and provability. This undermines the Mathematician's Credo. It also allowed him to demonstrate his fascination with Gödel's Proof so many years ago: it uses mathematical logic to say something interesting, powerful, and surprising about mathematical logic itself.

Hofstadter opened the floor to questions. An emeritus CS professor asked his opinion of computer proofs, such as the famous 1976 proof of the four color theorem. That proof depends on a large number of special cases and requires hundreds of pages of analysis. At first, Hofstadter said he doesn't have much of an opinion. Of course, such proofs require new elements of trust, such as trust that the program is correct and trust that the computer is functioning correctly. He is okay with that. But then he said that he finds such proofs to be unsatisfying. Invariably, they are a form of brute force, and that violates the spirit of mathematics that excites him. In the end, these proofs do not help him to understand why something is true, and that is the whole point of exploring: to understand why.

This answer struck a chord in me. There are whole swaths of artificial intelligence that make me feel the same way. For example, many of my students are fascinated by neural networks. Sure, it's exciting any time you can build a system that solves a problem you care about. (Look ma, no hands!) But these programs are unsatisfying because they don't give me any insight into the nature of the problem, or into how humans solve the problem. If I ask a neural network, "Why did you produce this output for this input?", I can't expect an answer at a conceptual level. A vector of weights leaves me cold.

To close the evening, Hofstadter responded to a final question about the incompleteness theorem. He summarized Gödel's result in this way: Every interesting formal system says true things, but it does not say all true things. He also said that Tarski's result is surprising, but in a way comforting. If an oracle for T-numbers existed, then mathematics would be over. And that would be depressing.

As expected, I enjoyed the evening greatly. Having read GEB and taken plenty of CS theory courses, I already knew the proofs themselves, so the technical details weren't a big deal. What really highlighted the talk for me was hearing Hofstadter talk about his passions: where they came from, how he has pursued them, and how these questions and answers continue to excite him as they do. Listening to an accomplished person tell stories that make connections to their lives always makes me happy.

We in computer science need to do more of what people like Hofstadter do: talk about the beautiful ideas of our discipline to as many people as we can, in a way that is accessible to all. We need a Sagan or a Hofstadter to share the beauty.

~~~~

PHOTOGRAPH 1: a photograph of the cover of my copy of Gödel, Escher, Bach.

PHOTOGRAPH 2: Douglas Hofstadter in Bologna, Italy, 2002. Source: Wikimedia Commons.

March 04, 2012 9:34 AM

One Year Later

March 4, 2011, was a Friday like any other. I had fallen into a comfortable weekly routine: easy 5-milers on Tuesday and Thursday, a medium 7-miler on Wednesday, a hard 8 miles on the indoor track on Friday, and 8 miles on Sunday. February had been snowy and cold, which made my preferred 12-mile long run on Sundays a bit too much. In place of those miles, I ran a little faster on Fridays and looked forward to the coming spring thaw.

The morning was crisp and the roads clearer than they had been, so I decided to run outdoors. I picked out my favorite 8-mile route, an old friend I had first met when we lived on the other side of town. It passed by our new house, too, and so made the move with us.

It was an excellent run. Footing on hills and in curves is the big challenge of running outdoors in winter, so I didn't worry about pace. I breathed in the cold air, took in the old sights, and felt my body reach equilibrium with the elements.

I did not know at the time, but this would be my last run.

A flu that had been going around hit me later that day, and I was in bed sick for a few days. Then one morning, as I was starting to feel better, I woke up with a sore knee. No big deal, I thought; I'll take advantage of the extra day to be sure I've really licked that flu.

The flu left, but the knee pain did not. It got worse. I eventually went to see a specialist, who gave me the bad news, operated, gave me some more bad news, and operated a second time.

Since then, I have been rehabbing, slowly adding time and effort to my work-outs. But I have not run.

Two days ago, another first Friday of March, 52 weeks later, I had my best post-running workout yet. I still have far to go. The knee is still a little swollen, and it stiffens up after the shortest bouts of inactivity. It feels funny. But I see light.

There are days when I still feel that old urge to lace up my Asics GT-2100s and take off. I expect that summer will bring plenty more of those days. The long road to who-knows-where stretches out before me as always, but I won't be exploring it on the run.