December 26, 2019 1:55 PM

An Update on the First Use of the Term "Programming Language"

This tweet and this blog entry on the first use of the term "programming language" evoked responses from readers with some new history and some prior occurrences.

Doug Moen pointed me to the 1956 Fortran manual from IBM, Chapter 2 of which opens with:

Any programming language must provide for expressing numerical constants and variable quantities.

I was aware of the Fortran manual, which I link to in the notes for my compiler course, and its use of the term. But I had been linking to a document dated October 1957, and the file at fortran.com is dated October 15, 1956. That beats the January 1957 Newell and Shaw paper by a few months.

As Moen said in his email, "there must be earlier references, but it's hard to find original documents that are early enough."

The oldest candidate I have seen comes from @matt_dz. His tweet links to this 1976 Stanford tech report, "The Early Development of Programming Languages", co-authored by Knuth. On Page 26, it refers to work done by Arthur W. Burks in 1950:

In 1950, Burks sketched a so-called "Intermediate Programming Language" which was to be the step one notch above the Internal Program Language.

Unfortunately, though, this report's only passage from Burks refers to the new language as "Intermediate PL", which obscures the meaning of the 'P'. Furthermore, the title of Burks's paper uses "program" in the language's name:

Arthur W. Burks, "An intermediate program language as an aid in program synthesis", Engineering Research Institute, Report for Burroughs Adding Machine Company (Ann Arbor, Mich.: Univ. of Michigan, 1951), ii+15 pp.

The use of "program language" in this title is consistent with the terminology in Burks's previous work on an "Internal Program Language", to which Knuth et al. also refer.

Following up on the Stanford tech report, Douglas Moen found the book Mathematical Methods in Program Development, edited by Manfred Broy and Birgit Schieder. It includes a paper that attempts "to identify the first 'programming language', and the first use of that term". Here's a passage from Page 219, via Google Books:

There is not yet an indication of the term 'programming languages'. But around 1950 the term 'program' comes into wide use: 'The logic of programming electronic digital computers' (A. W. Burks 1950), 'Programme organization and initial orders for the EDSAC' (D. J. Wheeler 1950), 'A program composition technique' (H. B. Curry 1950), 'La conception du programme' (Corrado Bohm 1952), and finally 'Logical or non-mathematical programmes' (C. S. Strachey 1952).

And then, on Page 224, it comments specifically on Burks's work:

A. W. Burks ('An intermediate program language as an aid in program synthesis', 1951) was among the first to use the term program(ming) language.

The parenthetical in that phrase -- "the first to use the term program(ming) language" -- leads me to wonder if Burks may use "program language" rather than "programming language" in his 1951 paper.

Is it possible that Knuth et al. retrofitted the use of "programming language" onto Burks's language? Their report documents the early development of PL ideas, not the history of the term itself. The authors may have used a term that was in common parlance by 1976 even if Burks had not. I'd really like to find an original copy of Burks's 1951 ERI report to see if he ever uses "programming language" when talking about his Intermediate PL. Maybe after the holiday break...

In any case, the use of "program language" by Burks and others circa 1950 seems to be the bridge between use of the terms "program" and "language" independently and the use of "programming language" that soon became standard. If Burks and his group never used the new term for their intermediate PL, it's likely that someone else did between 1951 and the release of the 1956 Fortran manual.

There is so much to learn. I'm glad that Crista Lopes tweeted her question on Sunday and that so many others have contributed to the answer!

December 23, 2019 10:23 AM

The First Use of the Term "Programming Language"?

Yesterday, Crista Lopes asked a history question on Twitter:

Hey, CS History Twitter: I just read Iverson's preface of his 1962 book carefully, and suddenly this occurred to me: did he coin the term "programming language"? Was that the first time a programming language was called "programming language"?

In a follow-up, she noted that McCarthy's CACM paper on LISP from roughly the same time called Lisp a "programming system", not a programming language.

I had a vague recollection from my grad school days that Newell and Simon might have used the term. I looked up IPL, the Information Processing Language they created in the mid-1950s with Shaw. IPL pioneered the notion of list processing, though at the level of assembly language. I first learned of it while devouring Newell and Simon's early work on AI and reading everything I could find about programs such as the General Problem Solver and Logic Theorist.

That Wikipedia page has a link to this unexpected cache of documents on IPL from Newell, Simon, and Shaw's days at Rand. The oldest of these is a January 1957 paper, Programming the Logic Theory Machine, by Newell and Shaw that was presented at the Western Joint Computer Conference (WJCC) the next month. It details their efforts to build computer systems to perform symbolic reasoning, as well as the language they used to code their programs.

There it is on Page 5: a section titled "Requirements for the Programming Language". They even define what they mean by programming language:

We can transform these statements about the general nature of the program of LT into a set of requirements for a programming language. By a programming language we mean a set of symbols and conventions that allows a programmer to specify to the computer what processes he wants carried out.

Other than the gendered language, that definition works pretty well even today.

The fact that Newell and Shaw defined "programming language" in this paper indicates that the term probably was not in widespread use at the time. The WJCC was a major computing conference of the day. The researchers and engineers who attended it would likely be familiar with common jargon of the industry.

Reading papers about IPL is an education across a range of ideas in computing. Researchers at the dawn of computing had to contend with -- and invent -- concepts at multiple levels of abstraction and figure out how to implement them on machines with limited size and speed. What a treat these papers are.

I love to read original papers from the beginning of our discipline, and I love to learn about the history of words. A few of my students do, too. One student stopped in after the last day of my compilers course this semester to thank me for telling stories about the history of compilers occasionally. Next semester, I teach our Programming Languages and Paradigms course again, and this little story might add a touch of color to our first days together.

All this said, I am neither a historian of computer science nor a lexicographer. If you know of an earlier occurrence of the term "programming language" than Newell and Shaw's from January 1957, I would love to hear from you by email or on Twitter.

December 20, 2019 1:45 PM

More Adventures in Constrained Programming: Elo Predictions

I like tennis. The Tennis Abstract blog helps me keep up with the game and indulge my love of sports stats at the same time. An entry earlier this month gave a gentle introduction to Elo ratings as they are used for professional tennis:

One of the main purposes of any rating system is to predict the outcome of matches--something that Elo does better than most others, including the ATP and WTA rankings. The only input necessary to make a prediction is the difference between two players' ratings, which you can then plug into the following formula:

1 - (1 / (1 + (10 ^ (difference / 400))))

This formula always makes me smile. The first computer program I ever wrote because I really wanted to was a program to compute Elo ratings for my high school chess club. Over the years I've come back to Elo ratings occasionally whenever I had an itch to dabble in a new language or even an old favorite. It's like a personal kata of variable scope.
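In a language with floating-point numbers, the prediction formula quoted above is nearly a one-liner. Here is a minimal Python sketch. The function name `win_probability` is made up for illustration, and it assumes `difference` is the first player's rating minus the second's, so the result is the first player's chance of winning.

```python
def win_probability(difference: float) -> float:
    """Chance that the first player wins, given (first rating - second rating)."""
    return 1 - (1 / (1 + 10 ** (difference / 400)))


print(win_probability(0))               # 0.5: evenly matched players
print(round(win_probability(100), 2))   # 0.64: a 100-point favorite
```

The two players' probabilities always sum to 1, which makes a handy sanity check for any reimplementation.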

I read the Tennis Abstract piece this week as my students were finishing up their compilers for the semester and as I was beginning to think of break. Playful me wondered how I might implement the prediction formula in my students' source language. It is a simple functional language with only two data types, integers and booleans; it has no loops, no local variables, no assignment statements, and no sequences. In another old post, I referred to this sort of language as akin to an integer assembly language. And, heaven help me, I love to program in integer assembly language.

To compute even this simple formula in Klein, the students' source language, I need to think in terms of fractions. The only division operator performs integer division, so 1/x gives 0 for any x greater than 1. I also need to think carefully about how to implement the exponentiation 10 ^ (difference / 400). The difference between two players' ratings is usually less than 400 and, in any case, almost never divisible by 400. So my program will have to take an arbitrary root of 10.

Which root? Well, I can use our gcd() function (implemented using Euclid's algorithm, of course) to reduce diff/400 to its lowest terms, n/d, and then compute the dth root of 10^n. Now, how to take the dth root of an integer for an arbitrary integer d?
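The reduction step can be sketched in Python using only integers and recursion, in the spirit of the constrained language. This is an illustration, not the course's actual code: the name `reduced_exponent` is hypothetical, the returned pair stands in for two separate integer results (the text describes a language with no compound data types), and `diff` is assumed to be non-negative.

```python
def gcd(a: int, b: int) -> int:
    """Euclid's algorithm, written recursively with no loops or assignments."""
    return a if b == 0 else gcd(b, a % b)


def reduced_exponent(diff: int) -> tuple[int, int]:
    """Reduce diff/400 to lowest terms n/d, assuming diff >= 0."""
    return diff // gcd(diff, 400), 400 // gcd(diff, 400)


print(reduced_exponent(150))  # (3, 8): take the 8th root of 10**3
```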

Fortunately, my students and I have written code like this in various integer assembly languages over the years. For instance, we have a SQRT function that uses binary search to home in on the integer closest to the square root of a given integer. Even better, one semester a student implemented a square root program that uses Newton's method:

x_{n+1} = x_n - f(x_n) / f'(x_n)

That's just what I need! I can create a more general version of the function that uses Newton's method to compute an arbitrary root of an arbitrary base. Rather than work with floating-point numbers, I will implement the function to take its guess as a fraction, represented as two integers: a numerator and a denominator.

This may seem like a lot of work, but that's what working in such a simple programming language is like. If I want my students' compilers to produce assembly language that predicts the result of a professional tennis match, I have to do the work.

This morning, I read a review of Francis Su's new popular math book, Mathematics for Human Flourishing. It reminds us that math isn't about rules and formulas:

Real math is a quest driven by curiosity and wonder. It requires creativity, aesthetic sensibilities, a penchant for mystery, and courage in the face of the unknown.

Writing my Elo rating program in Klein doesn't involve much mystery, and it requires no courage at all. It does, however, require some creativity to program under the severe constraints of a very simple language. And it's very much true that my little programming diversion is driven by curiosity and wonder. It's fun to explore ideas in a small space using limited tools. What will I find along the way? I'll surely make design choices that reflect my personal aesthetic sensibilities as well as the pragmatic sensibilities of a virtual machine that knows only integers and booleans.

As I've said before, I love to program.

Posted by Eugene Wallingford | Permalink | Categories: Computing, Software Development, Teaching and Learning

December 12, 2019 3:20 PM

A Semester's Worth of Technical Debt

I've been thinking a lot about technical debt this week. My student teams are in Week 13 of their compiler project and getting ready to submit their final systems. They've been living with their code long enough now to appreciate Ward Cunningham's original idea of technical debt: the distance between their understanding and the understanding embodied in their systems.

... it was important to me that we accumulate the learnings we did about the application over time by modifying the program to look as if we had known what we were doing all along and to look as if it had been easy to do in Smalltalk.

Actually, I think they are experiencing two gulfs: one relates to their understanding of the domain, compilers, and the other to their understanding of how to build software. Obviously, they are just learning about compilers and how to build one, so their content knowledge has grown rapidly while they've been programming. But they are also novice software engineers. They are really just learning how to build a big software system of any kind. Their knowledge of project management, communication, and their tools has also grown rapidly in parallel with building their system.

They have learned a lot about both content and process this semester. Several of them wish they had time to refactor -- to pay back the debt they accumulated honestly along the way -- but university courses have to end. Perhaps one or two of them will do what one or two students in most past semesters have done: put some of their own time into their compilers after the course ends, to see if they can modify the program to look as if they had known what they were doing all along, and to look as if it had been easy to do in Java or Python. There's a lot of satisfaction to be found at the end of that path, if they have the time and curiosity to take it.

One team leader tried to bridge the gulf in real time over the last couple of weeks: He was so unhappy with the gap between his team's code and their understanding that he did a complete rewrite of the first five stages of their compiler. This team learned what every team learns about rewrites and large-scale refactoring: they spend time that could otherwise have been spent on new development. In a time-boxed course, this doesn't always work well. That said, though, they will likely end up with a solid compiler -- as well as a lot of new knowledge about how to build software.

Being a prof is fun in many ways. One is getting to watch students learn how to build something while they are building it, and coming out on the other side with new code and new understanding.

December 06, 2019 2:42 PM

OOP As If You Meant It

This morning I read an old blog post by Michael Feathers, The Flawed Theory Behind Unit Testing. It discusses what makes TDD and Clean Room software development so effective for writing code with fewer defects: they define practices that encourage developers to work in a continuous state of reflection about their code. The post holds up well ten years on.

The line that lit my mind up, though, was this one:

John Nolan gave his developers a challenge: write OO code with no getters.

Twenty-plus years after the movement of object-oriented programming into the mainstream, this still looks like a radical challenge to many people. "Whenever possible, tell another object to do something rather than ask for its data." This sort of behavioral abstraction is the heart of OOP and the source of its design power. Yet it is rare to find big Java or C++ systems where most classes don't provide public accessors. When you open that door, client code will walk in -- even if you are the person writing the client code.
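A small Python sketch of the "tell, don't ask" idea follows. The `Account` class and its method are invented for illustration and do not come from Feathers's post or Nolan's challenge.

```python
class Account:
    """An account that guards its own balance instead of exposing it."""

    def __init__(self, balance: int) -> None:
        self._balance = balance

    def withdraw(self, amount: int) -> bool:
        """Withdraw amount if funds allow; report whether it happened."""
        if amount > self._balance:
            return False
        self._balance -= amount
        return True


# "Ask" style, shown only for contrast (it would need a getter and a setter):
#     if account.get_balance() >= 70:
#         account.set_balance(account.get_balance() - 70)
# "Tell" style: the client says what it wants and the object decides.
account = Account(100)
print(account.withdraw(70))  # True
print(account.withdraw(50))  # False: only 30 remains
```

With no accessor available, the rule about overdrafts lives in exactly one place, and client code cannot drift into making that decision itself.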

Whenever I look at a textbook intended for teaching undergraduates OOP, I look to see how it introduces encapsulation and the use of "getters" and "setters". I'm usually disappointed. Most CS faculty think doing otherwise would be too extreme for relative beginners. Once we open the door, though, it's a short step to using (gulp) instanceof to switch on kinds of objects. No wonder that some students are unimpressed and that too many students don't see much value in OO programming, which as they learn it doesn't feel much different from what they've done before but which puts new limitations on them.
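To make the contrast concrete, here is a hedged Python sketch in which `isinstance` plays the role of Java's instanceof. The shape classes and `total_area` are hypothetical examples, not taken from any textbook discussed here.

```python
import math


class Circle:
    def __init__(self, radius: float) -> None:
        self._radius = radius

    def area(self) -> float:
        return math.pi * self._radius**2


class Rectangle:
    def __init__(self, width: float, height: float) -> None:
        self._width = width
        self._height = height

    def area(self) -> float:
        return self._width * self._height


def total_area(shapes) -> float:
    # No isinstance checks and no getters: each object answers area() itself.
    # The type-switching alternative would test isinstance(shape, Circle),
    # then isinstance(shape, Rectangle), and reach into each object's data.
    return sum(shape.area() for shape in shapes)


print(total_area([Rectangle(2, 3), Rectangle(1, 1)]))  # 7
```

Adding a new kind of shape means writing one new class; `total_area` does not change.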

To be honest, though, it is hard to go Full Metal OOP. Nolan was working with professional programmers, not second-year students, and even so programming without getters was considered a challenge for them. There are certainly circumstances in which the forces at play may drive us toward cracking the door a bit and letting an instance variable sneak out. Experienced programmers know how to decide when the trade-off is worth it. But our understanding of the downside of the trade-off is improved after we know how to design independent objects that collaborate to solve problems without knowing anything about the other objects beyond the services they provide.

Maybe we need to borrow an idea from the TDD As If You Meant It crowd and create workshops and books that teach and practice OOP as if we really meant it. Nolan's challenge above would be one of the central tenets of this approach, along with the Polymorphism Challenge and other practices that look odd to many programmers but which are, in the end, the heart of OOP and the source of its design power.

~~~~~

If you like this post, you might enjoy The Summer Smalltalk Taught Me OOP. It isn't about OOP itself so much as about me throwing away systems until I got it right. But the reason I was throwing systems away was that I was still figuring out how to build an object-oriented system after years programming procedurally, and the reason I was learning so much was that I was learning OOP by building inside of Smalltalk and reading its standard code base. I'm guessing that code base still has a lot to teach many of us.

December 04, 2019 2:42 PM

Make Your Code Say What You Say When You Describe It

Brian Marick recently retweeted this old tweet from Ron Jeffries:

You: Explain this code to me, please.

They: blah blah blah.

You: Show me where the code says that.

They: <silence>

You: Let's make it say that.

I find this strategy quite helpful when writing my own code. If I can't explain any bit of code to myself clearly and succinctly, then I can take a step back and work on fixing my understanding before trying to fix the code. Once I understand, I'm a big fan of creating functions or methods whose names convey their meaning.
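As a tiny illustration in Python (the example and its function names are invented here), suppose the spoken explanation is "February has 29 days in a leap year and 28 otherwise." The code can be made to say the same thing by naming the idea:

```python
def is_leap_year(year: int) -> bool:
    """Gregorian rule: every 4th year, except centuries not divisible by 400."""
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


def days_in_february(year: int) -> int:
    # Reads almost exactly like the spoken explanation.
    return 29 if is_leap_year(year) else 28


print(days_in_february(2020))  # 29
print(days_in_february(1900))  # 28
```

Before the extraction, the arithmetic test would sit inline inside `days_in_february`, and a reader would have to reconstruct "leap year" from the modulus operations.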

This is also a really handy strategy for me in my teaching. As a prof, I spend a fair amount of time explaining code I've written to students. The act of explaining a piece of code, whether written or spoken, often points me toward ways I can make the program better. If I find myself explaining the same piece of code to several students over time, I know the code can probably be better. So I try to fix it.

I also use a gentler variation of Jeffries' approach when working directly with students and their code. I try whenever I can to help my students learn how to write better programs. It can be tempting to start lecturing them on ways that their program could be better, but unsolicited advice of this sort rarely finds a happy place to land in their memory. Asking questions can be more effective, because questions can lead to a conversation in which students figure some things out on their own. Asking general questions usually isn't helpful, though, because students may not have enough experience to connect the general idea to the details of their program.

So: I find it helpful to ask a student to explain their code to me. Often they'll give me a beautiful answer, short and clear, that stands in obvious contrast to the code we are looking at. This discrepancy leads to a natural follow-up question: How might we change the code so that it says that? The student can take the lead in improving their own programs, guided by me with bits of experience they haven't had yet.

Of course, sometimes the student's answer is complex or rambles off into silence. That's a cue to both of us that they don't really understand yet what they are trying to do. We can take a step back and help them fix their understanding -- of the problem or of the programming technique -- before trying to fix the code itself.

December 02, 2019 11:41 AM

XP as a Long-Term Learning Strategy

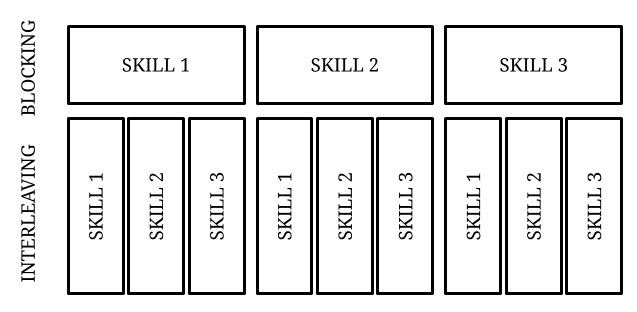

I recently read Anne-Laure Le Cunff's Interleaving: Rethink The Way You Learn. Le Cunff explains why interleaving -- "the process of mixing the practice of several related skills together" -- is more effective for long-term learning than blocked practice, in which students practice a single skill until they learn it and then move on to the next skill. Interleaving forces the brain to retrieve different problem-solving strategies more frequently and under different circumstances, which reinforces neural connections and improves learning.

To illustrate the distinction between interleaving and blocked practice, Le Cunff uses this image:

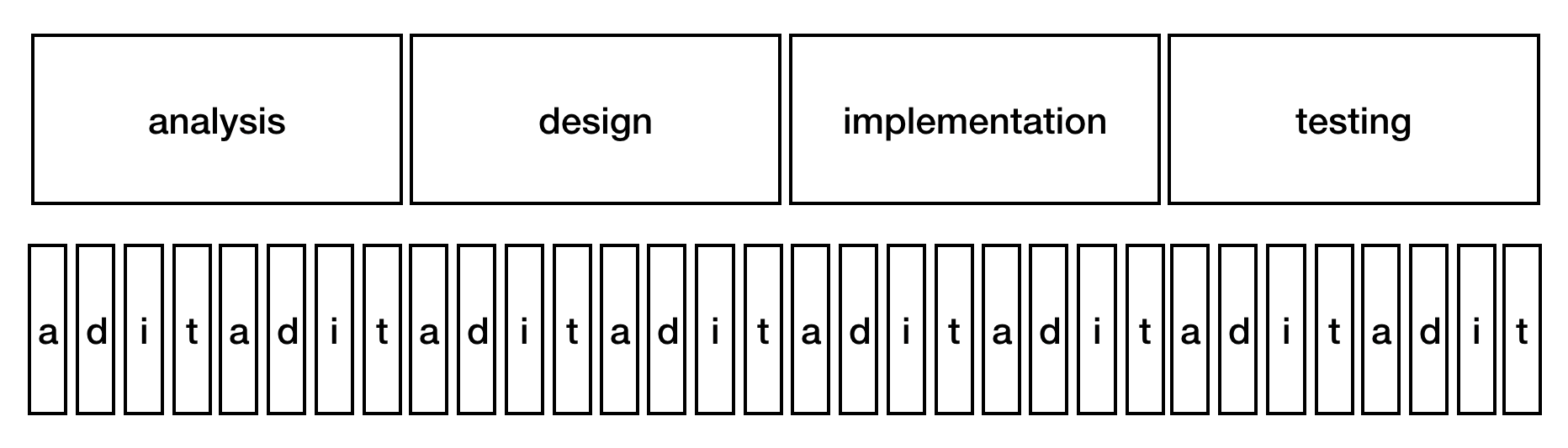

When I saw that diagram, I thought immediately of Extreme Programming. In particular, I thought of a diagram I once saw that distinguished XP from more traditional ways of building software in terms of how quickly it moved through the steps of the development life cycle. That image looked something like this:

If design is good, why not do it all the time? If testing is good, why not do it all the time, too?

I don't think that the similarity between these two images is an accident. It reflects one of XP's most important, if sometimes underappreciated, benefits: By interleaving short spurts of analysis, design, implementation, and testing, programmers strengthen their understanding of both the problem and the evolving code base. They develop stronger long-term memory associations with all phases of the project. Improved learning enables them to perform even more effectively deeper in the project, when these associations are more firmly in place.

Le Cunff offers a caveat to interleaved learning that also applies to XP: "Because the way it works benefits mostly our long-term retention, interleaving doesn't have the best immediate results." The benefits of XP, including more effective learning, accrue to teams that persist. Teams new to XP are sometimes frustrated by the small steps and seemingly narrow focus of their decisions. With a bit more experience, they become comfortable with the rhythm of development and see that their code base is more supple. They also begin to benefit from the more effective learning that interleaved practice provides.

~~~~

Image 1: This image comes from Le Cunff's article, linked above. It is by Anne-Laure Le Cunff, copyright Ness Labs 2019, and reproduced here with permission.

Image 2: I don't remember where I saw the image I hold in my memory, and a quick search through Extreme Programming Explained and Google's archives did not turn up anything like it. So I made my own. It is licensed CC BY-SA 4.0.