Session 17

Local Variables as Syntactic Abstraction

Opening Exercise: Let It Be

What are the values of these let expressions?

```racket
(let ((x 5)          ;; Exercise 1
      (y 6)
      (z 7))
  (- (+ x z) y))
```

```racket
(let ((x 13)         ;; Exercise 2
      (y (+ 6 x))
      (z x))
  (- (+ x z) y))
```

Puzzling Out These let Expressions

The second expression does not behave as we might expect from experience with previous languages. When we initialize y and z to values that depend on the value of x, we might expect that x to be the one in the immediately preceding binding, x = 13. That is how sequences of local variable declarations seem to work in other languages.

What is different here?

Last session, we learned that a Racket let expression does not create a sequence of declarations in that way. It is equivalent to applying a nameless lambda expression to the variables' values. When we translate the let expression into its equivalent lambda application, we see the difference:

```racket
((lambda (x y z)     ;; Exercise 2, translated
   (- (+ x z) y))
 13
 (+ 6 x)
 x)
```
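To see that the two forms agree, here is a small runnable sketch. As written, Exercise 2 refers to an x that has no binding outside the let, so Racket reports an error for it; the sketch assumes a hypothetical outer (define x 1) to give that x a value. It returns the three bindings as a list instead of the arithmetic result, because (- (+ x z) y) works out to 7 for any outer x, which would hide the difference.

```racket
#lang racket

;; Hypothetical outer binding, so that the x used in the
;; initializers is not unbound.
(define x 1)

;; Exercise 2 as written: the initializers (+ 6 x) and x are evaluated
;; outside the region of the new x, so they see the outer x.
(define via-let
  (let ((x 13)
        (y (+ 6 x))
        (z x))
    (list x y z)))

;; The same expression, translated into a lambda application.
;; The arguments are evaluated before the lambda's body is entered.
(define via-lambda
  ((lambda (x y z)
     (list x y z))
   13
   (+ 6 x)
   x))

via-let      ; => '(13 7 1)
via-lambda   ; => '(13 7 1)
```

Only the x inside the body is 13; y and z were computed from the outer x.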

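Translating the let expression into a lambda application makes the semantics of the let form clear: the region associated with the variables declared in a let is the body of the expression, not the whole expression. The initializers (+ 6 x) and x are arguments to the lambda, so they are evaluated outside its body, where the new x does not exist.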

This is an example of how knowing about syntactic abstractions can help you to understand why a language works the way it does. You might think that let should work some other way, but knowing that it is an abstraction of a lambda application lets you see why it cannot work any other way.

How can we initialize y and z using the value of the new binding for x?

We must initialize y and z within the region of x, that is, within the body of the let!

```racket
(let ((x 13))             ;; Exercise 2
  (let ((y (+ 6 x))       ;; -- with a nested let
        (z x))
    (- (+ x z) y)))
```

This ensures that the x referred to in binding y and z is the one we intend. The value is 26 - 19 = 7.
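A quick check of the nested version, as a sketch: it returns the three bindings along with the final value. No outer x is needed this time, because both initializers now sit inside the region of x.

```racket
#lang racket

;; Exercise 2 with a nested let. The inner initializers (+ 6 x) and x
;; are inside the body of the outer let, so they see x = 13.
(define nested-result
  (let ((x 13))
    (let ((y (+ 6 x))
          (z x))
      (list x y z (- (+ x z) y)))))

nested-result   ; => '(13 19 13 7)
```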

Can we solve the similar exercise given at the end of last session's notes in the same way? Here is the exercise:

```racket
(let ((x 13)         ;; Exercise 2
      (y (+ y x))
      (z x))
  (- (+ x z) y))
```

Rewritten with a nested let, we have...

```racket
(let ((x 13))
  (let ((y (+ y x))
        (z x))
    (- (+ x z) y)))
```

Now the references to x are okay, but notice that y is given a value that depends on y. The y in (+ y x) is still free!
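Here is a sketch of what that free y does. With no outer y, Racket reports y as unbound. The sketch assumes a hypothetical outer (define y 100); the y in (+ y x) then refers to that outer binding, not to the y being created.

```racket
#lang racket

;; Hypothetical outer binding for y. Without it, the y inside
;; (+ y x) below has no binding at all.
(define y 100)

(define result
  (let ((x 13))
    (let ((y (+ y x))   ; this y is the OUTER y (100), not the new one
          (z x))
      (list x y z (- (+ x z) y)))))

result   ; => '(13 113 13 -87)
```

Nesting fixed the references to x, but it cannot make a binding refer to itself.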

Where Are We?

Last session, we began to discuss the idea of syntactic abstractions, those features of a language that are convenient to have but that are not strictly essential to the language. From the programming language perspective, these constructs can complicate the process of interpreting a language. Part of our study of the design of programming languages is to identify which features are essential so that we can understand how our interpreters work.

Keep in mind that the existence of syntactic abstractions may be essential in terms of program readability and maintainability. Who would want to read, let alone write, a long program in which all local variables have been replaced with applications of anonymous lambdas? Or where we had to create named functions every time we wanted a local variable? Local variables are "not essential" only in the sense that our language processors can live without them. Language designers and implementers take advantage of this fact to write program interpreters that are more efficient and easier to maintain.

Syntactic abstractions are not limited to Racket or languages like it. Consider the Python list comprehension. Here is an example:

```python
doubled_numbers = [n * 2 for n in numbers]
```

which is syntactic sugar for a good old for loop:

```python
doubled_numbers = []
for n in numbers:
    doubled_numbers.append(n * 2)
```

Syntactic abstractions can become almost little languages in their own right. A Python list comprehension can do more. Consider this example:

```python
doubled_numbers = [n * 2 for n in numbers if n % 2 == 1]
```

It is sugar for:

```python
doubled_numbers = []
for n in numbers:
    if n % 2 == 1:
        doubled_numbers.append(n * 2)
```

And there's even more to list comprehensions... Read on in Python's documentation if you care to learn more.

Yesterday, Today, Tomorrow

Earlier in the semester, we learned that functions which take more than one argument are really syntactic sugar. They are not essential because we can curry a multi-argument function into a function that takes one argument and returns a function that expects the rest.
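As a reminder, here is a minimal sketch of currying in Racket. The names add and curried-add are hypothetical, chosen for illustration.

```racket
#lang racket

;; A function of two arguments.
(define (add x y)
  (+ x y))

;; Its curried form: a function of one argument that returns
;; a function expecting the rest.
(define curried-add
  (lambda (x)
    (lambda (y)
      (+ x y))))

(add 3 4)            ; => 7
((curried-add 3) 4)  ; => 7
```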

Last session, we began our study of another syntactic abstraction, local variables.

This session, we will make the idea of "local variable as syntactic abstraction" more concrete. Then we will see that functions can be local variables, too, and leave you to read about logical connectives and selection structures.

Local Variable as Abstraction

Recall this BNF description for Racket's let expression:

```
<let-expression> ::= (let <binding-list> <body>)
<binding-list>   ::= ()
                   | ( <binding> . <binding-list> )
<binding>        ::= (<var> <exp>)
```
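The grammar translates almost rule for rule into a recognizer. Here is a sketch; the names let-expression?, binding-list?, and binding? are hypothetical, and the sketch accepts any datum as an <exp> or <body>, checking only that each <var> is a symbol.

```racket
#lang racket

;; <let-expression> ::= (let <binding-list> <body>)
(define (let-expression? datum)
  (and (list? datum)
       (= (length datum) 3)
       (eq? (first datum) 'let)
       (binding-list? (second datum))))

;; <binding-list> ::= () | ( <binding> . <binding-list> )
(define (binding-list? datum)
  (or (null? datum)
      (and (pair? datum)
           (binding? (car datum))
           (binding-list? (cdr datum)))))

;; <binding> ::= (<var> <exp>)
(define (binding? datum)
  (and (list? datum)
       (= (length datum) 2)
       (symbol? (first datum))))

(let-expression? '(let ((x 5) (y 6)) (+ x y)))  ; => #t
(let-expression? '(let () 42))                   ; => #t
(let-expression? '(let (x 5) x))                 ; => #f
```

binding-list? follows the dotted-pair rule directly: the car must be a binding, and the cdr must be a smaller binding list.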

We learned how these expressions work by defining a translational semantics for let. A let expression of this form:

```
(let ((<var_1> <exp_1>)
      (<var_2> <exp_2>)
      ...
      (<var_n> <exp_n>))
  <body>)
```

is equivalent to this lambda application:

```
((lambda (<var_1> <var_2> ... <var_n>)
   <body>)
 <exp_1> <exp_2> ... <exp_n>)
```

... look at the equivalence in a code diagram!

I then made this claim:

In fact, some Scheme interpreters and compilers automatically translate a let expression into an equivalent lambda application whenever they see one!

Let's see how that's done.

First step: a one-level translator

Let's write a function (let->app exp) that takes as input a simple Racket let expression and returns an equivalent application. For example:

```racket
> (let->app '(let ((a 1) (b 2)) (+ (* a a) (* b b))))
((lambda (a b) (+ (* a a) (* b b))) 1 2)
```

Note that this translation does not manipulate the body of the expression. It only puts it into the correct position in an application. How can you access the variables in the let expression? Their values? The body?

... write the function together ... or look at the code ...
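If you want to check your version, here is one possible sketch of let->app. It assumes the input is a well-formed let of the form (let ((var exp) ...) body) with a single body expression, and it does no error checking. The bindings are the second element of the expression; mapping first over them gives the variables, mapping second gives their values, and the body is the third element.

```racket
#lang racket

;; One possible one-level translator (a sketch). Assumes exp is a
;; well-formed (let ((var exp) ...) body) with exactly one body form.
(define (let->app exp)
  (let ((bindings (second exp))   ; ((var exp) ...)
        (body     (third exp)))   ; copied as-is, never examined
    (cons (list 'lambda (map first bindings) body)  ; (lambda (var ...) body)
          (map second bindings))))                  ; followed by each exp

(let->app '(let ((a 1) (b 2)) (+ (* a a) (* b b))))
;; => '((lambda (a b) (+ (* a a) (* b b))) 1 2)

;; An inner let is copied into the body untranslated:
(let->app '(let ((x 13)) (let ((y (+ 6 x))) y)))
;; => '((lambda (x) (let ((y (+ 6 x))) y)) 13)
```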

This small function captures the essence of how a let expression works, and even what it means. It is useful for seeing the basic idea of a translational semantics.

However, it is not enough to handle every Racket expression. In our opening exercise, we saw that one let expression can be nested inside another. In fact, a let expression can be part of any Racket expression. So we need to look inside the body of the let, and inside the values of the variables, too!

Going All the Way: A Pre-Processor for let

Let's see how the entire process works by adding local variables to our little language as a syntactic abstraction, and then see how it really can make our lives easier.

- Add let to the language grammar.

- Create syntax procedures for let expressions. Examples:

  ```racket
  (define simple-let '(let (a b) (c a)))
  (define nested-let '(let (a b) (let (c (lambda (d) a)) (c a))))

  (let? nested-let)
  (let->var nested-let)
  (let->val nested-let)
  (let->body nested-let)

  (let? (let->body nested-let))
  (let->var (let->body nested-let))
  (let->val (let->body nested-let))
  (let->body (let->body nested-let))
  ```

- Translate a let expression into a lambda application:

  ```racket
  (preprocess simple-let)
  (preprocess nested-let)
  ```

  ... write the function together ...

  ... the result...

- Use our translator to show how other functions now work for code that contains a let expression, if we preprocess it first:

  ```racket
  (occurs-free? 'a (preprocess nested-let))

  (let ((core-exp (preprocess nested-let)))
    (occurs-free? 'b core-exp))
  ... (occurs-free? 'c core-exp)
  ... (occurs-free? 'd core-exp)
  ... (occurs-bound? 'd core-exp)
  ... (occurs-bound? 'a core-exp)
  ```
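Here is one way the steps above might come out, as a sketch rather than the code written in class. It assumes the little language is <exp> ::= <var> | (lambda (<var>) <exp>) | (<exp> <exp>), extended with the single-binding form (let (<var> <exp>) <exp>) used by simple-let and nested-let. let?, let->var, let->val, let->body, and preprocess are named in these notes; the other syntax procedures (varref?, lambda?, lambda->param, lambda->body, app?, app->proc, app->arg) are hypothetical names.

```racket
#lang racket

;; Core language (assumed):  <exp> ::= <var> | (lambda (<var>) <exp>) | (<exp> <exp>)
;; Abstraction added:        (let (<var> <exp>) <exp>)

(define simple-let '(let (a b) (c a)))
(define nested-let '(let (a b) (let (c (lambda (d) a)) (c a))))

;; Syntax procedures. varref?, lambda?, lambda->param, lambda->body,
;; app?, app->proc, and app->arg are hypothetical names.
(define (varref? exp) (symbol? exp))

(define (lambda? exp)
  (and (list? exp) (= (length exp) 3) (eq? (first exp) 'lambda)))
(define (lambda->param exp) (first (second exp)))
(define (lambda->body exp)  (third exp))

(define (let? exp)
  (and (list? exp) (= (length exp) 3) (eq? (first exp) 'let)))
(define (let->var exp)  (first (second exp)))
(define (let->val exp)  (second (second exp)))
(define (let->body exp) (third exp))

(define (app? exp)
  (and (list? exp) (= (length exp) 2)))
(define (app->proc exp) (first exp))
(define (app->arg exp)  (second exp))

;; Rewrite every let, at any depth, as a lambda application.
;; Unlike let->app, it recurs into the body AND into the value.
(define (preprocess exp)
  (cond ((varref? exp) exp)
        ((lambda? exp)
         (list 'lambda
               (list (lambda->param exp))
               (preprocess (lambda->body exp))))
        ((let? exp)
         (list (list 'lambda
                     (list (let->var exp))
                     (preprocess (let->body exp)))
               (preprocess (let->val exp))))
        ((app? exp)
         (list (preprocess (app->proc exp))
               (preprocess (app->arg exp))))
        (else (error 'preprocess "unknown expression: ~a" exp))))

(preprocess simple-let)
;; => '((lambda (a) (c a)) b)
(preprocess nested-let)
;; => '((lambda (a) ((lambda (c) (c a)) (lambda (d) a))) b)
```

Because every let disappears, nothing that runs after preprocess needs to know that let exists.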

Which is easier: writing a translator, or updating occurs-free? and occurs-bound? to handle let?

What if there are other functions that, like occurs-free? and occurs-bound?, operate over the language? An interpreter or compiler will also contain functions such as declared-vars, unused-var?, and free-vars, and each of them would need to learn about let. Now the weight of work strongly favors writing a pre-processor and keeping it up to date.

That's how syntactic abstraction and translational semantics can make language processors easier to write and manage.
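To make the comparison concrete, here is a sketch of occurs-free? and occurs-bound? in a common form for the core language, with no case for let. These particular definitions are assumptions; your versions from earlier in the course may differ in detail. The block writes out the preprocessed form of nested-let by hand so that it stands alone.

```racket
#lang racket

;; Assumed definitions for the core language only:
;;   <exp> ::= <var> | (lambda (<var>) <exp>) | (<exp> <exp>)
;; Neither function has a case for let.
(define (occurs-free? var exp)
  (cond ((symbol? exp) (eq? var exp))
        ((eq? (first exp) 'lambda)            ; (lambda (param) body)
         (and (not (eq? var (first (second exp))))
              (occurs-free? var (third exp))))
        (else                                  ; (proc arg)
         (or (occurs-free? var (first exp))
             (occurs-free? var (second exp))))))

(define (occurs-bound? var exp)
  (cond ((symbol? exp) #f)
        ((eq? (first exp) 'lambda)
         (or (occurs-bound? var (third exp))
             (and (eq? var (first (second exp)))
                  (occurs-free? var (third exp)))))
        (else
         (or (occurs-bound? var (first exp))
             (occurs-bound? var (second exp))))))

(define nested-let '(let (a b) (let (c (lambda (d) a)) (c a))))

;; (preprocess nested-let), written out by hand so this block stands alone.
(define core-exp '((lambda (a) ((lambda (c) (c a)) (lambda (d) a))) b))

(occurs-free? 'a core-exp)   ; => #f
(occurs-free? 'b core-exp)   ; => #t
(occurs-free? 'c core-exp)   ; => #f
(occurs-free? 'd core-exp)   ; => #f
(occurs-bound? 'd core-exp)  ; => #f
(occurs-bound? 'a core-exp)  ; => #t

;; Given the raw let instead, occurs-free? misreads it as an application:
(occurs-free? 'a nested-let) ; => #t, even though the let binds a
```

The two functions never changed; running preprocess first is what lets them give correct answers.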

Syntactic Abstraction and Language Processing

What have we done?

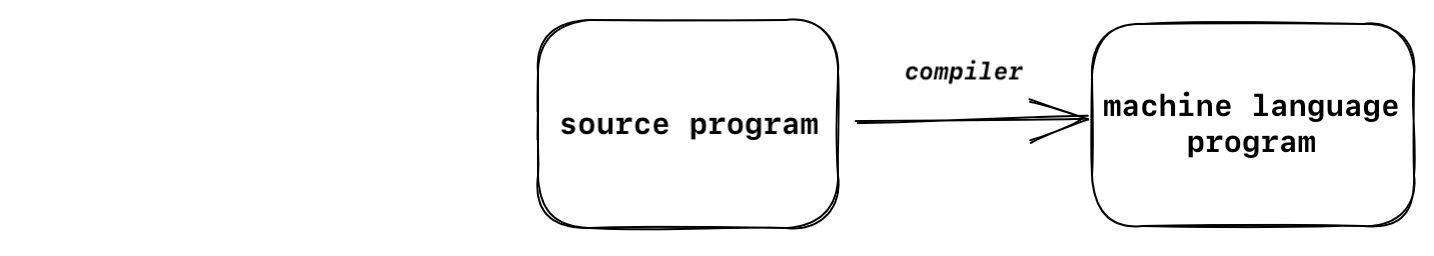

Before creating the preprocess function, compiling

a program worked like this:

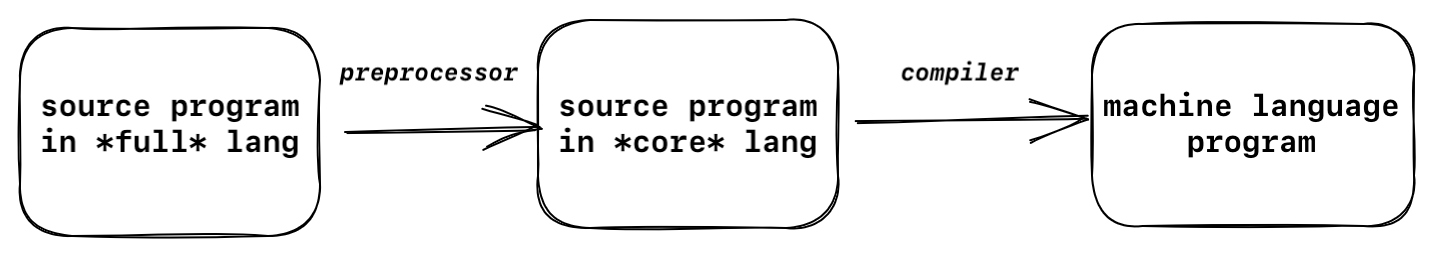

After we add the preprocess, compiling a program works like this:

How can syntactic abstraction make program processors more efficient and easier to maintain? When we pre-process a syntactic abstraction into an equivalent expression that the processor already knows about, we:

- eliminate the code for processing the abstraction, which would duplicate code that already exists in the processor, and

- make it so that the translated code benefits from any optimizations made by the language processor in handling the equivalent expression.

If we extend a language this way, then all of the existing tools that process the language will work on the preprocessed program, so we can use them to process programs in the extended language.

Some other things to consider:

- It is easier to write a preprocessor than to write or modify a compiler or interpreter.

- This approach makes it possible to extend a language without writing a compiler from scratch or extending an existing compiler!

We'll see how the second advantage is implemented in Racket later in this unit.

Pre-processing is an example of translating one program into an equivalent one that uses other features. It takes advantage of the idea of translational semantics, in which we define and understand a syntactic abstraction in terms of more primitive features.

Wrap Up

- Reading
  - Review these notes, especially the final section, Syntactic Abstraction and Language Processing.
  - Read this short section on using let to name local functions. Functions in Racket are named like any other value, so let can do the job!
  - Read this short section on how boolean operators are syntactic abstractions of if expressions.

- Homework
  - Homework 7 is available and due in one week.

- Quiz
  - I returned Quiz 2 at the end of class today.

- Note on Session 18
  - I have a two-day work retreat Thursday and Friday, so we will not meet at our usual time Thursday. I will post a video for the session that you can watch and work through. Watch the course home page for more details soon.