Session 18

Variable Reference and Scope

Opening Exercise: Let What?

What is the value of this let expression?

```racket
(let ((x 3) (y 4))
  (+ (let ((z 5)
           (x (* 3 y)))
       (+ (* x y) z))
     (let ((x 6)
           (w (- x y)))
       (+ (* x w) y))))
```

The challenge may seem both familiar and new. In the opening exercise last time, we saw a case where a variable did not mean what we thought it might, because the reference was free: the region of a local variable is the body of the let expression, which does not include the expressions that give the variables their values.

This time, though, that problem is turned inside out: we have a variable that cannot be seen in part of its own region, because another declaration hides it!

Keep this in mind as we tackle a seemingly unrelated problem.

Where Are We?

Last session, we dug deeper into the idea that a let expression is a syntactic abstraction. A let expression gives us a convenient way to write code that would otherwise have to be the application of a lambda. To demonstrate this, we wrote code to translate a let expression into the application of a lambda. In its simplest form, this is a matter of deconstructing the let expression and creating an app expression.

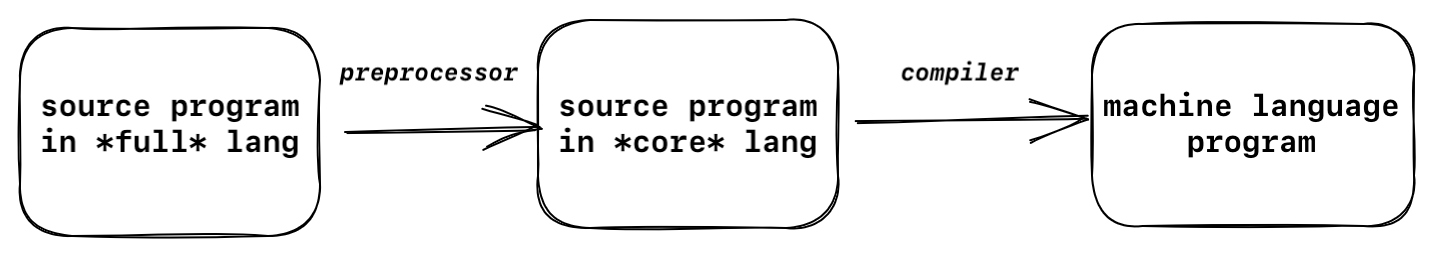
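For readers who want to try the idea at a REPL, here is a minimal sketch of the same translation. It assumes let expressions are plain quoted s-expressions rather than the syntax data types we built in class, and the name `let->app` is hypothetical. It pulls the variables and value expressions out of the bindings and rebuilds them as a lambda applied to the values.

```racket
#lang racket
;; Sketch only: works on quoted s-expressions, not on the course's
;; own syntax data types. let->app is a hypothetical name.

;; let->app : s-expression -> s-expression
;; Rewrites (let ((v e) ...) body) as ((lambda (v ...) body) e ...).
(define (let->app exp)
  (match exp
    [(list 'let (list (list vars vals) ...) body)
     ;; vars and vals are parallel lists pulled out of the bindings
     (cons (list 'lambda vars body) vals)]
    [_ (error 'let->app "not a let expression: ~a" exp)]))

(let->app '(let ((x 3) (y 4)) (+ x y)))
;; => '((lambda (x y) (+ x y)) 3 4)
```

This version translates only the outermost let; a let nested inside the body or the values is left alone.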

To process expressions from the entire language, though, we needed to write a preprocessor that

- translates sugar into its underlying form, and

- keeps all core expressions in their original form.

Most expressions in the language contain other expressions, so the preprocess function is recursive.

The preprocessor translates one expression in the language into another expression in the language. The new expression behaves exactly like the original but uses only the core features of the language.
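As a rough illustration of that recursion, here is a sketch of a preprocessor for a tiny language of quoted s-expressions. The grammar in the comment and the name `preprocess` are assumptions for this example, not the course's actual code. Sugar (let) is translated, core forms are rebuilt unchanged, and every subexpression is preprocessed along the way.

```racket
#lang racket
;; Sketch of a recursive preprocessor, assuming this small grammar:
;;   exp ::= symbol | number
;;         | (lambda (symbol ...) exp)        core
;;         | (exp exp ...)                    core (application)
;;         | (let ((symbol exp) ...) exp)     sugar
;; preprocess is a hypothetical name used for illustration.
(define (preprocess exp)
  (match exp
    ;; Sugar: let becomes the application of a lambda. The body and
    ;; the value expressions may contain sugar too, so recur on them.
    [(list 'let (list (list vars vals) ...) body)
     (cons (list 'lambda vars (preprocess body))
           (map preprocess vals))]
    ;; Core: keep the lambda, but preprocess its body.
    [(list 'lambda (list params ...) body)
     (list 'lambda params (preprocess body))]
    ;; Core: keep the application, but preprocess every part of it.
    [(? pair?) (map preprocess exp)]
    ;; Core: symbols and numbers are already in final form.
    [_ exp]))

(preprocess '(let ((x 3) (y 4)) (+ x y)))
;; => '((lambda (x y) (+ x y)) 3 4)
```

Running it on the opening exercise produces an expression with no let in it at all, which evaluates to the same value as the original.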

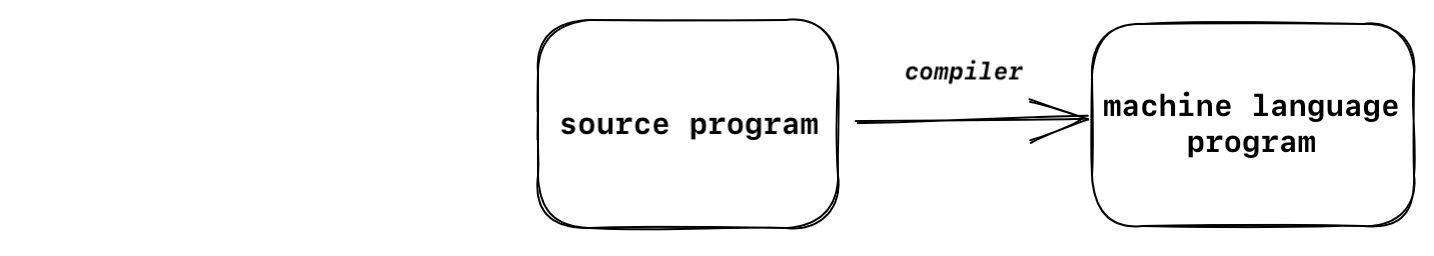

Notice how this changes the process of compiling a program. Before we created the preprocess function, compiling a program worked like this:

With the preprocessor, compiling a program works like this:

As you saw in the notes from last time, this technique offers many benefits to the people who write compilers and other language processors. These advantages make it possible for them to offer the benefits of syntactic sugar to those of us who write programs in the language!

This is the fundamental idea behind syntactic abstractions: those features of a language that are convenient to have but are not essential to the language. Over the last few weeks, we have learned that a number of standard language features are really syntactic abstractions of more primitive features, including:

- many conditional forms

- procedures that take more than one argument

- logical connectives

- local variables

- local functions
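Two items from that list can be sketched in a few lines of Racket. The names `add2`, `curried-add`, and `and->if` are hypothetical, chosen only for illustration: a two-argument procedure behaves like a one-argument procedure that returns another one-argument procedure, and a binary `and` can be rewritten as an `if`.

```racket
#lang racket
;; A procedure of two arguments...
(define add2 (lambda (a b) (+ a b)))
;; ...behaves like a procedure of one argument that returns another
;; one-argument procedure (currying).
(define curried-add (lambda (a) (lambda (b) (+ a b))))

;; A binary and can be rewritten using if: the result is e2 when e1
;; is not #f, and #f otherwise.
(define (and->if exp)
  (match exp
    [(list 'and e1 e2) (list 'if e1 e2 #f)]))

(add2 2 3)                          ; => 5
((curried-add 2) 3)                 ; => 5
(and->if '(and (> n 0) (< n 10)))   ; => '(if (> n 0) (< n 10) #f)
```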

After your reading for next time, you will see that even recursive local functions are sugar, though the translation process is not as simple as that for let expressions.

Today, we quickly set you up for that reading assignment and then return to the idea of a variable's scope and see how scope works in a block-structured language. This discussion is prelude to something more radical: the idea that variable names themselves are not necessary. They are syntactic abstractions!

A Limit on Local Functions

In the reading assignment for today, we saw that we can use a let expression to create a local function in Racket, because function names are like any other variable bindings:

```racket
(define invert
  (lambda (list-of-2-lists)
    (let ((swap (lambda (lst)
                  (list (second lst) (first lst)))))
      (map swap list-of-2-lists))))
```

Hurray! Racket gives us something unexpected for free, which follows from the design decision to make naming functions work like naming any other value. We have already taken advantage of this many times, whenever we pass a function name or value as an argument to another function. (You will see one in today's reading.)

As we've learned to write recursive programs, we have often found ourselves creating helper functions as a part of our programs. From now on, we will be able to create simple local functions whenever they help us. There are several software engineering advantages to creating local functions, as described last time.

Does this technique work for all functions?

Consider the case of (list-index target los). It returns the 0-based position of the first occurrence of target in los, or -1 if target does not occur in los:

```racket
> (list-index 'd '(a b c d e f d))
3
> (list-index 'z '(a b c d e f d))
-1
```

... live-code this quickly, or show the code pre-built.

To solve this problem, we need a third argument: the position of the current symbol in the list. So we make list-index an interface procedure and make our structurally-recursive function a helper.

We end up with functions that look something like this:

```racket
(define list-index
  (lambda (target los)
    (list-index-counted target los 0)))

(define list-index-counted
  (lambda (target los count)
    (if (null? los)
        -1
        (if (eq? target (first los))
            count
            (list-index-counted target (rest los) (add1 count))))))
```

list-index-counted exists only to serve list-index. No other function or programmer is likely ever to need it. This sounds like a perfect time to use a local function... But there is a problem. Do you see why? Your reading assignment for next time explores this case in more detail.

Quick Exercise: Let What?

```java
{ // Block 1
  int x = 4;
  int y = 0;
  { // Block 2
    int x = 3;
    int z = x + 1;
    System.out.println("x = " + x + " y = " + y + " z = " + z);
  }
  y = x + 1;
  System.out.println("x = " + x + " y = " + y);
}
```

Scope and Lexical Analysis

As we saw in Session 17, the region of a variable declaration is the part of a program where that variable declaration is seen. The term "region" is often used synonymously with "scope". If the scope of a variable can be determined at compile time (that is, statically), then we say that the language is statically or lexically scoped.

Is there a difference between the region of a variable and the scope of a variable? Yes. We will study the distinction in some detail later. For now, we can consider the region of our Racket identifiers as identical to their scope. These identifiers are, of course, parameter names and local variables.

If regions in a program can be nested inside of each other, then we say that a language is block structured. These regions are called blocks. If a variable in one block is not visible because it has been re-declared within a nested block, we say that the "inner" variable creates a hole in the scope of the "outer" variable. We also say that the inner variable declaration shadows the outer one.

We saw an example of this in today's opening exercise. The nested let expressions created holes in the region of the x declared by the main expression. Each inner x shadows the outer one.

This happens in other languages, too. Consider the snippet from our quick exercise above. The x declared in Block 2 shadows the x declared in Block 1. We can refer to y in Block 2, if we want, but we cannot refer to z in Block 1 outside of Block 2. Its region is limited to the inner block.

Sidebar. That example is only Java-like, because it is not legal Java. Java does not allow a local variable in a nested block to shadow a local variable declared in an enclosing block!

We can simulate this idea in Java, though, with a variable declared in a method that shadows an instance variable. See this simple test class. (In some contexts, we can declare a static block that lives within another block.)

Can we write a Racket program with a similar structure? Sure.

We can use let to create blocks, though we will need let* to initialize our inner block properly, so that the value of z is computed from the inner x rather than the outer one:

```racket
(let ((x 4)          ;; Block 1
      (y 0))
  (let* ((x 3)       ;; Block 2
         (z (+ x 1)))
    ...)
  ...)
```
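The skeleton above leaves its bodies elided. Here is a separate runnable sketch in the same spirit, assuming the bodies print the same values as the Java-like snippet; `run-blocks`, `z-with-let`, and `z-with-let*` are hypothetical names. The last two functions show why let* is needed: with plain let, the initializer for z lies outside the region of the inner x, so it sees the outer x.

```racket
#lang racket
(define (run-blocks)
  (let ((x 4)              ;; Block 1
        (y 0))
    (let* ((x 3)           ;; Block 2: let* so z's initializer sees this x
           (z (+ x 1)))
      (printf "x = ~a y = ~a z = ~a\n" x y z))
    ;; Back in Block 1: the inner x and z are out of scope here.
    (set! y (+ x 1))
    (printf "x = ~a y = ~a\n" x y)))

;; With let, (+ x 1) is evaluated outside the inner x's region.
(define (z-with-let)  (let ((x 4)) (let  ((x 3) (z (+ x 1))) z)))  ; z is 5
;; With let*, the inner x is already bound when z is initialized.
(define (z-with-let*) (let ((x 4)) (let* ((x 3) (z (+ x 1))) z)))  ; z is 4
```

Calling (run-blocks) prints x = 3 y = 0 z = 4 and then x = 4 y = 5, matching the Java-like snippet.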

In both the Java-like example and the Racket example, we can begin to see how the variable names themselves are unnecessary. Can we change the names? If so, what remains constant in how the variable bindings are determined? Each variable reference can be determined by what block the variable is declared in, along with the position of the declaration within the block.

This is the idea of lexical addressing.

Lexical Addresses

Consider this lambda expression:

```racket
(lambda (x y)        ;; Block 0
  ((lambda (a)       ;; Block 1
     (x (a y)))
   x))
```

Block 1 lies within Block 0. The reference to a in Block 1 is to the variable declared in that block. The references to x and y inside Block 1 are to variables declared in Block 0. In this sense, the variable references x and y are "deeper" than the reference a, because the corresponding declarations are one block farther away.

If we decided to change the names of the variables declared in Block 0 from x and y to, say, east and north, we would need to change the references to x and y as well. Whatever we call them, x or east refers to the first variable declared in the block, and y or north refers to the second.

With these ideas of depth and position, we can reason ourselves to an interesting conclusion: each variable reference can be determined uniquely by the block the variable is declared in and the position of that declaration within the block.

Let's make this concept more concrete.

The lexical address of a variable reference gives the depth of the reference from the block in which the variable was declared and the position of the variable's declaration in that block. A lexical address can take the form (v : d p), where

- v is the variable name,

- d is the depth of the reference from its declaration, and

- p is the position of the declaration in that block.

We will treat depth and position as zero-based counters. That is, the depth tells us how many block boundaries we must cross to get from a variable reference to its declaration, and the position tells us how many steps we need to take down the list of local declarations to find the declaration.

For example, in our lambda expression above:

```racket
(lambda (x y)        ;; Block 0
  ((lambda (a)       ;; Block 1
     (x (a y)))
   x))
```

The x in the last line is in Block 0 and refers to the parameter x declared in Block 0, which is the first declaration in that block. So the address of this x has depth 0 and position 0, or (x : 0 0).

The references to x and y in the third line are also to the parameters declared in Block 0. They appear in Block 1, but no declarations in that block shadow the original declarations. So the addresses of those references are (x : 1 0) and (y : 1 1), respectively.

Finally, the reference to a in the same line is to the formal parameter of Block 1, so its address is (a : 0 0).
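The depth-and-position reasoning in this worked example can be mechanized. Here is a sketch that annotates each bound reference with its lexical address, assuming expressions come from a small grammar of variables, single-body lambdas, and applications; `find-address` and `annotate` are hypothetical names. The environment is a list of parameter lists, innermost block first, so the depth is how far down that list we go and the position is the index within the block.

```racket
#lang racket
;; Assumed grammar:  exp ::= symbol | (lambda (symbol ...) exp) | (exp exp ...)

;; find-address returns (list depth position), or #f if var is free.
(define (find-address var env)
  (let loop ([blocks env] [depth 0])
    (cond
      [(null? blocks) #f]
      [(index-of (first blocks) var)
       => (lambda (position) (list depth position))]
      [else (loop (rest blocks) (add1 depth))])))

(define (annotate exp [env '()])
  (match exp
    [(? symbol? v)
     (match (find-address v env)
       [(list d p) (list v ': d p)]
       [#f v])]                       ; free references are left as-is
    ;; Entering a lambda pushes a new block onto the environment.
    [(list 'lambda (list params ...) body)
     (list 'lambda params (annotate body (cons params env)))]
    ;; An application: annotate each part in the same environment.
    [(? pair?) (map (lambda (e) (annotate e env)) exp)]
    [_ exp]))

(annotate '(lambda (x y) ((lambda (a) (x (a y))) x)))
;; => '(lambda (x y) ((lambda (a) ((x : 1 0) ((a : 0 0) (y : 1 1)))) (x : 0 0)))
```

Renaming x and y to east and north changes only the v part of each address; every d and p stays the same.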

Note that this idea is not specific to Racket, to a language with lambda expressions, or to a language with a copious number of parentheses. The lambda expression above is similar in form to this Java-like code:

```java
{ // Block 0
  Classname1 x = ...;
  Classname2 y = ...;
  { // Block 1
    Classname1 a = ...;
    x.doOneThing( a.doAnotherThing(y) );
  }
  return x;
}
```

Lexical Addresses Exercises

```racket
(lambda (f g)                        ;; Problem 1
  (lambda (x)
    (f (g x))))

((lambda (x) (x 3))                  ;; Problem 2
 (lambda (x) (* x x)))

(lambda (x)                          ;; Problem 3
  (lambda (y)
    ((lambda (x)
       (x y))
     x)))

(define x                            ;; Problem 4 ... sample usage:
  (lambda (x)                        ;; > (x '(1 2 3))
    (map (lambda (x) (add1 x)) x)))  ;; (2 3 4)
```

Removing Variable References

We could annotate the variable references in a lambda expression with each reference's lexical address. Consider the first lambda expression in the previous section:

```racket
(lambda (x y)
  ((lambda (a)
     ((x : 1 0) ((a : 0 0) (y : 1 1))))
   (x : 0 0)))
```

This sort of annotation can be useful to a program that manipulates this expression, because the variable names themselves are meaningless inside the machine. For example, a compiler needs to be able to compute the location of a referenced variable in memory so that it can write the addressing code into the assembly language it generates. A lexical address could be part of that computation.

But can we go even one step further and remove the variable references altogether? Let's see...

```racket
(lambda (x y)
  ((lambda (a)
     ((: 1 0) ((: 0 0) (: 1 1))))
   (: 0 0)))
```

Have we lost any information? Um... no! Each lexical address specifies exactly which formal parameter is referred to at each point in the code. Given a lexical address, we can look up the associated variable name.

Very cool! The code in blocks no longer refers to variables by name, but we can reconstruct the names if we need them.

As strange as this may sound, let's ask the next question: Can we eliminate the variable names themselves? Let's see. What happens if we replace the parameter list with a number that indicates the length of the list:

```racket
(lambda 2
  ((lambda 1
     ((: 1 0) ((: 0 0) (: 1 1))))
   (: 0 0)))
```
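The translation from the named form to this nameless form can itself be written as a short program. Here is a sketch under the same assumptions as before: variables, single-body lambdas, and applications only; `find-address` and `remove-names` are hypothetical names. Each parameter list becomes its length, and each bound reference becomes (: d p). Free references, such as primitives like +, keep their names.

```racket
#lang racket
;; Assumed grammar:  exp ::= symbol | (lambda (symbol ...) exp) | (exp exp ...)

;; An environment is a list of parameter lists, innermost block first.
(define (find-address var env)
  (let loop ([blocks env] [depth 0])
    (cond
      [(null? blocks) #f]
      [(index-of (first blocks) var)
       => (lambda (position) (list depth position))]
      [else (loop (rest blocks) (add1 depth))])))

(define (remove-names exp [env '()])
  (match exp
    [(? symbol? v)
     (match (find-address v env)
       [(list d p) (list ': d p)]
       [#f v])]
    ;; The parameter list is replaced by its length.
    [(list 'lambda (list params ...) body)
     (list 'lambda (length params) (remove-names body (cons params env)))]
    [(? pair?) (map (lambda (e) (remove-names e env)) exp)]
    [_ exp]))

(remove-names '(lambda (x y) ((lambda (a) (x (a y))) x)))
;; => '(lambda 2 ((lambda 1 ((: 1 0) ((: 0 0) (: 1 1)))) (: 0 0)))
```

Because only depths and positions survive, a consistently renamed version of the same expression produces exactly the same nameless form.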

Have we lost any information? Yes, but perhaps not, if we look at the program from a different perspective.

- We have indeed lost track of the variable names used by the programmer, which were created for human readers. This makes reading the code more difficult to do.

- But within a language processor, the names themselves are mere window dressing which take up space. The processing program can't use the names anyway; what it really needs is a pointer into a data structure of variable/value bindings. The lexical addresses serve this purpose just fine.

On this latter point: We have lost nothing. We can still compute the same answers that we were able to compute before.

The semantics of the expression, its meaning when executed, has been preserved. This must mean that variable names are syntactic sugar: we can translate any piece of code that uses names into a behaviorally-equivalent form that uses no variable names. Our examples here demonstrate that variable names really are a syntactic abstraction.
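To see that the nameless form still computes the same answers, here is a sketch of an evaluator that never looks at a variable name. It assumes nameless expressions built from numbers, a few free primitive symbols, (: d p) references, (lambda n exp), and applications; `eval-nameless` and `primitives` are hypothetical names. The run-time environment is a list of frames (vectors), innermost first, so a lexical address says exactly where to find a value: skip d frames, then take slot p.

```racket
#lang racket
;; Free symbols are looked up as primitives; everything else is nameless.
(define primitives (hash '+ + '- - '* *))

(define (eval-nameless exp env)
  (match exp
    [(? number?) exp]
    [(? symbol? prim) (hash-ref primitives prim)]
    ;; Skip d frames outward, then take the p-th value in that frame.
    [(list ': d p) (vector-ref (list-ref env d) p)]
    ;; A lambda needs only the number of arguments to expect.
    [(list 'lambda (? exact-nonnegative-integer? n) body)
     (lambda args
       (unless (= (length args) n)
         (error 'eval-nameless "expected ~a arguments, got ~a" n (length args)))
       (eval-nameless body (cons (list->vector args) env)))]
    [(list rator rands ...)
     (apply (eval-nameless rator env)
            (map (lambda (e) (eval-nameless e env)) rands))]))

;; Nameless version of ((lambda (x y) (+ (* x y) 1)) 3 4)
(eval-nameless '((lambda 2 (+ (* (: 0 0) (: 0 1)) 1)) 3 4) '())   ; => 13
```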

I remember my first encounter with this idea, an accident that happened when one of my program files became corrupted. It came in graduate school when I was learning to program in Smalltalk. I brought up a debugger on a block of code that had been compiled, only to find that all of my variable names and parameter names had been replaced with the generic names t1, t2, and so on.

```smalltalk
multiplyAndScale: t1 and: t2
    "multiplies the given numbers and returns the result times 4"
    | t3 |
    t3 := t1 * t2.
    ^t3 * 4
```

In order to show me my source code, the debugger had to re-construct the code from its bytecode-compiled form, in which all identifiers had been replaced with lexical addresses. It could not re-create my identifier names, so it just created a sequence of unique symbols to put in their places. Not too surprisingly, the code behaved just the same anyway.
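We can imitate what that debugger did with a small sketch that turns the nameless form back into named code by inventing fresh names t1, t2, and so on. It assumes nameless expressions built from (: d p) references, (lambda n exp), applications, and free symbols; `restore-names` is a hypothetical name, and this is not a claim about how any particular Smalltalk system works internally.

```racket
#lang racket
(define (restore-names exp)
  (define counter 0)
  ;; fresh-name! returns t1, then t2, and so on.
  (define (fresh-name!)
    (set! counter (add1 counter))
    (string->symbol (format "t~a" counter)))
  (let walk ([exp exp] [env '()])
    (match exp
      ;; A reference: fetch the invented name by depth and position.
      [(list ': d p) (list-ref (list-ref env d) p)]
      ;; A declaration: invent n names and push them as a new block.
      [(list 'lambda (? exact-nonnegative-integer? n) body)
       (let ([params (for/list ([i (in-range n)]) (fresh-name!))])
         (list 'lambda params (walk body (cons params env))))]
      ;; An application: restore each part in the same environment.
      [(? pair?) (map (lambda (e) (walk e env)) exp)]
      [_ exp])))

(restore-names '(lambda 2 ((lambda 1 ((: 1 0) ((: 0 0) (: 1 1)))) (: 0 0))))
;; => '(lambda (t1 t2) ((lambda (t3) (t1 (t3 t2))) t1))
```

The result is the same program as our original lambda expression with x, y, and a renamed, so it behaves just the same.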

Ironically, the idea that variable names are a syntactic abstraction demonstrates just how important identifier names can be to programmers. Imagine having to read and write expressions containing only lexical addresses, or randomly generated variable names... You may feel similarly disoriented while reading some of my code. I know I sometimes feel the same way reading yours. :-)

Replacing variable references with lexical addresses carries the "not-very-descriptive variable name" problem to its comic extreme. Even so, there is a lesson in it: code with poorly-named variables can begin to look like this pretty quickly to readers who are unfamiliar with your code, or who are otherwise unprepared to interpret the variable names you have chosen.

Use variable names that are as descriptive as possible when you write your code, for the benefit of human readers, knowing that your interpreter or compiler will eliminate them from the internal representation of your code.

Quick Question: Why do you think I use numbers in place of the variable declarations? What do these numbers help me do or know?

A Few Closing Exercises

```racket
(lambda 1                ;; Problem 1
  (lambda 1
    (: 1 0)))

(lambda (x)              ;; Problem 2
  (lambda (x)
    (: 1 0)))

(lambda 3                ;; Problem 3
  ((: 0 1) ((lambda 2
              ((: 0 0) (: 1 0) (: 0 1)))
            (: 0 2))))
```

Two Closing Notes

What are lexical addresses used for?

In this course, they help us to understand how our languages work, by exploring what is essential and what is not. Beyond this course, they can be used when implementing interpreters, compilers, and IDEs such as Eclipse. Syntactic analysis is an essential part of practical software engineering whenever code and structured data are involved.

Even variable names are syntactic sugar.

Let that sink in. It helps us to see why the list of three things every programming language has does not contain the concrete items we might expect to find on it. If not even variable names are essential, then what constitutes the core of a programming language really is different from what our previous experience may have led us to believe.

Wrap Up

- Reading

  - Review the ideas of variable references, scope, and lexical addresses from today's session.

  - Read about local recursive functions and the challenge they create.

  - Do the extra exercises in today's notes. Practice helps!

  - Several students asked about set functions that produce lists in a different order than the examples on the assignment. Remember: these functions are working with, and on, sets. Order doesn't matter. This creates a challenge for writing unit tests that check set equality. Check out this short reading on how to do that. It will introduce you to another set function and to a new feature of RackUnit.

- Homework

  - Homework 7 was due yesterday.

  - Homework 8 is available and due in one week.