October 28, 2006 8:05 PM

OOPSLA This and That

In addition to the several OOPSLA sessions I've already blogged about, there were a number of other fun or educational moments at the conference. Here are a few...

- Elisa Baniassad presented an intriguing Onward! talk called The Geography of Programming. She suggested that we might learn something about programming language design by considering the differences between Western and Eastern thought. Her motivation came from Richard Nisbett's The Geography of Thought: How Asians and Westerners Think Differently--And Why. One more book added to my must-read list...

- Partly in honor of OOPSLA stalwart John Vlissides, who passed away since OOPSLA'05, and partly in honor of Vlissides et al.'s seminal book Design Patterns, there was a GoF retrospective panel. I learned two bits of trivia... John's favorite patterns were flyweight (which made it into the book) and solitaire (which didn't). The oldest instance of a GoF pattern they found in a real system? Observer -- in Ivan Sutherland's SketchPad! Is anyone surprised that this pattern has been around that long, or that Sutherland discovered its use over 40 years ago? I'm not.

- On the last morning of the conference, a panel was scheduled on the marriage of XP and Scrum in industry. Apparently, though, before I arrived on the scene it had morphed into something more generally agile. While discussing agile practices, "Object Dave" Thomas admitted he believes that, contrary to what many agilists seem to imply, comments in code are useful. After all, "not all code can be read, being encrypted in Java or C++ as it is". But he then absolved his sin a bit by noting that the comment should be "structurally attached" to the code with which it belongs; that is a tool issue.

- Then, on the last afternoon of the conference, I listened in on the Young Guns panel, in which nearly a dozen computer scientists under the age of 0x0020 commented on the past, present, and future of objects and computing. One of these young researchers commented that scientists tend to make their great discoveries while still very young, because they don't yet know what's impossible. To popularize this wisdom, gadfly and moderator Brian Foote suggested a new motto for our community: "Embrace ignorance."

- During this session, it occurred to me that I am no longer a "young gun" myself, spending the last six days of my 0x0029th year at OOPSLA. This is part of how I try to stay "busy being born", and I look forward to it every year. I certainly don't feel like an old fogie, at least not often.

- Finally, as we were wrapping up the conference in the committee room after the annual ice cream social, I told Dick Gabriel that I would walk across the street to hear Guy Steele read a restaurant menu aloud. Maybe there is a little bit of hero worship going on here, but I always seem to learn something when Steele shares his thoughts on computing.

----

Another fine OOPSLA is in the books. The 2007 conference committee is already at work putting together next year's event, to be held in Montreal during the same week. Wish us wisdom and good fortune!

Posted by Eugene Wallingford | Permalink | Categories: Computing, Software Development, Teaching and Learning

October 28, 2006 7:36 PM

OOPSLA Day 3: Philip Wadler on Faith, Evolution, and Programming Languages

"Of course, the design should be object-oriented." Um. "Of course not. The design should be functional". Day 3's invited speaker, Philip Wadler, is a functional programming guy who might well hear the first statement here at OOPSLA, or almost anywhere out in a world where Java and OO have seeped through the software development culture, and almost as often he reacts with the second statement (if only in his mind). He has come to recognize that these aren't scientific statements or reactions; they are matters of faith. But faith and science are talking about different things. Shouldn't we make language and design choices on the basis of science? Perhaps, but we have a problem: we have not yet put our discipline on a solid enough scientific footing.

The conflict between faith and science in modern culture, such as on the issue of evolution, reminds Wadler of what he sees in the computing world. Programming language design over the last fifty years has been on an evolutionary roller coaster, with occasional advances injected into the path of languages growing out of the dominant languages of the previous generation. He came to OOPSLA in a spirit of multiculturalism, to be a member of a "broad church", hoping to help us see the source of his faith and to realize that we often have alternatives available when we face language and design decisions.

The prototypical faith choice that faces every programmer is static typing versus dynamic typing. In the current ecosystem, typing seems to have won out versus no typing, and static typing has usually had a strong upper hand over dynamic typing. Wadler reminded us that this choice goes back to the origins of our discipline, between the untyped lambda calculus of Alonzo Church and the typed calculus of Haskell Curry. (Church probably did not know he had a choice; I wonder how he would have decided if he had?)

Wadler then walked us through his evolution as a programming languages researcher, and taught us a little history on the way.

Church: The Origins of Faith

Symbolic logic was largely the product of the 19th century mathematician Gottlob Frege. But Wadler traces the source of his programming faith to the German logician Gerhard Gentzen (1909-1945). Gentzen followed in the footsteps of Frege, both as a philosopher of symbolic logic and as an anti-Semite. Wadler must look past Gentzen's personal shortcomings to appreciate his intellectual contribution. Gentzen developed the idea of natural deduction and proof rules.

(Wadler showed us a page of inference rules using the notation of mathematical logic, and then asked for a show of hands to see if we understood the ideas and the notation on his slides. On his second question, enough of the audience indicated uncertainty that he slowed down to explain more. He said that he didn't mind the diversion: "It's a lovely story.")

Next he showed the basics of simplifying proofs -- "great stuff", he told us, "at least as important as the calculus", something man had searched for thousands of years. Wadler's love for his faith was evident in the words he chose and the conviction with which he said them.

Next came Alonzo Church, who did his work after Gentzen but still in the first half of the 20th century. Church gave us the lambda calculus, from which the typed lambda calculus was "completely obvious" -- in hindsight. The typed lambda calculus was all we needed to make the connection between logic and programming: a program is a proof, and a type is a proposition. This equivalence is demonstrated in the Curry-Howard isomorphism, named for the logician and computer scientist, respectively, who made the connection explicit. In Wadler's view, this isomorphism predicts that logicians and computer scientists will develop many of the same ideas independently, discovered from opposite sides of the divide.

This idea, that logic and programming are equivalent, is universal. In the movie Independence Day, the good guys defeat the alien invaders by injecting a virus written in C into their computer system. The aliens might not have known the C programming language, and thus been vulnerable on that front, but they would have to have known the lambda calculus!

Haskell: Type Classes

The Hindley-Milner algorithm is named for another logician/computer scientist pair that made the next advance in this domain. They showed that even without type annotations an automated system can deduce the most general data types that make the program execute. The algorithm is both correct and complete. Wadler so wanted to show us how important and how beautiful this idea is that he took a step to the side of his podium and jumped up and down!

Much of Wadler's renown in the programming language community derives from his seminal contributions to Haskell, a pure functional language based on the Curry-Howard isomorphism and the Hindley-Milner algorithm. Haskell implements these ideas in the form of type classes. Wadler introduced this idea into the development of Haskell, but he humbly credited others for doing the hard work to make things work.

Java: Adding Generics

Java 1.4 was in many ways too simple. We had to use a List of some sort for almost everything, in order to have polymorphic structures. Trying to add C++-style templates threatened to make things only worse. What the Java team needed was... the lambda calculus!

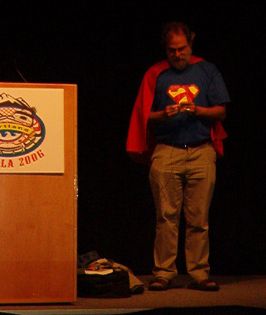

(At this moment, Wadler stopped his talk Superman-style and took off his business suit to reveal his Lambda-man outfit. The crowd responded with hearty applause!)

Java generics have the same syntax as C++, but different semantics. (He added parenthetically that Java generics "have semantics".) The generics are merely syntactic sugar, rewritten into older Java in a technique called "erasure". The rewrite produces the same byte codes that a programmer's own hand-written Java might. Much was written about this language addition both before and after it was made to the Java specification, and I don't want to get into that discussion. But, as Wadler notes, this approach supports the evolution of the language in a smooth way, consistent with existing Java practice. Java generics also bear more than a passing resemblance to type classes, which means that they could evolve into something different -- and more.

Links: Reconciliation

Web applications typically consist of three tiers: the browser, the server, and the database. All are typically programmed in different languages, such as HTML, CSS, JavaScript, Perl, and SQL. Wadler's newest language, Links, is intended to be one language used for all three tiers. It compiles to SQL for running directly against a database. Links is similar to the similar-sounding LINQ, a dynamic query language developed at Microsoft. The similarity is no coincidence -- one of its architects, Erik Meijer, came from the Haskell community. Again, type classes figure prominently in Links. Programmers in the OO community can think of them in an OO way with no loss of understanding. But they may want to broaden their faith to include something more.

Wadler closed his talk by returning to the themes with which he began: faith, evolution, and multiculturalism. He viewed the OOPSLA conference committee's inviting him to speak as a strong ecumenical step. "Faith is well and good", but he would like for computer science to make inroads helping us to make better decisions about language design and use. Languages like Links, implemented with different features and used in experiments, might help.

October 26, 2006 4:28 PM

OOPSLA Day 2: Jim Waldo "On System Design"

Last year, OOPSLA introduced another new track called Essays. This track shares a motivation with another recent introduction, Onward!, in providing an avenue for people to write and present important ideas that cannot find a home in the research-oriented technical program of the usual academic conference. But not all advances are in the form of novelty, of results from narrowly-defined scientific experiments. Some empirical results are the fruit of experience, reflection, and writing. The Essays track offers an avenue for sharing this sort of learning. The author writes an essay in the spirit of Montaigne and Bacon, using the writing to work out an understanding of his experience. He then presents the essay to the OOPSLA audience, followed by a thoughtful response from a knowledgeable person who has read the essay and thought about the ideas. (Essays crossed my path in a different arena last week, when I blogged a bit on the idea of blog as essay.)

Jim Waldo, a distinguished engineer at Sun, presented the first essay of OOPSLA 2006, titled "On System Design". He reflected on his many years as a software developer and lead, trying to get a handle on what he now believes about the design of software.

What is a "system"? To Waldo, it is not "just a program" in the sense he thinks is meant by the postmodern programming crowd, but a collection of programs. It exists at many levels of scale that must be created and related; hence the need for us to define and manage abstractions.

Software design is a craft, in the classical sense. Many of Waldo's engineer friends are appalled at what software engineers think of as engineering (essentially the application of patterns to roll out product in a reliable, replicable way) because what engineers really do involves a lot of intuition and craft.

Software design is about technique, not facts. Learning technique takes time, because it requires trial and error and criticism. It requires patience -- and faith.

Waldo paraphrased Grady Booch as having said that the best benefit of the Rational toolset was that it gave developers "a way to look like they were doing something while they had time to think".

The traditional way to learn system design is via apprenticeship. Waldo usually asks designers he respects who they apprenticed with. At first he feared that at least a few would look at him oddly, not understanding the question. But he was surprised to find that every person answered without batting an eye. They all not only understood the question but had an immediate answer. He was also surprised to hear the same few names over and over. This may reflect Waldo moving in particular circles, or only a small set of master software developers out there!

In recent years, Waldo has despaired of the lack of time, patience, and faith shown in industry for developing developers. Is all lost? No. In reflecting on this topic and discussing with readers of his early drafts, Waldo sees hope in two parts of the software world: open source and extreme programming.

Consider open source. It has a built-in meritocracy, with masters at the top of the pyramid, controlling the growth and design of their systems. New developers learn from example -- the full source of the system being built. Developers face real criticism and have the time and opportunity to learn and improve.

Consider extreme programming. Waldo is not a fan of the agile approaches and doesn't think that the features on which they are sold are where they offer most. It isn't the illusion of short-term cycles or of the incrementalism that grows a big ball of mud which give him hope. In reality, the agile approaches are based in a communal process that builds systems over time, giving people the time to think and share, mentor and learn. Criticism is built into the process. The system is an open, growing example.

Waldo concludes that we can't teach system design in a class. As an adjunct professor, he believes that system design skills aren't a curriculum component but a curricular outcome. Brian Marick, the discussant on Waldo's essay, took a cynical turn: No one should be allowed to teach students system design if they haven't been a committer to a large open-source project. (Presumably, having had experience building "real" big systems in another context would suffice.) More seriously, Marick suggested that it is only for recent historical reasons that we would turn to academia to solve the problem of producing software designers.

I've long been a proponent of apprenticeship as a way of learning to program, but Waldo is right that doing this as a part of the typical university structure is hard, if not impossible. We heard about a short-lived attempt to do this at last year's OOPSLA, but a lot of work remains. Perhaps if more people like Waldo, not just the more provocative folks at OOPSLA, start talking openly about this we might be able to make some progress.

Bonus reading reference: Waldo is trained more broadly as a philosopher, and made perhaps a surprising recommendation for a great document on the act of designing a new sort of large system: The Federalist Papers. This recommendation is a beautiful example of the value in a broad education. The Federalist Papers are often taught in American political science courses, but from a different perspective. A computer scientist or other thinker about the design of systems can open a whole new vista on this sort of document. Here's a neat idea: a system design course team taught by a computer scientist and a political scientist, with The Federalist Papers as a major reading!

Now, how to make system design as a skill an inextricable element of all our courses, so that an outcome of our major is that students know the technique? ("Know how", not "know that".)

October 26, 2006 3:46 PM

OOPSLA Day 2: Guy Steele on Fortress

The first two sentences of Guy Steele's OOPSLA 2006 keynote this morning were eerily reminiscent of his justly famous OOPSLA 1998 invited talk Growing a Language. I'm pretty sure that, for those few seconds, he used only one-syllable words!

This talk, A Growable Language, was related to that talk, but in a more practical sense. It applied the spirit and ideas expressed in that talk to a new language. His team at Sun is designing Fortress, a "growable language" with the motto "To Do for Fortran What Java Did for C".

The aim of Fortress is to support high-performance scientific and engineering programming without carrying forward the historical accidents of Fortran. Among the additions to Fortran will be extensive libraries, including for networking, a security model, type safety, dynamic compilation (to enable the optimization of a running program), multithreading, and platform independence. The project is being funded by DARPA with the goal of improving programmer productivity for writing scientific and engineering applications -- to reduce the time between when a programmer receives a problem and when the programmer delivers the answer, rather than focus solely on the speed of the compiler or executable. Those are important, too, but we are shortsighted in thinking that they are the only forms of speed that matter. (Other DARPA projects in this vein are the X10 language from IBM and the Chapel language from Cray.)

Given that even desktop computers are moving toward multicore chips, this sort of project offers potential value beyond the scientific programming community.

The key ideas behind the Fortress project are threefold:

- Don't build a language; grow it piecemeal.

- Create a programming notation that is more like the mathematical notation that this programmer community uses.

- Make parallelism the default way of thinking.

Steele reminded his audience of the motivation for growing a language from his first talk: If you plan for a language, and then design it, then build it, you will probably miss the optimal window of opportunity for the language. One of the guiding questions of the Fortress project is, Will designing for the growth of a language and its user community change the technical decisions the team makes or, more importantly, the way it makes them?

One of the first technical questions the team faced was what set of primitive data types to build into the language. Integer and float -- but what sizes? Data aggregates? Quaternions, octonions? Physical units such as meter and kilogram? He "might say 'yes' to all of them, but he must say no to some of them." Which ones -- and why?

The strategy of the team is to, wherever possible, add a desired feature via a library -- and to give library designers substantial control over both the semantics and the syntax of the library. The result is a two-level language design: a set of features to support library designers and a set of features to support application programmers. The former have turned out to be quite object-oriented, while the latter are not object-oriented at all -- something of a surprise to the team.

At this time, the language defines some very cool types in libraries: lists, vectors, sets, maps (with better, more math-like notation), matrices and multidimensional vectors, and units of measurement. The language also offers as a feature mathematical typography, using a wiki-style mark-up to denote Unicode characters beyond what's available on the ASCII keyboard.

In the old model for designing a language, the designers

- study applications

- add language features to support application developers

In the new model, the designers

- study applications

- add language features to support library designers in creating the desired features

- let library designers create a library that supports application developers

At a strategic level, the Fortress team wants to avoid creating a monolithic "standard library", even when taking into account the user-defined libraries created by a single team or by many. Their idea is instead to treat libraries as replaceable components, perhaps with different versions. Steele says that Fortress effectively has make and svn built into its toolset!

I can just hear some of my old-school colleagues decrying this "obvious bloat", which must surely degrade the language's performance. Steele and his colleagues have worked hard to make abstraction efficient in a way that surpasses many of today's languages, via aggressive static and dynamic optimization. We OO programmers have come to accept that our environments can offer decent efficiency while still having features that make us more productive. The challenge facing Fortress is to sell this mindset to C and Fortran programmers with habits of 10, 20, even 40 years thinking that you have to avoid procedure calls and abstract data types in order to ensure optimal performance.

The first category of features in Fortress is intended to support library developers. The list is an impressive integration of ideas from many corners of the programming language community, including first-class types, traits and trait descriptors (where, comprise, exclude), multiple inheritance of code but not fields, and type contracts. In this part of the language definition, knowledge that used to be buried in the compiler is brought out explicitly into code where it can be examined by programmers, reasoned over by the type inference system, and used by library designers. But these features are not intended for use by application programmers.

In order to support application developers, Steele and his team watched (scientific) programmers scribble on their white boards and then tried to convert as much of what they saw as possible into their language. For example, Fortress takes advantage of subtle whitespace cues, as in phrases such as

Note the four different uses of the vertical bar, disambiguated in part by the whitespace in the expression.

The wired-in syntax of Fortress consists of some standard notation from programming and math:

- () for grouping

- , for separating values in a tuple

- ; for separating statements on a line

- . for selecting fields and methods

- conservative, traditional rules of precedence

Any other operator can be defined as infix, prefix, or postfix. For example, ! is defined as a postfix operator for factorial. Similarly, juxtaposition is a binary operator, one which can be defined by the library designer for her own types. Even nicer for the scientific programmer, the compiler knows that the juxtaposition of functions is itself a function (composition!).

The syntax of Fortress is rich and consistent with how scientific programmers think. But they don't think much about "data types", and Fortress supports that, too. The goal is for library designers to think about types a lot, but application programmers should be able to do their thing with type inference filling in most of the type information.

Finally, scientists, engineers, and mathematicians use particular textual conventions -- fonts, characters, layout -- to communicate. Fortress allows programmers to post-process their code into a beautiful mathematical presentation. Of course, this idea and even its implementation are not new, but the question for the Fortress team was what it would be like if a language were designed with this downstream presentation as the primary mode of presentation.

The last section of Steele's talk looked a bit more like a POPL or ICFP paper, as he explained the theoretical foundations underlying Fortress's biggest challenge: mediating the language's abstractions down to efficient executable code for parallel scientific computation. Steele asserted that parallel programming is not a feature but rather a pragmatic compromise. Programmers do not think naturally in parallel and so need language support. Fortress is an experiment in making parallelism the default mode of computation.

Steele's example focused on the loop, which in most languages conflates two ideas: "do this statement (or block) multiple times" and "do things in this order". In Fortress, the loop itself means only the former; by default its iterations can be parallelized. In order to force sequencing, the programmer modifies the interval of the loop (the range of values that "counts" the loop) with the seq operator. So, rather than annotate code to get parallel compilation, we must annotate to get sequential compilation.
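
The split between "do this many times" and "do this in order" can be sketched outside Fortress. The Python below is only an analogy, not Fortress syntax: `parallel_for` and `seq_for` are hypothetical helper names, and a thread pool is an assumed stand-in for whatever scheduling Fortress really does.

```python
from concurrent.futures import ThreadPoolExecutor


def parallel_for(indices, body):
    """Run body(i) for every i, with no promise about execution order."""
    with ThreadPoolExecutor() as pool:
        # list() waits for every task and re-raises any exception from one
        list(pool.map(body, indices))


def seq_for(indices, body):
    """Run body(i) strictly in index order (the analogue of asking for seq)."""
    for i in indices:
        body(i)


# Independent iterations: each one writes its own slot, so order is irrelevant.
squares = [0] * 8


def store_square(i):
    squares[i] = i * i


parallel_for(range(8), store_square)

# Order-dependent iterations: a running total needs the sequential form.
running = []


def append_running_total(i):
    previous = running[-1] if running else 0
    running.append(previous + i)


seq_for(range(1, 6), append_running_total)
```

The first loop body is safe to run in any order because no iteration reads what another writes. The second reads the result of the previous iteration, which is exactly the case where a programmer would have to ask for sequencing.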

Fortress uses the idea of generators and reducers -- functions that produce and manipulate, respectively, data structures like sequences and trees -- as the basis of the program transformations from Fortress source code down to executable code. There are many implementations for these generators and reducers, some that are sequential and some that are not.
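
A rough way to see why this pairing matters for parallelism is a reduction done by recursive halving. This is a sketch in Python under my own assumptions, not the Fortress transformation itself: `tree_reduce` and `sequential_reduce` are hypothetical names, and the list slicing plays the role of a generator that hands out independent pieces of the data.

```python
from functools import reduce
import operator


def tree_reduce(op, identity, items):
    """Reduce items by recursive halving.

    The two halves never depend on each other, so separate workers
    could evaluate them; here they simply run one after the other.
    """
    if not items:
        return identity
    if len(items) == 1:
        return items[0]
    mid = len(items) // 2
    left = tree_reduce(op, identity, items[:mid])
    right = tree_reduce(op, identity, items[mid:])
    return op(left, right)


def sequential_reduce(op, identity, items):
    """Left-to-right reduction, the order an ordinary loop imposes."""
    return reduce(op, items, identity)


data = list(range(1, 11))

# Addition is associative, so both strategies agree.
tree_sum = tree_reduce(operator.add, 0, data)
seq_sum = sequential_reduce(operator.add, 0, data)

# Subtraction is not associative, so regrouping changes the answer.
tree_diff = tree_reduce(operator.sub, 0, data)
seq_diff = sequential_reduce(operator.sub, 0, data)
```

When the combining operator is associative, the tree-shaped version is free to regroup the work without changing the result. When it is not, only the sequential grouping gives the answer the programmer wrote down.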

From here Steele made a "deep dive" into how generators and reducers are used to implement parallelism efficiently. That discussion is way beyond what I can write here. Besides, I will have to study the transformations more closely before I can explain them well.

As Steele wrapped up, he reiterated The Big Idea that guides the Fortress team: to expose algorithm and design decisions in libraries rather than bury them in the compiler -- but to bury them in the libraries rather than expose them in application code. It's an experiment that many of us are eager to see run.

One question from the crowd zeroed in on the danger of dialect. When library designers are able to create such powerful extensions, with different syntactic notations, isn't there a danger that different libraries will implement similar ideas (or different ideas) incompatibly? Yes, Steele acknowledged, that is a real danger. He hopes that the Fortress community will grow with library designers thinking of themselves as language designers and so exercise restraint in the extensions they make, and work together to create community standards.

I also learned about a new idea that I need to read about... the BOOM hierarchy. My memory is vague, but the discussion involved considering whether a particular operation -- which, I can't remember -- is associative, commutative, and idempotent. There are, of course, eight possible combinations of these features, four of which are meaningful (tree, list, bag/multiset, and set). One corresponds to an idea that Steele termed a "mobile", and the rest are, in his terms, "weird". I gotta read more!
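
The four familiar structures can be told apart by which of the three laws their "join" operation obeys. Here is a small Python sketch that checks the laws on a handful of sample values. The helper names and the choice of samples are mine, and passing a check on samples is evidence rather than proof.

```python
from collections import Counter
from itertools import product
import operator


def is_associative(op, samples):
    return all(op(op(a, b), c) == op(a, op(b, c))
               for a, b, c in product(samples, repeat=3))


def is_commutative(op, samples):
    return all(op(a, b) == op(b, a)
               for a, b in product(samples, repeat=2))


def is_idempotent(op, samples):
    return all(op(a, a) == a for a in samples)


def laws(op, samples):
    """Return (associative, commutative, idempotent) for op on samples."""
    return (is_associative(op, samples),
            is_commutative(op, samples),
            is_idempotent(op, samples))


def tree_join(a, b):
    # Binary trees as nested tuples: joining two trees makes a new node.
    return (a, b)


results = {
    "tree": laws(tree_join, ["x", "y", ("x", "y")]),
    # List concatenation
    "list": laws(operator.add, [[1], [2], [1, 2]]),
    # Counter addition sums multiplicities, which models bag union
    "bag": laws(operator.add, [Counter("a"), Counter("b"), Counter("ab")]),
    # Set union
    "set": laws(operator.or_,
                [frozenset("a"), frozenset("b"), frozenset("ab")]),
}
```

Reading `results` from top to bottom, each structure picks up one more law than the one before it: trees obey none, lists add associativity, bags add commutativity, and sets add idempotence.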

October 25, 2006 2:13 PM

OOPSLA Day 1: Gabriel and Goldman on Conscientious Software

The first episode of Onward! was a tag team presentation by Ron Goldman and Dick Gabriel of their paper Conscientious Software. The paper is, of course, in the proceedings and so the ACM Digital Library, but the presentation itself was performance art. Ron gave his part of the talk in a traditional academic style, dressed as casually as most of the folks in the audience. Dick presented an outrageous bit of theater, backed by a suitable rock soundtrack, and so dressed outrageously (for him) in a suit.

In the "serious" part of the talk, Ron spoke about complexity and scale, particularly in biology. His discussion of protein, DNA, and replication soon turned to how biological systems suffer damage daily to their DNA as a natural part of their lifecycle. They also manage to repair themselves, to return to equilibrium. These systems, Ron said, "pay their dues" for self-repair. Later he discussed chemotaxis in E. coli, wherein "organisms direct their movements according to certain chemicals in their environment". This sort of coordinated but decentralized action occurs not only among a bacterium's flagella but also among termites as they build a system, and elephants as they vocalize across miles of open space, and among chimpanzees as they pass emotional state via facial expression.

We have a lot to learn from living things as we write our programs.

What about the un-"serious" part of the talk? Dick acted out a set of vignettes under title slides such as "Thirteen on Visibility", "Continuous (Re)Design", "Requisite Variety", and "What We Can Build".

His first act constructed a syllogism, drawing on the theme that the impossible is famously difficult. Perfect understanding requires abstraction, which is the ability to see the simple truth. Abstraction ignores the irrelevant. Hence abstraction requires ignorance. Therefore, perfect understanding requires ignorance.

In later acts, Dick ended up sitting for a while, first listening to his own address as part of the recorded soundtrack and then carrying on a dialogue with his alter ego, which spoke in a somewhat ominous Darth Vader-ized voice in counterpoint. The alter ego espoused a standard technological view of design and modularity, of reusable components with function and interface. This left Gabriel himself to embody a view centered on organic growth and complexity -- of constant repair and construction.

Dick's talk considered Levittown and the intention of "designed unpredictability", even if inconvenient, such as the placement of the bathroom far from the master bedroom. In Levittown, the 'formal cause' (see my notes on Brenda Laurel's keynote from the preceding session) lay far outside the "users", in Levitt's vision of what suburban life should be. But today Levittown is much different than designed; it is lived-in, a multi-class, multi-ethnic community that bears "the complexity of age".

On requisite variety, Dick started with a list of ideas (including CLOS, Smalltalk, Self, Oaklisp, ...) disappearing one by one to the background music of Fort Minor's Where'd You Go.

The centerpiece of Gabriel's part of the talk followed a slide that read

(google it)

on

"Unconventional Design"

He described an experiment in the artificial evolution of electronic circuits. The results were inefficient to our eyes, but they were correct and carried certain advantages we might not expect from a human-designed solution. The result was labeled "... magical ... unexpected ...". This sort of system building is reminiscent of what neural networks promise in the world of AI, an ability to create (intelligent) systems without having to understand the solution at all scales of abstraction. For his parallel, Dick didn't refer to neural nets but to cities -- they are sometimes designed; their growth is usually governed; and they are built (and grow) from modules: a network of streets, subways, sewers.

Dick closed his talk with a vignette whose opening slide read "Can We Get There From Here" (with a graphical flourish I can't replicate here). The coming together of Ron's and Dick's threads suggests one way to try: find inspiration in biology.

October 24, 2006 11:31 PM

OOPSLA Day 1: Brenda Laurel on Designed Animism

Brenda Laurel is well known in computing, especially in the computer-human interaction community, for her books The Art of Human-Computer Interface Design and the iconic Computers as Theatre. I have felt a personal connection to her for a few years, since an OOPSLA a few years ago when I bought Brenda's Utopian Entrepreneur, which describes her part in starting Purple Moon, a software company to produce empowering computer games for young girls. That sense of connection grew this morning as I prepared this article, when I learned that Brenda's mom is from Middletown, Indiana, less than an hour from my birthplace, and just off the road I drove so many times between my last Hoosier hometown and my university.

Laurel opened this year's OOPSLA as the Onward! keynote speaker, with a talk titled, "designed animism: poetics for a new world". Like many OOPSLA keynotes, this one covered a lot of ground that was new to me, and I can remember only a bit -- plus what I wrote down in real time.

These days, Laurel's interests lie in pervasive, ambient computing. (She recently gave a talk much like this one at UbiComp 2006.) Unlike most folks in that community, her goal is not ubiquitous computing as primarily utilitarian, with its issues of centralized control, privacy, and trust. Her interest is in pleasure. She self-effacingly attributed this move to the design tactic of "finding the void", the less populated portion of the design space, but she need not apologize; creating artifacts and spaces for human enjoyment is a noble goal -- a necessary part of our charter -- in its own right. In particular, Brenda is interested in the design of games in which real people are characters at play.

(Aside: One of Brenda's earliest slides showed this painting, "Dutch Windmill Near Amsterdam" by Owen Merton (1919). In finding the image I learned that Merton was the father of Thomas Merton, the Buddhist-inspired Catholic monk whom I have quoted here before. Small world!)

Laurel has long considered how we might extend Aristotle's poetics to understand and create interactive form. In the Poetics, Aristotle "set down ... an understanding of narrative forms, based upon notions of the nature and intricate relations of various elements of structure and causation. Drama relied upon performance to represent action." Interactive systems complicate matters relative to Greek drama, and ubiquitous computing "for pleasure" is yet another matter altogether.

To start, drawing on Aristotle, I think, Brenda listed the four kinds of cause of a created thing (at this point, we were thinking drama):

- the end cause -- its intended purpose

- the formal cause -- the platonic ideal in the mind of the creator that shaped the creation

- the efficient cause -- the designer herself

- the material cause -- the stuff out of which it is made, which constrains and defines the thing

In an important sense, the material and formal causes work in opposite directions with respect to dramatic design. The effects of the material cause work bottom-up from material to pattern on up to the abstract sense of the thing, while the effects of the formal cause work top-down from the ideal to components on to the materials we use.

Next, Brenda talked about plot structure and the "shape of experience". The typical shape is a triangle, a sequence of complications that build tension followed by a sequence of resolutions that return us to our balance point. But if we look at the plots of most interesting stories at a finer resolution, we see local structures and local subplots, other little triangles of complication and resolution.

(This part of the talk reminded me of a talk I saw Kurt Vonnegut give at UNI almost a decade ago, in which he talked about some work he had done as a master's student in sociology at the University of Chicago, playfully documenting the small number of patterns that account for almost all of the stories we tell. I don't recall Vonnegut speaking of Aristotle, but I do recall the humor in his own story. Laurel's presentation blended bits of humor with two disparate elements: an academic's analysis and attention to detail, and a child's excitement at something that clearly still lights up her days.)

One of the big lessons that Laurel ultimately reaches is this: There is pleasure in the pattern of action. Finding these parts is essential to telling stories that give pleasure. Another was that by using natural materials (the material causality in our creation), we get pleasing patterns for free, because these patterns grow organically in the world.

I learned something from one of her examples, Johannes Kepler's Harmonices Mundi, an attempt to "explain the harmony of the world" by finding rules common to music and planetary motion within the solar system. As Kepler wrote, he hoped "to erect the magnificent edifice of the harmonic system of the musical scale ... as God, the Creator Himself, has expressed it in harmonizing the heavenly motions." In more recent times, composers such as Stravinsky, Debussy, and Ravel have tried to capture patterns from the visual world in their music, seeking more universal patterns of pleasure.

This led to another of Laurel's key lessons, that throughout history artists have often captured patterns in the world on the basis of purely phenomenological evidence, which were later reified by science. Impressionism was one example; the discovery of fractal patterns in Jackson Pollock's drip paintings was another.

The last part of Laurel's talk moved on to current research with sensors in the ubiquitous computing community, the idea of distributed sensor networks that help us to do a new sort of science. As this science exposes new kinds of patterns in the world, Laurel hopes for us to capitalize on the flip side of the art examples before: to be able to move from science, to math, and then on to intuition. She would like to use what we learn to inform the creation of new dramatic structures, of interactive drama and computer games that improve the human condition -- and give us pleasure.

The question-and-answer session offered a couple of fun moments. Guy Steele asked Brenda to react to Marvin Minsky's claim that happiness is bad for you, because once you experience it you don't want to work any more. Brenda laughed and said, "Marvin is a performance artist." She said that he was posing with this claim, and told some stories of her own experiences with Marvin and Timothy Leary (!). And she even declared a verdict in my old discipline of AI: Rod Brooks and his subsumption architecture are right, and Minsky and the rest of symbolic AI are wrong. Given her views and interests in computing, I was not surprised by her verdict.

Another question asked whether she had seen the tape of Christopher Alexander's OOPSLA keynote in San Jose. She hadn't, but she expressed a kinship in his mission and message. She, too, is a utopian and admitted to trying to affect our values with her talk. She said that her research through the 1980s especially had taught her how she could sell cosmetics right into the insecurities of teenage girls -- but instead she chose to create an "emotional rehearsal space" for them to grow and overcome those insecurities. That is what Purple Moon was all about!

As usual, the opening keynote was well worth our time and energy. As a big vision for the future, as a reminder of our moral center, it hit the spot. I'm still left to think how these ideas might affect my daily work as teacher and department leader.

(I'm also left to track down Ted Nelson's Computer Lib/Dream Machines, a visionary, perhaps revolutionary book-pair that Laurel mentioned. I may need the librarian's help for this one.)

October 23, 2006 11:51 PM

OOPSLA Educators' Symposium 1: Bob Martin on OOD

Unlike last year, the first day of OOPSLA was not an intellectual charge for me. As tutorials chair, I spent the day keeping tabs on the sessions, putting out small fires involving notes and rooms and A/V, and just hanging out. I had thought I might sneak into the back of a technical workshop or an educational workshop, but my time and energy were low.

Today was the Educators' Symposium, my first since chairing in 2004 and 2005. I enjoyed not having to worry about the schedule or making introductions. The day held a couple of moments of inspiration for me, and I'll write about at least one -- on teaching novices to design programs -- later.

The day's endpoint was an invited talk by Robert "Uncle Bob" Martin. In a twist, Bob let us choose the talk we wanted to hear: either his classic "Advanced Principles of Object-Oriented Class Design" or his newer and much more detailed "Clean Code".

While we thought over our options, Martin started with a little astronomy lesson that involved Henrietta Leavitt, globular clusters, distance calculations, and the width of our galaxy. Was this just filler? Was there a point related to software? I don't know. Maybe Bob just likes telescopes.

The vote of the group was for Principles. Sigh. I wanted Clean Code. I've read all of Bob's papers that underlie this talk, and I was in the mood for digging into code. (Too many hours pushing papers and attending meetings will do that to a guy who is Just a Programmer at heart.)

But this was good. With some of the best teachers, reading a paper is no substitute for the performance art of a good presentation. This blog can't recreate the talk, so if you already know Martin's five principles of OO design, you may want to move on to his latest ruminations on naming.

At its core, object-oriented design is about managing dependencies in source code. It aims to circumvent the problems inherent in software with too many dependencies: rigidity, fragility, non-reusability, and high viscosity.

Bob opened with a simple but convincing example of a copy routine, a method that echoes keyboard input to a printer, to introduce one of his five principles, the Dependency Inversion Principle. In procedural code, dependency flows from the top down in a set of modules. In OO code, there is a point where top-down dependencies end at an abstraction like an interface, and the dependencies begin to flow up from the details to the same abstractions. My favorite detail in this example was his pointing out that getch() is a polymorphic method, programmed to the abstractions of standard input and standard output. ("Who needs all that polymorphism nonsense," say my C-speaking colleagues. "Let's teach students the fundamentals from the bottom up." Hah!)
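To make the copy example concrete, here is a minimal sketch in Python. It is my own reconstruction, not Martin's code: the names Reader, Writer, copy_chars, StringReader, and ListWriter are all hypothetical, and in-memory objects stand in for the keyboard and the printer. The point it tries to show is that the high-level routine names only abstractions, while the concrete devices depend on (conform to) those same abstractions.

```python
import io
from typing import List, Protocol


class Reader(Protocol):
    """Abstraction for any character source (hypothetical name)."""

    def read_char(self) -> str: ...


class Writer(Protocol):
    """Abstraction for any character sink (hypothetical name)."""

    def write_char(self, ch: str) -> None: ...


def copy_chars(source: Reader, sink: Writer) -> None:
    """High-level policy: echo characters until the source is exhausted.

    This function mentions no keyboard and no printer, only abstractions.
    """
    while True:
        ch = source.read_char()
        if ch == "":  # empty string signals end of input in this sketch
            break
        sink.write_char(ch)


class StringReader:
    """Concrete detail: reads from an in-memory string (keyboard stand-in)."""

    def __init__(self, text: str) -> None:
        self._stream = io.StringIO(text)

    def read_char(self) -> str:
        return self._stream.read(1)


class ListWriter:
    """Concrete detail: collects characters in a list (printer stand-in)."""

    def __init__(self) -> None:
        self.chars: List[str] = []

    def write_char(self, ch: str) -> None:
        self.chars.append(ch)
```

Swapping in a different device means writing another small class with read_char or write_char; copy_chars itself does not change.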

Martin then went through each of his five principles, including DIP, with examples and a theatrical interaction with his audience. Here are the highlights to me:

- In the Single Responsibility Principle, a class should have one and only one reason to change.

Side lesson: There is no perfect way to write code. There are always competing forces. There is a conflict between the design principle encapsulation and the SRP. To ensure the SRP, we may need to relax encapsulation; to ensure encapsulation, we may need to relax the SRP. How to choose? All other things equal, we should prefer the SRP, as it protects us from a clear and present danger, rather than from potential bad behavior of programmers working with our code in the future. A mini-sermon on overprotective languages and practices followed. The Smalltalk programmers in the audience surely recognized the theme.

- The Open/Closed Principle states that a class should be open for extension and closed for modification.

The idea is that any change to behavior should be implemented in new code, not in changes to existing code.

Side lesson: OO design works best if you can predict the future, so that you can select the abstractions which are likely to change. We can predict the future (or try) either by thinking hard or by letting customers use early versions of the code and reacting to the actual changes they cause. Ceteris paribus, OO design may be better than the alternatives, but it still requires work to get right.

- The Liskov Substitution Principle is the formal name for one of the central principles of my second-course OO programming course over the last decade, which I have usually called "substitutability". We should be able to drop an instance of a subclass into a variable typed to a superclass -- and not have the client know the difference. In practice, we recognize violations of the LSP when we see limitations in subclasses such as overriding methods throwing exceptions.

- Of all Martin's principles, the one I tend to teach and consider consciously least often is the Interface Segregation Principle. Rather than writing classes that depend on a "fat" class that offers many services, we should segregate clients into sets according to their thinner needs by means of interfaces.

- Finally, we return to the Dependency Inversion Principle. In some ways, this is the most basic of the design ideas in Martin's cadre, and it is the one by which we can often distinguish OO pretenders from those who really get it. Details in our programs should depend on abstractions, not the other way around. If you call a method, create a subclass, override a method ... you wish the depended-on method or class to be abstract. Abstractions hide the client code from changes that almost necessarily follow concrete implementations.

Side lesson: Another conflict we face when we program is that between flexibility and type safety. As with the SRP and encapsulation, to achieve one of flexibility and type safety in practice, we often have to relax the other. Martin described how the trend toward test-driven design and full-coverage unit testing eliminates many of the compelling reasons for us to seek type safety in a language. The result is that in this competition between forces we can favor flexibility as our preferred goal -- and choose the languages we use differently! Ruby, Python, duck typing... these trends signal our ability to choose empowering, freeing languages as long as we are willing to achieve the value of type safety through the alternate means of tests.
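Two of the ideas in the list above lend themselves to a small sketch: the LSP warning sign of an overriding method that throws, and the trade of type safety for tests that duck typing invites. The Python below is only an illustration under my own assumptions; Document, ReadOnlyDocument, Note, and save_all are hypothetical names, not examples from Martin's talk.

```python
class Document:
    """Base class whose clients expect save() to succeed."""

    def __init__(self, text: str) -> None:
        self.text = text
        self.saved = False

    def save(self) -> None:
        self.saved = True


class ReadOnlyDocument(Document):
    """Subclass that restricts inherited behavior: the LSP warning sign."""

    def save(self) -> None:
        raise PermissionError("read-only document cannot be saved")


class Note:
    """Not a Document by inheritance, but it responds to save()."""

    def __init__(self) -> None:
        self.saved = False

    def save(self) -> None:
        self.saved = True


def save_all(items) -> int:
    """Client code that relies only on each item responding to save()."""
    for item in items:
        item.save()
    return len(items)
```

With duck typing, save_all([Document("a"), Note()]) works even though Note shares no superclass with Document; nothing but a test tells us so. The same kind of test exposes ReadOnlyDocument: dropping one into the list makes the client fail with PermissionError, which is the client "knowing the difference" that substitutability forbids.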

The talk just ended with no summary or conclusion, probably due to us being short on time. Martin had been generous with his audience interaction throughout, asking and answering questions and playfully enjoying all comments. The talk really was quite good, and I can see how he has become one of the top draws on the OO speaking circuit. At times he sounded like a preacher, moving and intoning in the rhythms of a revival minister. Still, one of his key points was that OO as religion isn't nearly as compelling as OO as proven technique for building better software.

The teacher in me left the symposium feeling a little bit second-best. How might I speak and teach classes in a way that draws such rapt interest from my audience? Could I teach more effectively if I did? The easy answer is that this is just not my style, but that may be an excuse. Can I -- should I -- try to change? Or should I just try to maximize what I do well? (And just what is that?)

October 19, 2006 6:26 PM

Misconceptions about Blogs

When I first started writing this blog, several colleagues rolled their eyes. Another blog no one will read; another blogger wasting his time.

They probably equated all blogging with the confessional, "what I ate for breakfast" diary-like journal that takes up most of the blogspace. I'm not sure exactly what I expected Knowing and Doing to be like back then, but I never intended to write that sort of blog and made great effort to write only something that seemed worth my time to think about -- and any potential reader's time to think about, too. Sometimes my entries were lighthearted, but even then they related to something of some value to my professional life. The one exception is my running category, which is mostly "just about me". But even then I often found myself writing about the intersection of my thoughts on running and my thoughts on, say, software development practice.

My colleagues' eye rolling came to mind again yesterday when I heard one of my university colleagues from the humanities give a talk on the use of video essays in his courses. A video essay is just what it says, an essay in the form of a short film. He tried to help us to understand where the video essay sits in the continuum of all film works, as it injects the creator into the process the way a written essay does but unlike a video documentary, which should present a voice independent of the filmmaker. In the course of his explanation, he raised blogs as the written equivalent of something that is too personal, too much about the creator and not enough about some idea with which the creator is engaging. To him, blogs were -- by and large -- trivia.

Writing my blog doesn't feel trivial, and I hope that the folks who take the time to read it don't find the content trivial. Were I writing trivia, I could and would write far more often, as I could dump whatever frustrations or uneasiness or even joy I was feeling at the end of each day right into my keyboard. Even when I write a short entry, I want it to be about something, reflect at least that I have done some serious thinking about that something, and use words and language that I've shown at least a modicum of effort to polish for public consumption.

Nearly all of the blogs I read have these features, usually more of each than mine. I'm honored to read the professional and personal ruminations of interesting minds as they try to learn something new, or teach the rest of us something that they have recently learned.

As the speaker told us more about the art of video essay, it occurred to me that the word "blog" is used to describe at least two different classes of on-line writing, and that the kind of blog I enjoy -- and aspire to write -- is not what most folks think of when they hear "blog". This sort of blog is really an essay, and the bloggers in question are essayists, much in the spirit of Michel de Montaigne himself. The web makes it possible for us to share our essays more quickly and more broadly than older media, and it allows us to show more of our work-in-progress than is feasible in a print-dominated world. But these blogs are essays.

So, next week, when I make my annual pilgrimage to the conference currently known as OOPSLA, don't expect me to "blog it"; instead, I'll be writing essays.

Speaking of OOPSLA, I'll be in Portland, Oregon, from Saturday, October 21, through Friday, October 27. If you will be there, too, I'd love to meet up over a break and chat. Drop me a line.

Oh, and to close the loop on my colleague's talk... He showed some of his students' video essays, and they were remarkable. Anyone who doubts that today's students think deeply about anything would have to reconsider this stance after seeing some of these works. Could I use video essay in a CS course? Perhaps not most, but that thought probably says more about my imagination than the possibilities inherent in the medium. I haven't thought deeply enough about this to say any more!

October 13, 2006 11:00 AM

Student Entrepreneurship -- and Prosthetics?

Technology doesn't lead change.

Need leads change.

-- Dennis Clark, O&P1

This morning I am hanging out at a campus conference on Commercializing Creative Endeavors. It deals with entrepreneurship, but from the perspective of faculty. As a comprehensive university, not an R-1 institution, we tend to generate less of the sort of research that generates start-ups. But we do have those opportunities, and even more so the opportunity to work with local and regional industry.

The conference's keynote speaker is Dennis Clark, the president of O&P1, a local orthotics and prosthetics company that is doing amazing work developing more realistic prosthetics. His talk focused on his collaboration with Walter Reed Army Medical Center to augment amputees from the wars in Afghanistan and Iraq. It is an unfortunate truth that war causes his industry to advance, in both research and development. O&P1's innovations include new fabrication techniques, new kinds of liners and sensors, and shortened turnaround times in the design, customization, fabrication, and fitting of prosthetics for specific patients.

Dennis has actively sought collaborations with UNI faculty. A few years ago he met with a few of us in computer science to explore some project ideas he had. Ultimately, he ended up working closely with two professors in physics and physical education on a particular prosthetics project, specifically work with the human gait lab at UNI, which he termed "world-class". That we have a world-class gait lab here at UNI was news to me! I have never worked on human gait research myself, though I was immediately reminded of some work on modeling gait by folks in Ohio State's Laboratory for AI Research, which was the intellectual progenitor of the work we did in our intelligent systems lab at Michigan State. This is an area rich in interesting applications for building computer models that support analysis of gait and the design of products.

As I listened to Dennis's talk this morning, two connections to the world of computing came to mind. The first was to understand the remarkable amount of information technology involved in his company's work, including CAD, data interchange, and information storage and retrieval. As in most industries these days, computer science forms the foundation on which these folks do their work, and speed of communication and collaboration are a limiting factor.

Second, Dennis's description of their development process sounded very much like a scrapheap challenge à la the OOPSLA 2005 workshop of the same name. Creating a solution that works now for a specific individual, whose physical condition is unique, requires blending "space-age technology" with "stone-age technology". They put together whatever artifacts they have available to make what they need, and then they can step back and figure out how to increase their technical understanding for solving similar problems in the future.

The Paul Graham article I discussed yesterday emphasized that students who think they might want to start their own companies should devote serious attention to finding potential partners, if only by surrounding themselves with as many bright, ambitious people as possible. But often just as important is considering potential partnerships with folks in industry. This is different than networking to build a potential client base, because the industrial folks are more partners in the development of a business. And these real companies are a powerful source of problems that need to be solved.

My advice to students and anyone, really, is to be curious. If you are a CS major, pick up a minor or a second major that helps you develop expertise in another area -- and the ability to develop expertise in another area. Oh, and learn science and math! These are the fundamental tools you'll need to work in so many areas.

Great talk. The sad thing is that none of our students heard it, and too few UNI faculty and staff are here as well. They missed out on a chance to be inspired by a guy in the trenches doing amazing work, and helping people as the real product of his company.

October 12, 2006 4:51 PM

Undergraduates and Start-Ups

I enjoy following Paul Graham's writings about start-up companies and how to build one. One of my goals the last few years, and especially now as a department head, is to encourage our students to consider entrepreneurship as a career choice. Some students will be much better served, both intellectually and financially, by starting their own companies, and the resulting culture will benefit other students and our local and state business ecosystem.

Graham's latest essay suggests that even undergraduate students might consider creating a start-up, and examines the trade-offs among undergrads, grad students, and experienced folks already in the working world. He has a lot more experience with start-ups than I, so I figure his suggestions are at least worth thinking about.

Some of his advice is counterintuitive to most undergrads, especially to the undergrads in the more traditional Midwest. At one point, Graham tells students who may want to start their own companies that they probably should not take jobs at places where they will be treated well -- even Google.

I realize this seems odd advice. If they make your life so good that you don't want to leave, why not work there? Because, in effect, you're probably getting a local maximum. You need a certain activation energy to start a startup. So an employer who's fairly pleasant to work for can lull you into staying indefinitely, even if it would be a net win for you to leave.

It's hard for the typical 22-year-old to pass up a comfortable position at a top-notch but staid corporation. Why not enjoy your corporate work until you are ready to start your own company? You can make connections, build experience, learn some patterns and anti-patterns, and save up capital. The reason is that in short order you can create an incredible inertia against moving on -- not the least of which is the habit of receiving a comfortable paycheck each week. Going back to ramen noodles thrice daily is tough after you've had three squares paid for by your boss. I give similar advice to undergrads who say, "I plan to go to graduate school in a few years, after I work for a while." Some folks manage this, but it's harder than it looks to most students.

I also give my students a piece of advice similar to another of Graham's suggestions:

Most people look at a company like Apple and think, how could I ever make such a thing? Apple is an institution, and I'm just a person. But every institution was at one point just a handful of people in a room deciding to start something. Institutions are made up, and made up by people no different from you.

The moral is: Don't be intimidated by a great company. Once it was just a few people mostly like us who had an idea and a willingness to make their idea work. I give similar advice about programs that intimidate my students, language interpreters and compilers. One of the morals of my programming languages and compilers courses is that each of these tools is "Just Another Program". Written by a knucklehead just like me. Learn some basic techniques, apply your knowledge of programming and data structures to the various sub-problems faced when building a language processor, and you can write one, too.

This reference to professors raises another connection to Graham's advice, regarding how many students who want to create a start-up mistake the implementation of their idea as a commercial product for just a big class project:

That leads to our second difference [between a start-up's product and a class project]: the way class projects are measured. Professors will tend to judge you by the distance between the starting point and where you are now. If someone has achieved a lot, they should get a good grade. But customers will judge you from the other direction: the distance remaining between where you are now and the features they need. The market doesn't give a shit how hard you worked. Users just want your software to do what they need, and you get a zero otherwise. That is one of the most distinctive differences between school and the real world: there is no reward for putting in a good effort. In fact, the whole concept of a "good effort" is a fake idea adults invented to encourage kids. It is not found in nature.

The connection between effort, grade, and learning is not nearly as clean as most students think. Some courses require a lot of effort and require little learning; those are the courses that most of us hate. Sometimes one student has to exert much more effort than another to learn the concepts of a course, or to earn an equivalent grade. Every student starts in a different place, and courses exert different forces on different students. The key is to figure out which courses will best reward hard work -- preferably with maximum learning -- and then focus more of our attention there. Time and energy are scarce quantities, so we usually have to ration them.

If an undergraduate knows that she wants to start her own company, she has a head start in making this sort of decision about where to exert her learning efforts:

Another thing you can do [as an undergrad, to prepare to start your own company] is learn skills that will be useful to you in a startup. These may be different from the skills you'd learn to get a job. For example, thinking about getting a job will make you want to learn programming languages you think employers want, like Java and C++. Whereas if you start a startup, you get to pick the language, so you have to think about which will actually let you get the most done. If you use that test you might end up learning Ruby or Python instead.

... or Scheme! (These days, I think I'd go with Ruby.)

As in anything else, having some idea about what you want from your future can help you make better decisions about what you want to do now. I admire young people who have a big dream even as undergrads; sometimes they create cool companies. They also make interesting students to have in class, because their goals have a groundedness to them. They ask interesting questions, and sometimes doze off after a long night trying something new out. And even with this lead in making choices, they usually get out into the world and end up thinking, "Boy, I wish I had paid more attention in [some course]." Life is usually more complicated than we expect, even when we try to think ahead.

October 07, 2006 10:34 AM

The Measure of All Things

The truth belongs to everyone,

but error is ours alone.

-- Ken Alder, The Measure of All Things

On my trip to the Twin Cities last weekend, I had the good fortune to listen to Ken Alder's entertaining The Measure of All Things on tape. Alder tells the story of the Meridian Project, revolutionary France's effort to define the meter -- the Base du Systeme Metrique, the foundation of the metric system -- in terms of the distance between the North Pole and the equator. Before happening upon this book, I knew nothing of this project or the scientists involved, and less about the political history of the era than I should have known.

In the Meridian Project, two of the finest astronomers of the day set out to measure the line of longitude that runs from Dunkirk on the northern coast of France to Barcelona on the northeast corner of Spain. They used the technique of triangulation, wherein they measured all of the angles in a sequence of adjoining triangles running the length of the line and then used the length of a single side to compute the length of the target line. I was surprised by both the quality of the tools and techniques available to 18th century scientists and the fortitude with which they overcame the practical obstacles that stood in their way. Those of us who do science in the 21st century -- and all of us, who enjoy the benefits of science and technology every day -- really do owe a great debt to the men and women who laid our scientific foundation.
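The arithmetic behind triangulation is the law of sines: once one side of a triangle is known, measured angles give the other sides, and a shared side carries the length into the next triangle. The sketch below is a simplified, flat-plane illustration in Python. It ignores the Earth's curvature and the projection onto the meridian that the real survey required, and the function names and inputs are my own inventions, not data from the project.

```python
import math


def side_from_known_side(known_side: float,
                         angle_opposite_known_deg: float,
                         angle_opposite_target_deg: float) -> float:
    """Law of sines: target / sin(T) = known / sin(K), so solve for target."""
    known_angle = math.radians(angle_opposite_known_deg)
    target_angle = math.radians(angle_opposite_target_deg)
    return known_side * math.sin(target_angle) / math.sin(known_angle)


def carry_length_through_chain(baseline: float, triangles) -> float:
    """Propagate one measured baseline through a chain of triangles.

    Each entry in `triangles` is a pair of measured angles in degrees:
    (angle opposite the side already known,
     angle opposite the side shared with the next triangle).
    """
    side = baseline
    for opposite_known, opposite_next in triangles:
        side = side_from_known_side(side, opposite_known, opposite_next)
    return side
```

Only the baseline is ever measured with a ruler; every later length comes from angles. That is also why a small angular error early in the chain propagates to everything after it.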

This was the Golden Age of geodesy, the art of measuring the Earth. The Meridian Project captured public and political interest. Scientists made trips to places as remote as Peru and Lappland in an effort to draw a more complete picture of the size and shape of the Earth. We are in an age of tremendous growth of computing; what is our signature project? What can capture -- or recapture -- the public's real interest? We in the sciences talk a lot about the Human Genome Project, but I don't think that this will ever have universal appeal outside the sciences. Digital media are now woven inextricably into our lives, but so deeply that few people think twice about them as anything special any more.

Perhaps the key technical point in the Meridian Project story involves error. Mechain and Delambre, the protagonists of the story, used a repeating circle to take their angle measurements. This tool was designed to help users reduce small observational errors by taking repeated observations, amalgamating the results, and computing the actual value from the amalgamation. This made small values that were otherwise imperceptible to the human observer appear manifold, where they were observable as a group. (Anyone who has tried to time a lightning-fast computer operation is familiar with the CS analog of this technique: write a loop to do the operation a million times, and then divide by a million. Values that would otherwise show up as a 0 on your timer are now computable!)
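The CS analog mentioned above can be sketched in a few lines of Python. This is a minimal illustration; time_per_call is a hypothetical helper name, and the figure it returns includes the overhead of the loop and the function call, so treat it as an upper bound on the operation's cost.

```python
import time


def time_per_call(operation, repetitions: int = 1_000_000) -> float:
    """Estimate seconds per call by timing many calls and dividing.

    A single call may be too fast for the clock to resolve; the total
    for many calls is not.
    """
    start = time.perf_counter()
    for _ in range(repetitions):
        operation()
    elapsed = time.perf_counter() - start
    return elapsed / repetitions
```

For example, time_per_call(lambda: 1 + 1) yields a small positive number of seconds. Python's standard timeit module packages the same repeat-and-divide idea with more care.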

In the course of his measurements in the north of Spain, Mechain encountered a discrepancy between two readings -- and panicked. He didn't want anyone to know about the error, lest it reflect badly on his skill, so he conducted an elaborate cover-up, one that did not alter the ultimate calculation of the length of the meter. Both Delambre and Alder marvel at Mechain's artistry in doctoring his data.

However, if Mechain had understood that there are different kinds of error, he might not have worried so much about the discrepancy in his data, or the potential effect on his reputation for exactitude. For, while the repeating circle's multiple readings helped to increase the precision of his results, they did nothing to increase their accuracy, and in fact made his results less accurate. A technique can produce internal consistency without veridicality, and vice versa.
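A small simulation makes the distinction between precision and accuracy concrete. Everything here is invented for illustration: the "true" angle, the size of the random scatter, and the constant offset standing in for a systematic error. It is not a model of Mechain's instrument or of his actual data.

```python
import random
import statistics


def simulate_readings(true_value, bias, noise_sd, count, seed=0):
    """Generate readings with random scatter plus a constant offset.

    All parameters are hypothetical. `bias` stands in for a systematic
    error that is the same on every reading.
    """
    rng = random.Random(seed)
    return [true_value + bias + rng.gauss(0.0, noise_sd) for _ in range(count)]


if __name__ == "__main__":
    true_angle = 60.0   # made-up value, in degrees
    offset = 0.05       # made-up systematic error
    readings = simulate_readings(true_angle, offset, noise_sd=0.5, count=10_000)
    mean = statistics.fmean(readings)
    print(f"mean of readings:   {mean:.4f}")
    print(f"error vs. the truth: {mean - true_angle:+.4f}")
```

Averaging more readings shrinks the random scatter, so the mean settles down nicely, but it settles on the true value plus the offset. Repetition buys precision; it cannot buy accuracy.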

We live on a fallen planet,

and there is no way back to Eden.

-- Jean-Baptiste-Joseph Delambre

In writing up the results of the Meridian Project for publication, Delambre came to appreciate Mechain's dilemma and was quite generous in his treatment of Mechain's error, by keeping the cover-up out of plain sight. Delambre never did anything to impugn the integrity of the study, but by writing with care he was able to preserve Mechain's contribution to the project, and his public reputation. Delambre's work in the decades following the project is a fine example of a scientist acting honorably, with respect for both truth and his fellow man.

At the time of the Meridian Project, many scientists thought that error could be handled in a purely rational way, through perfection of tools and techniques. Today we have formalized much of our way of handling error in a social process within the community of scientists. Peer review and open discussion of results are central components in this process. Consider Andrew Wiles's proposed proof of Fermat's Last Theorem. In many ways, our open scientific culture owes much to the democratic revolutions in America and Europe around the time of this project.

Another interesting thread running through Alder's book is the story of how definitions and standards are adopted. The U.S. has been discussing the metric system since it was merely a proposal in the French Academy of Sciences, in our pre-revolutionary days. Indeed, the U.S. Constitution grants Congress the authority to establish national standards of weights and measures, as essential to interstate commerce and the smooth functioning of a national economy. But codifying the metric system ahead of its widespread adoption is in some ways antithetical to democracy, whose primacy was much on the minds of our Founding Fathers. As Thomas Jefferson asked of John Quincy Adams, "Shall we shape the people to the law, or the law to the people?" I see this conflict in many elements of my professional life, from trying to set departmental policy as department head, to defining curriculum as a faculty member, to selecting and refining a software methodology as a developer!

Men will always prefer

a worse way of knowing to

a better way of learning.

-- Jean-Jacques Rousseau

Alder writes that, "Science likes to think itself the one human endeavor free of idolatry." But his story, like any complete account of a real scientific project, points out the many ways in which scientists and their processes elevate some ideas to the status of dogma, both locally within our own programs and globally within the paradigm that dominates science of our time. We have to be on the look-out for these blind spots in our vision. Often, they hide errors in our thinking; sometimes, they hide opportunities to advance our science in a big way.

You're not the only one who's made mistakes

But they're the only thing that you can truly call your own

-- Billy Joel, You're Only Human (Second Wind)

The Meridian Project began in an attempt to anchor "the measure of all things" -- the meter -- to something unassailable in the external world, the size of the Earth. But along the way they helped us to learn the many ways in which the external world is imperfect to this end: the design of our tools, the use of these tools, the settling on approximations in the absence of complete knowledge, the refining of definitions in the face of new knowledge and technology, an adoption of standards that is driven by political and social needs, .... Ultimately, the project only reinforced what Protagoras had taught 2500 years ago, that man is the measure of all things.

October 04, 2006 5:45 PM

Hope with Thin Envelopes

I am working on a longer entry about a fine book I listened to this weekend, but various work duties -- including preparing for the rapidly approaching OOPSLA conference -- have kept me busier than I planned. I did run across a bit of news today that will perhaps raise the spirits of high school and college students everywhere who did not get into their dream schools. This from a wide-ranging bits-of-news column by Leah Garchik at the San Francisco Chronicle:

P.S.: A bit of information in Tuesday's story about Andrew Fire of Stanford University, winner of a Nobel Prize for medicine, seems deserving of underlining: Stanford turned down Fire when he applied for undergraduate study there. This revelation is a gift to every high school senior who ever received a thin envelope instead of a fat one.

(Of course, Fire did have the good fortune to study at Berkeley and MIT...)

Worth noting, too, is that Fire studied mathematics as an undergrad, and that his quantitative background probably played an important role in the thinking that led to his Nobel-winning work. Whenever I encounter high school or college students who are interested in other sciences these days, I tell them that studying computer science or math too will almost certainly make them better scientists than only studying a science content area.

I also tell them that computer science is a pretty good content area in its own right!

October 01, 2006 7:54 PM

On the whole...

... I'd rather be in Philadelphia. -- W. C. Fields

My race effectively ended today at the 21-mile mark, when my legs cramped badly enough that I could no longer run. My training partner, who was running ahead of me by a few minutes, suffered the same fate. I will spare you the details, but suffice to say, I relived much of this experience and ended with a time slower than Chicago 2003.

At least I was wise enough to back off when the truth made itself known, which I had hoped to be yesterday. As a result, I enjoyed the back half of the Twin Cities course more this year. I saw many beautiful churches as we moved down St. Paul's Summit Avenue. I made a little guy who was watching the runners with his daddy smile by making a little face for him. And, when we came upon a couple of elderly ladies dressed nicely and giving their time this morning to cheer us on, I connected with one of the ladies, and the smile that lighted up her face lifted my spirits.

When you end up walking as much as I did today, you have a lot of time to reflect on what the world has served up. What did I do wrong? Peak too soon in training? Taper poorly? Eat poorly in the last week, last days? Hydrate insufficiently on the course -- or too much? (I drank steadily throughout, and more than ever before.) Was the day out of my control, with soaring temperatures that caught even the meteorologists off guard? Am I just not equipped physically to run 8:00 miles for a full 26.2? Is it only Minneapolis?

I have no answers yet. But I do have more doubts about myself after this race than last year's. I may need to walk a lot more miles to work through the questions and doubts.