April 30, 2010 10:17 AM

Taking the Pulse of the Agile Community

Thanks to all of you who have written in response to my previous entry with suggestions for my May term course on agile software development. Most everyone recommended what I knew to be true: source control, automated builds, automated testing, and refactoring are the foundation of agile teams. Keep those suggestions coming!

Over the last semester I have been reading the XP mailing list a little more closely in an effort to discern the pulse of the community these days. Every so often an interesting thread pops up. For example, a few months back, the group talked about its general aversion to software done "quick and dirty". One poster quoted Steve McConnell as saying, "The trouble with quick and dirty is that dirty remains long after quick has been forgotten."

This thread stood out starkly against comments from a couple of my colleagues who view agile ideas as a poison, not just a bad idea but a set of temptations that prevent developers from learning The Right Way to make software. They often rail against XP and its ilk as encouraging quick-and-dirty development, producing bad code with no documentation before moving on willy-nilly to the next "story".

That sounded quite funny as I read professionals who use agile practices every day promote unit testing and especially test-driven development as ways to guard against a quick-and-dirty process. Similarly, building refactoring into your weekly, daily, or hourly development cycle is hardly a recipe for reckless development; it is a practice that shows deep care for the code base and for the quality of the software we deliver to our clients.

One of the most active threads on the list over the last few weeks has been a discussion of the "characteristics of a great XP team". This thread has been full of enlightening capsules from people who have been doing XP in the trenches for many years. Some of the discussion offered advice that applies to great teams of any sort, such as a desire to know the truth and adapt to it. Others took a stab at highlighting what XP itself brings to the table. In one especially insightful message, Bill Caputo suggested that, among other attributes, a great XP team...

- can deliver well-tested software at a regular pace indefinitely, because it has "successfully flattened [the] cost-of-change curve".

- "has mastered the art of adapting [its] process to the needs of [its] environment."

- "have such a distribution of knowledge ... that any one person could leave [the] team, and [its] velocity would not be negatively impacted any more than any other person leaving."

Steve Gordon decomposed the question that launched the thread into two parts:

- What are the characteristics of a great software team?

- How does XP achieve -- or not achieve -- these characteristics?

I think Gordon's decomposition serves as a nice way for me and my students to approach our course, and I think Caputo's list is a good start on what we mean when we talk about agile teams.

April 29, 2010 8:47 PM

Turning My Thoughts to Agile Software Development

April came and went in a flurry. Now begins a busy time of transition. Today was the last session of my programming languages course. This semester taught me a few new things, which I hope to catalog and consider soon.

Ordinarily the next teaching I do after programming languages is the compiler course that follows. I will be teaching that course, in the fall, as we seem to have attracted a healthy enrollment. But my next teaching assignments will be novelty and part-novelty all in one. I am teaching Agile Software Development in our May term, which runs May 10-June 4. This is a novelty for me in several ways. In all my years on the faculty, I have never taught summer school (!), and I have certainly never taught a 3-credit course in only four weeks. I expect the compressed schedule to create an intensity and focus unlike a regular course, but I fear that it will be hard to reflect much as we keep pedaling every day for two hours. Full speed ahead!

The course is only part novelty because I have taught Agile Software Development twice before, in regular semesters. I'm also quite in tune with the agile values, principles, and practices. Still, seven years is an eon in the software world, so much has changed since my last offerings in 2003 and prior. Tools such as testing frameworks have evolved, changed outright, or sprung up new. Scrum, lean, and kanban have become major topics of discussion even as the original practices of XP remain the foundation of most agile teams. Languages have faded and surged. There is a lot of new for me in this old course.

The compressed schedule offers opportunities I have not had before when teaching a development course. Class will meet two hours every business day for four weeks. Students will be immersed in this course. Most will be working in the afternoons, but few will be taking a second course. This allows us to engage the material and our projects with an intensity we can't often muster. (I'll also have to be careful to pace the course so that we don't wear ourselves out, which seems a danger. This is a chance for me and the class to practice one of XP's bedrock practices, sustainable pace!)

The class will be small, only a dozen or so, which also offers interesting possibilities for our project. The best way to learn new practices is to use them, and with the class meeting for nearly eleven hours a week we have a chance to dig in and use tools and practice the practices for extended periods, as a group. The chance to pair program and work with a story board has never been so vivid for one of my classes.

I hope that we are able to craft a course and project that help us bypass some of the flaws with typical course projects. Certainly, we will be collocated more frequently and for longer stretches than in my department's usual project course, and we will be together enough to learn to work as a team. There shouldn't be the constant context switching between courses that students face during the academic year. Whether we can manage close interaction with a customer depends a lot on the availability of others and on the project we end up pursuing.

We do face many of the same challenges as my software engineering course last fall. Our curriculum creates a Babel of several programming languages. Students will come to the course with a cavernous range of experience, skills, and maturity. That gap offers a good test of how pair programming, collective code ownership, and apprenticeship can help build and share culture and values. The lack of a common tongue is simply a challenge, though, if we hope to deliver software of value in four short weeks.

The next eleven days will find me busy, busy, busy, thinking about my course, organizing readings, and preparing a project and tools.

I am curious to hear what you think:

- Which ideas, tools, and practices from the agile world ten years ago remain essential today?

- What changes in the last decade fundamentally changed what we mean by agile development?

- What readings -- especially accessible primary sources available on the web -- do you recommend?

April 24, 2010 1:25 PM

Futility and Ditch Digging

First, Chuck Hoffman tweeted, The life of a code monkey is frequently depressingly futile.

I had had a long week, filled with the sort of activities that can make a programmer pine for days as a code monkey, and I replied, Life in many roles is frequently depressingly futile. Thoreau was right.

The ever-timely Brian Foote reminded me:

Sometimes utility feels like futility, but someone's gotta do it.

Thanks, Brian. I needed to hear that.

I remember hearing an interview with musician John Mellencamp many years ago in which he talked about making the movie Falling from Grace. The interviewer was waxing on about the creative process and how different movies were from making records, and Mellencamp said something to the effect of, "A lot of it is just ditch digging: one more shovel of dirt." Mellencamp knew about that sort of manual labor because he had done it, digging ditches and stringing wire for a telephone company before making it as an artist. And he's right: an awful lot of every kind of work is moving one more shovel of dirt. It's not romantic, but it gets the job done.

April 23, 2010 4:52 PM

Who Are You Really Testing?

Earlier this week, I read How to Fail as a Teacher. Given some of the things I have been dealing with as department head lately, one particular method stuck out:

Always view test scores as about the failure or success of the student and not as a tool to evaluate your teaching

Everyone failed one part of the test? The whole class must be dummies. Never assume that you might have taught it wrong. That sort of thinking could lead to new teaching methods, reteaching material or worst case differentiated instruction.

I know that when first starting, I required a big attitude adjustment (country music warning) in this area. First, I was doing a great job, and the students didn't get it. Then, I realized I was doing a less than perfect job, but the students just had to adapt. Finally, I now know that I am doing a less than perfect job, and my job is to find ways to help students get it. Even when I am doing a pretty good job, it is still my job to find ways to help students get it.

I'm not perfect at being imperfect yet, but at least I am aware. It seems that some profs never quite get there.

Lately, we've been thinking a lot about outcomes assessment for our academic programs again. How do we get better as a department? How do we get better as individual instructors, at doing what we hope to do as educators? Writing down the outcomes is step one. Observing results and taking them seriously is step two. Feeding back what we learn into our personal behavior, our courses, and our programs is the third.

If your students aren't reaching the goal, then maybe you need to teach them differently. If most of your students never reach the goal, or if some of your students regularly do not, then almost certainly you need to do something different.

Oh, and the article's first two ways to fail are effective ones, too.

April 22, 2010 8:36 PM

At Some Point, You Gotta Know Stuff

A couple of days ago, someone tweeted a link to Are you one of the 10% of programmers who can write a binary search?, which revisits a passage by Jon Bentley from twenty-five years ago. Bentley observed back then that 90% of professional programmers were unable to produce a correct version of binary search, even with a couple of hours to work. I'm guessing that most people who read Bentley's article put themselves in the elite 10%.

Mike Taylor, the blogger behind The Reinvigorated Programmer, challenged his readers. Write your best version of binary search and report the results: is it correct or not? One of his conditions was that you were not allowed to run tests and fix your code. You had to make it run correctly the first time.

Writing a binary search is a great little exercise, one I solve every time I teach a data structures course and occasionally in courses like CS1, algorithms, and any programming language- or style-specific course. So I picked up the gauntlet.

You can see my solution in a comment on the entry, along with a sheepish admission: I inadvertently cheated, because I didn't read the rules ahead of time! (My students are surely snickering.) I wrote my procedure in five minutes. The first test case I ran pointed out a bug in my stop condition, (>= lower upper). I thought for a minute or so, changed the condition to (= lower (- upper 1)), and the function passed all my tests.

In a sense, I cheated the intent of Bentley's original challenge in another way. One of the errors he found in many professional developers' solutions was an overflow when computing the midpoint of the array's range. The solution that popped into my mind immediately, (lower + upper)/2, fails when lower + upper exceeds the size of the variable used to store the intermediate sum. I wrote my solution in Scheme, which handles bignums transparently. My algorithm would fail in any language that doesn't. And to be honest, I did not even consider the overflow issue; having last read Bentley's article many years ago, I had forgotten about that problem altogether! This is yet another good reason to re-read Bentley occasionally -- and to use languages that do heavy lifting for you.
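To make the two pitfalls concrete, here is a minimal sketch of a binary search in Scheme. It is not the solution posted in the comment on Taylor's entry. It assumes a sorted vector of numbers and a half-open range [lower, upper), so its stop condition differs from the one quoted above, and the name binary-search is chosen only for illustration. The midpoint is computed as lower + (upper - lower)/2, a form that stays within the range and so would also be safe in a language with fixed-width integers.

```scheme
;; Binary search over a sorted vector of numbers (illustrative sketch).
;; Returns the index of target, or #f if target is absent.
;; Invariant: if target is present, its index lies in [lower, upper).
(define binary-search
  (lambda (vec target)
    (let loop ((lower 0)
               (upper (vector-length vec)))
      (if (>= lower upper)
          #f                            ; empty range: target not found
          ;; (+ lower (quotient (- upper lower) 2)) never exceeds upper,
          ;; unlike (quotient (+ lower upper) 2), whose intermediate sum
          ;; can overflow in a fixed-width language.
          (let* ((mid (+ lower (quotient (- upper lower) 2)))
                 (item (vector-ref vec mid)))
            (cond ((= item target) mid)
                  ((< item target) (loop (+ mid 1) upper))
                  (else (loop lower mid))))))))
```

For example, (binary-search (vector 1 3 5 7 9) 7) returns 3, and searching the same vector for 4 returns #f.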

But.

One early commenter on Taylor's article said that the no-tests rule took away some of his best tools and his usual way of working. Even if he could go back to basics, working in an unfamiliar way probably made him less comfortable and less likely to produce a good solution. He concluded that, for this reason, a challenge with a no-tests rule is not a good test of whether someone is a good programmer.

As a programmer who prefers an agile style, I felt the same way. Running that first test, chosen to encounter a specific possibility, did exactly what I had designed it to do: expose a flaw in my code. It focused my attention on a problem area and caused me to re-examine not only the stopping condition but also the code that changed the values of lower and upper. After that test, I had better code and more confidence that my code was correct. I ran more tests designed to examine all of the cases I knew of at the time.

As someone who prides himself on his programming-fu, though, I appreciated the challenge of trying to design a perfect piece of code in one go: pass or fail.

This is a conundrum to me. It is similar to a comment that my students often make about the unrealistic conditions of coding on an exam. For most exams, students are away from their keyboards, their IDEs, their testing tools. Those are big losses to them, not only in the coding support they provide but also in the psychological support they provide.

The instructor usually sees things differently. Under such conditions, students are also away from Google and from the buddies who may or may not be writing most of their code in the lab. To the instructor, this nakedness is a gain. "Show me what you can do."

Collaboration, scrapheap programming, and search engines are all wonderful things for software developers and other creators. But at some point, you gotta know stuff. You want to know stuff. Otherwise you are doomed to copy and paste, to having to look up the interface to basic functions, and to being able to solve only those problems Google has cached the answers to. (The size of that set is growing at an alarming rate.)

So, I am of two minds. I agree with the commenter who expressed concern about the challenge rules. (He posted good code, if I recall correctly.) I also think that it's useful to challenge ourselves regularly to solve problems with nothing but our own two hands and the cleverness we have developed through practice. Resourcefulness is an important trait for a programmer to possess, but so are cleverness and meticulousness.

Oh, and this was the favorite among the ones I read:

I fail. ... I bring shame to professional programmers everywhere.

Fear not, fellow traveler. However well we delude ourselves about living in a Garrison Keillor world, we are all in the same boat.

Posted by Eugene Wallingford | Permalink | Categories: Computing, Software Development, Teaching and Learning

April 20, 2010 9:58 PM

Computer Code in the Legal Code

You may have heard about a recent SEC proposal that would require issuers of asset-backed securities to submit "a computer program that gives effect to the flow of funds". What a wonderful idea!

I have written a lot in this blog about programming as a new medium, a way to express processes and the entities that participate in them. The domain of banking and finance is a natural place for us to see programming enter into the vernacular as the medium for describing problems and solutions more precisely. Back in the 1990s, Smalltalk had a brief moment in the sunshine as the language of choice used by financial houses in the creation of powerful, short-lived models of market behavior. Using a program to describe models gave the investors and arbitrageurs not only a more precise description of the model but also a live description, one they could execute against live data, test and tinker with, and use as an active guide for decision-making.

We all know about the role played by computer models in the banking crisis over the last few years, but that is an indictment of how the programs were used and interpreted. The use of programs itself was and is the right way to try to understand a complex system of interacting, independent agents manipulating complex instruments. (Perhaps we should re-consider whether to traffic in instruments so complex that they cannot be understood without executing a complex program. But that is a conversation for another blog entry, or maybe a different blog altogether!)

What is the alternative to using a program to describe the flow of funds engendered by a particular asset-backed security? We could describe these processes using text in a natural language such as English. Natural language is supremely expressive but fraught with ambiguity and imprecision. Text descriptions rely on the human reader to do most of the work figuring out what they mean. They are also prone to gratuitous complexity, which can be used to mislead unwary readers.

We could also describe these processes using diagrams, such as a flow chart. Such diagrams can be much more precise than text, but they still rely on the reader to "execute" them as she reads. As the diagrams grow more complex, the more difficult it is for the reader to interpret the diagram correctly.

A program has the virtue of being both precise and executable. The syntax and semantics of a programming language are (or at least can be) well-defined, so that a canonical interpreter can execute any program written in the language and determine its actual value. This makes describing something like the flow of funds created by a particular asset-backed security as precise and accurate as possible. A program can be gratuitously complex, which is a danger. Yet programmers have at their disposal tools for removing gratuitous complexity and focusing on the essence of a program, more so than we have for manipulating text.

The behavior of the model can still be complex and uncertain, because it depends on the complexity and uncertainty of the environment in which it operates. Our financial markets and the economic world in which asset-backed securities live are enormously complex! But at least we have a precise description of the process being proposed.

When provisions become complex beyond a point, computer code is actually the simplest way to describe them... The SEC does not say so, but it would be useful to add that if there is a conflict between the software and textual description, the software should prevail.

Using a computer program in this way is spot on.

After taking this step, there are still a couple of important issues yet to decide. One is: What programming language should we use? A lot of CS people are talking about the proposal's choice of Python as the required language. I have grown to like Python quite a bit for its relative clarity and simplicity, but I am not prepared to say that it is the right choice for programs that are in effect "legal code". I'll let people who understand programming language semantics better than I make technical recommendations on the choice of language. My guess is that a language with a simpler, more precisely defined semantics would work better for this purpose. I am, of course, partial to Scheme, but a number of functional languages would likely do quite nicely.

Fortunately, the SEC proposal invites comments, so academic and industry computer scientists have an opportunity to argue for a better language. (Computer programmers seem to like nothing more than a good argument about language, even writing programs in their own favorite!)

The most interesting point here, though, is not the particular language suggested but that the proposers suggest any programming language at all. They recognize how much more effectively a computer program can describe a process than text or diagrams. This is a triumph in itself.

Other people are reminding us that mortgage-backed CDOs at the root of the recent financial meltdown were valued by computer simulations, too. This is where the proposal's suggestion that the code be implemented in open-source software shines. By making the source code openly available, everyone has the opportunity and ability to understand what the models do, to question assumptions, and even to call the authors on the code's correctness or even complexity. The open source model has worked well in the growth of so much of our current software infrastructure, including the simple in concept but complex in scale Internet. Having the code for financial models be open brings to bear a social mechanism for overseeing the program's use and evolution that is essential in a market that should be free and transparent.

This is also part of the argument for a certain set of languages as candidates for the code. If the language standard and implementations of interpreters are open and subject to the same communal forces as the software, this will lend further credibility to the processes and models.

I spend a lot of time talking about code in this blog. This is perhaps the first time I have talked about legal code -- and even still I get to talk about good old computer code. It's good to see programs recognized for what they are and can be.

April 19, 2010 10:40 PM

Different Perspective on Loss

A house burned in my neighborhood tonight. I do not know yet the extent of the damage, but the fight was protracted. My first hope is that no one was hurt, neither residents of the house nor the men and women who battled the blaze.

Such a tragedy puts my family's recent loss into perspective. No matter how valuable our data, when a hard drive fails, no one dies. Even without a backup, life goes on. Even without a backup, there is a chance of recovery. We can run utilities that come with our OS. We can run wonderful programs that cost little money. Specialists can pull the platters from the drive and attempt to read data raw.

Things lost in a fire are lost forever.

If we follow a few simple and well-known rules, we can have a backup: a bit-for-bit copy of our data, all our digital stuff, indistinguishable from the original. In principle and in practice, we can encounter failures and lose nothing. In the material world, we cannot make a copy of everything we own. Yes, we can make copies of important documents, and we can store some of our stuff somewhere else. But we don't live in a bizarro Steven Wright world where we possess an identical copy of every book, every piece of clothing, every memento.

In the digital world, we can make copies that preserve our world.

So, I type this with a different outlook. The world reminds me that there are things worse than a lost disk drive. I hope that my daughters -- who lost the most in our failure -- can feel this way, too. We are well on the way to resuming our digital lives, buoyed by technology that will help us not to suffer such a loss again.

That said, it's worth keeping in mind Jamie Zawinski's cautionary words, "the universe tends toward maximum irony", and staying alert.

April 15, 2010 8:50 PM

Listen To Your Code

On a recent programming languages assignment, I asked students to write a procedure named if->boolean, whose spec was to recognize certain undesirable if expressions and return in their places equivalent but simpler boolean expressions. This procedure could be part of a simple refactoring engine for a Scheme-like language, though I don't know that we discussed it in such terms.

One student's procedure made me smile in a way only a teacher can smile. As expected, his procedure was a cond expression selecting among the undesirable ifs. His procedure began something like this:

    (define if->boolean
      (lambda (exp)
        (cond ((not (if? exp))
                exp)
              ; Has the form (if condition #t #f)
              ((and (list? exp)
                    (= (length exp) 4)
                    (eq? (car exp) 'if)
                    (exp? (cadr exp))
                    (true-lit? (caddr exp))
                    (false-lit? (cadddr exp)))
                (if->boolean (cadr exp)))
              ...

The rest of the procedure was more of the same: comments such as

; Has the form (if condition #t another)

followed by big and expressions to recognize the noted undesirable if and a call to a helper procedure that constructed the preferred boolean expression. The code was long and tedious, but the comments made its intent clear enough.

Next to his code, I wrote a comment of my own:

Listen to your code. It is saying, "Write syntax procedures!"

How much clearer this code would have been had it read:

    (define if->boolean
      (lambda (exp)
        (cond ((not (if? exp)) exp)
              ((trivial-if? exp) (if->boolean (cadr exp)))
              ...

When I talked about this code in class (presented anonymously in order to guard the student's privacy, in case he desired it), I made it clear that I was not being all that critical of the solution. It was thorough and correct code. Indeed, I praised it for the concise comments that made the intent of the code clearer than it would have been without them.

Still, the code could have been better, and students in the class -- many of whom had written code similar but not always as good as this -- knew it. For several weeks now we have been talking about syntax procedures as a way to define the interface of an ADT and as a way to hide detail that complicates a piece of code.
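As a rough illustration of the idea, the sketch below defines a few syntax procedures for if expressions represented as four-element lists such as (if condition #t #f). The names if->test, if->then, and if->else are hypothetical, the definitions of if? and trivial-if? are assumptions rather than the ones used in the course, and the else clause stands in for the remaining cases that the original procedure elides.

```scheme
;; Hypothetical syntax procedures for if expressions stored as lists,
;; e.g. '(if (> x 0) #t #f). All names here are illustrative.

;; Type predicate: is exp a four-element list headed by the symbol if?
(define if?
  (lambda (exp)
    (and (list? exp)
         (= (length exp) 4)
         (eq? (car exp) 'if))))

;; Accessors hide the list representation from client code.
(define if->test (lambda (exp) (cadr exp)))
(define if->then (lambda (exp) (caddr exp)))
(define if->else (lambda (exp) (cadddr exp)))

;; Recognizes the form (if condition #t #f).
(define trivial-if?
  (lambda (exp)
    (and (if? exp)
         (eq? (if->then exp) #t)
         (eq? (if->else exp) #f))))

;; Handles only the trivial case; every other expression is returned
;; unchanged, which is an assumption made to keep the sketch short.
(define if->boolean
  (lambda (exp)
    (cond ((not (if? exp)) exp)
          ((trivial-if? exp) (if->boolean (if->test exp)))
          (else exp))))
```

With these definitions, (if->boolean '(if (> x 0) #t #f)) returns (> x 0). Only the syntax procedures know that an if expression is a list, so changing the representation would not touch if->boolean.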

Syntax Procedure is one pattern in a family of patterns we have learned in order to write structurally-recursive code over an inductive data type. Syntax procedures are, of course, much more broadly applicable than their role in structural recursion, but they are especially useful in helping us to isolate code for manipulating a particular data representation from the code that processes the data values and recurses over their parts. That can be especially useful when students are at the same time still getting used to a language as unusual to them as Scheme.

The note I wrote on the student's printout was one measure of chiding (Really, have you completely forgotten about the syntax procs we've been writing for weeks?) mixed with nine -- or ninety-nine -- measures of stylistic encouragement:

Yes! You have written a good piece of code, but don't stop here. The comment you wrote to help yourself create this code, which you left in so that you would be able to understand the code later, is a sign. Recognize the sign, and use what it says to make your code better.

Most experienced programmers can tell us about the dangers of using comments to communicate a program's intent. When a comment falls out of sync with the code it decorates, woe to future readers trying to understand and modify it. Sometimes, we need a comment to explain a design decision that shapes the code, which the code itself cannot tell us. But most of the time, a comment is just that, a decorator: something meant to spruce up the place when the place doesn't look as good as we know it should. If the lack of syntax procedures in my student's code is a code smell, then his comment is merely deodorant.

Listen to your code. This is one of my favorite pieces of advice to students at all levels, and to professional programmers as well. I even wrote this advice up in a pattern of its own, called Speak the Problem's Language. I knew this pattern from many years writing Smalltalk to build knowledge-based systems in domains from accounting to engineering. Then I read Peter Norvig's Paradigms of Artificial Intelligence Programming, and his Chapter 2 expressed the wisdom so well that I wanted to make it available as a core coding pattern in all of the pattern languages I was writing at the time. It is still one of my favorites. I hope my student comes to grok it, too.

After class, one of the other students in the class stopped to chat. She is a double major in CS and graphic design, and she wanted to say how odd it was to hear a computer science prof saying, "Listen to your code." Her art professors tell her this sort of thing all the time. Let the painting tell you where it wants to go. And, The sculpture is already in the stone; your job is to set it free.

Whatever we want to say about software 'engineering', when we write a program to do something new, our act of creation is not all that different from the painter's, the sculptor's, or the graphic designer's. We shape the code, and it shapes us. Listen.

This was a pretty good way to spend an afternoon talking to students.

April 13, 2010 9:03 PM

Unexpected Encounters with Knowledge

In response to a question from Francesco Cirillo, Ward Cunningham says:

Reflecting on your career choices is there anything you would have done differently?

I'm pretty happy with my career, though I never did enough calculus homework if I think how much calculus has influenced how I think in terms of small units of change.

Calculus came up in my programming languages course today, while we were talking about maps, finite functions, and discrete math. Our K-12 system aims students toward calculus, and when they arrive at the university they often end up taking a calculus course if they haven't already. Many CS students struggle in calc. They can't help but notice the dearth of applications of calculus in most of their CS courses and naturally ask, "Why are we required to take this class?"

This is a common discussion even among faculty. I can argue both sides of the case, though I admit to believing that understanding the calculus at some level is an essential part of being an educated person, just as understanding the literary and historical context in which one grows and lives is essential. The calculus is one of the crowning achievements of the Enlightenment and helped to usher in the scientific advances that define in large part the world in which we all live today. But Cunningham's reflection encourages us to think about calculus in a different light.

Notice that Cunningham does not talk about direct application of the calculus in any program he wrote. The only program he mentions specifically is WyCash, a portfolio management system. Nor does he talk in an abstract academic way about intellectual achievement and the Age of Reason.

He says instead that the calculus's notion of small units of change has affected the way he thinks. I'm confident that he is thinking here not only of agile software development, with its short iterations and rapid feedback cycle, but also of test-driven development, patterns, and wiki. One can accumulate value in the smallest of the slices. If one accumulates enough of them, then over time the value one amasses can be the area under quite a large curve of action.

This is an indirect application of knowledge. Ward either did enough calculus homework or paid enough attention in class that he was able to understand one of the central ideas underlying the discipline. That understanding probably lay fallow in his mind until he began to see how the idea was recurring in his programming, in his community building, and in his approach to software development. He was then able to think about the implications of the idea in his current work and learn from what we know about the calculus.

I am a fan of Ward's in large part because of his wonderful ability to make such connections. It is hard to anticipate this kind of connection across domains. That's why it's so important to be educated widely and to take seriously ideas from all corners of human accomplishment. Even calc class.

April 08, 2010 8:56 PM

Baseball, Graphics, Patterns, and Simplicity

I love these graphs. If you are a baseball fan or a lover of graphics, you will, too. Baseball is the most numbers-friendly of all sports, with a plethora of statistics that can be extracted easily from its mano-a-mano confrontations between pitchers and batters, catchers and baserunners, American League and National. British fan Craig Robinson goes a step beyond the obvious to create beautiful, information-packed graphics that reveal truths both quirky and pedestrian.

Some of the graphs are more complex than others. Baseball Heaven uses concentric rings, 30-degree wedges, and three colors to show that the baseball gods smile their brightest on the state of Arizona. I like some of these complex graphs, but I must admit that sometimes they seem like more work than they should be. Maybe I'm not visually-oriented in the right way.

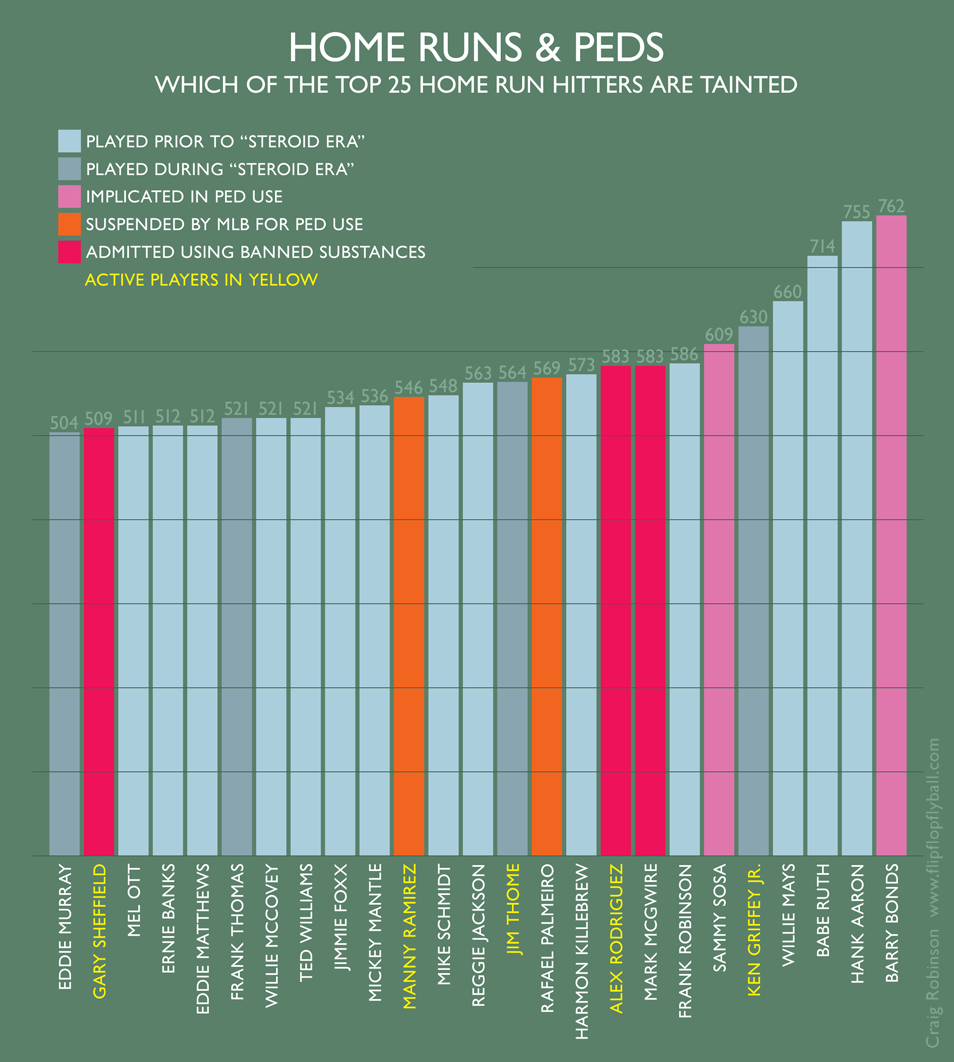

I notice that many of my favorites have something in common. Consider this chart showing the intersection of the game's greatest home run hitters and the steroid era:

It doesn't take much time looking at this graph for a baseball fanatic to sigh with regret and hope that Ken Griffey, Jr., has played clean. (I think he has.) A simple graphic, a poignant bit of information.

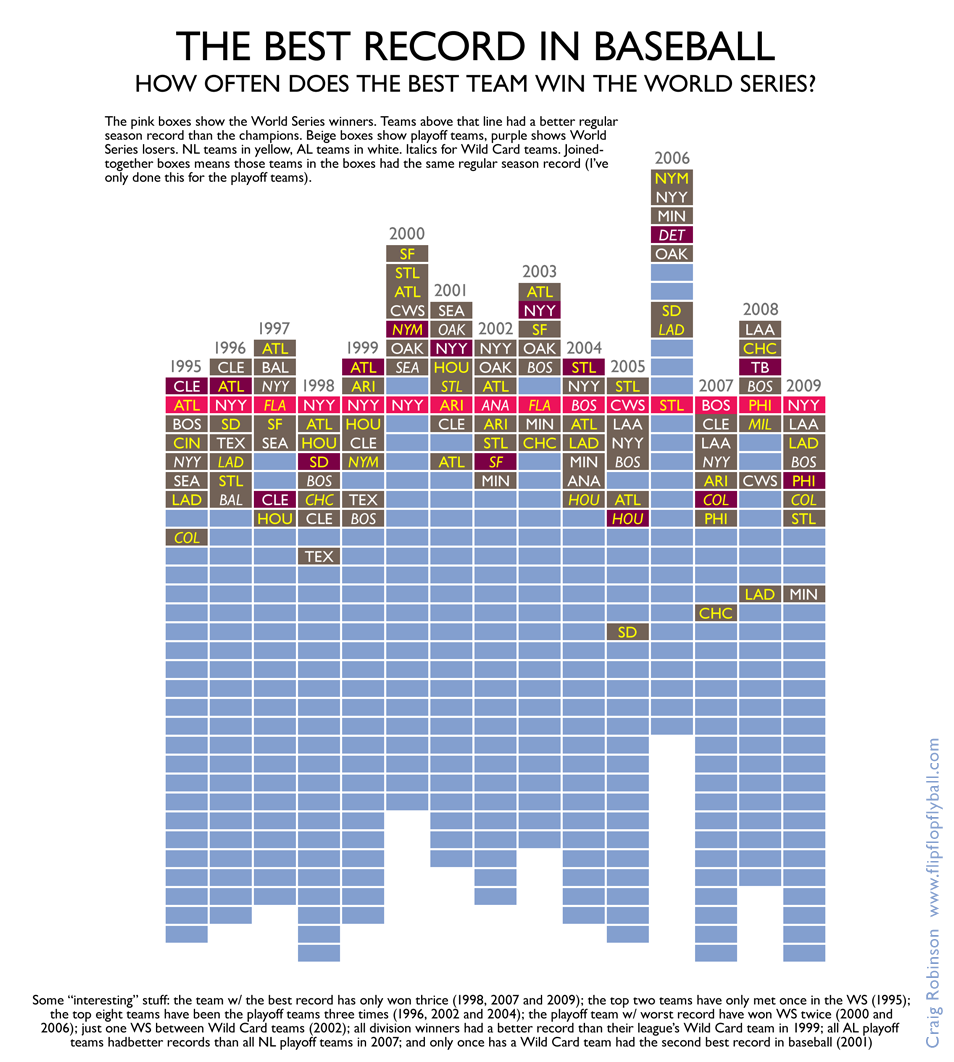

Next, take a look at this graph that answers the question, how does baseball's winningest team fare in the World Series?:

This is more complex than the previous one, but the idea is simple: sort teams by win/loss record, identify the playoff and World Series teams by color, and make the World Series winners the main axis of the graph. Who would have thought that the playoff team with the worst record would win the World Series almost as often as the team with the best record?

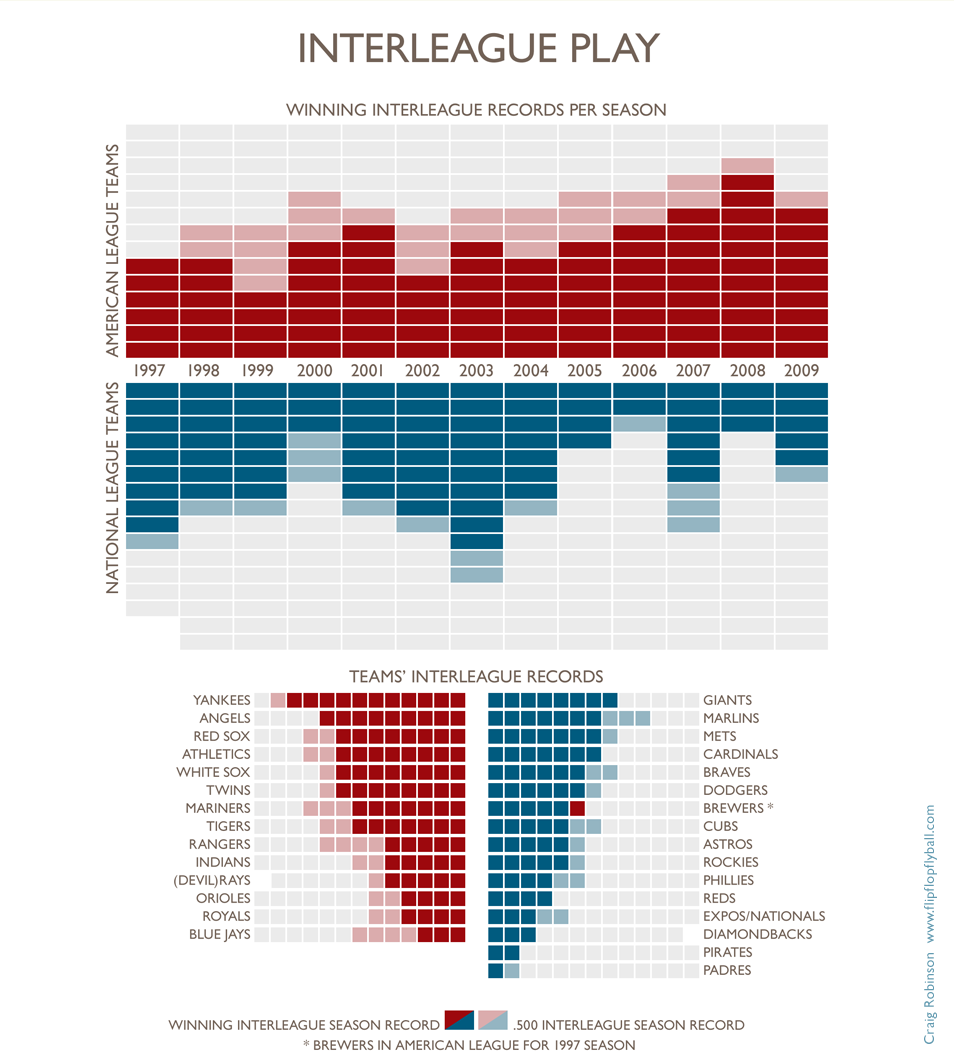

Finally, take a look at what is my current favorite from the site, an analysis of interleague play's winners and losers.

I love this one not for its information but for its stark beauty. Two grids with square and rectangular cells, two primary colors, and two shades of each are all we need to see that the two leagues have played pretty evenly overall, with the American League dominating in recent years, and that the AL's big guns -- the Yankees, Red Sox, and Angels -- are big winners against their NL counterparts. This graph is so pretty, I want to put a poster-sized print of it on my wall, just so that I can look at it every day.

The common theme I see among these and my other favorite graphs is that they are variations of the unpretentious bar chart. No arcs, line charts with doubly-labeled axes, or 3D effects required. Simple colors, simple labels, and simple bars illuminating magnitudes of interest.

Why am I drawn to these basic charts? Am I too simple to appreciate the more complex forms, the more complex interweaving of dimensions and data?

I notice this as a common theme across domains. I like simple patterns. I am most impressed when writers and artists employ creative means to breathe life into unpretentious forms. It is far more creative to use a simple bar chart in a nifty or unexpected way than it is to use spirals, swirls of color, concentric closed figures, or multiple interlocking axes and data sources. To take a relationship, however complex, and boil its meaning down to the simplest of forms -- taken with a twist, perhaps, but unmistakably straightforward nonetheless -- that is artistry.

I find that I have similar tastes in programming. The simplest patterns learned by novice programmers captivate me: a guarded action or linear search; structural recursion over a BNF definition or a mutual recursion over two; a humble strategy object or factory method. Simple tools used well, adapted to the unique circumstances of a problem, exposing just the right amount of detail and shielding us from all that doesn't matter. A pattern used a million times never in the same way twice. My tastes are simple, but I can taste a wide range of flavors.
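As a minimal sketch, here are three of those novice patterns in Python: a guarded action, a linear search, and a structural recursion over a BNF definition. The function names and the tiny expression grammar are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Union


def record_if_present(log, entry):
    """Guarded action: act only when the guard condition holds."""
    if entry is not None:
        log.append(entry)


def linear_search(items, target):
    """Linear search: return the index of the first match, or None."""
    for index, item in enumerate(items):
        if item == target:
            return index
    return None


# Structural recursion over a (hypothetical) BNF definition:
#   <expr> ::= <number> | <expr> + <expr>
@dataclass
class Num:
    value: int


@dataclass
class Add:
    left: "Expr"
    right: "Expr"


Expr = Union[Num, Add]


def evaluate(expr):
    """One case per alternative in the grammar, recursing where the grammar does."""
    if isinstance(expr, Num):
        return expr.value
    return evaluate(expr.left) + evaluate(expr.right)
```

The shape of `evaluate` mirrors the shape of the grammar: one branch per alternative, and a recursive call wherever the definition refers to itself.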

Now that I think about it, I think this theme explains a bit of what I love about baseball. It is a simple game, played on a simple form with simple equipment. Though its rules address numerous edge cases, at bottom they, too, are as simple as one can imagine: throw the ball, hit the ball, catch the ball, and run. Great creativity springs from these simple forms when they are constrained by simple forms. Maybe this is why baseball fans see their sport as an art form, and why people like Craig Robinson are driven to express its truths in art of their own.

April 06, 2010 7:36 PM

Shame at Causing Loved Ones Harm

The universe tends toward maximum irony.

Don't push it.

-- JWZ

I have had Jamie Zawinski's public service announcement on backups sitting on my desk since last fall. I usually keep my laptop and my office machine pretty well in sync, so I pretty much always have a live back-up. But some files live outside the usual safety zone, such as temporary audio files on my desktop, which also contains one or two folders of stuff. I knew I needed to be more systematic and complete in safeguarding myself from disk failure, so I printed Zawinski's warning and resolved to Do the Right Thing.

Last week, I read John Gruber's ode to backups and disk recovery. This article offers a different prescription but the same message. You must be 100% backed up, including even the files that you are editing now in the minutes or hours before the next backup. Drives fail. Be prepared.

Once again, I was energized to Do the Right Thing. I got out a couple of external drives that I had picked up for a good price recently. The plan was to implement a stable, complete backup process this coming weekend.

The universe tends toward maximum irony. Don't push it.

If the universe were punishing me for insufficient respect for its power, you would think that the hard drive in either my laptop or my office machine would have failed. But both chug along just fine. Indeed, I still have never had a hard drive fail in any of my personal or work computers.

It turns out that the universe's sense of irony is much bigger than my machines.

On Sunday evening, the hard drive in our family iMac failed. I rarely use this machine and store nothing of consequence there. Yet this is a much bigger deal.

My wife lost a cache of e-mail, an address book, and a few files. She isn't a big techie, so she didn't have a lot to lose there. We can reassemble the contact information at little cost, and she'll probably use this as a chance to make a clean break from Eudora and POP mail and move to IMAP and mail in the cloud. In the end, it might be a net wash.

My teenaged daughters are a different story. They are from a new generation and live a digital life. They have written a large number of papers, stories, and poems, all of which were on this machine. They have done numerous projects for schools and extracurricular activities. They have created artwork using various digital tools. They have taken photos. All on this machine, and now all gone.

I cannot describe how I felt when I first realized what had happened, or how I feel now, two days later. I am the lead techie in our house, the computer science professor who knows better and preaches better, the husband and father who should be taking care of what matters to his family. This is my fault. Not that the hard drive failed, because drives fail. It is my fault that we don't have a reliable, complete backup of all the wonderful work my daughters have created.

Fortunately, not all is lost. At various times, we have copied files to sundry external drives and servers for a variety of reasons. I sometimes copy poetry and stories and papers that I especially like onto my own machines, for easy access. The result is a scattering of files here and there, across a half dozen machines. I will spend the next few days reassembling what we have as best I can. But it will not be all, and it will not be enough.

The universe maximized its irony this time around by getting me twice. First, I was gonna do it, but didn't.

That was just the head fake. I was not thinking much at all about our home machine. That is where the irony came squarely to rest.

Shut up. I know things. You will listen to me. Do it anyway.

Trust Zawinski, Gruber, and every other sane computer user. Trust me.

Do it. Run; don't walk. Whether your plan uses custom tools or a lowly cron running rsync, do it now. Whether you go as far as using a service such as Dropbox to maintain working files or not, set up an automatic, complete, and bootable backup of your hard drives.
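For the "lowly cron running rsync" route, a minimal sketch might look like the following. The helper names, the paths, and the schedule are hypothetical; `-a` and `--delete` are standard rsync options. This copies files only. It does not by itself give you a bootable backup.

```python
import subprocess


def build_rsync_command(source, destination):
    """Build an rsync command that mirrors source into destination."""
    # -a preserves permissions, timestamps, and symlinks.
    # --delete removes files from the destination that no longer exist in
    # the source, so point it only at a directory dedicated to this backup.
    # The trailing slash tells rsync to copy the directory's contents.
    return ["rsync", "-a", "--delete", source.rstrip("/") + "/", destination]


def run_backup(source, destination):
    """Run the mirror; raises CalledProcessError if rsync reports failure."""
    subprocess.run(build_rsync_command(source, destination), check=True)


# Hypothetical usage, saved as backup.py:
#   run_backup("/Users/example", "/Volumes/Backup/example")
#
# Hypothetical crontab entry to run it nightly at 2:00 AM:
#   0 2 * * * /usr/bin/python3 /path/to/backup.py
```

Separating the command builder from the function that runs it makes it easy to inspect exactly what will execute before trusting it with real files.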

I know I can't be alone. There must be others like me out there. Maybe you used to maintain automatic and complete system backups and for whatever reason fell out of the habit. Maybe you have never done it but know it's the right thing to do. Maybe, for whatever reason, you have never thought about a hard drive failing. You've been lucky so far and don't even know that your luck might change at any moment.

Do it now, before dinner, before breakfast. Do it before someone you love loses valuable possessions they care deeply about.

I will say this: my daughters have been unbelievable through all this. Based on what happened Sunday night, I certainly don't deserve their trust or their faith. Now it's time to give them what they deserve.